The Meta-founded Open Compute Project (OCP) held its OCP 2023 Summit in San Jose last week, with exhibitors showcasing a variety of storage products.

Distributor TD SYNNEX is in the storage business through its Hyve Solutions Corp subsidiary. Hyve said it will support OCP through motherboards and systems compliant with the OCP’s DC-MHS (Data Center Modular Hardware System) specification.

It also showed a roadmap of future products, including P-core and E-core-based Intel Xeon processors. Steve Ichinaga, Hyve president, said: “The DC-MHS modular server architecture is yet another way OCP works with the community to create and deliver innovation to the hyperscale market.”

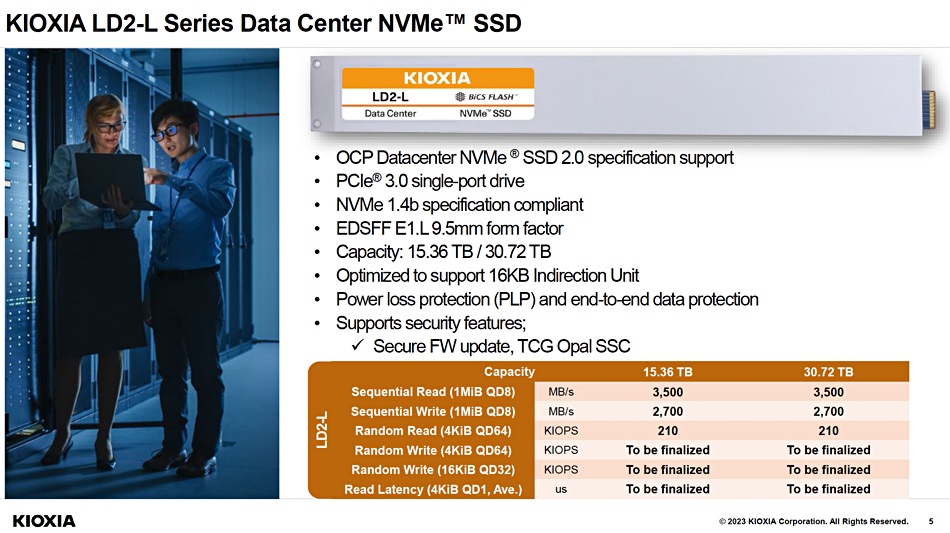

NAND fabricator and SSD supplier Kioxia exhibited:

● XD7P Series EDSFF E1.S SSDs.

● LD2-L Series E1.L 30.72TB NVMe SSD prototype, with a PCIe 3.0 interface. Thirty-two of these drives in a single 32-slot rack unit provide 983TB. That’s near enough 1PB per RU, meaning up to about 40PB in a standard rack. An FIO sequential read workload saw aggregate throughput from the 32 SSDs top 100GB/sec.

● CD8P Series U.2 Data Center NVMe SSDs, which are among the first datacenter-class PCIe 5.0 SSDs in the market.

● The first software-defined flash storage hardware device in an E1.L form factor, supporting the Linux Foundation Software-Enabled Flash Project.

Kioxia claims software-defined technology allows developers to maximize as yet untapped capabilities in flash storage, with finer-grained data placement and lower write amplification.
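The density figures quoted for the LD2-L prototype can be checked with some quick arithmetic. The sketch below is illustrative only: the drive capacity, slot count, and 100GB/sec aggregate read figure come from the text above, while the 42U rack size is an assumption (the article says only "a standard rack"), and the function names are made up for this example.

```python
# Figures quoted in the article for the Kioxia LD2-L prototype.
DRIVE_CAPACITY_TB = 30.72
DRIVES_PER_RU = 32
AGGREGATE_READ_GB_PER_SEC = 100.0  # "top 100GB/sec", treated here as a floor

# Assumption (not from the article): a 42U rack with every unit populated.
RACK_UNITS = 42


def capacity_per_ru_tb(drive_tb=DRIVE_CAPACITY_TB, drives=DRIVES_PER_RU):
    """Raw capacity of one fully populated rack unit, in decimal TB."""
    return drive_tb * drives


def capacity_per_rack_pb(rack_units=RACK_UNITS):
    """Raw capacity of a rack of such units, in decimal PB (1PB = 1000TB)."""
    return capacity_per_ru_tb() * rack_units / 1000


def read_per_drive_gb_per_sec(total=AGGREGATE_READ_GB_PER_SEC,
                              drives=DRIVES_PER_RU):
    """Average sequential read rate each drive must sustain."""
    return total / drives


if __name__ == "__main__":
    print(f"{capacity_per_ru_tb():.2f} TB per RU")
    print(f"{capacity_per_rack_pb():.2f} PB per {RACK_UNITS}U rack")
    print(f"{read_per_drive_gb_per_sec():.3f} GB/sec per drive")
```

Under these assumptions the script gives roughly 983TB per RU and a little over 41PB per rack, in line with the "near enough 1PB per RU" and "up to 40PB" figures. The aggregate read number works out to a little over 3GB/sec per drive, which would fit within the bandwidth of a four-lane PCIe 3.0 link, although the article does not state the lane count.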

Phison Electronics said it had PCIe 5.0, CXL 2.0-compatible, redriver and retimer data signal conditioning IC products. These have been designed to meet the demands of artificial intelligence and machine learning, edge computing, high-performance computing, and other data-intensive, next-gen applications.

Michael Wu, president and general manager for Phison US, said: “Phison has focused … R&D efforts on developing in-house, chip-to-chip communication technologies since the introduction of the PCIe 3.0 protocol, with PCIe 4.0 and PCIe 5.0 solutions now in mass production, and PCIe 6.0 solutions now in the design phase.”

All of Phison’s redriver solutions are certified by PCI-SIG – the consortium that owns and manages PCI specifications as open industry standards. Phison uses machine learning technology to work out the best system-level signal optimization parameters to store in the redriver’s non-volatile memory for each customer’s environment.

Pliops showed how its XDP-Rocks tech helps solve datacenter infrastructure problems, and how its AccelKV data service can take key-value performance to higher levels.

SK hynix and its Solidigm subsidiary showed HBM (HBM3/HBM3E), MCR DIMM, DDR5 RDIMM, and LPDDR CAMM (Low Power Double Data Rate Compression Attached Memory Module) memory tech products.

The HBM3 product is used in Nvidia’s H100 GPU. CAMM is a next-generation memory standard for laptops and mobile devices, which has a single-sided configuration half as thick as conventional SO-DIMM modules. SK hynix claims it offers improved capacity and power efficiency.

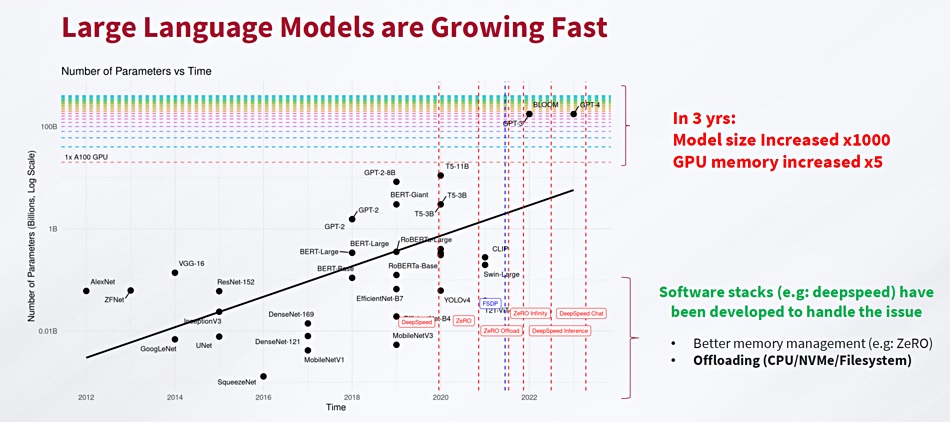

It also showed its Accelerator in Memory (AiM) – its processor-in-memory (PIM) semiconductor product brand, which includes GDDR6-AiM, and is aimed at large language model processing. There was a prototype of AiMX, a generative AI accelerator card based on GDDR6-AiM.

SK hynix also exhibited CXL products, including pooled memory with MemVerge software and a CXL-based memory expander applied to Meta’s software caching engine, CacheLib.

It also showed off its computational memory solution (CMS) 2.0. This integrates computational functions into CXL memory, using near-memory processing (NMP) to minimize data movement between the CPU and memory.

The SK hynix stand featured a 176-layer PS1010 E3.S SSD that first appeared in January at CES.

XConn Technologies exhibited its Apollo CXL/PCIe switch in a demonstration with AMD, Intel, MemVerge, Montage, and SMART Modular. The demonstration is a CXL 2.0-based Composable Memory System featuring memory expansion, pooling, and sharing for HPC and AI applications. XConn says the Apollo switch is the industry’s first and only hybrid CXL 2.0 and PCIe 5.0 interconnect. It claims that, on a single 256-lane SoC, the switch offers the industry’s lowest port-to-port latency and lowest power consumption per port, at a low total cost of ownership.

XConn says the switch supports a PCIe 5.0-only mode for AI-intensive applications and is a key component in Open Accelerator Module (OAM), Just-a-Bunch-of-GPUs (JBOG), and Just-a-Bunch-of-Accelerators (JBOA) environments. The Apollo switch is available now.