StorPool Storage has added erasure coding in v21 of its block storage software, meaning its data should be able to survive more device and node failures.

The storage platform biz says it has also added cloud management integration improvements and enhanced data efficiency. StorPool has built a multi-controller, scalable block storage system, based on standard server nodes, running applications as well as storage. It’s programmable, flexible, integrated, and always on. StorPool claims that its erasure coding implementation protects against drive failure or corruption with virtually no impact on read/write performance.

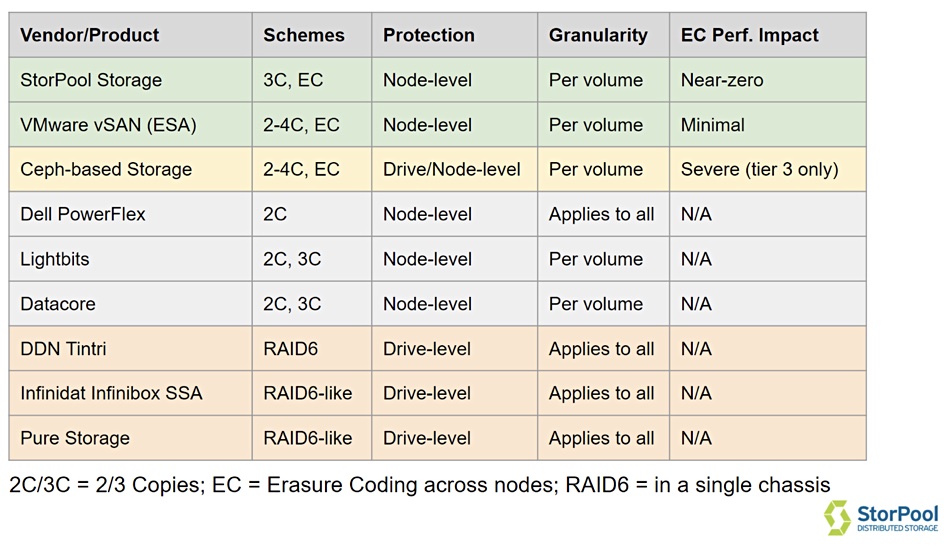

StorPool CEO Boyan Ivanov told us: “Contrary to the common belief, most vendors only have replication or RAID in the same chassis, not Erasure Coding between multiple nodes or racks.”

StorPool produced the chart below that surveys the erasure coding competitive landscape from its point of view:

StorPool’s erasure coding needs a minimum of five all-NVMe server nodes to deliver four features:

- Cross-Node Data Protection – information is protected across servers with two parity objects so that any two servers can fail and data remains safe and accessible.

- Per-Volume Policy Management – volumes can be protected with triple replication or Erasure Coding, with per-volume live conversion between data protection schemes.

- Delayed Batch-Encoding – incoming data is initially written with three copies and later encoded in bulk to greatly reduce data processing overhead and minimize impact on latency for user I/O operations.

- Always-On Operations – up to two storage nodes can be rebooted or brought down for maintenance while the entire storage system remains running with all data remaining available.
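The delayed batch-encoding idea in the list above can be illustrated with a toy bookkeeping model. This is only a sketch and not StorPool's implementation: the class name, method names, and the stripe width of four data blocks are hypothetical, and the parity calculation itself is left out. Only the three initial copies and the two parity objects come from the article.

```python
class DelayedEncodingVolume:
    """Toy model: writes land as replicas, a later batch step encodes them."""

    REPLICAS = 3  # from the article: incoming data is written with three copies
    PARITY = 2    # from the article: two parity objects per protected group

    def __init__(self, data_per_stripe=4):
        # data_per_stripe is a hypothetical stripe width, not a StorPool value
        self.k = data_per_stripe
        self.staged = []   # blocks still held as three replicas
        self.stripes = []  # groups of k blocks that have been encoded

    def write(self, block):
        # Fast path: no parity math while the user I/O is in flight.
        self.staged.append(block)

    def encode_batch(self):
        """Background step: encode every full group of k staged blocks."""
        encoded = 0
        while len(self.staged) >= self.k:
            group, self.staged = self.staged[:self.k], self.staged[self.k:]
            self.stripes.append(group)  # real parity computation omitted
            encoded += 1
        return encoded

    def raw_blocks(self):
        """Raw blocks consumed: 3 per staged block, k + 2 per encoded stripe."""
        return (len(self.staged) * self.REPLICAS
                + len(self.stripes) * (self.k + self.PARITY))


vol = DelayedEncodingVolume()
for i in range(10):
    vol.write(f"block-{i}")

before = vol.raw_blocks()     # 10 staged blocks * 3 copies = 30
encoded = vol.encode_batch()  # two full groups of 4; 2 blocks stay staged
after = vol.raw_blocks()      # 2 * 3 + 2 * (4 + 2) = 18

print(before, encoded, after)  # 30 2 18
```

In this model the write path never waits on encoding, and the raw footprint only shrinks once the background step has run.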

Customers can now select a more granular data protection scheme for each workload, right-sizing the data footprint for each individual use case. In large-scale deployments, customers can perform cross-rack Erasure Coding so that their storage systems gain the data efficiency benefits while still surviving the failure of up to two racks.
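Whether a given layout survives two rack failures comes down to how a protected group's fragments are spread across racks. The check below is a minimal sketch under one assumption: a group with two parity objects stays readable as long as no more than two of its fragments are lost. The function name, the rack labels, and the 4+2 group size are hypothetical and used only for illustration.

```python
from collections import Counter


def survives_domain_failures(placement, parity=2, failed_domains=2):
    """Return True if the worst-case loss of `failed_domains` failure domains
    (racks or nodes) destroys no more than `parity` fragments of one group.

    `placement` lists the failure domain holding each fragment.
    """
    per_domain = Counter(placement)  # fragments held by each domain
    # Worst case: the domains holding the most fragments fail together.
    worst_loss = sum(sorted(per_domain.values(), reverse=True)[:failed_domains])
    return worst_loss <= parity


# Hypothetical 4+2 group with one fragment per rack.
spread = ["rack1", "rack2", "rack3", "rack4", "rack5", "rack6"]
# The same group packed into three racks, two fragments each.
packed = ["rack1", "rack1", "rack2", "rack2", "rack3", "rack3"]

print(survives_domain_failures(spread))  # True: two racks lost = 2 fragments
print(survives_domain_failures(packed))  # False: two racks lost = 4 fragments
```

The same function applies at node level by passing node names instead of rack names.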

The v21 release also includes:

- Improved iSCSI Scalability – allowing customers to export up to 1,000 iSCSI targets per node, especially useful for large-scale deployments.

- CloudStack Plug-In Improvements – support for CloudStack’s volume encryption and partial zone-wide storage that enables live migration between compute hosts.

- OpenNebula Add-On Improvements – supports multi-cluster deployments where multiple StorPool sub-clusters behave as a single large-scale primary storage system with a unified global namespace.

- OpenStack Cinder Driver Improvements – enabled deployment and management of StorPool Storage clusters backing Canonical Charmed OpenStack and OpenStack instances managed with kolla-ansible.

- Deep Integration with Proxmox Virtual Environment – with the integration, any company utilizing Proxmox VE can benefit from end-to-end automation.

- Additional hardware and software compatibility – increased the number of validated hardware and operating systems resulting in easier deployment of StorPool Storage in customers’ preferred environments.

The company’s StorPool VolumeCare backup and disaster recovery function is now installed on each management node in the cluster for improved business continuity. VolumeCare runs on every management node at all times, but only the instance on the active management node executes snapshot operations.