AI is developing so fast and being applied so widely that its accelerative effect will be exponential, said DDN co-founder Paul Bloch, and it will need tens of thousands of GPUs and exabytes of storage.

Bloch, DDN’s president, was presenting to the faculty of Purdue University at a Cyberinfrastructure Symposium last month. He said that modern AI’s arrival and speed were astounding.

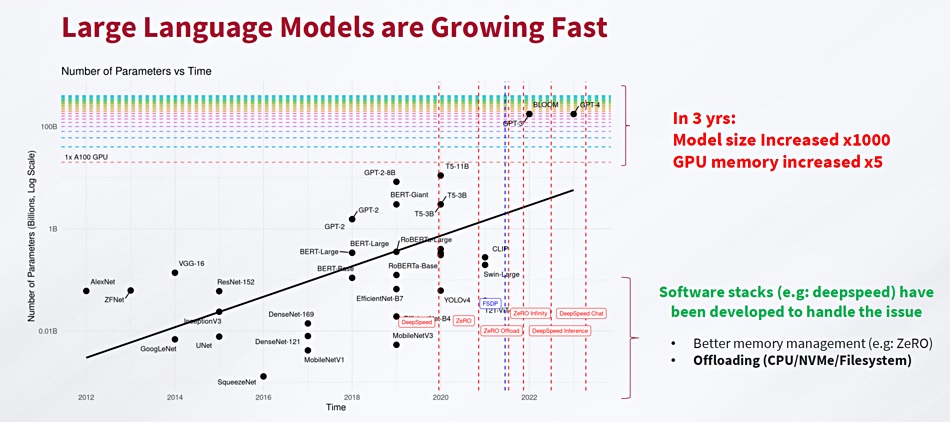

He showed a chart of “how LLM has evolved over the past few years. And, what you can see here is that in the short three years, the models’ size increased over a thousand times and the GPU memory has increased times five.”

But this is small potatoes: “Picture the fact that right now ChatGPT uses data that’s about four or five years old, so ChatGPT doesn’t even have the data for the past four years. We’ve probably created much more data than the years before over the past four years. So, this is just the infancy.”

He likened Gen AI stages to biological brains: “I recall AlexNet being able to classify in a smart way. … It’s about 262 petaflops in AI terms. It’s not the same as HPC petaflops. But this is equivalent to a worm brain.”

He said: “Then, you have autonomous driving. It’s a race to get to full autonomy and to do that, you need to have about 6 billion miles proven or in simulation. So, you need to show 6 billion miles on your software without any accident or any problem. That requires a lot of compute and storage as well, and what’s interesting is a large-scale SuperPOD is still the size of a fly brain.”

Accelerating storage bandwidth

GPUs need data delivered quickly from storage. Bloch said: “As an overall rule, one GPU will require about one to two gigabytes a second of data storage. … If you buy a thousand GPUs, you’re going to need about a terabyte a second. … We’re deploying systems today over the past couple quarters that are delivering 5 terabytes, 10 terabytes a second.”
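Bloch's rule of thumb is easy to turn into a quick sizing calculation. The sketch below is illustrative only: the function name and the decimal unit conversion (1 TB = 1,000 GB) are our assumptions, and the only inputs taken from the talk are the one to two gigabytes a second per GPU.

```python
def required_bandwidth_gbps(gpu_count, per_gpu_low=1.0, per_gpu_high=2.0):
    """Return the (low, high) aggregate storage bandwidth in GB/s.

    The defaults are the one to two GB/s per GPU quoted in the talk.
    The function itself is a hypothetical helper, not a DDN sizing tool.
    """
    if gpu_count < 0:
        raise ValueError("gpu_count must be non-negative")
    return gpu_count * per_gpu_low, gpu_count * per_gpu_high


GB_PER_TB = 1000  # assumption: decimal units

low, high = required_bandwidth_gbps(1000)
print(f"1,000 GPUs: {low / GB_PER_TB:.1f} to {high / GB_PER_TB:.1f} TB/s")
```

For a thousand GPUs the low end of the range works out to the terabyte a second Bloch cites; the high end is double that.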

He added: “Some of the larger systems that we’ve deployed … in the past two-three months is 20,000 GPUs, and the only reason why it’s not more is because they cannot get their hands fast enough on them. So, 20,000 GPUs and a hundred of our systems. So, a hundred of those systems deliver about 10 terabytes a second. And, the capacity is about 40 or 50 petabytes and they’re just starting.”
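The figures in that quote imply a per-system and a per-GPU delivery rate, which the short calculation below works out. It is a back-of-the-envelope sketch: the helper name and the decimal conversion (1 TB = 1,000 GB) are our assumptions, and the inputs are the rounded numbers Bloch gave, so the results are approximate.

```python
GB_PER_TB = 1000  # assumption: decimal units


def implied_rates_gbps(gpus, systems, aggregate_tbps):
    """Return (GB/s per storage system, GB/s per GPU) for a deployment.

    Hypothetical helper for illustration; the inputs are rounded
    figures quoted in the talk, not measured values.
    """
    aggregate_gbps = aggregate_tbps * GB_PER_TB
    return aggregate_gbps / systems, aggregate_gbps / gpus


per_system, per_gpu = implied_rates_gbps(gpus=20_000, systems=100, aggregate_tbps=10.0)
print(f"Per system: {per_system:.0f} GB/s")
print(f"Per GPU:    {per_gpu:.1f} GB/s")
```

On these rounded numbers each system delivers about 100 GB/s, and the per-GPU share comes to about half a gigabyte a second, under the one to two gigabytes a second rule of thumb. Bloch did say the customer is "just starting", so this may simply reflect an early stage of the build-out.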

It’s not going to be enough. Storage delivery speeds are going to have to increase, as are the capacities needed: “We’ve been thinking gigabytes a second. Now it’s going to be hundreds of gigabytes a second or terabytes a second or tens of terabytes a second. We will have a handful of systems running at 50 to 100 terabytes a second before the end of 2024. And, they will have probably between 100 and 500 petabytes of data attached to it. That’s the hot file system, right? I’m not even talking about the cold file system behind which will be in the exabytes.”

Accelerated AI buy decisions

Generative AI’s development speed and potential are so great that AI-related IT purchasing decisions are being made much faster than others. Bloch said: “We started the year saying, ‘Okay, it’s going to be the same year as usual.’ Great, having fun…and then ChatGPT happened and the world changed. At all levels, it’s already changed. That means that what we have seen in the second quarter, and in the third quarter is totally inordinate. We are seeing companies go from ‘we need to get into AI, we need to do either internal ChatGPT or we need to do LLM’ and so forth decision process within two weeks.

“From the first talks to those companies to an actual purchase order to deliver systems…about two weeks. We’ve never seen that. We don’t know if it’s going to keep on going, but this is basically the way industry sees AI.

“They see it as a ‘do or die’ situation and a lot of them, the big guys, are putting their money where their mouth is.”

Part of the urgency they feel is “because if you don’t do it, and someone’s going to have a better service, better access to customers, better service to customers, better response, more efficiency people-wise – you’re going to have an issue with your company.”

Bloch thinks: “We are in a new era because the world has been delivering a lot of R&D, a lot of advancements across the board. You can talk about transportation, precision therapies, digital wallets, biology, bioinformatics, 3D printing, autonomous – you name it. All these technologies have already been developed and delivered, but now they’re going to be catalyzed with AI.”

He says: “You can get to a whole different level if you apply AI to any and all of these technologies.”

And the future?

AI development is moving fast and still speeding up. Bloch said: “What’s next? I don’t even know. All I know is that the speed of creation is going to accelerate… So, the cycles are shortening themselves very fast.”

What’s next for DDN’s filesystem? “We’ve been working on the next generation file system for the past six-seven years. It’s not replacing the current one we have, but it’s going to be a, we call it, a next generation for the future. So, on-prem, in the cloud, instantiating as software or appliances that’s going to be able to be workload-centric, intelligent in fashion, on a guarantee of service, and being able to deliver access to data in a simpler, more efficient and cost-efficient manner.”

More of the same but faster, smarter and simpler to use.