Cohesity-commissioned research shows organizations are fueling ransomware attacks through their readiness to pay. Over half of all UK companies surveyed had been attacked so far in 2024, with three in four willing to pay a ransom and some admitting to having paid up to £20 million. One of the most striking findings is the correlation between the countries where organizations are most likely to pay a ransom and those reporting the highest incidence of ransomware attacks.

In detail, 95 percent of UK respondents said cyber attacks were on the rise – a fact supported by more than half (53 percent) having fallen victim to a ransomware attack in 2023. This is a stark rise from the 38 percent that reported a ransomware attack in the previous year. Some 74 percent said they would pay a ransom to recover their data after an attack, and 59 percent had indeed paid a ransom in the previous year. Only 7 percent ruled it out, despite two in three (66 percent) having clear rules not to pay. Get a copy of the report here.

…

Data streamer Confluent has a suite of product updates:

- New support for the Table API, which makes Apache Flink available to Java and Python developers – helping them easily create data streaming applications using familiar tools.

- Private networking for Flink, providing a critical layer of security for businesses that need to process data within strict regulatory environments.

- Confluent Extension for Visual Studio Code, which accelerates the development of real-time use cases.

- Client-Side Field Level Encryption, which encrypts sensitive data for stronger security and privacy.

Check out the new Confluent Cloud features here.

…

Wikimedia Deutschland has launched a semantic search concept in collaboration with search experts from DataStax and Jina AI to make Wikidata’s openly licensed data available in an easier-to-use format for AI app developers. Wikidata’s data will be transformed and made more convenient for AI developers as semantic vectors in a vector database. DataStax provides the vector database while Jina AI provides the open source embedding model for vectorizing the text data. The vectorization will enable direct semantic analysis and could help facilitate the detection of vandalism in the knowledge graph. Vectorization also simplifies the process of using Wikidata in RAG applications in the future. Wikimedia Deutschland started creating the concept in December 2023. The first beta tests of a prototype are planned for 2025.
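To illustrate the idea of searching vectorized text by meaning, here is a minimal sketch of a nearest-neighbor lookup using cosine similarity. It is not the Wikimedia, DataStax, or Jina AI implementation: the three-dimensional vectors, the item labels, and the `search` helper are hypothetical stand-ins, whereas a real embedding model emits vectors with hundreds of dimensions and a vector database handles the ranking at scale.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy "embeddings" (assumption: invented for illustration only).
ITEMS = {
    "Douglas Adams, English writer": [0.9, 0.1, 0.0],
    "Berlin, capital of Germany": [0.0, 0.2, 0.9],
    "The Hitchhiker's Guide to the Galaxy, novel": [0.8, 0.3, 0.1],
}

def search(query_vector, items, top_k=2):
    """Return the top_k item labels ranked by similarity to the query."""
    ranked = sorted(
        items,
        key=lambda label: cosine_similarity(query_vector, items[label]),
        reverse=True,
    )
    return ranked[:top_k]

# Stands in for an embedded query about a science fiction author.
query = [0.85, 0.2, 0.05]
results = search(query, ITEMS)
print(results)
```

With these toy vectors, the two literature-related items rank above the unrelated city entry, which is the behavior semantic search relies on.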

…

Firebolt announced a next-generation Cloud Data Warehouse (CDW) that delivers low latency analytics with drastic efficiency gains. Data engineers can now deliver customer-facing analytics and data-intensive applications (data apps) more cost-effectively and with greater simplicity. It’s a modern cloud data warehouse that combines the ultra-low latency of top query accelerators with the ability to scale and transform massive, diverse datasets from any source, while using standard SQL to handle any query complexity at scale. Read a launch blog for more information.

…

Hitachi Vantara has redesigned its Hitachi EverFlex infrastructure-as-a-service (IaaS) portfolio as a scalable and cost-efficient hybrid cloud as-a-service offering for modern enterprises. Hitachi EverFlex enables customers to transition to hybrid cloud environments by offering a consumption-based model that aligns costs with business usage. EverFlex Control uses AI to automate routine tasks, reduce human error, and optimize resource allocation. Users can scale resources up or down to meet fluctuating business demands. Check out an EverFlex blog here.

…

Malware threat scanning supplier Index Engines announced its new chief revenue officer, Neil DiMartinis, previously president of Cutting Edge Technologies, and a new advisory board member, Jim Clancy, formerly president of Global Storage Sales at Dell Technologies. Both will be involved in creating new relationships and spearheading the company’s channel expansion with new strategic partners.

…

Micron announced the availability of the Crucial P310 M.2 2280 PCIe 4 NVMe SSD, which offers twice the performance of Gen 3 SSDs and 40 percent faster performance than Crucial’s P3 Plus. It has capacities from 500GB up to 2TB and read and write speeds of 7,100 and 6,000MB/sec respectively. The drive delivers random reads of up to 1 million IOPS and random writes of up to 1.2 million IOPS.

…

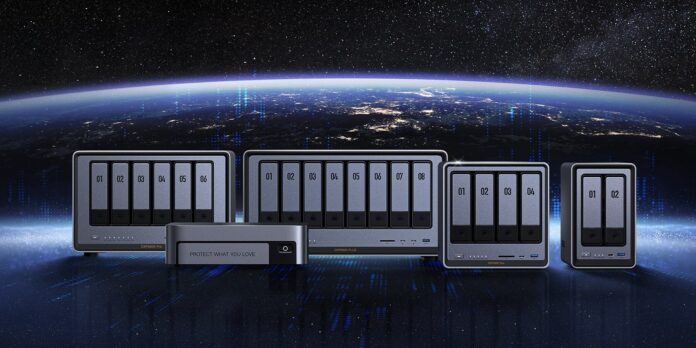

Distributor TD SYNNEX France has signed a distribution agreement with Object First for its Ootbi (Out-of-the-Box-Immutability) ransomware-proof backup storage appliance purpose-built for Veeam.

…

Percona announced that its new database platform, Percona Everest, is GA. Percona Everest is an open source, cloud-native database platform designed to deliver core capabilities and conveniences similar to those provided by database-as-a-service (DBaaS) offerings but without the burden of vendor lock-in and associated challenges.

…

Quantum has launched an improved channel partner portal and program “to make it easier and more lucrative for partners to sell Quantum’s comprehensive data management solutions for AI and unstructured data.” Quantum Alliance Program enhancements:

- “Expert” level partners can now earn double the rebate previously available while “Premier” level partners can earn up to three times the rebate.

- Automated lead generation tools using social media and email drip campaigns with full analytics, reporting, and built-in dashboards.

- Campaign-in-a-Box marketing programs on trending topics including AI, ransomware and data protection, cloud repatriation, Life Sciences data management, and VFX and animation.

- New Quantum GO subscription models to meet customers’ growing data demands and budgetary requirements.

Learn more here.

…

Rubrik Cyber Recovery capabilities are now available for Nutanix AHV. Rubrik Cyber Recovery enables administrators to plan, test, and validate cyber recovery plans regularly and recover quickly in the event of a cyberattack. AHV customers can now:

- Test cyber recovery readiness in clean rooms – Create pre-defined recovery plans and automate recovery validation and testing, ensuring recovery contains necessary dependencies and prerequisites.

- Orchestrate rapid recovery to production – Identify clean point-in-time snapshots, reducing the time required to restore business operations.

Check out the details here.

…

SMART Modular Technologies announced a proprietary technology to mitigate the adverse impact of single event upsets (SEUs) in SSDs. Its MP3000 NVMe SSD products with SEU mitigation reduce annual failure rates from as high as 17.5k/Mu (million units) to less than 10/Mu and can save hundreds of thousands of dollars in potential service costs by helping to ensure hundreds of hours of uninterrupted uptime – especially important for tough-to-repair remote deployments.

An SEU is an inadvertent change in “bit status” that occurs in digital systems when high-energy neutrons or alpha particles randomly strike and cause bits in memory or logic components to flip their state. Addressing these errors or upsets within the SSD enables recovery without the need for a full system reboot. SMART claims its SATA and PCIe NVMe boot drives can slash annual failure rates by as much as 99.7 percent by recovering from soft errors due to single event upsets. Because the SSD can gracefully reboot itself without a host system reboot, it also handles possible flipped bits in other components within the SSD, which might account for an additional 10 percent of failures.

The ME2 SATA M.2 and mSATA drives with SEU mitigation provide 60GB to 1.92TB of storage. The MP3000 NVMe PCIe drives provide 80GB to 1.92TB of storage in M.2 2280, M.2 22110 and E1.S form factors. Both products are available in commercial grades (operating temperature: 0 to 70°C) and industrial grades (operating temperature: -40 to 85°C). The M.2 2280 also supports SafeDATA power loss data protection.
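To put the MP3000 failure-rate figures quoted above in perspective, the following sketch works through the arithmetic. The two rates come from the announcement ("as high as 17.5k/Mu" and "less than 10/Mu", the latter treated here as an upper bound); the fleet size of 20,000 drives is a hypothetical value chosen purely for illustration.

```python
# Rates from the announcement, in failures per million units (Mu) per year.
FAILURES_BEFORE_PER_MU = 17_500  # "as high as 17.5k/Mu"
FAILURES_AFTER_PER_MU = 10       # "less than 10/Mu", used as an upper bound
UNITS_PER_MU = 1_000_000

# Assumption: a hypothetical deployment size, not a figure from the source.
FLEET_SIZE = 20_000

def relative_reduction(before, after):
    """Fraction by which the failure rate drops."""
    return (before - after) / before

def expected_annual_failures(rate_per_mu, fleet_size):
    """Expected failures per year for a fleet of the given size."""
    return rate_per_mu * fleet_size / UNITS_PER_MU

reduction = relative_reduction(FAILURES_BEFORE_PER_MU, FAILURES_AFTER_PER_MU)
before = expected_annual_failures(FAILURES_BEFORE_PER_MU, FLEET_SIZE)
after = expected_annual_failures(FAILURES_AFTER_PER_MU, FLEET_SIZE)

print(f"Relative reduction: {reduction:.2%}")
print(f"Expected failures per year: {before:.1f} before, {after:.1f} after")
```

Under these assumptions, a fleet that might see around 350 SEU-related failures a year at the worst-case rate would expect fewer than one with mitigation.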

…

StarTree, a cloud-based real-time analytics company, showcased new observability capabilities at Current 2024 in Austin, Texas. It highlighted how StarTree Cloud, powered by Apache Pinot, can now be used as a Prometheus-compatible time series database to drive real-time Grafana observability dashboards.

…

Distributed cloud storage company Storj introduced two new tools at IBC to simplify remote media workflows:

- NebulaNAS (from GB Labs) – A cloud storage solution that delivers cloud flexibility with on-premises-like performance, enabling global access, collaboration, and enterprise security.

- Beam Transfer – A breakthrough data transfer solution built on Storj’s distributed cloud, offering speeds of up to 1.17GB/sec, designed for fast, global collaboration in media production.

…

Titan Data Solutions, a specialist distributor for edge to cloud services, has become Vawlt’s first distribution partner in the UK. Titan will drive channel recruitment and engagement to accelerate go-to-market momentum and customer adoption of Vawlt’s distributed hybrid multi-cloud storage platform across the region.

…

VergeIO has partnered with TechAccelerator to deliver a suite of hands-on labs designed for IT professionals looking to migrate from VMware to VergeOS. These self-paced VMware Migration Labs provide a comprehensive, interactive experience to help organizations transition smoothly to VergeOS, offering a learning opportunity without needing hardware. Potential customers can register to try the VergeIO Labs here.

…

WTW, a global advisory, broking, and solutions company, has launched Indigo Vault, claiming it’s a first-to-market document protection platform that provides advanced cyber security for sharing and storage of business-sensitive files. Using WTW-patented, end-to-end quantum resistant security, Indigo Vault allows assigned users to decide where and how documents are stored, who can access them and for what length of time on a specific device, and how documents are used, to prevent them from being saved, seen, or shared outside of specifically defined parameters. Indigo Vault encryption uses NIST-certified algorithms that cannot be cracked by standard computers and are resistant to quantum computer attacks. Find out more here.

…

Software RAID supplier Xinnor has announced a strategic partnership with HighPoint Technologies that pairs HighPoint’s PCIe to NVMe Switch AIC and Adapter families with Xinnor’s xiRAID for Linux. A single Rocket series Adapter running xiRAID can accommodate nearly 2PB of U.2, U.3, or E3.S NVMe storage configured into a fault-tolerant RAID array, and is capable of saturating a full 16 lanes of PCIe host transfer bandwidth. This enables the system to deliver up to 60GB/sec of real-world transfer performance, and up to 7.5 million IOPS. In RAID5 configurations, xiRAID outperformed the standard mdraid utility by a significant margin, demonstrating an improvement of more than 10x in both random and sequential write performance.
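As a rough sanity check on the 60GB/sec figure, the sketch below compares it with the raw bandwidth of a 16-lane PCIe link. The announcement text above does not state the PCIe generation, so PCIe 5.0 (32 GT/s per lane with 128b/130b encoding) is an assumption here, and the calculation ignores packet and protocol overhead.

```python
# Assumption: PCIe 5.0 signalling; the generation is not stated in the text above.
GIGATRANSFERS_PER_SEC = 32        # per-lane rate for PCIe 5.0
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding
LANES = 16                        # "a full 16 lanes" from the announcement
CLAIMED_GB_PER_SEC = 60           # "up to 60GB/sec" from the announcement

def raw_link_bandwidth_gb_per_sec(gt_per_sec, lanes, efficiency):
    """Raw one-direction link bandwidth in GB/s, before protocol overhead.

    Each transfer carries one bit per lane, so divide by 8 to get bytes.
    """
    return gt_per_sec * efficiency * lanes / 8

link = raw_link_bandwidth_gb_per_sec(
    GIGATRANSFERS_PER_SEC, LANES, ENCODING_EFFICIENCY
)
utilization = CLAIMED_GB_PER_SEC / link

print(f"Raw x16 link bandwidth: {link:.1f} GB/s")
print(f"Claimed throughput as a share of raw bandwidth: {utilization:.0%}")
```

Under that assumption, the claimed throughput sits at roughly 95 percent of the raw link rate, which is consistent with the description of the adapter maximizing its host bandwidth.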