Pure Storage CEO Charlie Giancarlo believes that customers need a single, multi-protocol storage environment to supply and store data for modern needs, rather than restricted block- or file-specific platforms or many different, siloed products that are difficult to operate and manage.

He was present at a Pure Accelerate event in London, UK, and his views on this – and on the hyperscaler flash-buying opportunity – became apparent during an interview. We started by asking what he would suggest a customer think about Vast Data, supposing that customer had already been in conversation with that company.

Charles Giancarlo: “Vast is certainly an interesting company. They focused on a non-traditional use case when they first got started, which was large-scale data that required fast processing for a short period of time. And they’ve made a lot of themselves over the intervening year or two. They have a very different strategy than Pure. Their strategy is, basically, they created a system, and they’re attempting to make that one system … as the solution for everything.

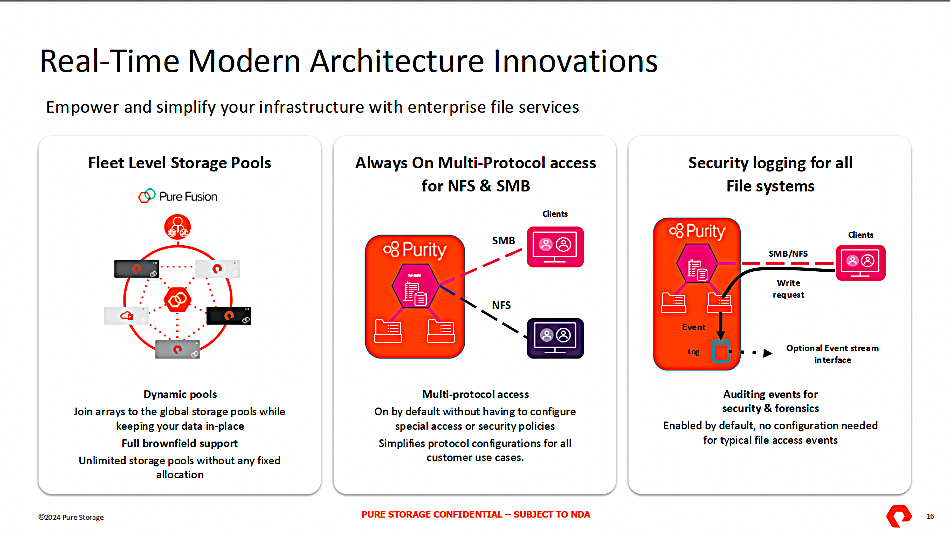

“Our strategy, rather, is that we’ve created one operating environment for block, file and object, across two different hardware architectures – scale up and scale out – but the main focus being to really virtualize storage across the enterprise, such that the enterprise can create a cloud of data rather than individual arrays.”

He went on to say that: “Enterprise storage never made the transition that was made for personal storage – or for, let’s say, networks or compute – where you were able to virtualize the environment.”

Giancarlo suggested that enterprise on-premises developers moved to the cloud “because they could set up a customized infrastructure in the period of about an hour, through GUIs and through APIs. Your developers are not able to do that with enterprise. Why is that? Well, you have IP networks, you have Ethernet networks, just like the cloud does. You have your own virtualization, whether that was VMware or something else. So you have that. But it’s your storage that’s not been virtualized, and so your developers don’t have the ability to just set up new compute and open up new storage or access data that already exists with just a few clicks and through APIs.

“With Pure Storage now, a customer can manage their entire data environment as a single cloud of storage where they can set up the policies and the processes whereby their data is managed, and have that managed automatically in an orchestrated way – but furthermore, be able to share that storage among all of their different application environments.”

Blocks & Files: And they can only do that in the cloud? Because Azure, AWS and Google have taken the time and trouble to put an abstraction software layer in place to enable them to do that. So in theory, you can take that same concept and bring it on premises?

Charles Giancarlo: “Exactly. You finish my story for me, because you already have it in place for compute and network, and now we complete the circle, if you will, with the storage side, with APIs. And what’s behind our capability is Kubernetes. We’ve already put you on your Kubernetes journey with this, and now you can create that virtual storage.

“[The software] is really now an orchestration layer. All of the APIs already exist. Your employees already understand how to use either VMware [and are] starting to become familiar with Kubernetes and containers.

“What’s really interesting about this is that enterprises, of course, have requirements that they have to fulfil – whether they’re regulatory or compliance or even just to fulfil the needs of the enterprise itself – which may at times be different from those of the developer.

“What I mean by that is the developer may not be thinking about resiliency, or how fast to come back from a failure, or when things should be backed up, right? So the organization now can set up policies for their data. They can set up different storage classes and then make those storage classes available by API to their developers, so the developers can choose a storage class that’s already been predefined by the organization to fit the organization’s needs. So it’s really a beautiful construct to allow an enterprise to operate more like a cloud.”
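As a rough illustration of the construct Giancarlo describes, the sketch below shows an organization predefining storage classes and a developer picking one by name through a single call. It is a minimal Python sketch under stated assumptions, not Pure's or Kubernetes' actual API: the class names (`gold`, `silver`), the policy fields, the numbers, and the `provision_volume` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageClass:
    """Policy set once by the organization (hypothetical fields)."""
    name: str
    replicas: int               # resiliency: number of copies kept
    backup_interval_hours: int  # how often data is backed up
    max_recovery_minutes: int   # target time to come back from a failure


# Hypothetical catalog published by the storage team, not by developers.
CATALOG = {
    "gold": StorageClass("gold", replicas=3, backup_interval_hours=1,
                         max_recovery_minutes=5),
    "silver": StorageClass("silver", replicas=2, backup_interval_hours=12,
                           max_recovery_minutes=60),
}


def provision_volume(class_name: str, size_gib: int) -> dict:
    """Return a volume request that inherits the chosen class's policy.

    The developer supplies only a class name and a size; resiliency,
    backup and recovery settings come from the predefined class.
    """
    if class_name not in CATALOG:
        raise ValueError(f"unknown storage class: {class_name!r}")
    if size_gib <= 0:
        raise ValueError("size_gib must be positive")
    policy = CATALOG[class_name]
    return {
        "size_gib": size_gib,
        "storage_class": policy.name,
        "replicas": policy.replicas,
        "backup_interval_hours": policy.backup_interval_hours,
        "max_recovery_minutes": policy.max_recovery_minutes,
    }


if __name__ == "__main__":
    # A developer asks for 100 GiB of "gold" storage without restating policy.
    print(provision_volume("gold", 100))
```

The point of the design is that the developer never states a backup schedule or recovery target; those travel with the class the organization defined.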

Blocks & Files: Pure has put Cloud Block Store in place, which crudely speaking could be looked at as FlashArray in the cloud. Is FlashBlade in the cloud coming?

Charles Giancarlo: “FlashBlade in the cloud is coming. Think of it this way. Both of them are [powered by the] Purity OS. Cloud Block Store looks like FlashArray in the cloud, but more importantly, it looks like block in the cloud. So what you’re asking really about is, what about file in the cloud? Because once up in the cloud, it is scale-out by definition, and FlashBlade is nothing but a scale-out version of FlashArray, right? So, the next step we want to take is file in the cloud, which we are working on. But we still want to see the block environment grow. Object in the cloud we’re uncertain about right now.”

Blocks & Files: There’s a very big S3 elephant in that forest.

Charles Giancarlo: “We’ve talked very, very long and hard about block in the cloud to where we could provide a superior service at lower cost to the customer. With file in the cloud, we believe we’ll be able to do the same thing, albeit it’s taken us longer to get to understand what that would look like. Object, of course, was invented in the cloud to begin with, pretty much – not exactly, but for all intents and purposes, it was. And we have to find that if we can’t improve upon that, then it wouldn’t make sense. Certainly, what we want is to make it fully compatible with S3 and Azure.”

Blocks & Files: You don’t want simply to have say, a cloud object store, which is simply an S3 gateway.

Charles Giancarlo: “Of course. If we’re not adding value, we’re not going to do it.”

Blocks & Files: Do you think that Pure will continue its current growth rate, or that it will settle down to some extent, or even possibly accelerate?

Charlie Giancarlo: “I think we have the opportunity to accelerate for several reasons. One is – I’m already on record, so I’m not going to change it now – that we should win our first hyperscaler this year.”

Blocks & Files: It will be a revolutionary event if you do that, because they’ll be buying from you. At the moment, they buy raw disk drives from Seagate, etc., but they sure as heck don’t buy disk arrays from anybody.

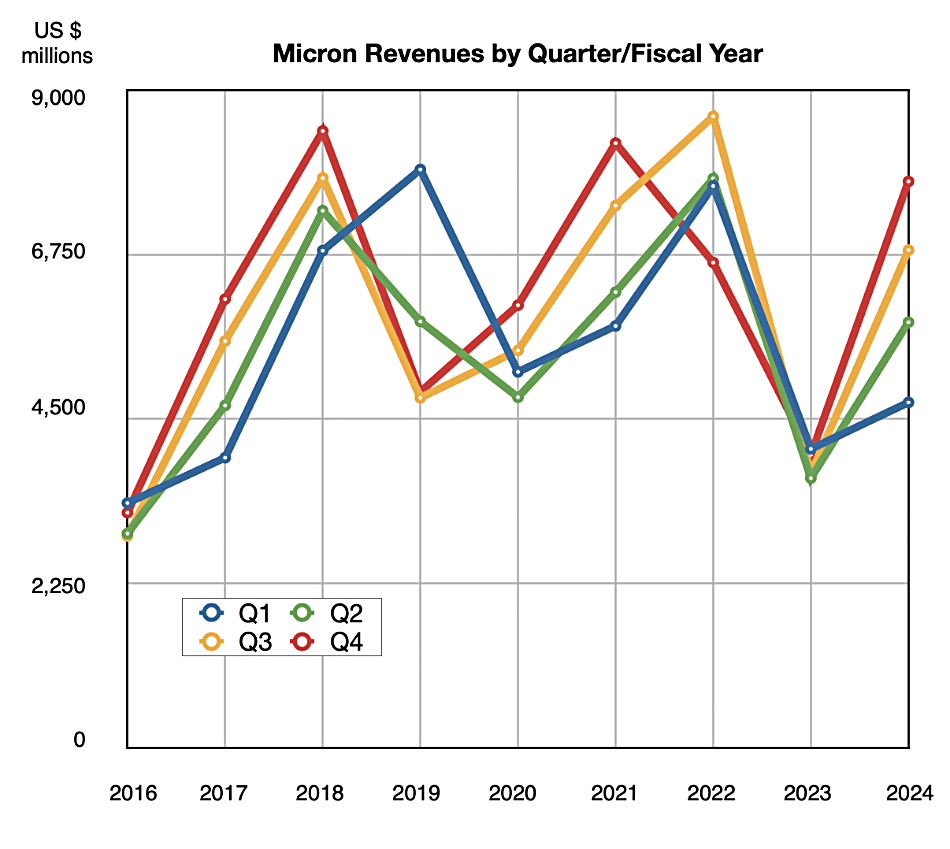

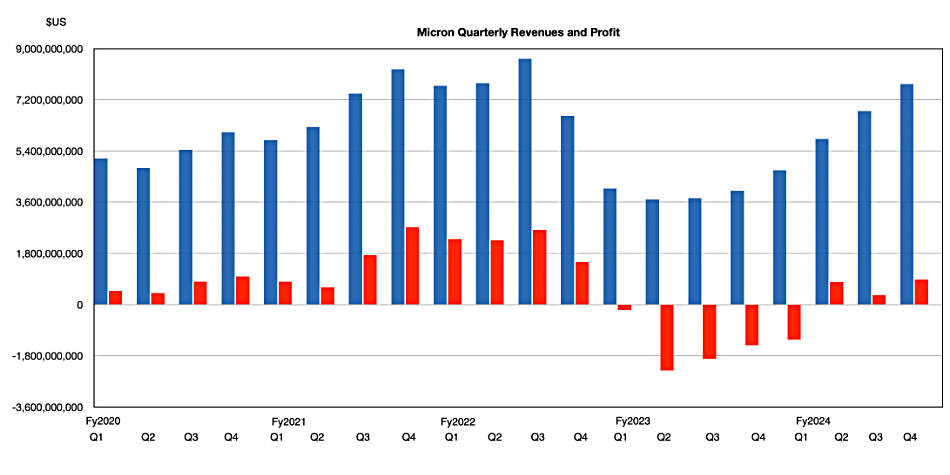

Charlie Giancarlo: “That’s right. And not as much, but they also buy raw SSDs. Roughly 90 percent of the top five hyperscalers, and maybe 70 to 80 percent of the top 10 hyperscalers, are hard disk-based.

“And there’s no question that, at some point in time, in my opinion, it will be all flash. Whether it’s us or SSD. That being said, because of our direct flash technology, we’re at the forefront of this, and we think we bring other value. We’re fairly certain we bring other value to the hyperscaler beyond just the fact that it’s flash rather than disk.

“We’re trying to be very careful when we refer to a hyperscaler design win and a hyperscaler buying our infrastructure. We’re separating that, for example, from AI, even if it’s a hyperscaler buying for AI purposes. The reason is, when we’re talking about the hyperscaler, we’re talking about them buying it for their core customer-facing storage infrastructure.

“In most of the hyperscalers, they have three or four unique layers of storage. Just three or four, you know, some with the lowest possible cost. And I’m talking about online now, not tape, from the lowest price-performance right to their highest price-performance. And every one of their services – of which there could be hundreds or thousands – uses one of the three or four infrastructures that they have in place.

“What we’re talking about at first is just replacing, let’s call it the nearline disk environment. But frankly, what we found is, as we get further along in our conversations, they say, ‘Well, if we’re going to use your technology for that, we might as well use it for all of the layers’ – because we’re not performance-limited, because we’re flash. And so, if you make sense at the lowest price layer, well you also make sense at higher performance layers.”

Blocks & Files: Seagate has been having enormous problems getting its HAMR disk drives qualified by hyperscalers.

Charlie Giancarlo: “There are two problems with disk that Seagate won’t admit. And really I’m not trying to be competitive with disk. But the first is, the I/O doesn’t get any better. You can double the density, and the I/O doesn’t get any better. So eventually it just gets so big that you can’t really use all the capacity that’s in the system.

“And the second is the power, space, and cooling doesn’t get any better. And so between those two things we’ll be at that point in flash. We’re not there yet, but we’ll be at the point where they can give away the disks, but the infrastructure costs will be more than the full system cost.

“The flash chip that’s not being accessed uses practically no power, almost no power. And of course, the chips themselves are getting denser. So it’s not as if you’re using twice as many chips. You’re using the same number and with twice the amount of capacity on each chip. So the thing that uses most of the power out of the DFM [Direct Flash Module] is our microcontroller that runs the firmware that we download into it. Think of it as a co-processor. We don’t need more than one per DFM. We don’t really have any RAM … an SSD has a lot of DRAM on it that uses a lot of power, so we’re lower power than SSDs as well.”

Blocks & Files: Could you envisage a day when a Pure array controller includes GPUs?

Charlie Giancarlo: “I don’t see the purpose of a GPU for the sole purpose of accessing storage and for delivering storage. It wouldn’t accelerate, for example, the recovery of storage. So there’s several questions that would come from that. One is, could the GPU be used for other things – such as maybe some AI enhancement to the way that data is masked or interpreted before being written. The second thought that comes to my mind would be, would a customer want to place an AI workload of one type or another on the same controller?

“I think I would tend to think not on the second one, because it’s too constraining to the enterprise. Because there’s always going to be some constraint, whatever that is – scale, speed, whatever. I think most enterprises would want to separate out their choice of compute – a GPU compute platform – from the storage. Keep application compute and storage largely separate.

“We have been thinking – and I have nothing to announce right now – but we have been thinking that there might be reasons why customers may want to do some AI work on data being written. In order to do things such as auto-masking, to be able to separate out some types of data from other types of data into different buckets that then could be handled in the background, differently than other buckets, to maybe vectorize the data, you could think of it as vectorization.

“Customers have told me this directly. They don’t know which of their files contain PII (Personally Identifiable Information). They don’t know this until after it’s been stolen and they’ve been ransomed.

“So imagine now that you had an engine that could somehow, auto-magically, start to do some amount of separation of the type of data that gets written, such that your PII data is held in a more secure environment than the non-PII data. If your non-PII data is stolen, OK, well, you don’t like it being stolen – but it’s not quite as damaging [as PII data being stolen]. So there are ideas such as that where the answer is maybe, possibly, more to see.”