PARTNER CONTENT: Most retailers are saddled with aging, heterogeneous IT environments spread across wide geographical areas, making it difficult to adopt the latest and greatest advancements of the data-driven, artificial intelligence (AI) age. With the ability to consolidate resources and run new and legacy applications in a cloudlike manner, retailers can embrace AI while getting the most out of legacy investments.

When I think of the modern retailer, I think of “A Tale of Two Realities.” On the one hand, we live in a digital age where analytics, AI and generative AI (GenAI) promise to change the world as we know it. On the other hand, we’re well into the 21st century, and retailers are still dealing with the same challenges they’ve faced for decades, if not centuries. Some reports suggest labor costs are growing, margins are tightening, and shrink and theft losses are at an all-time high. And while AI and GenAI introduce promising capabilities to address these challenges, most retailers are faced with distributed, heterogeneous IT footprints. These two realities leave the modern retail organization like a kid in a candy shop with a pocket full of savings bonds: The value to get what you want is there, but it takes an extra step to unlock it.

For example, think of a typical retailer with hundreds or thousands of brick-and-mortar locations. Then imagine the proverbial IT closet at the back of every store. For most retailers, this closet is a bit of a mess: lots of hardware of varying ages and capabilities from multiple vendors, each box siloed for a particular application and only partially utilized. Each was duly selected and deployed to solve a specific business need, whether that’s running the point-of-sale (POS) machine, the video surveillance system or something else. But a jumble of aging equipment, complete with security vulnerabilities, network connectivity issues and the resulting drain on IT resources, is not the right foundation for the retailers of tomorrow.

You need to be able to deploy new applications at the edge in a cloudlike manner — quickly and cost-effectively. But purchasing and rolling out all new infrastructure to every store is a non-starter; you need to continue leveraging some of these legacy IT investments, both hardware and software.

Addressing the edge attack surface

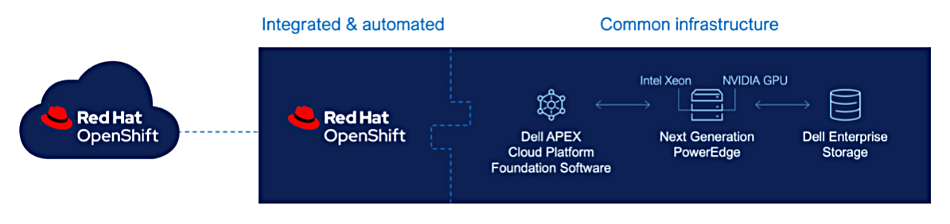

Consolidating workloads with an edge operations software platform built on cloud-based technologies can solve these challenges by decoupling the hardware and software lifecycles, so you can deploy applications as virtual machines (VMs) or in containers. This means you can continue to use software applications for as long as you need to without having to worry about the underlying hardware. There is another powerful plus for retailers and other industries with extremely distributed IT estates: you can now deploy and manage it all remotely.

So, when it comes time to deploy a new application, you can simply select or create an application blueprint (basically a configurable script that may even be site-specific) and deploy it across all locations with just a couple of clicks. Many of these blueprints already exist in public catalogs, or they can be created in private catalogs for home-grown or lesser-known applications.
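To make the blueprint idea a little more concrete, here is a minimal Python sketch of how one set of defaults plus per-site overrides could be expanded into a deployment plan for every store. It is an illustration only, not the format or API of Dell NativeEdge or any other product: the `Blueprint` class, the `plan_rollout` function, the setting names and the store IDs are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class Blueprint:
    """Hypothetical application blueprint: a name, a packaging type and default settings."""
    name: str
    packaging: str  # "vm" or "container"
    defaults: Dict[str, Any] = field(default_factory=dict)


def render_for_site(blueprint: Blueprint, site_id: str,
                    overrides: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Merge the blueprint defaults with one site's overrides into a deployment plan."""
    if blueprint.packaging not in ("vm", "container"):
        raise ValueError(f"unsupported packaging: {blueprint.packaging}")
    # Site-specific values win over the blueprint defaults.
    settings = {**blueprint.defaults, **(overrides or {})}
    return {
        "site": site_id,
        "app": blueprint.name,
        "packaging": blueprint.packaging,
        "settings": settings,
    }


def plan_rollout(blueprint: Blueprint, sites: List[str],
                 site_overrides: Optional[Dict[str, Dict[str, Any]]] = None) -> List[Dict[str, Any]]:
    """Produce one plan per site; sites without overrides get the defaults unchanged."""
    site_overrides = site_overrides or {}
    return [render_for_site(blueprint, s, site_overrides.get(s)) for s in sites]


# Example: the same (made-up) analytics app for two stores, one with more camera feeds.
analytics = Blueprint("shelf-analytics", "container", {"replicas": 1, "camera_feeds": 4})
plans = plan_rollout(
    analytics,
    ["store-001", "store-002"],
    {"store-002": {"camera_feeds": 8}},
)
```

The point of the sketch is the design choice rather than the details: the application is described once, and each location only records what differs, which is what makes a rollout to hundreds of stores a repeatable operation.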

There’s no doubt that expanding your IT footprint expands your attack surface. That’s why deploying new technology with Zero Trust principles is a great start toward mitigating security risks. In this approach, the applications, the data, the system and the infrastructure itself are all cryptographically signed in the factory, and the orchestrator then uses a public key to verify that the device is the exact one that left the factory. That check gives you confidence that nobody has tampered with the device since it left the factory.

As we all know, there’s no such thing as perfect security. Hackers are always looking for ways to infiltrate and compromise systems; it’s a question of when a breach will happen and how you will respond. The best way to address a security breach is to act quickly. Server telemetry can be used in conjunction with an operations software platform to help security teams rapidly detect and respond to breaches. The Dell edge operations software also gives you the ability to restore to a known-good state or quickly tear down a VM and deploy another one.

Taken together, an edge operations software platform like Dell NativeEdge, combined with advanced security and automation features, can help you leverage legacy IT investments, address your biggest business challenges, deploy AI and GenAI at the edge faster and improve cybersecurity, all while reducing truck rolls.

To learn more, you can watch the webinar where Samir Sandesara and I discuss how we helped a large retailer overcome common IT challenges during a recent customer engagement.

Contributed by Dell Technologies.