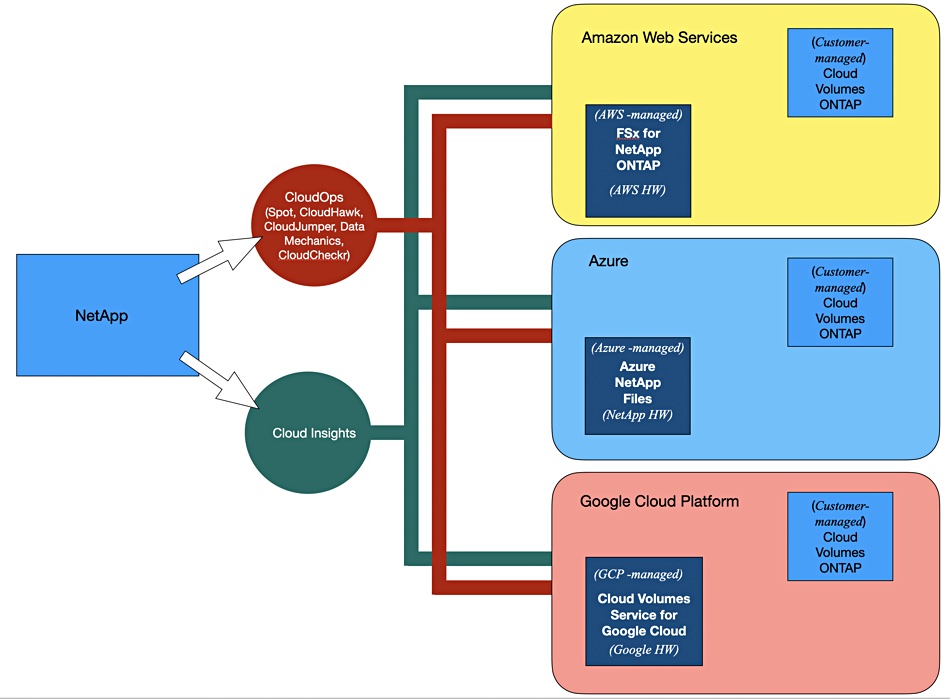

NetApp’s OEM deals with the AWS, Azure and Google public clouds put it ahead of all other file-focused storage providers.

Jason Ader, a William Blair financial analyst, gave subscribers the benefit of his interview with NetApp EVP and general manager for public cloud Anthony Lye. “No other storage vendor’s technology sits behind the user consoles of three big cloud service providers and is treated as a first-party service, sold, supported, and billed by the CSPs themselves.”

He is referring to OEM-type deals in which NetApp’s ONTAP file and block data management software is sold as a service by the three big CSPs: AWS, Azure and Google.

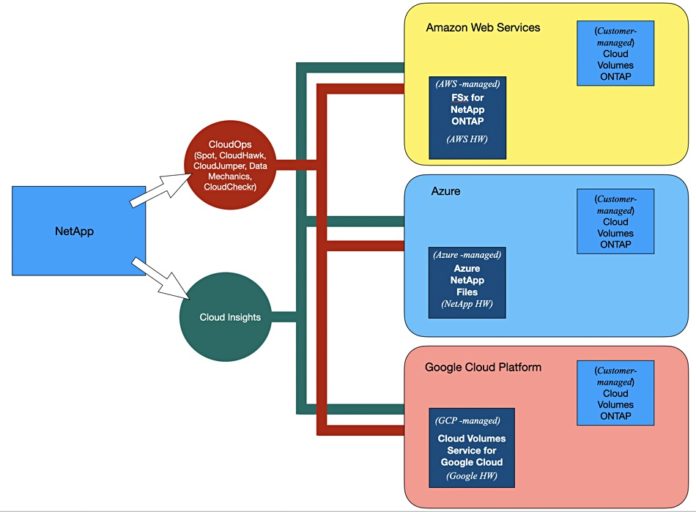

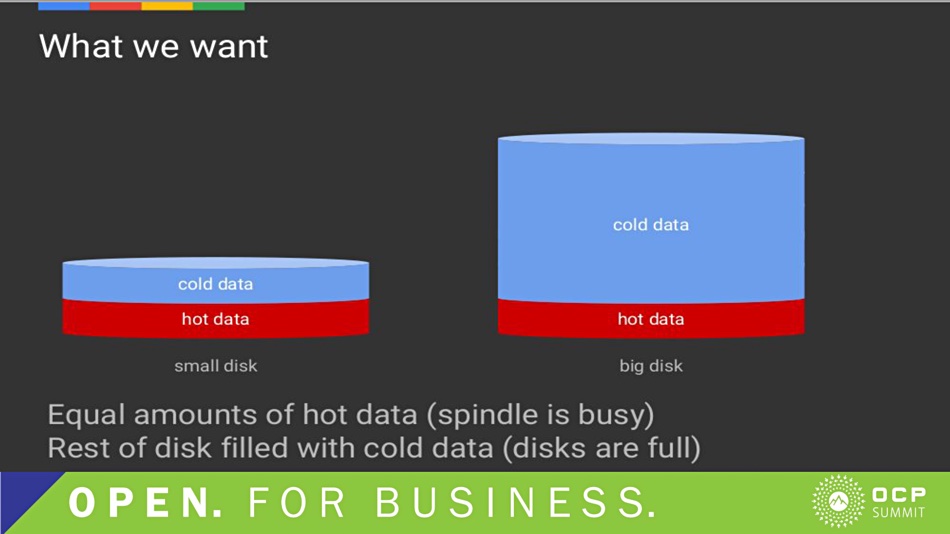

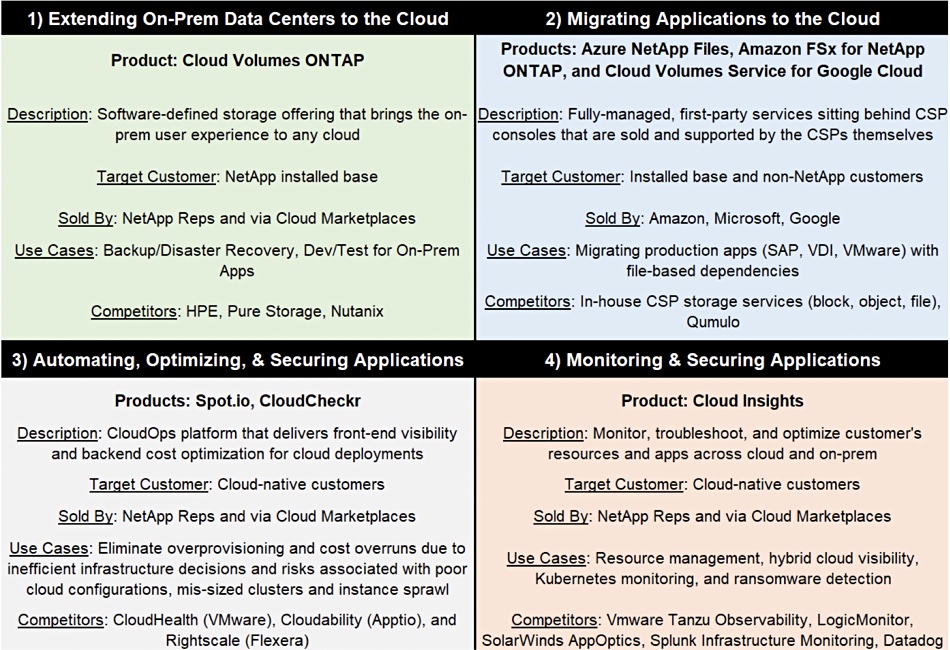

NetApp provides two ways for customers to get ONTAP in the three public clouds. The first is as a self-managed Cloud Volumes ONTAP service with software available through the respective marketplaces, while the second is through services sold, supported and billed by the cloud providers themselves.

These are:

- Amazon FSx for NetApp ONTAP, which runs on AWS hardware;

- Azure NetApp Files, which runs on NetApp hardware located in 35 of Microsoft’s regional Azure datacentres;

- Cloud Volumes Service for Google Cloud, which runs on Google hardware.

NetApp receives a cut of the revenue from these services. Thus, with Azure NetApp Files (ANF), “NetApp is compensated monthly by Microsoft based on sold capacity.” Customers for ANF typically run SAP, VMware-based apps, VDI apps and legacy RDBMS apps. SAP has certified ANF, so “NetApp is able to offer backups, snapshots and clones in the context of the application itself.”

Lye told Ader that Amazon FSx for NetApp ONTAP “took two and a half years to stand up.” And, “because FSx sits behind the AWS console, NetApp has been able to provide native integrations with popular Amazon services like S3, EKS, Lambda, Aurora, Redshift and SageMaker.”

AWS also offers FSx for Lustre and FSx for Windows File Server, but AWS execs “have publicly stated that FSx for NetApp ONTAP is one of AWS’s fastest-growing services right now.” According to Lye, 60 per cent of FSx for ONTAP customers are new to NetApp.

Because NetApp is seen as “the clear leader in file storage and file-based protocols” the three cloud titans “have not felt the need (at least not yet) to integrate as deeply with NetApp’s competitors.”

NetApp also provides a set of CloudOps services (Spot by NetApp) covering DevOps, FinOps and SecOps (development, cost and security) based on five acquired products: Spot, CloudHawk, CloudJumper, Data Mechanics, and CloudCheckr.

They are aimed at customers doing cloud-native application development and at their DevOps architects. Such customers are often new to NetApp and represent a storage cross-sell opportunity.

The company also offers its Cloud Insights facility, a cloud instantiation of its OnCommand Insight software, to help with “storage resource management, monitoring and security”. This is sold both direct to customers and through the three CSP marketplaces. Altogether the Insight offerings cover on-premises, hybrid and public cloud scenarios, supporting legacy and cloud-native applications.

From Ader’s point of view no other enterprise storage supplier is as well-placed as NetApp for providing hybrid cloud storage and data services. Until its competitors catch up — if they ever do — NetApp has a clear lead and its public cloud-related revenues should increase steadily.