PARTNER CONTENT: Organizations are taking too many backups. It’s that simple.

While data is undeniably critical, the endless cycle of creating backups of backups in the name of business continuity has spiralled out of control, consuming massive amounts of storage and driving up costs. Backups are necessary, of course, but their proliferation is expensive. At the same time, cyberattacks, system failures, and natural disasters put data at constant risk. So how does a backup solution strike the right balance between storage costs, functionality, and an appropriate level of security?

This is where backup targets thrive. Adding a backup target to your backup solution strengthens data protection by providing a secure, reliable destination for backup data, enabling quick recovery and safeguarding against data loss or corruption. It can also reduce your storage costs.

This article explores five essential features that your overall backup environment must include to protect your organization’s most valuable asset: its data.

1. Cloud optimization

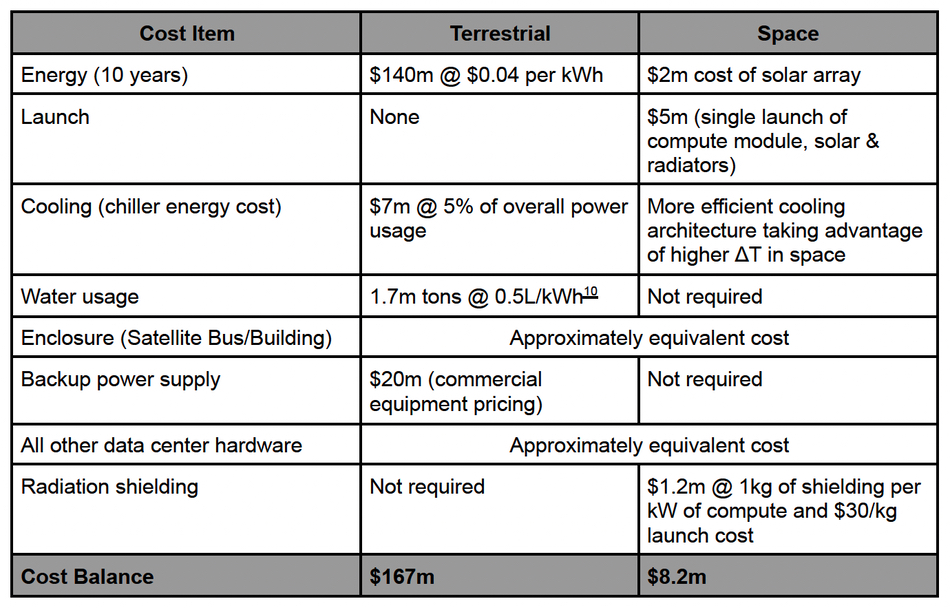

Not all data is equal, yet lower-value data (for example, older or less critical data) is often stored in expensive cloud tiers. Cloud optimization lets businesses take advantage of scalable, low-cost object storage for both structured and unstructured data. When properly implemented, cloud storage offers high scalability, ease of management, and cost efficiency. By making strategic architectural changes and choosing technologies that work in tandem with their backup solution, organizations can avoid costly mistakes and optimize data protection. This approach minimizes cloud storage requirements and expenses, ensuring that data is stored in the most cost-effective manner for its value.
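To make the idea of matching storage cost to data value concrete, here is a minimal sketch of an age-based tiering policy. It is not how any particular product decides placement: the tier names (`hot`, `cool`, `archive`), the 30- and 90-day thresholds, and the `BackupObject`, `choose_tier`, and `plan_tiering` names are all hypothetical, chosen only to show how older backups can be steered away from expensive tiers.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical age thresholds (in days); real values depend on your
# recovery requirements and your cloud provider's pricing.
HOT_MAX_AGE_DAYS = 30
COOL_MAX_AGE_DAYS = 90


@dataclass
class BackupObject:
    name: str
    created: date
    size_gb: float


def choose_tier(obj: BackupObject, today: date) -> str:
    """Return the storage tier a backup object should live in, based on age."""
    age_days = (today - obj.created).days
    if age_days <= HOT_MAX_AGE_DAYS:
        return "hot"
    if age_days <= COOL_MAX_AGE_DAYS:
        return "cool"
    return "archive"


def plan_tiering(objects: list[BackupObject], today: date) -> dict[str, float]:
    """Sum the capacity (GB) that would land in each tier."""
    totals = {"hot": 0.0, "cool": 0.0, "archive": 0.0}
    for obj in objects:
        totals[choose_tier(obj, today)] += obj.size_gb
    return totals


today = date(2024, 6, 30)
backups = [
    BackupObject("daily-0629", date(2024, 6, 29), 120.0),   # 1 day old
    BackupObject("weekly-0505", date(2024, 5, 5), 400.0),   # 56 days old
    BackupObject("monthly-0101", date(2024, 1, 1), 900.0),  # 181 days old
]
print(plan_tiering(backups, today))
# {'hot': 120.0, 'cool': 400.0, 'archive': 900.0}
```

In this toy example only 120 GB of 1,420 GB needs premium storage; the rest can move to cheaper tiers.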

2. Security capabilities

Ransomware attacks are on the rise, threatening to cripple businesses by holding critical data hostage. Encryption in transit and at rest, though essential, is no longer enough on its own. Backup data also needs the protection of immutable backup storage. Immutable backups cannot be modified, deleted, or encrypted by ransomware for as long as they are retained, so attackers cannot tamper with the copies you would recover from. This gives IT administrators confidence that clean data will be available for recovery after a disaster or outage. As a result, organizations are less exposed to ransom demands: cybercriminals have little leverage over a company that can restore from secure, reliable backups, which may prompt them to target less protected organizations instead.
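The core rule behind immutable storage can be sketched as a write-once-read-many (WORM) store with a retention lock: objects can never be overwritten and cannot be deleted until their retention period expires. The in-memory `ImmutableBackupStore` and `RetentionError` below are hypothetical illustrations, not a real product API; cloud object stores expose comparable controls (Amazon S3 Object Lock is one example), enforced by the storage service rather than by client code.

```python
from datetime import datetime, timedelta, timezone


class RetentionError(Exception):
    """Raised when a write or delete would violate immutability."""


class ImmutableBackupStore:
    """Toy WORM store with a retention lock (hypothetical illustration).

    Objects cannot be overwritten, and cannot be deleted until their
    retention period has expired.
    """

    def __init__(self, retention: timedelta):
        self._retention = retention
        # key -> (data, time until which the object is locked)
        self._objects: dict[str, tuple[bytes, datetime]] = {}

    def put(self, key: str, data: bytes, now: datetime) -> None:
        if key in self._objects:
            raise RetentionError(f"{key!r} already exists and is immutable")
        self._objects[key] = (data, now + self._retention)

    def get(self, key: str) -> bytes:
        return self._objects[key][0]

    def delete(self, key: str, now: datetime) -> None:
        _, locked_until = self._objects[key]
        if now < locked_until:
            raise RetentionError(f"{key!r} is locked until {locked_until.isoformat()}")
        del self._objects[key]


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
store = ImmutableBackupStore(retention=timedelta(days=30))
store.put("backup-001", b"payroll snapshot", t0)

try:
    # Simulated ransomware trying to overwrite the backup with ciphertext.
    store.put("backup-001", b"\x00encrypted\x00", t0 + timedelta(days=1))
except RetentionError as exc:
    print("blocked:", exc)

# Deletion is only permitted once the 30-day retention period has passed.
store.delete("backup-001", t0 + timedelta(days=31))
```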

3. Speed of upload and recovery

The speed of backup data uploads matters. Faster uploads mean shorter replication windows, so you can run backups more frequently with minimal disruption, which is especially useful in areas with unreliable WAN connections. Fast recovery matters just as much: it is essential for meeting Recovery Time Objectives (RTOs), so that operations can resume quickly after a disruption. This efficiency translates into higher productivity, and because backups can run more often, less recent data is left unprotected if something goes wrong. In a fast-paced digital world, faster backup and recovery give businesses a competitive edge, improve customer satisfaction, and limit the financial losses caused by unexpected downtime.
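A quick back-of-the-envelope calculation shows why throughput drives both replication windows and RTOs: transfer time is simply data volume divided by effective throughput. The sketch below assumes decimal units (1 GB = 8,000 megabits) and a constant sustained rate; the function names and the example figures (500 GB of nightly changes, a 2 TB restore, a 4-hour RTO) are hypothetical.

```python
def transfer_hours(data_gb: float, throughput_mbps: float) -> float:
    """Hours needed to move data_gb gigabytes at a sustained throughput_mbps (megabits/s)."""
    megabits = data_gb * 8 * 1000  # 1 GB = 8,000 megabits (decimal units)
    return megabits / throughput_mbps / 3600


def meets_rto(data_gb: float, throughput_mbps: float, rto_hours: float) -> bool:
    """True if restoring data_gb at the given throughput fits within the RTO."""
    return transfer_hours(data_gb, throughput_mbps) <= rto_hours


# Replication window for 500 GB of nightly changes at two link speeds.
print(round(transfer_hours(500, 200), 2))   # 5.56
print(round(transfer_hours(500, 1000), 2))  # 1.11

# Can a 2 TB restore meet a 4-hour RTO?
print(meets_rto(2000, 1000, 4))  # False (about 4.44 hours)
print(meets_rto(2000, 2000, 4))  # True (about 2.22 hours)
```

Real transfers rarely sustain the nominal link rate, so in practice you would plug in measured throughput rather than the advertised bandwidth.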

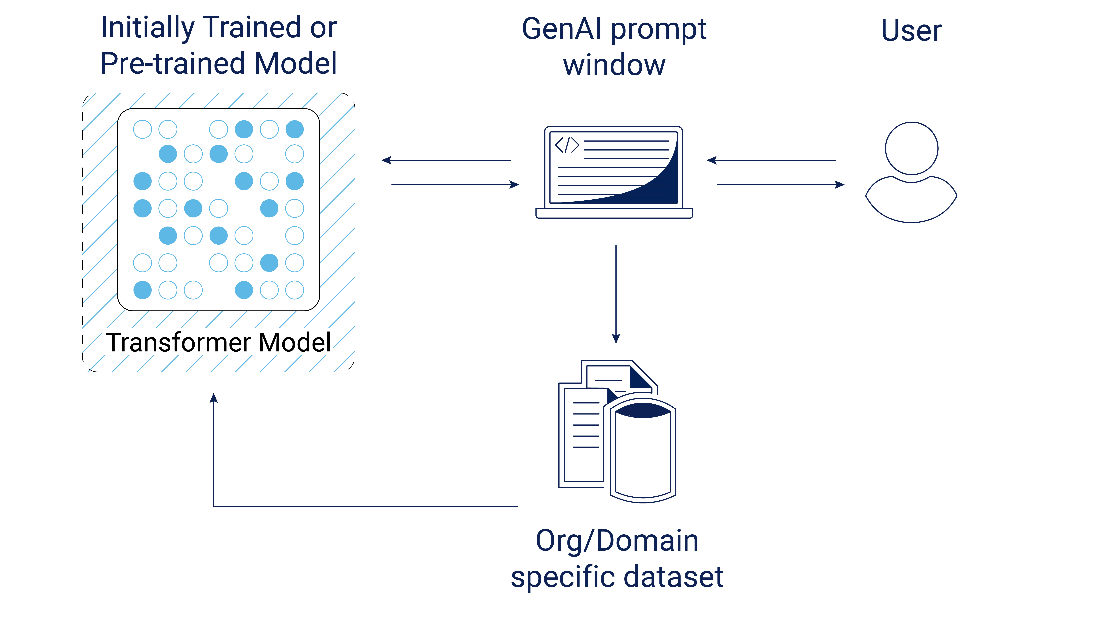

4. Intelligent protection

Imagine being able to detect unusual deviations in backup data in real time. Data manipulated during an attack often first shows up as exactly these deviations. They may look innocuous at first glance, yet they can significantly affect backup data and your ability to recover. New intelligent capabilities, such as AI-based anomaly detection, learn the normal patterns of backup data flow and flag deviations from them. Flagging anomalies at the storage layer, close to the data itself, adds a deep layer of protection that is increasingly essential in today’s threat environment.
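The "learn the normal pattern, flag the deviation" idea can be illustrated with a much simpler technique than the AI-based detection described above: a rolling z-score over the volume of data changed per backup. Mass encryption by ransomware typically rewrites large amounts of data, which tends to show up as a spike in changed bytes. The `flag_anomalies` function, the 7-day window, the threshold of 3 standard deviations, and the sample history are all hypothetical; this is a statistical sketch, not the method any product uses.

```python
from statistics import mean, stdev


def flag_anomalies(daily_changed_gb: list[float], window: int = 7,
                   threshold: float = 3.0) -> list[int]:
    """Return indices of days whose changed-data volume deviates sharply
    from the preceding `window` days (simple rolling z-score test)."""
    flagged = []
    for i in range(window, len(daily_changed_gb)):
        baseline = daily_changed_gb[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all counts as a deviation.
            if daily_changed_gb[i] != mu:
                flagged.append(i)
            continue
        z = (daily_changed_gb[i] - mu) / sigma
        if abs(z) > threshold:
            flagged.append(i)
    return flagged


# GB changed per nightly backup; day 9 looks like a mass rewrite of data.
history = [50, 52, 48, 51, 49, 53, 50, 51, 49, 410, 52]
print(flag_anomalies(history))  # [9]
```

Note that once a spike enters the baseline window it inflates the standard deviation for the following days; a production detector would need to handle that, for example by excluding flagged points from the baseline.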

5. Cost and consumption

Exponential data growth can significantly strain your storage budget and resources. Traditionally, data deduplication has depended on hardware appliances or been restricted to a single vendor’s backup solution. To make the most of your budget, look for a solution with source-side data deduplication and built-in compression. Because redundant data is removed and the remainder compressed before it leaves the source, less data crosses the network and less capacity is consumed on the target, which is particularly beneficial for cloud object storage.
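Source-side deduplication can be sketched in a few lines: the source splits data into chunks, fingerprints each chunk with a cryptographic hash, and sends (compressed) only the chunks the target has not seen before. This is a simplified illustration under stated assumptions: it uses fixed-size 4 KiB chunks (many real products use variable-size, content-defined chunking instead), SHA-256 fingerprints, and `zlib` compression, and the `DedupStore`, `backup`, and `restore` names are hypothetical.

```python
import hashlib
import zlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size; real products often use variable-size chunks


class DedupStore:
    """Toy backup target that keeps each unique chunk once, compressed."""

    def __init__(self):
        self.chunks: dict[str, bytes] = {}  # fingerprint -> compressed chunk

    def has(self, fingerprint: str) -> bool:
        return fingerprint in self.chunks

    def put(self, fingerprint: str, compressed: bytes) -> None:
        self.chunks[fingerprint] = compressed


def backup(data: bytes, store: DedupStore) -> tuple[list[str], int]:
    """Fingerprint chunks at the source and send only chunks the target lacks.

    Returns the recipe (ordered fingerprints needed to rebuild `data`)
    and the number of compressed bytes actually sent to the target.
    """
    recipe, sent = [], 0
    for offset in range(0, len(data), CHUNK_SIZE):
        chunk = data[offset:offset + CHUNK_SIZE]
        fp = hashlib.sha256(chunk).hexdigest()
        recipe.append(fp)
        if not store.has(fp):
            compressed = zlib.compress(chunk)
            store.put(fp, compressed)
            sent += len(compressed)
    return recipe, sent


def restore(recipe: list[str], store: DedupStore) -> bytes:
    """Rebuild the original bytes from the stored, compressed chunks."""
    return b"".join(zlib.decompress(store.chunks[fp]) for fp in recipe)


store = DedupStore()
monday = b"A" * 40_960 + b"customer records v1" * 500
tuesday = b"A" * 40_960 + b"customer records v2" * 500  # mostly unchanged

recipe_mon, sent_mon = backup(monday, store)
recipe_tue, sent_tue = backup(tuesday, store)
assert restore(recipe_tue, store) == tuesday
print(len(monday), sent_mon)    # raw size vs. bytes sent on the first run
print(len(tuesday), sent_tue)   # the second run sends only the changed chunks
```

Because deduplication happens before transfer, both the bandwidth used and the capacity consumed on the target shrink.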

In conclusion, an effective backup and recovery storage solution is vital for protecting an organization’s most valuable asset: its data. By incorporating cloud optimization, robust security capabilities, fast upload and recovery, intelligent protection, and cost-effective consumption, businesses can safeguard their data against threats while keeping storage costs under control and functionality high. Together, these elements keep data secure, accessible, and efficiently managed, so organizations can operate smoothly and resiliently.

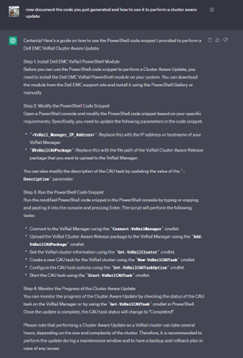

Quest® QoreStor® can address the key backup storage challenges that modern organizations such as yours face. By optimizing storage, enhancing data protection, and integrating seamlessly with cloud environments, QoreStor provides a comprehensive solution for today’s data-driven businesses. Its advanced security technology, versatility across environments, and cost efficiency make it a compelling choice for anyone looking to optimize their storage infrastructure and safeguard their critical data.

Contributed by Quest Software.