Sponsored Feature

Very few people in the software world today would claim that “monoliths” are the best way forward for delivering new features or matching your company’s growth trajectory.

It might be hard to shrug off a legacy database or ERP system completely. But whether you’re tied to an aging, indispensable application or not, most organizations’ software roadmaps are focused on microservices and containers, with developers using continuous delivery and integration to ensure a steady stream of incremental, sometimes radical, improvements for both users and customers.

This marks an increasingly stark contrast with the infrastructure world, particularly when it comes to storage. Yes, relatively modern storage systems will have some inherent upgradability. You can probably add more or bigger disks, albeit within limits that are sometimes arbitrary. And new features do come along eventually, usually in the shape of major operating system or hardware upgrades. You just need to be patient, that’s all.

Unfortunately, patience is in short supply in the tech world. Modern applications involving analytics or machine learning demand ever more data, in double-quick time. Adding more disks, or even appliances, to existing systems can only close the gap so far. And manually tuning systems for changing workloads is hardly a real-time solution.

Large-scale rip-and-replace refreshes are at best a distraction from the business of innovation. At worst they will freeze it or destroy it completely as infrastructure teams struggle to integrate disparate systems with different components and processes. Switching architectures can mean learning entirely new tooling. More perilously, it can mean complicated migrations, which may put data at risk.

Any respite is likely to be temporary as software – and customer – demands quickly increase. Oh, and while all of this is happening, those creaking systems and the data they hold present a tempting target for ransomware gangs and cybercriminals.

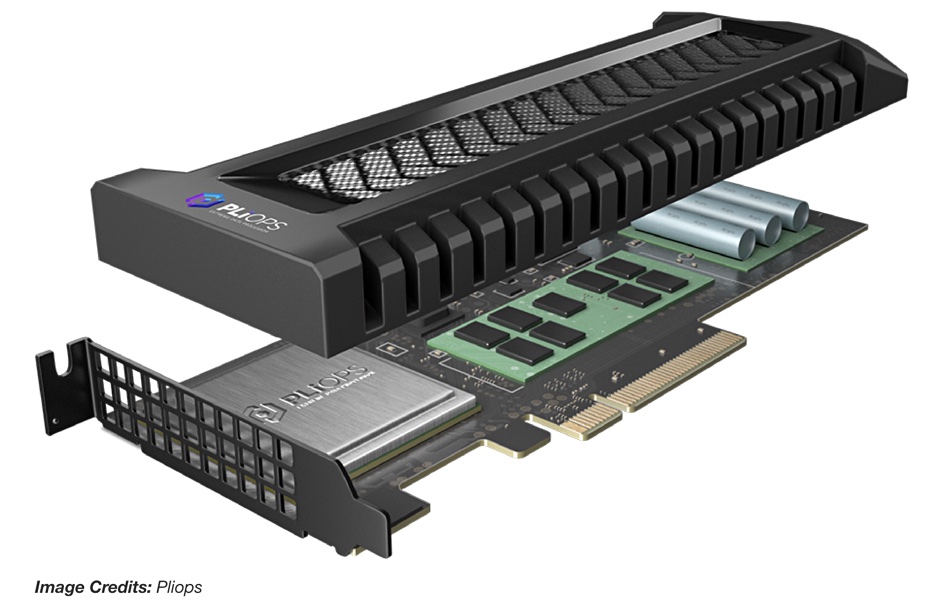

Which is why Dell, with the launch of its PowerStore architecture in 2020, rethought how to build a storage operating system and its underlying hardware to deliver incremental upgradability as well as scalability. At the time, Dell’s portfolio included its own legacy systems such as EqualLogic and SC (Compellent), alongside the XtremIO and Unity lines it inherited through its acquisition of EMC.

As Dell’s PowerStore global technology evangelist Jodey Hogeland puts it, the company asked itself, “How do we do what the industry has done with applications? How do we translate that to storage?”

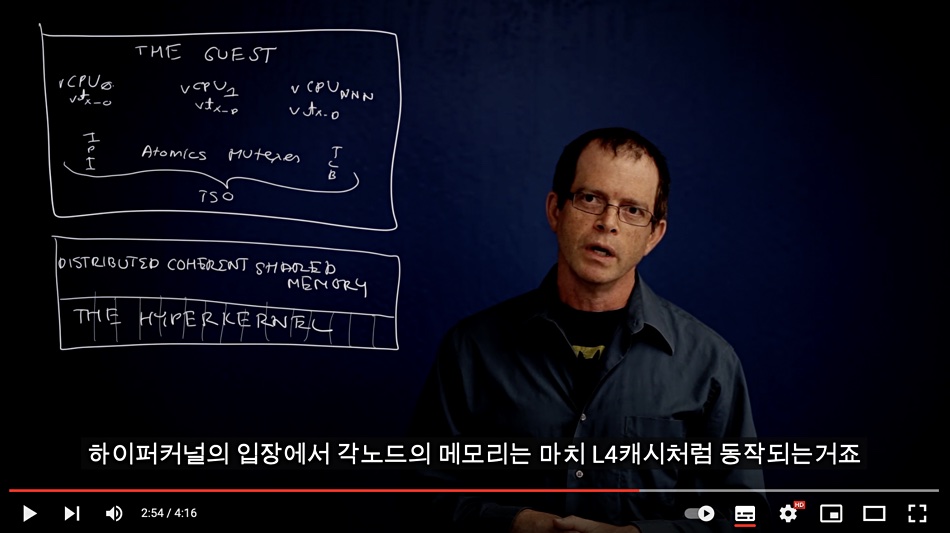

The result is a unified architecture built around a container-based storage operating system, with individual storage management features delivered as microservices. This means refining or adding one feature doesn’t need to wait for a complete overhaul of the entire system.

Containers for enterprise storage? How does that work?

“So, for example, our data path runs as a container,” Hogeland explains. “It’s a unified architecture. So the NAS capabilities or file services run as a container.” Customers have the option “to deploy or not deploy, it’s a click of a button: do I want NAS? Or do I just want a block optimized environment?”

With other vendors, having file-based storage and block-optimized hardware would typically mean two completely different platforms. The comparative flexibility of Dell’s unified architecture is also one of the reasons why PowerStore provides an upgrade path for all those earlier Dell and EMC storage lines. A core feature of the PowerStore interface is a GUI-based “import external storage” option, which supports all of Dell’s pre-existing products, offering auto discovery and data migration.

More importantly, the container-based approach means the vendor has been able to massively ramp up the pace of innovation on the platform since launch, says Hogeland. “It’s almost like updating apps on your cell phone, where it’s, ‘Hey, there’s this new thing available, but I don’t have to go through a major update of everything every few months.’”

The latest PowerStore update, 3.0, was launched in May and is the most significant to date, delivering over 120 new software features. Its debut coincided with the launch of the next generation of hardware controllers for the platform. Existing Dell customers can easily add these to the appliances they already own through Dell’s Anytime Upgrade program. This allows them to choose one of two data-in-place upgrade options on existing kit or get a discount on a second appliance.

The new controllers feature the latest Intel Xeon Platinum processors, which add a number of cybersecurity enhancements – silicon-based protection against malware and malicious modifications, for example, as well as Hardware Root of Trust protection and Secure Boot. And enhanced support for third-party external key managers increases data-at-rest encryption security and protects against theft of the entire array.

The controller upgrades also bring increased throughput via 100GbE support, up from 25GbE, and expanded support for NVMe. The original platform offered NVMe in the base chassis, but further expansion meant switching to SAS drives. Now the expansion chassis also offers automated, self-discovering NVMe support. PowerStore 3.0 software enhancements make the most of those features with support for NVMe over VMware virtual volumes, or vVols, developed in collaboration with VMware. This is in addition to the NVMe over TCP capabilities that Dell launched earlier this year.

Preliminary tests suggest this combination of new features can deliver a performance boost of as much as 50 per cent on mixed workloads, with writes up to 70 per cent faster, and a tenfold speed boost for copy operations. Maximum capacity is increased by two thirds to 18.8PBe per cluster, with up to eight times as many volumes as the previous generation.

But it’s one thing to deliver more horsepower, another to give admins the ability to easily exploit it. The rise of containerization in the application world has happened in lockstep with increased automation. Likewise, it’s essential to allow admins to manage increasingly complex storage infrastructure without diverting them from other, more valuable work. This is where automation, courtesy of PowerStore’s Dynamic Resiliency Engine, comes into play.

We have the power…now what do we do with it?

For example, Hogeland explains, a cluster can include multiple PowerStore appliances. If a user needs to create 100 volumes, how do they work out which is the most appropriate place to host them? The answer is, they shouldn’t have to. “In PowerStore today, you can literally say ‘I want to create 100 volumes.’ There’s a drop down that says auto disperse those workloads, based on what the requirements are, and the cluster analytics that’s going on behind the scenes.”
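To make the idea of automatic dispersal concrete, here is a minimal Python sketch of one possible placement heuristic: each new volume goes to whichever appliance is currently least utilized. This is purely illustrative. The `Appliance` class, the `auto_disperse` function, the capacities, and the least-utilized rule are all assumptions made for this example; the article does not describe the analytics PowerStore actually uses.

```python
from dataclasses import dataclass, field


@dataclass
class Appliance:
    """Hypothetical model of one appliance in a cluster (not a Dell API)."""
    name: str
    capacity_gb: int
    used_gb: int = 0
    volumes: list = field(default_factory=list)

    @property
    def utilization(self) -> float:
        return self.used_gb / self.capacity_gb


def auto_disperse(appliances, count, size_gb, prefix="vol"):
    """Place `count` volumes, each on the least-utilized appliance with room.

    Assumed heuristic for illustration only.
    """
    placement = {}
    for i in range(count):
        # Only consider appliances that can still hold a volume of this size.
        candidates = [a for a in appliances
                      if a.used_gb + size_gb <= a.capacity_gb]
        if not candidates:
            raise RuntimeError(f"cluster has no room for volume {i}")
        target = min(candidates, key=lambda a: a.utilization)
        name = f"{prefix}-{i:03d}"
        target.volumes.append(name)
        target.used_gb += size_gb
        placement[name] = target.name
    return placement


# Made-up cluster: one busy appliance and two lightly loaded ones.
cluster = [
    Appliance("appliance-1", capacity_gb=10_000, used_gb=6_000),
    Appliance("appliance-2", capacity_gb=10_000, used_gb=2_000),
    Appliance("appliance-3", capacity_gb=20_000, used_gb=4_000),
]
placement = auto_disperse(cluster, count=100, size_gb=50)
for appliance in cluster:
    print(appliance.name, len(appliance.volumes), f"{appliance.utilization:.0%}")
```

In this toy cluster the already busy appliance receives no new volumes, while the other two absorb the load in proportion to their free capacity. A real system would presumably weigh performance and other requirements as well as capacity.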

At the same time, automation at the back end of the array, such as choosing the most appropriate back-end path, is completely transparent to the administrator regardless of what is happening on the host side. “We can make inline real time adjustments on the back end of the array to guarantee that we’re always 100 per cent providing the best performance the array can provide at any given time,” says Hogeland.

With PowerStore 3.0, this has been extended to offer self-optimization allied to incremental growth of the underlying infrastructure. When it comes to hardware upgrades, the PowerStore platform can scale up from as few as six flash modules to over 90. And, Hogeland points out, “We can mix and match drive sizes, we can mix and match capacities. And we can do single drive additions.”

As admins build up their system, the system will self-optimize accordingly. “As a customer goes beyond these thresholds, where they might get a better raw to usable ratio, because of the number of drives that are now in the system, PowerStore automatically begins to leverage that new width.”

“I don’t have to worry about reconfiguring the pool. There’s only one pool in PowerStore. I don’t have to move things around or worry about RAID groups or RAID sets,” adds Hogeland.
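The raw-to-usable point Hogeland makes can be illustrated with simple arithmetic. In a parity-protected stripe, the usable share of raw capacity is the number of data drives divided by the total stripe width, so wider stripes waste proportionally less on parity. The sketch below assumes single-parity stripes and picks the widths 4+1, 8+1 and 16+1 purely as examples; the article does not state which layouts or thresholds PowerStore uses.

```python
def usable_fraction(stripe_width: int, parity_drives: int = 1) -> float:
    """Share of raw capacity left for data in one parity-protected stripe.

    `stripe_width` counts data and parity drives together. The layouts used
    below are illustrative assumptions, not PowerStore's documented geometry.
    """
    if stripe_width <= parity_drives:
        raise ValueError("stripe must contain at least one data drive")
    return (stripe_width - parity_drives) / stripe_width


# Hypothetical stripe widths: 4+1, 8+1 and 16+1.
for width in (5, 9, 17):
    data_drives = width - 1
    print(f"{data_drives}+1 stripe: {usable_fraction(width):.1%} usable")
```

Under these assumptions, moving from a 4+1 to an 8+1 stripe lifts the usable share from four fifths to eight ninths of raw capacity, which is the kind of gain a system could pick up automatically as drives are added.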

On a broader scale, version 3.0 adds the ability to use PowerStore to create a true metro environment for high availability and disaster recovery without the need for additional hardware and licensing, or indeed cost. “The 3.0 release leverages direct integration with VMware and vSphere, Metro stretch cluster,” says Hogeland, meaning the sites can be up to 100km apart. Again, this is a natively integrated capability, which Hogeland says requires just six clicks to set up.

This native level of integration with VMware means “traditional” workloads such as Oracle or SQL Server instances are landing on PowerStore, says Hogeland, along with virtual infrastructure and VDI deployments.

At the same time, Dell also provides integration with Kubernetes. A CSI Driver enables K8s-based systems, including Red Hat OpenShift, to use PowerStore infrastructure for persistent storage. “Massive shops that are already on the bleeding edge of K8s clusters are building very robust, container-based applications infrastructure on PowerStore.”

Which shows what you can do when you grasp that storage architectures, like software architectures, don’t have to be monolithic, but can be built to be flexible, even agile.

As Hogeland points out, historically it has taken storage players six or seven years to introduce features like Metro support, or the advanced software functions seen in PowerStore, into their architectures: “We’ve done it in roughly 24 months.”

Sponsored by Dell.