Verge.io, the revitalized and renamed Yottabyte, has added GPU virtualization to its virtual datacenter software, its take on HCI and composable systems.

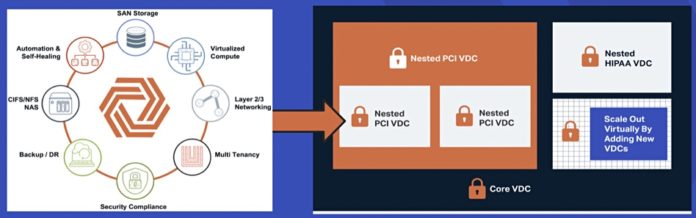

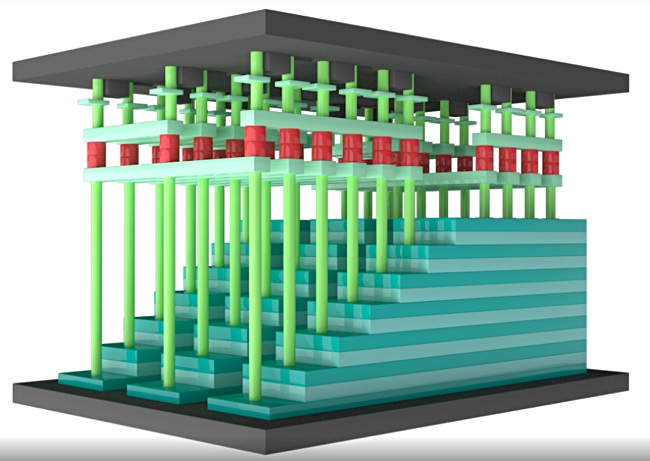

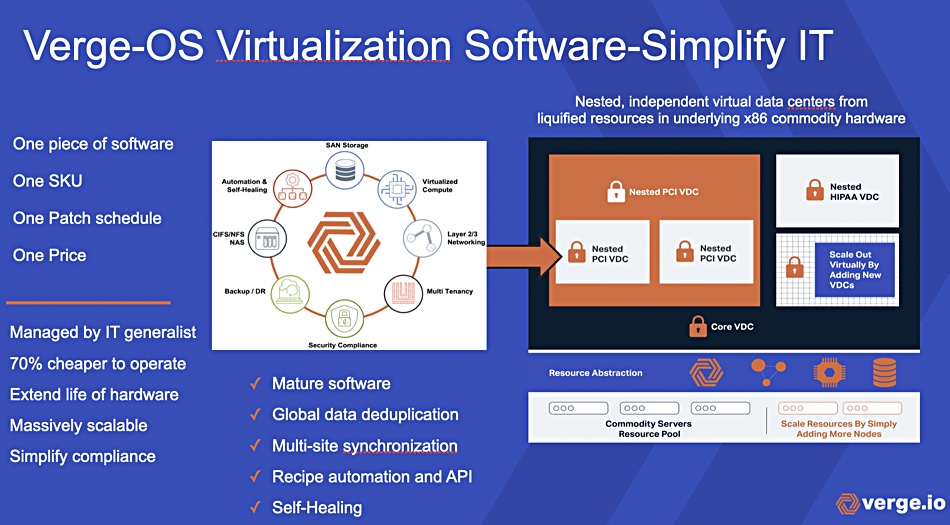

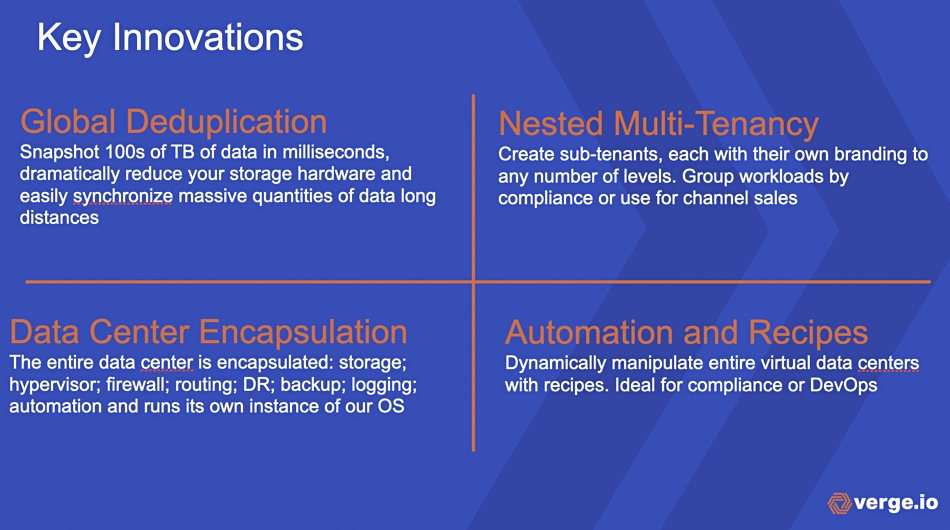

HCI (hyperconverged infrastructure) builds an IT datacenter from standard server-storage-networking boxes, with storage pooled across them like a virtual SAN. Verge.io's software does the same, but then subdivides the pooled compute, storage and networking resource (the abstracted datacenter) into virtual datacenters (VDCs). These can be used by the customers of its MSP clients. An MSP can create VDCs from a master VDC and nest them, so that its customers experience a dedicated datacenter that is actually carved out of a shared MSP VDC resource.
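To make the nesting idea concrete, here is a minimal conceptual sketch in Python. It is not Verge.io's API: the `Resources` and `VirtualDatacenter` classes, the three resource dimensions and the strict-partitioning rule (a child VDC can only receive what its parent has not already handed out) are all hypothetical assumptions for illustration. The real Verge-OS may account for resources differently, for example by allowing overcommitment.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Resources:
    # Hypothetical resource dimensions; Verge-OS's real accounting model may differ.
    cpu_cores: int
    ram_gb: int
    storage_tb: int

    def fits_within(self, other: Resources) -> bool:
        return (self.cpu_cores <= other.cpu_cores
                and self.ram_gb <= other.ram_gb
                and self.storage_tb <= other.storage_tb)

    def minus(self, other: Resources) -> Resources:
        return Resources(self.cpu_cores - other.cpu_cores,
                         self.ram_gb - other.ram_gb,
                         self.storage_tb - other.storage_tb)


@dataclass
class VirtualDatacenter:
    # Hypothetical VDC: a named slice of its parent's resources that can be subdivided again.
    name: str
    allocation: Resources
    children: list[VirtualDatacenter] = field(default_factory=list)

    def free(self) -> Resources:
        # Whatever this VDC has not yet handed down to its child VDCs.
        remaining = self.allocation
        for child in self.children:
            remaining = remaining.minus(child.allocation)
        return remaining

    def create_child(self, name: str, allocation: Resources) -> VirtualDatacenter:
        # Strict partitioning (an assumption): refuse requests larger than what is left.
        if not allocation.fits_within(self.free()):
            raise ValueError(f"{self.name} cannot supply {allocation} to {name}")
        child = VirtualDatacenter(name, allocation)
        self.children.append(child)
        return child


# An MSP's master VDC, a customer VDC nested inside it, and a further nested VDC.
master = VirtualDatacenter("msp-master", Resources(128, 1024, 200))
tenant = master.create_child("customer-a", Resources(32, 256, 50))
dept = tenant.create_child("customer-a-dev", Resources(8, 64, 10))
print(master.free())  # Resources(cpu_cores=96, ram_gb=768, storage_tb=150)
print(tenant.free())  # Resources(cpu_cores=24, ram_gb=192, storage_tb=40)
```

Each customer sees only its own `VirtualDatacenter` object, which is the sense in which a shared pool can look like a dedicated datacenter.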

Verge.io CEO Yan Ness said: “Our users are increasingly needing GPU performance, from scientific research to machine learning, so vGPU and GPU Passthrough are simple ways to share and pool GPU resources as they do with the rest of their processing capabilities.”

The company's software allows users and applications with access to a virtual datacenter to share the computing resources of a single GPU-equipped server. With GPU passthrough, users and/or administrators can create a virtual machine with direct access to that GPU and its resources.

Alternatively, Verge.io can manage the virtualization of the GPU and serve up virtual GPUs (vGPUs) to virtual datacenters, manageable on the same platform as all other VDC shared resources.
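The difference between the two modes can be sketched as a toy allocation model. This is a hypothetical illustration only: the `Gpu` class, its memory-based sharing rule and the VM names are assumptions, and no Verge.io or Nvidia API is used or implied. Passthrough hands the whole device to one VM; vGPU lets several VMs each take a slice of the same device. Nvidia licensing, which the article notes is required, is not modelled.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Gpu:
    # Hypothetical model of one physical GPU in a server.
    name: str
    memory_gb: int
    passthrough_vm: str | None = None
    vgpu_vms: dict[str, int] = field(default_factory=dict)

    def used_memory_gb(self) -> int:
        return sum(self.vgpu_vms.values())

    def attach_passthrough(self, vm: str) -> None:
        # Passthrough: one VM gets the whole device, so it must be otherwise unused.
        if self.passthrough_vm is not None or self.vgpu_vms:
            raise RuntimeError(f"{self.name} is already in use")
        self.passthrough_vm = vm

    def attach_vgpu(self, vm: str, memory_gb: int) -> None:
        # vGPU: the device is shared, each VM receiving a slice of its memory.
        if self.passthrough_vm is not None:
            raise RuntimeError(f"{self.name} is passed through to {self.passthrough_vm}")
        if self.used_memory_gb() + memory_gb > self.memory_gb:
            raise RuntimeError(f"{self.name} has insufficient memory for {vm}")
        self.vgpu_vms[vm] = memory_gb


# One GPU shared as vGPUs between two workloads, another dedicated via passthrough.
shared = Gpu("gpu0", memory_gb=48)
shared.attach_vgpu("ml-training", 24)
shared.attach_vgpu("vdi-pool", 12)
print(shared.used_memory_gb())  # 36

dedicated = Gpu("gpu1", memory_gb=48)
dedicated.attach_passthrough("research-vm")
```

The trade-off the sketch captures is exclusivity versus pooling: passthrough gives one VM the full device, while vGPU lets the platform manage the GPU as another shared resource.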

Servers in a VDC run Verge-OS, which encapsulates a datacenter. The on-disk copy of this datacenter can be snapshotted and then replicated to other servers, with global deduplication reducing the amount of data involved. Verge-OS instances are patched in a single operation, making them simpler to maintain than some HCI software products.

Darren Pulsipher, Intel’s chief solution architect of public sector, commented: “The market is looking for simplicity, and Verge-OS is like an ‘Easy Button’ for creating a virtual cloud that is so much faster and easier to set up than a private cloud. With Verge-OS, my customers can migrate and manage their datacenters anywhere and upgrade their hardware with zero downtime.”

Customers need to obtain an Nvidia license for running the GPU hardware as part of Verge.io’s system.

Verge.io and composable systems

By carving up its overall VDC resource into sub-VDCs, Verge.io is effectively composing datacenters. Ness says that composable server systems software, such as that from Liqid, is aimed at preventing servers from having stranded component resources, such as compute or flash storage, and at maximizing their use by making them shareable. The focus of such composed systems is an application.

The focus of Verge.io’s “composed” VDCs is an MSP customer or end user who needs to run a set of applications, not one. A VDC could be set up for a single application, such as VDI, but is more commonly set up to run several. The focus is on datacenter administration simplicity and effectiveness, not resource stranding.

Comment

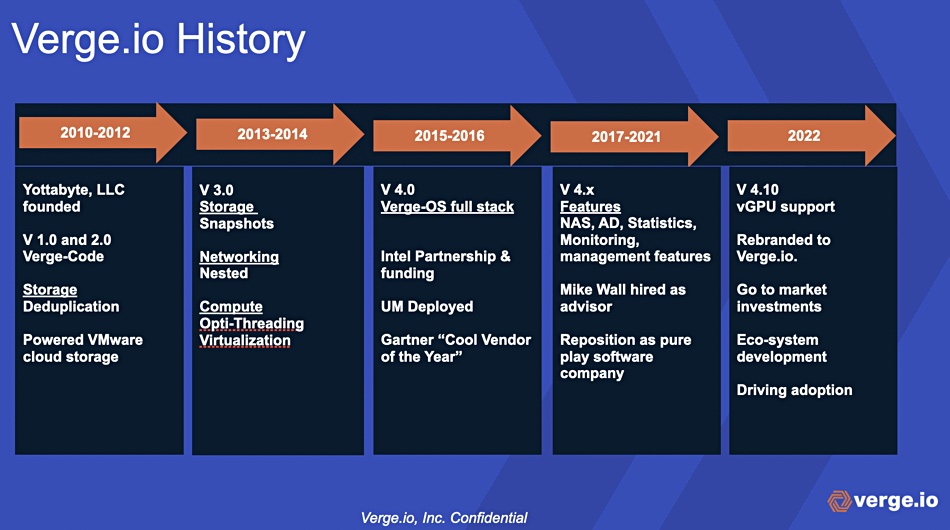

Yottabyte ran into problems, described here. By the end of 2018 it had been rebranded as Verge.io. Then new investors came on board, including Ness, who came out of retirement. They saw great potential in the business's software but thought it needed redirecting to new markets: MSPs, universities, and enterprises, in that order. A key attribute of the software is its simplicity of management and use. They pruned the business, brought a new, smaller but more focused sales organisation on board, and set out on a new course.

It currently has around 15 employees. There used to be 50. Ness said: “We tore it down to only what we need.” It has some 45 customers. Verge.io is now, Ness says, a startup with a mature product. This small company is set on punching well above its weight.