Composable systems supplier Liqid has added MemVerge’s Big Memory technology to its list of disaggregated resources, enabling it to compose DRAM plus storage-class memory, such as Optane, into its servers.

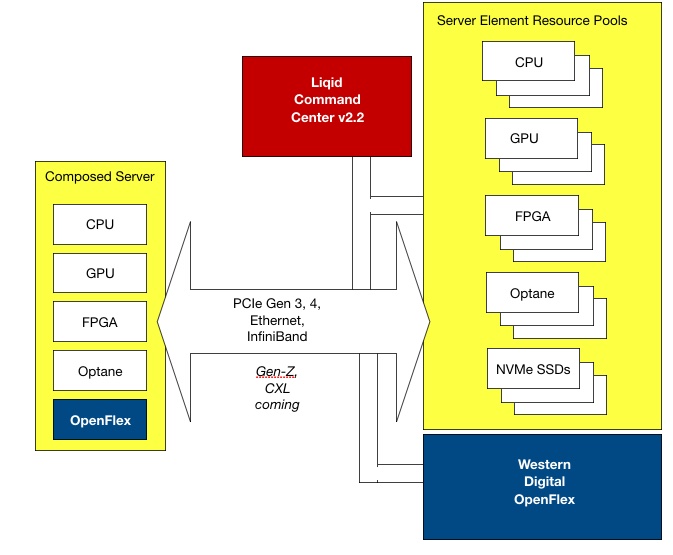

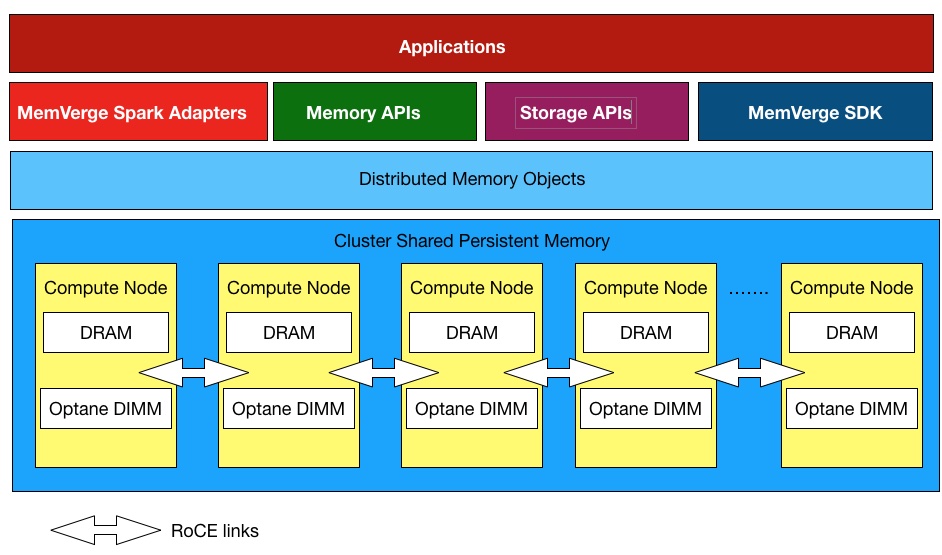

Liqid’s software composes dynamic virtual servers using pools of separate CPU+DRAM, GPU, Optane SSD, FPGA, networking, and storage resources accessed over a PCIe fabric. Composed servers are deconstructed after use and their elements returned to the source pools for reuse. MemVerge’s Big Memory technology combines DRAM and Optane DIMM persistent memory into a single clustered memory pool that existing applications can use with no code changes. Now Liqid can compose memory, meaning DRAM and Optane DIMMs, separately from CPU+DRAM and so achieve partial external memory pooling.
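The compose-and-release cycle described above can be pictured with a small Python sketch. This is purely illustrative and is not Liqid’s or MemVerge’s API: the `ResourcePool`, `Composer`, and `ComposedServer` names, the resource kinds, and the pool sizes are all hypothetical, and real composition happens over the PCIe fabric rather than in a dictionary of counters.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ResourcePool:
    """Hypothetical pool of interchangeable devices of one kind."""
    kind: str
    free: int


@dataclass
class ComposedServer:
    """Hypothetical record of the devices attached to one composed server."""
    name: str
    devices: Dict[str, int] = field(default_factory=dict)


class Composer:
    """Toy model of composing servers from pools and returning devices."""

    def __init__(self, pools: Dict[str, int]) -> None:
        self.pools = {kind: ResourcePool(kind, n) for kind, n in pools.items()}

    def compose(self, name: str, request: Dict[str, int]) -> ComposedServer:
        # Validate the whole request first so a failure leaves pools untouched.
        for kind, count in request.items():
            pool = self.pools.get(kind)
            if pool is None or pool.free < count:
                raise ValueError(f"cannot satisfy {count} x {kind}")
        for kind, count in request.items():
            self.pools[kind].free -= count
        return ComposedServer(name, dict(request))

    def decompose(self, server: ComposedServer) -> None:
        # Return every device to its source pool for reuse.
        for kind, count in server.devices.items():
            self.pools[kind].free += count
        server.devices.clear()


# Assumed pool sizes, chosen only for illustration.
composer = Composer({"cpu_dram_node": 4, "gpu": 8, "optane_pmem_dimm": 16})
server = composer.compose(
    "analytics-1", {"cpu_dram_node": 1, "gpu": 2, "optane_pmem_dimm": 8}
)
composer.decompose(server)  # all devices are back in their pools
```

The point of the sketch is that memory (here the assumed `optane_pmem_dimm` kind) is requested like any other pooled device, independently of the CPU+DRAM node it is attached to.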

Ben Bolles, Liqid’s Executive Director for Product Management, said: “Intel Optane-based solutions from MemVerge and Liqid provide near-memory data speeds for applications that must maximize compute power to effectively extract actionable intelligence from the deluge of real-time data.”

Liqid’s Matrix composable disaggregated infrastructure (CDI) and MemVerge Memory Machine software can pool and orchestrate DRAM and storage-class memory (SCM) devices such as Intel Optane Persistent Memory (PMem) in flexible configurations with GPU, NVMe storage, FPGA, and other accelerators to match workload requirements. The companies are targeting memory-intensive applications at scale, with customer use cases including AI/ML, HPC, in-memory databases, and data analytics.

Bernie Wu, MemVerge VP for Business Development, said composable systems are “a solid platform that customers can leverage for deployment of future memory hardware from Intel, in-memory data management services from MemVerge, and composable disaggregated software from Liqid.”

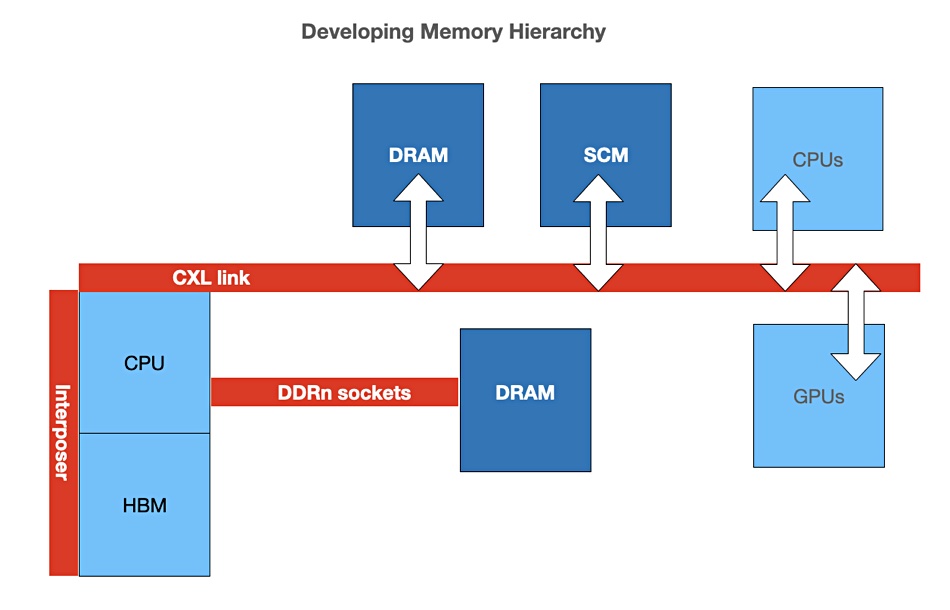

The next-generation, PCIe 5-based CXL (Compute Express Link) interconnect protocol will enable the disaggregation of DRAM from the CPU, and allow both the CPU and other accelerators to share memory resources. Liqid and MemVerge say this will deliver the final disaggregated element necessary to implement truly software-defined, fully composable datacenters.

Kristie Mann, VP of Product for the Optane Group at Intel, agreed, saying: “The future transformative power of CXL is difficult to overstate.” Liqid and MemVerge deliver systems “that provide functionality now that CXL will bring in the future,” she added.

“Their solution creates a layer of composable Intel Optane-based memory for true tiered memory architectures. Solutions such as these have the potential to address today’s cost and efficiency gaps in big-memory computing, while providing the perfect platform for the seamless integration of future CXL-based technologies.”

Liqid and MemVerge say their combined system will enable users to achieve exponentially higher utilization and capacity for Optane PMem. Users will be able to pool and deploy Optane PMem in tandem with other datacenter accelerators to reduce big-memory analytical operations from hours to minutes.

Another potential user benefit is increased VM and workload density for enhanced server consolidation, resulting in reduced capital and operational expenditure. Finally, users will be able to run far more in-memory database computing for real-time data analysis and get faster results from previously memory-limited and IO-bound applications.

The joint Liqid-MemVerge systems are available now.