Given that customers need to dispose of old disk, tape and solid state drives, what problems might they face? We were briefed by Chris Greene, VP and global head of sales and operations, asset lifecycle management business at Iron Mountain, on this surprisingly complicated topic.

He said: “The biggest challenge facing most organizations is the significant number of devices that may need to be dealt with at any given time … across hundreds – if not thousands – of locations, all while ensuring that this process is compliant with local regulations.”

That could mean tens of drives a year for smaller organizations, and hundreds, or even more than a thousand, for large ones.

If organizations don’t dispose of devices properly, confidential information could fall into the wrong hands. To prevent this, devices need secure transport to disposal sites, and a secure chain of custody needs to be in place. So, Greene says: “Organizations should use a trusted third-party service provider who can provide a record of all the devices that are either recycled or destroyed.”

How should they dispose of old disk drives?

Greene is keen on doing things right: “Secure disposal practices start with strong data protection and asset management practices – drives should be encrypted, tracked, and maintained via tags tied to an inventory system.” And then: “Organizations need to choose a secure data destruction and erasure method. Shredding and wiping (with overwriting software such as Iron Mountain’s Teraware) are acceptable methods for all media types, and degaussing is acceptable for magnetic media (i.e. not SSDs).”

Wiping via overwriting software removes all the data, but leaves the drive intact and ready to be reformatted and reused. Shredding physically destroys the medium so that it can no longer be read or used, and it can be quicker and less expensive than wiping.

Degaussing uses a powerful magnet to alter the data on magnetic media such as tape and disk drives. It can be cheaper and quicker than wiping, but is not as scalable as shredding.
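To make the rule of thumb concrete, here is a minimal sketch of the media-to-method mapping Greene describes: shredding and wiping for every media type, degaussing for magnetic media only. The `Media` enum and the `acceptable_methods` function are hypothetical names of our own, not part of any Iron Mountain tooling.

```python
from enum import Enum


class Media(Enum):
    """Media types discussed in the article (names are illustrative)."""
    HDD = "hard disk drive"
    TAPE = "tape cartridge"
    SSD = "solid state drive"


# Degaussing only works on magnetic media, so SSDs are excluded.
MAGNETIC = {Media.HDD, Media.TAPE}


def acceptable_methods(media: Media) -> set[str]:
    """Return the destruction/erasure methods named as acceptable for a media type."""
    methods = {"shred", "wipe"}      # acceptable for all media types
    if media in MAGNETIC:
        methods.add("degauss")       # acceptable for magnetic media only
    return methods


for media in Media:
    print(media.name, sorted(acceptable_methods(media)))
```

A real policy would carry more detail than this, such as the SSD-specific wiping caveats covered further down.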

Bureaucracy is inescapable in Greene’s view: “It is important that each device’s destruction is logged and reconciled to an asset management system. [This] provides an audit trail of destruction required for regulatory compliance.”
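As a rough illustration of what reconciling a destruction log against an asset management system could look like, the sketch below compares two sets of asset tags. The tag format, the sample data, and the `reconcile` function are all our own assumptions for the sake of the example.

```python
def reconcile(inventory: set[str], destruction_log: set[str]) -> dict[str, set[str]]:
    """Compare asset tags due for disposal with the tags a provider logged as destroyed."""
    return {
        # Tags present in both: destruction is accounted for.
        "confirmed": inventory & destruction_log,
        # Tags we expected to be destroyed but have no record for.
        "missing_from_log": inventory - destruction_log,
        # Tags the provider logged that our inventory does not know about.
        "unknown_in_log": destruction_log - inventory,
    }


# Hypothetical sample data.
inventory = {"HDD-0001", "HDD-0002", "SSD-0101"}
destruction_log = {"HDD-0001", "SSD-0101", "TAPE-0900"}

report = reconcile(inventory, destruction_log)
for status, tags in report.items():
    print(status, sorted(tags))
```

Anything that lands in the second or third bucket is a gap in the audit trail that would need chasing up.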

There is an environmental angle here, as Greene points out: “Favoring destruction over wiping also has negative implications for an organization’s sustainability goals. Destroying or recycling a device can be up to 20 times more energy-intensive than extending the useful life of the hard drives through remarketing or redeployment. Drives also contain potentially harmful and toxic materials and must be disposed of properly to avoid any negative environmental impacts.”

Can’t users just do three consecutive full block overwrites of all ones, all zeroes and then all ones, and then sell off the now cleaned drive in safety?

Greene says: “No. Organizations should use certified software that can confirm all data has been successfully wiped. There’s always a risk of malfunctions when it comes to wiping data, which makes it important to verify that a wipe has occurred. Most of the leading data sanitization software solutions have the verification step built in.”
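To show why the verification step matters, here is a toy sketch of a three-pass overwrite followed by a read-back check. It runs against an in-memory buffer standing in for a drive, and it is in no sense certified sanitization software. The `overwrite_and_verify` function and the `DropsWrites` class, which simulates a device that silently ignores writes, are hypothetical names of our own.

```python
import io

BLOCK = 4096
PASSES = (b"\xff", b"\x00", b"\xff")   # all ones, all zeroes, all ones


def overwrite_and_verify(dev, size: int) -> bool:
    """Overwrite `size` bytes three times, then read back and check the final pattern."""
    for pattern in PASSES:
        dev.seek(0)
        remaining = size
        while remaining:
            n = min(BLOCK, remaining)
            dev.write(pattern * n)
            remaining -= n

    # Verification: every byte must now match the last pattern written.
    dev.seek(0)
    remaining = size
    while remaining:
        n = min(BLOCK, remaining)
        if dev.read(n) != PASSES[-1] * n:
            return False
        remaining -= n
    return True


class DropsWrites(io.BytesIO):
    """Simulated faulty device: reports success but never stores the data."""

    def write(self, data):
        self.seek(len(data), io.SEEK_CUR)   # advance position, keep old contents
        return len(data)


payload = b"secret customer data" * 1000
healthy = io.BytesIO(payload)
faulty = DropsWrites(payload)

print(overwrite_and_verify(healthy, len(payload)))  # True
print(overwrite_and_verify(faulty, len(payload)))   # False
```

Without the read-back, both runs would look identical from the outside, which is the malfunction risk Greene is pointing at.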

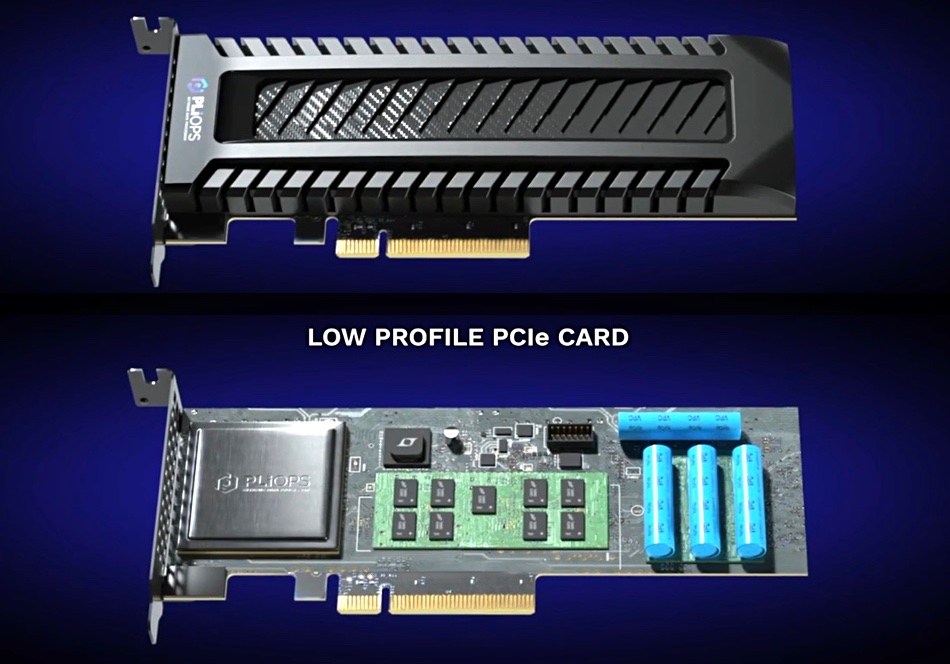

We asked Greene about any problems we should be aware of when disposing of SSDs. He came up with a wear-leveling angle that was news to us and means a different wiping technology has to be used.

“SSDs’ wear leveling will remove certain SSD sections from use, but these decommissioned sections may still retain data. Wiping will not write to these sections, leaving data behind.”

The answer is: “By using whole SSD encryption, all of the data on the drive will become unreadable without the decryption key. By then formatting the drive and removing the encryption key, it can be securely disposed of without the risk of any data remaining on the drive.”

If you decide to shred the SSD instead, then the shredding machinery needs to cope with small form-factor SSDs, such as M.2 drives and USB sticks. Otherwise the drive could pass through unscathed and, of course, retain its data.

Unless they are in obsolete formats, tape cartridges can readily be reused instead of being destroyed. Greene said Iron Mountain “can remove data from these tapes by degaussing the tape tracks and wiping the chip. This enables the tapes to be reused as opposed to being incinerated or put into a landfill. This is currently available in the UK and is being piloted for roll-out across mainland Europe.”

Older, obsolete tapes are best incinerated, as some of their component materials – such as polyethylene naphthalate (PEN) with barium ferrite (BaFe) magnetic pigment – mean they cannot be recycled. This also applies to snapped, burned, or chemically damaged tapes, as expert bad actors could recover data from them.

How can Iron Mountain help here in general?

“When it comes to drive disposal, we can use our software Teraware to securely wipe devices and have facilities around the world that are R2 (Sustainable Recycling) compliant and equipped with shredding equipment. In addition, we can also ensure a secure chain of custody through our global fleet of locked, alarmed, and GPS monitored vehicles. Our fleet of vehicles is also equipped with onboard drive shredding equipment.”

And the costs? It depends.

“Costs depend on the nature of a customer’s requirements, such as media type, location, and volumes. If assets can be remarketed, value can be returned directly to the customer, which can offset the cost of erasing or disposing of the data.”

It’s not cheap then.

But Greene would say this: “When evaluating cost, organizations should also consider the financial and reputational costs of data breaches. In 2022, the global average cost of a data breach was $4.35 million, according to IBM. Therefore, companies cannot afford to risk the significant costs of a data breach and should prioritize safely disposing of their IT assets.”

We don’t know the average cost of a data breach involving data recovered from a badly disposed-of junk drive, but suspect it could be less. Still, reputational damage can last a long time, so drive disposal had better be carried out in a considered and effective way.