Blocks & Files wanted to look more deeply into Xinnor’s claims about xiRAID, its faster-than-RAID-hardware software for NVMe SSDs, particularly in light of its performance test results. We questioned Dmitry Livshits, Xinnor’s CEO, about the tech.

Blocks & Files: Can Xinnor’s xiRAID support SAS and SATA SSDs? What does it do for them?

Dmitry Livshits: Although xiRAID was designed primarily for NVMe, it can also work very well with SAS/SATA SSDs.

You just need to make sure that the server OS is properly enabled for multiple queues, that schedulers are properly selected, and that multipath is configured. We have guidelines for that.
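
As a rough illustration of the scheduler check mentioned above, the sketch below reads the active IO scheduler for each block device from Linux sysfs. The `/sys/block/<device>/queue/scheduler` file and its bracketed format are standard Linux; the helper names are hypothetical, and which scheduler is the right choice for xiRAID is covered by Xinnor's own guidelines, which this sketch does not encode.

```python
from pathlib import Path


def active_scheduler(scheduler_line: str) -> str:
    """Return the scheduler shown in [brackets] in a sysfs scheduler line."""
    for token in scheduler_line.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    raise ValueError(f"no active scheduler in {scheduler_line!r}")


def report_schedulers(sys_block: Path = Path("/sys/block")) -> dict[str, str]:
    """Map each block device to its active scheduler (empty on non-Linux hosts)."""
    report = {}
    for sched_file in sorted(sys_block.glob("*/queue/scheduler")):
        device = sched_file.parent.parent.name  # .../<device>/queue/scheduler
        try:
            report[device] = active_scheduler(sched_file.read_text())
        except (OSError, ValueError):
            report[device] = "unknown"
    return report


if __name__ == "__main__":
    for device, scheduler in report_schedulers().items():
        print(f"{device}: {scheduler}")
```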

There are some nuances that have to do with the capabilities of the SAS HBA controller, but in general there are no problems. NVMe has several significant advantages over SAS/SATA, but let’s not forget that SAS JBOD is the easiest and cheapest way to add flash capacity to a server or a storage system, not to mention that many SAS backplane-enabled servers are out there in the field.

We tested JBOFs from various vendors with xiRAID and got results close to 100 percent of IOM throughput (several dozen GBps with RAID5 and RAID6). The main nuance with SAS/SATA is random write operations. These generate additional reads and writes and, as a result, the HBA chip gets clogged with IOs and performance is lower than expected.
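
The extra reads and writes can be counted with the textbook read-modify-write model for parity RAID. The sketch below is a generic illustration under that assumption, not a description of xiRAID internals; the function name is hypothetical.

```python
def small_write_ios(parity_drives: int) -> dict[str, int]:
    """Device IOs caused by one small (sub-stripe) write with read-modify-write.

    The old data block and every old parity block are read, then the new
    data block and every updated parity block are written back.
    """
    reads = 1 + parity_drives
    writes = 1 + parity_drives
    return {"reads": reads, "writes": writes, "total": reads + writes}


for level, parity in (("RAID5", 1), ("RAID6", 2)):
    ios = small_write_ios(parity)
    print(f"{level}: {ios['reads']} reads + {ios['writes']} writes "
          f"= {ios['total']} device IOs per host write")
```

Every one of those device IOs passes through the HBA, which is why random writes load the controller far more heavily than the host-side IO count suggests.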

Blocks & Files: When xiRAID is working on an HDD system how does it manage RAID rebuilds and how long do they take?

Dmitry Livshits: To work with spinning disks, we make several important changes to the xiRAID code, without which RAID is extremely difficult to use:

- Support of caching mechanisms with replication to the second node (for HA configurations)

- Array recovery using declustered RAID technology

The basic idea of this technology is to place many spare zones over all drives in the array and restore the data of the failed drive to these zones. This solves the main problem of the speed of array rebuild.

Traditional RAID rebuild is limited by the write performance of the single disk being rebuilt. Disk performance growth is minimal these days: manufacturers are getting closer to areal density limits, and only one head is active at a time. At the same time, drive capacity is mostly increasing by adding more platters, so there’s a clear downward trend in the MB/s per TB ratio. Dual-actuator drives help to some extent, but they’re not easily accessible and, even with doubled performance, still take a long time to rebuild. Today the largest CMR drive is 22TB; next we can expect 24TB and more after that.
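
A back-of-the-envelope calculation shows the scale of the problem. The sketch below assumes a sustained write rate of 250 MB/s for the replacement drive and no competing host IO; both are illustrative assumptions, not figures from Xinnor, and the function names are hypothetical.

```python
def rebuild_hours(capacity_tb: float, write_mb_per_s: float) -> float:
    """Best-case hours to rewrite a whole drive at a constant write rate."""
    capacity_mb = capacity_tb * 1_000_000  # decimal TB to MB
    return capacity_mb / write_mb_per_s / 3600


def mb_per_s_per_tb(capacity_tb: float, write_mb_per_s: float) -> float:
    """Throughput available per TB of capacity; it falls as drives grow."""
    return write_mb_per_s / capacity_tb


ASSUMED_RATE = 250.0  # MB/s, assumed for illustration only

for capacity in (22, 24):
    print(f"{capacity}TB: {rebuild_hours(capacity, ASSUMED_RATE):.1f} h, "
          f"{mb_per_s_per_tb(capacity, ASSUMED_RATE):.1f} MB/s per TB")
```

Under these assumed numbers a 22TB drive needs roughly a full day to rebuild even in the best case, and each capacity increase at the same write rate stretches that further.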

The task of placing spare zones for recovery, for all its simplicity at first glance, is a serious problem. The zones must be set up so that they maintain the required level of fault tolerance and at the same time provide the best possible load balancing – the highest possible rebuild rate.
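
To see why placement is harder than it looks, consider a deliberately naive layout that simply rotates each stripe and its spare zone across the drives. This is a toy sketch with hypothetical names, not Xinnor's "maps": it satisfies the fault-tolerance constraint but spreads the rebuild load unevenly.

```python
from collections import Counter

Layout = list[tuple[list[int], int]]  # (member drives, spare drive) per stripe


def rotated_layout(num_drives: int, stripe_width: int) -> Layout:
    """Stripe i uses drives i..i+width-1 (mod N); its spare zone is the next drive."""
    if stripe_width + 1 > num_drives:
        raise ValueError("need at least stripe_width + 1 drives")
    layout = []
    for i in range(num_drives):
        members = [(i + j) % num_drives for j in range(stripe_width)]
        spare = (i + stripe_width) % num_drives
        layout.append((members, spare))
    return layout


def is_fault_tolerant(layout: Layout) -> bool:
    """Each stripe keeps all its blocks and its spare zone on distinct drives."""
    return all(len(set(members + [spare])) == len(members) + 1
               for members, spare in layout)


def rebuild_load(layout: Layout, failed: int) -> Counter:
    """Count rebuild IOs per surviving drive after one drive fails."""
    load = Counter()
    for members, spare in layout:
        if failed in members:
            for drive in members:
                if drive != failed:
                    load[drive] += 1  # read a surviving block of the stripe
            load[spare] += 1          # write the reconstructed block
    return load


layout = rotated_layout(num_drives=12, stripe_width=4)
load = rebuild_load(layout, failed=0)
print("fault tolerant:", is_fault_tolerant(layout))
print("IOs per surviving drive:", {d: load[d] for d in range(1, 12)})
```

In this toy layout the drives next to the failed one do most of the rebuild work while several others sit idle, so the rebuild rate is bounded by the busiest neighbour rather than by the whole array.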

We spent about a year researching and making these “maps” and we hope to introduce our declustered RAID to the market in 2023.

Blocks & Files: Is xiRAID a good fit with ZFS and what does a xiRAID-ZFS combination bring to customers?

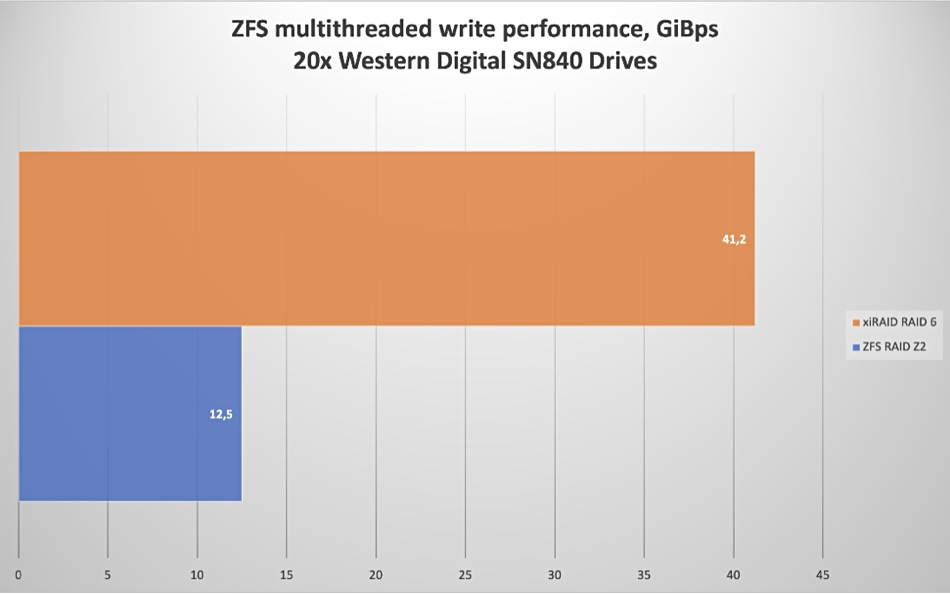

Dmitry Livshits: Now that we see many systems emerging with PCIe Gen5, the main interconnect for HPC and AI will switch to 400Gbps. Also, in HPC one of the most in-demand parallel file systems (PFS) is Lustre, which uses ZFS as the back-end file system at the OSS level. The ZFS-integrated RAIDZ2 limits the write speed to 12.5 GBps, and this presents a major bottleneck. Replacing RAIDZ2 with striping is risky and mirroring doubles the cost. As a result, we get an unbalanced storage system that should be able to do much more, but in fact doesn’t utilize the underlying hardware fully. The ZFS developers understand the bottlenecks and have made changes to improve performance, such as DirectIO.

By placing ZFS on top of xiRAID 6 we were able to increase sequential write performance several times over, fully saturating a 400 Gbit/s network.
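
The size of the gap follows from a unit conversion. The sketch below uses only the two figures quoted in the interview (a 400 Gbit/s link and the 12.5 GBps RAIDZ2 write limit) and ignores protocol overhead; the function names are hypothetical.

```python
def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert a link rate in Gbit/s to GB/s (8 bits per byte, no overhead)."""
    return gbit_per_s / 8


def link_utilization(storage_gbyte_per_s: float, link_gbit_per_s: float) -> float:
    """Fraction of the link that the storage back end can fill."""
    return storage_gbyte_per_s / gbit_to_gbyte(link_gbit_per_s)


link_gbyte = gbit_to_gbyte(400)
share = link_utilization(12.5, 400)
print(f"400 Gbit/s link = {link_gbyte:.1f} GB/s")
print(f"RAIDZ2 at 12.5 GB/s fills {share:.0%} of it")
```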

Now we have work to do to optimize random small IOs.

Blocks & Files: Can xiRAID support QLC (4bits/cell) SSDs and how would a xiRAID+QLC SSD system look to customers in terms of latency, speed, endurance and rebuild times?

Dmitry Livshits: Logical scaling is one of the main ways to make flash cheaper, but it has several trade-offs. For example, QLC Solidigm drives have a very good combination of storage cost and sequential performance levels; they are even faster than many TLC-based drives at 64k aligned writes. But once you try small or unaligned writes, the drive’s performance drops drastically and so does endurance.

Today xiRAID can be used with QLC drives for read-intensive workloads and for specific write-intensive tasks that have large and deterministic block sizes, so that we can tune IO merging and array geometry to write fully aligned pages to the drives.
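
The geometry condition can be sketched as a simple alignment check. The example assumes a 64 KiB drive alignment unit (taken from the "64k aligned writes" remark above; the real unit depends on the drive model) and a hypothetical 8+2 array; none of the names reflect xiRAID's actual configuration interface.

```python
KIB = 1024


def chunk_is_aligned(chunk_kib: int, drive_align_kib: int = 64) -> bool:
    """True if each per-drive chunk is a whole number of drive alignment units."""
    return chunk_kib > 0 and chunk_kib % drive_align_kib == 0


def full_stripe_bytes(data_drives: int, chunk_kib: int) -> int:
    """Bytes of user data in one full stripe (parity chunks excluded)."""
    return data_drives * chunk_kib * KIB


def is_full_stripe_write(offset: int, length: int, stripe_bytes: int) -> bool:
    """True if the write starts on a stripe boundary and covers whole stripes."""
    return length > 0 and offset % stripe_bytes == 0 and length % stripe_bytes == 0


# Hypothetical array: 8 data drives + 2 parity, 64 KiB chunks.
stripe = full_stripe_bytes(data_drives=8, chunk_kib=64)
print("chunk aligned to drive:", chunk_is_aligned(64))
print("stripe size (KiB):", stripe // KIB)
print("1 MiB write at offset 0:", is_full_stripe_write(0, 1024 * KIB, stripe))
print("4 KiB write at offset 4 KiB:", is_full_stripe_write(4 * KIB, 4 * KIB, stripe))
```

Writes that pass the full-stripe check reach each drive as whole aligned chunks with no read-modify-write; anything smaller is the small or unaligned traffic that hurts QLC performance and endurance.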

Non-deterministic workloads and small random IO are not a good case for QLC, [and] we would have to develop our own FTL (Flash Translation Layer) to properly place data on the drives. But FTL requires significant processing resources, and it could potentially be a good addition to xiRAID once the product has been ported to run on DPU/IPU.

For large capacity SSDs, our declustered RAID functionality under development will be very useful.

Blocks & Files: I understand xiRAID runs in Linux kernel mode. Could it run in user space and what would it then provide?

Dmitry Livshits: Yes, we started to develop xiRAID as a Linux kernel module so that as many applications as possible could take advantage of it without modification. Today we see a lot of possibilities to work inside SPDK, the ecosystem developed by Intel for using storage in user space. SPDK itself does not have a data protection mechanism ready to use right now. RAID5f still does not have the functionality needed and is limited in use because only whole stripes can be written. xiRAID ported to SPDK will have full support for all important RAID functions, deliver the highest performance on the market and work with any type of IO.

On top of that, it is designed with disaggregated environments in mind:

- With smart rebuilds for network-attached devices (there’s more on that in one of our blogs on xinnor.io, but basically, we manage to keep the RAID volume online through many potential network failures, unless they happen to affect the same stripe).

- RAID volume failover capability in case of host failure.

This should already make xiRAID interesting for current SPDK users who today need to use replication. Also, moving to SPDK and user space makes it much easier to integrate xiRAID with other vendors’ storage software.

Blocks & Files: Could xiRAID execute in a DPU such as NVIDIA’s BlueField? If so what benefits would it bring to server applications using it?

Dmitry Livshits: xiRAID can already run on a DPU, but we want to use the SPDK-ported version for further development. This is because the key DPU vendors rely specifically on SPDK as part of their SDKs: Nvidia and the open source IPDK.

A DPU solves two important tasks at once: it offloads storage services (RAID, FTL, etc.) and provides an NVMf initiator.

We have done a lot to make xiRAID consume a very modest amount of CPU/RAM, but still a lot of customers want to see all their server resources allocated to the business application. Here a DPU helps a lot.

Another advantage of a DPU that we see is plug-n-play capability. Imagine a scenario where a customer rents a server from a service provider and needs to allocate storage to it over NVMf. The customer has to set up a lossless network on the machine, the initiator, multipathing, RAID software and volumes. This is a challenge even for an experienced storage administrator, and the complexity could be prohibitive for many IaaS customers. A DPU with xiRAID onboard could move this complexity from the customer-managed guest OS back into the provider’s hands, while all the customer sees is the resulting NVMe volume.

We link our future with the development of technologies that will allow the main storage and data transfer services to be placed on a DPU, thus ensuring the efficiency of the data center. Herein lies our core competence – to create high-performance storage services using the least amount of computing resources. And if I briefly describe our technology strategy, it goes like this:

- We develop efficient storage services for hot storage technologies

- Today we make solutions for disaggregated infrastructures and DPU

- Soon we will start to optimize for ZNS and Computational Storage devices

Blocks & Files: Could xiRAID support SMR (Shingled Magnetic Recording) disk drives and what benefits would users see?

Dmitry Livshits: Same as with QLC devices, we can work with Drive-Managed and Host-Aware SMR drives in deterministic environments characterized by read-intensive or sequential write loads: HPC infrastructures (Tier-2 Storage), video surveillance workloads, backup and restore. All three industries have similar requirements to store many petabytes with high reliability and maximum storage density.

SMR allows us to have the highest density at the lowest $/TB.

xiRAID with multiple parity and declustered RAID gives one of the best levels of data availability in the industry.

Host-managed SMR and other use cases require a translation layer. This is not our priority, but as software we can be easily integrated into systems with third-party SMR translation layers.

Blocks & Files: The same question in a way; could xiRAID support ZNS (Zoned Namespace) drives and what benefits would users see?

Dmitry Livshits: Like host-managed SMR, zoned NVMe will require us to invest heavily in development. We have already worked out the product vision and started research. Here again, we see FTL as an SPDK bdev device functioning on a DPU.

This would allow customers to work with low-cost ZNS drives, which by default are not visible in the OS as standard block devices, without modifying applications or changing established procedures, and all extra workloads would stay inside the DPU.

Blocks & Files: What is Xinnor’s business strategy now that it has established itself in Israel?

Dmitry Livshits: We are looking at the industry to see where we could add the most value. The need for RAID is ubiquitous – we’ve seen installations of xiRAID in large HPC clusters and 4-NVMe Chia crypto miners, in autonomous car data-loggers and in video production mobile storage units.

We definitely see HPC and AI as an established market for us, and we’re targeting it specifically by doing industry-specific R&D, running proofs of concept and talking to HPC customers and partners.

We are also working with the manufacturers of storage and network components and platforms. RAID in a lot of cases is a necessary function to turn a bunch of storage devices into a ready datacenter product. Ideally, we are looking for partners with expertise to combine our software with the right hardware and turn the combination into a ready storage appliance. We believe that xiRAID could be the best engine under the hood of a high-performance storage array.

And of course, we are looking for large end-users that would benefit from running xiRAID on their servers to reduce TCO and improve performance. After all, RAID is the cheapest way to protect data on the drives. With the industry switch to NVMe, the inability of traditional RAID solutions to harness new speeds is a big issue. We believe that we’ve solved it.