Q&A How does Pensando Systems work its server speed-enhancing magic?

The venture-backed startup came out of stealth in late October and NetApp was revealed as a customer earlier this week.

When it came out of stealth to announce a $145m Series C round, Pensando claimed its proprietary accelerator cards perform five to nine times better in productivity, performance and scale than AWS Nitro.

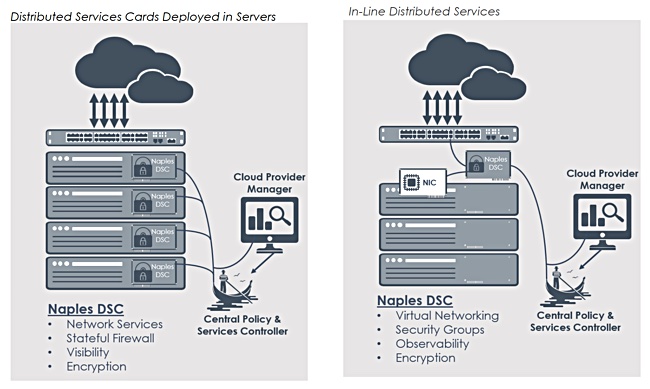

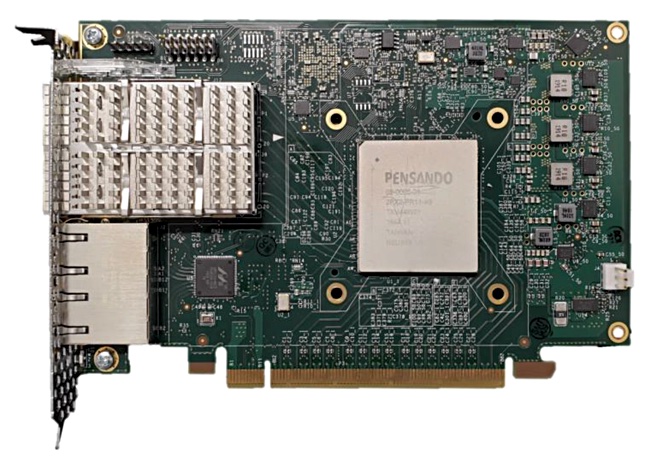

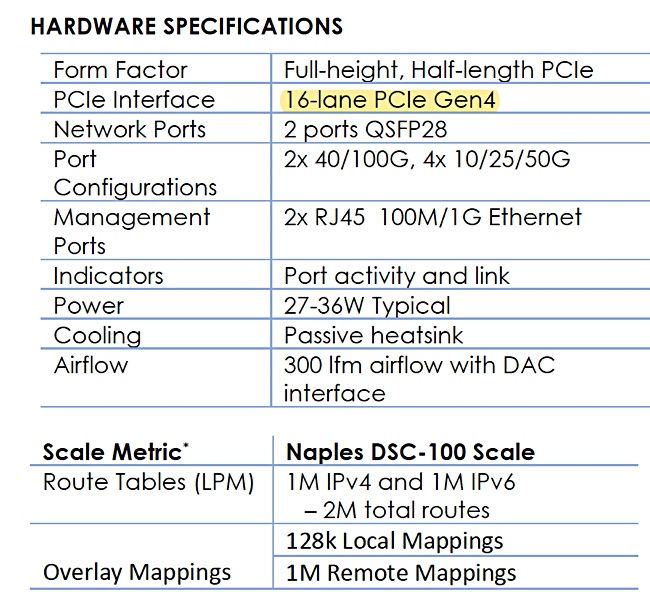

We emailed Pensando some questions to find out more about its Naples DSC (Distributed Services Card) and its replies show that this is in essence a network interface card that also performs some storage-related operations.

Blocks & Files: Does the DSC card fit between the host server and existing storage and networking resources?

Pensando: It’s a PCI device, so it’s actually in the server.

Blocks & Files: How many cards are supported by a host server?

Pensando: It depends on the server. Servers can typically support multiple PCIe devices, but so far none of our customers has a defined use case that requires more than one DSC.

Blocks & Files: How does the card connect to a host server?

Pensando: We support PCIe (host mode) or bump-in-the-wire deployments.

Blocks & Files: Which host server environments are supported?

Pensando: The DSC has a standard PCIe connector, so it can technically be supported in any physical server running bare metal, virtualized or containerised workloads. For example: Linux, ESXi, KVM, BSD, Windows, etc.

Blocks & Files: How is the DSC card programmed?

Pensando: The card is highly customisable. It has fully programmable management and control planes as well as a fully programmable data pipeline. The management and control planes support gRPC/REST APIs, whereas the data plane can be customised using the P4 programming language.

Blocks & Files: How does Pensando’s technology provide cloud service?

Pensando: There are two components in Pensando Technology to help provide cloud service:

a) Datapath is delivered via P4, which allows cloud providers to own both the business function and the custom datapath. P4 offers the processing speeds of an ASIC, with flexibility beyond what an FPGA can offer, within a very small power envelope. P4 code is written like a high level language and can be iterated upon much faster than FPGA code.

At Pensando we have been able to implement new features in a matter of days, which would have required months if implemented in hardware. The P4 language syntax is similar to C, so it’s much more likely that typos and logic errors are caught by peer review. Another advantage of P4 is that statistics, instrumentation, and in-band telemetry are all software defined, just like any other feature.

b) Software Plane that runs on ARM cores (on the same chip) delivers gRPC APIs that implement various cloud features for networking and storage. This layer leverages Pensando’s P4 code and implements APIs to enable well understood industry standard cloud functions such as SDN, VPCs, Multi-tenancy, Routing, Security Groups, Network Load Balancing, NVMe over Fabrics, Encryption for Network/Data, NAT, VPN, RDMA, RoCEv2, etc.

The flexibility of the platform allows cloud vendors to change/iterate on the functions as their customer offering changes.
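To make the match-action idea behind a P4 datapath more concrete, here is a minimal Python sketch. It is not P4 and it is not Pensando's code: the `MatchActionTable` class, the packet fields and the `drop`/`set_port` actions are all hypothetical, chosen only to illustrate how forwarding behaviour and per-rule statistics can both be defined in software.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

# Hypothetical packet representation: only the header fields this sketch uses.
Packet = Dict[str, object]
Action = Callable[[Packet], Packet]


def drop(pkt: Packet) -> Packet:
    """Return a copy of the packet marked as dropped."""
    return {**pkt, "dropped": True}


def set_port(port: int) -> Action:
    """Build an action that forwards the packet out of the given port."""
    def _act(pkt: Packet) -> Packet:
        return {**pkt, "out_port": port}
    return _act


@dataclass
class MatchActionTable:
    """Exact-match table: key fields -> action, with a hit counter per entry."""
    key_fields: Tuple[str, ...]
    default_action: Action = drop
    entries: Dict[tuple, Action] = field(default_factory=dict)
    hits: Dict[tuple, int] = field(default_factory=dict)
    misses: int = 0

    def add_entry(self, key: tuple, action: Action) -> None:
        self.entries[key] = action
        self.hits[key] = 0

    def apply(self, pkt: Packet) -> Packet:
        # Build the lookup key from the configured header fields.
        key = tuple(pkt.get(f) for f in self.key_fields)
        action = self.entries.get(key)
        if action is None:
            self.misses += 1
            return self.default_action(pkt)
        self.hits[key] += 1
        return action(pkt)


table = MatchActionTable(key_fields=("dst_ip",))
table.add_entry(("10.0.0.2",), set_port(1))

forwarded = table.apply({"dst_ip": "10.0.0.2"})
unknown = table.apply({"dst_ip": "10.0.0.9"})
print(forwarded, unknown, table.hits, table.misses)
```

Adding a feature here means adding a table entry or a new action function, and the counters are ordinary fields updated alongside the lookup, which loosely mirrors the point that statistics and telemetry are defined in software like any other feature.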

Blocks & Files: Is Pensando a bump in the wire to existing network and storage resources? If it is, which of each are supported?

Pensando: The Pensando DSC can be used as a bump-in-the-wire or be used directly as a PCIe device. The bump-in-the-wire mode can be used for network functions quite easily; however, to leverage RDMA, SR-IOV or NVMe, the Pensando DSC should be used as a PCIe device. The bump-in-the-wire mode can also be used selectively, e.g. NVMe drivers on the host can use the card for storage while networking functions run as bump-in-the-wire.

The main attraction of using the Pensando DSC as a bump-in-the-wire is that it enables use of Pensando technology without any driver installation. Using bump-in-the-wire may preclude the use of functions such as RDMA and SR-IOV, which require a Pensando driver to be installed on the OS/hypervisor. Note, however, that NVMe drivers are standardised, so NVMe doesn’t require any driver installation to work over PCIe.
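The trade-off between the two deployment modes can be summarised in a short Python sketch. This is only an illustration of the answers above, not a Pensando tool: the feature names, the three requirement labels and the `usable_features` function are assumptions made for this example.

```python
from typing import Set

# Host-side requirement for each feature, as described in the interview.
# The feature names and requirement labels are this sketch's own.
HOST_REQUIREMENT = {
    "network-functions": "none",   # usable as bump-in-the-wire, no driver
    "nvme": "standard-driver",     # standard NVMe driver, needs PCIe attachment
    "rdma": "pensando-driver",     # needs a Pensando driver on the OS/hypervisor
    "sr-iov": "pensando-driver",
}


def usable_features(pcie_attached: bool, pensando_driver: bool) -> Set[str]:
    """Return the features usable for a given host configuration."""
    usable = set()
    for feature, need in HOST_REQUIREMENT.items():
        if need == "none":
            usable.add(feature)
        elif need == "standard-driver" and pcie_attached:
            usable.add(feature)
        elif need == "pensando-driver" and pcie_attached and pensando_driver:
            usable.add(feature)
    return usable


# Pure bump-in-the-wire: nothing installed on the host.
print(sorted(usable_features(pcie_attached=False, pensando_driver=False)))
# Mixed use: NVMe over PCIe with the standard driver, networking as a bump.
print(sorted(usable_features(pcie_attached=True, pensando_driver=False)))
# Full PCIe host mode with the Pensando driver installed.
print(sorted(usable_features(pcie_attached=True, pensando_driver=True)))
```

The middle case corresponds to the selective use Pensando describes, where storage goes through the card as a PCIe device while networking functions stay in bump-in-the-wire mode.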

Software definitions

It is not surprising that Pensando has taken a software-defined network interface card approach; its founders are a hotshot ex-Cisco engineering team.

A Naples DSC product brief document states: “Just as cloud data centers have adopted a ‘scale out’ approach for compute and storage systems, so too the networking and security elements should be implemented as a Scale-out Services Architecture.

“The ideal place to instantiate these services is the server edge (the border between the server and the network) where services such as overlay/underlay tunneling, security group enforcement and encryption termination can be delivered in a scalable manner.

“In fact, each server edge is tightly coupled to a single server and needs to be aware only of the policies related to that server and its users. It naturally scales, as more DSC services capabilities come with each new server that is added.”