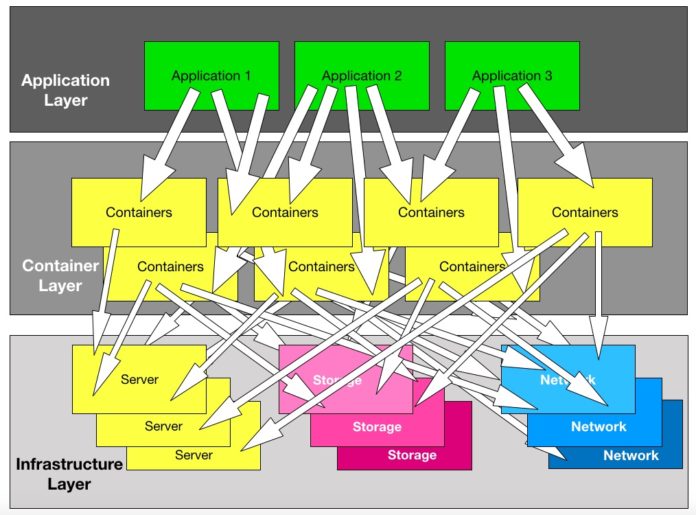

If you want to back up data used by containerised applications, where do you start?

As Chris Evans, a storage consultant, argued in a recent Blocks & Files interview, the lack of backup reference frameworks is a serious problem when protecting production-class containerised systems.

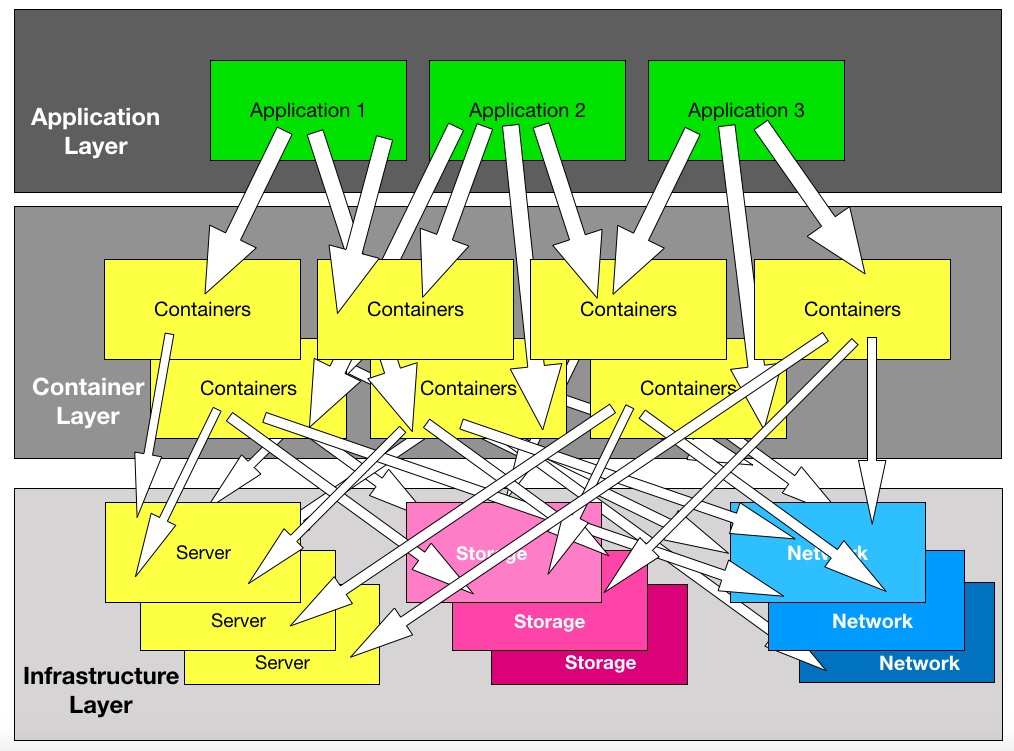

So what are the options? You could:

- Back up data at the infrastructure layer, looking at the storage arrays or storage construct in hyperconverged systems.

- Back up each container that uses persistent data on the infrastructure’s storage resources.

- Focus on an application, which is built from containers that use the storage resources in the underlying infrastructure.

According to Kasten, a California storage startup, the focus should be on the application, as applications use sets of containers which, in turn, use the underlying server, storage and networking resources.

It is difficult to isolate individual application container data from myriad others in a storage array backup that focuses on logical volumes and/or files. An application focus avoids these problems, Kasten said.

If you back up and restore at the container level, you recover at the container level. Restoring one container’s data without awareness of the state of the other containers at that time risks inconsistency. In other words, separate containers could end up with different versions of what should be the same data.
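The inconsistency risk can be shown with a toy model. The sketch below is purely illustrative and is not Kasten's method: the `Backup` record, the container names `api` and `worker`, and the shared `order_count` value are all hypothetical. It compares restoring each container from its own newest backup with restoring the whole application from the newest point in time that every container shares.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backup:
    container: str
    taken_at: int      # logical timestamp of the backup (hypothetical)
    order_count: int   # the shared value as this container saw it


def restore_per_container(backups, container):
    """Restore the newest backup of one container, ignoring the others."""
    candidates = [b for b in backups if b.container == container]
    return max(candidates, key=lambda b: b.taken_at)


def restore_application(backups, containers):
    """Restore every container from the newest time point they all share."""
    times_per_container = [
        {b.taken_at for b in backups if b.container == c} for c in containers
    ]
    common = set.intersection(*times_per_container)
    if not common:
        raise ValueError("no common restore point")
    point = max(common)
    return {
        b.container: b
        for b in backups
        if b.taken_at == point and b.container in containers
    }


backups = [
    Backup("api", taken_at=1, order_count=100),
    Backup("worker", taken_at=1, order_count=100),
    Backup("api", taken_at=2, order_count=140),  # only "api" was backed up at t=2
]

# Container-level restore: each container gets its own newest copy.
api = restore_per_container(backups, "api")
worker = restore_per_container(backups, "worker")
per_container_consistent = api.order_count == worker.order_count

# Application-level restore: all containers come from the same point in time.
app = restore_application(backups, ["api", "worker"])
app_consistent = app["api"].order_count == app["worker"].order_count
```

In this toy case the container-level restore leaves `api` and `worker` disagreeing about the order count, while the application-level restore falls back to the last point at which both were captured together.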

Kasten

‘Kasten’ is German for ‘box’, which seems appropriate. The Silicon Valley company was founded in January 2017 by CEO Niraj Tolia and engineering VP Vaibhav Kamra, who worked together at Maginatics and EMC and have been friends since university. It raised $3m in a March 2017 seed round and $14m in an A-round in August this year. Kasten said it has enterprise customers but hasn’t revealed any names yet.

Kasten’s K10

Kasten’s software, called K10, provides backup and recovery for cloud-native applications and application migration.

It runs within, and integrates with, Kubernetes on any public or private cloud. The software uses the Kubernetes API to discover application stacks and underlying components, and perform lifecycle operations.
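As a rough idea of what application discovery could involve, the sketch below groups Kubernetes-style resource manifests into application stacks by label. It is an assumption-laden illustration, not a description of how K10 works: it uses plain dictionaries instead of live API calls, the sample resources are invented, and grouping by the `app.kubernetes.io/name` label (one of the labels Kubernetes recommends) is just one plausible convention.

```python
from collections import defaultdict

# A label recommended by Kubernetes for naming the application a resource
# belongs to. Using it as the grouping key is an assumption of this sketch.
APP_LABEL = "app.kubernetes.io/name"


def group_by_application(resources):
    """Group (kind, name) pairs by the application label on each resource."""
    apps = defaultdict(list)
    for res in resources:
        labels = res.get("metadata", {}).get("labels", {})
        app = labels.get(APP_LABEL)
        if app is not None:  # unlabelled resources are skipped
            apps[app].append((res["kind"], res["metadata"]["name"]))
    return dict(apps)


# Hypothetical resources, shaped like trimmed-down Kubernetes manifests.
resources = [
    {"kind": "Deployment",
     "metadata": {"name": "shop-web", "labels": {APP_LABEL: "shop"}}},
    {"kind": "PersistentVolumeClaim",
     "metadata": {"name": "shop-db-data", "labels": {APP_LABEL: "shop"}}},
    {"kind": "Secret",
     "metadata": {"name": "blog-creds", "labels": {APP_LABEL: "blog"}}},
    {"kind": "ConfigMap",
     "metadata": {"name": "unlabelled", "labels": {}}},
]

stacks = group_by_application(resources)
```

Here `stacks["shop"]` holds both the deployment and its persistent volume claim, which is the kind of grouping an application-centric backup needs before it can protect compute definitions and data together.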

K10 provides backup, restore points, policy-based automation and compliance monitoring, and supports scheduling and workflows. It supports the open source Kanister framework, available on GitHub, with workflows captured as Kubernetes Custom Resources (CRs). The framework has block, file and object storage building blocks.

It supports NetApp, AWS EBS, Dell EMC and Ceph storage, as well as the Container Storage Interface (CSI). K10 uses deduplication technology to reduce storage capacity needs and network transmission requirements.
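Kasten has not detailed its deduplication method, so the following is only a generic sketch of the idea, assuming fixed-size chunks identified by SHA-256 hash. The function names `dedup_store` and `rebuild`, and the tiny chunk size, are hypothetical choices for illustration. Each unique chunk is stored once, and a backup becomes a list of chunk hashes.

```python
import hashlib


def dedup_store(data: bytes, store: dict, chunk_size: int = 4) -> list:
    """Split data into fixed-size chunks and store each unique chunk once.

    Returns the "recipe": the ordered list of chunk hashes needed to
    rebuild the data.
    """
    recipe = []
    for i in range(0, len(data), chunk_size):
        chunk = data[i:i + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # keep only the first copy of a chunk
        recipe.append(digest)
    return recipe


def rebuild(recipe: list, store: dict) -> bytes:
    """Reassemble the original data from its recipe."""
    return b"".join(store[digest] for digest in recipe)


store = {}
first = dedup_store(b"AAAABBBBAAAA", store)   # "AAAA" repeats within this backup
second = dedup_store(b"AAAACCCC", store)      # "AAAA" repeats across backups

stored_bytes = sum(len(chunk) for chunk in store.values())
```

The two backups total 20 bytes of input, but only the three distinct chunks are kept. The same principle reduces network traffic, since a chunk whose hash is already known at the destination need not be sent again.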

Kasten claims, without giving any details, that K10 is up to ten times cheaper than unnamed legacy products and has up to 90 per cent faster mean time to recovery than using volume snapshots.

Migration

K10 has a migration capability that moves an entire application stack and its data in multi-cluster, multi-region and multi-cloud environments.

There are several reasons for doing this, Kasten said, such as disaster recovery, avoiding vendor lock-in, and sending data to test and dev and continuous integration environments.

Check out a K10 datasheet here. A free trial is available on the Kasten website.