In this article we explore how NetApp’s MAX Data uses server-based storage class memory to slay the data access latency dragon.

But first, a brief recap: MAX Data is server-side software, installed and run on existing or new application servers to accelerate applications. Data is fetched from a connected NetApp array and loaded into the server’s storage class memory.

Storage class memory (SCM), also called persistent memory (PMEM), is a byte-addressable, non-volatile medium that is faster than flash but slower than DRAM. An example is Intel’s Optane, built using 3D XPoint memory technology and available in SSD form (Optane DC P4800X) and in NVDIMM form (Optane DC Persistent Memory).

For comparison, average read latency is less than 100 nanoseconds for DRAM, 350 nanoseconds for the Optane NVDIMM, 10,000 nanoseconds for the Optane SSD and about 75,000 nanoseconds for a SAS SSD.
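To put these figures side by side, the short Python sketch that follows expresses each medium's latency as a multiple of the Optane NVDIMM figure. The numbers are the ones quoted above; because DRAM is only given as "less than 100 nanoseconds", the sketch uses 100 ns as an upper bound, which is our assumption rather than a measured value.

```python
# Average read latencies quoted in the article, in nanoseconds.
# DRAM is quoted as "less than 100 ns"; 100 ns is used here as an upper bound (assumption).
LATENCY_NS = {
    "DRAM": 100,
    "Optane NVDIMM": 350,
    "Optane SSD": 10_000,
    "SAS SSD": 75_000,
}


def slowdown_vs(baseline: str, latencies: dict[str, int]) -> dict[str, float]:
    """Return how many times slower (or faster, if < 1) each medium is than the baseline."""
    base = latencies[baseline]
    return {name: ns / base for name, ns in latencies.items()}


if __name__ == "__main__":
    ratios = slowdown_vs("Optane NVDIMM", LATENCY_NS)
    # Print fastest to slowest.
    for name, factor in sorted(ratios.items(), key=lambda kv: kv[1]):
        print(f"{name:14s} {factor:8.1f}x")
```

Run as a script, it shows the Optane SSD at roughly 28.6 times and the SAS SSD at roughly 214 times the NVDIMM's latency, which is the gap MAX Data is trying to exploit.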

Servers can use SCM, which is less expensive than DRAM, to bulk out their memory. IO-bound applications then run faster because fewer time-consuming storage array IOs are needed.
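One way to see why fewer array IOs matter is a simple weighted-average model: if a fraction of reads (the hit ratio) is served from the memory tier and the rest goes to the storage array, average latency is the hit ratio times the memory latency plus the miss ratio times the storage latency. The Python sketch that follows is a generic illustration of that model, not a NetApp formula; the function name and the choice of inputs are our own assumptions.

```python
def effective_latency_ns(hit_ratio: float, memory_ns: float, storage_ns: float) -> float:
    """Average read latency for a two-tier setup (generic model, assumption).

    hit_ratio  -- fraction of reads served from the memory tier (0..1)
    memory_ns  -- latency of a read served from the memory tier
    storage_ns -- latency of a read that must go to the storage array
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * memory_ns + (1.0 - hit_ratio) * storage_ns


if __name__ == "__main__":
    # 350 ns is the Optane NVDIMM figure; 75,000 ns (the SAS SSD figure) is used
    # purely as a stand-in for an array IO, which the article does not quantify.
    for h in (0.5, 0.9, 0.99):
        print(h, effective_latency_ns(h, 350, 75_000))
```

With those inputs, a 90% hit ratio gives 0.9 × 350 + 0.1 × 75,000 = 7,815 ns, and a 99% hit ratio brings the average down to about 1,097 ns: each IO avoided at the array pulls the average sharply towards memory speed.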

ScaleMP’s Memory ONE unifies a server’s DRAM and SCM into a single virtual memory tier available to every application in the server. NetApp’s MAX Data takes a different approach: only applications that use its file system benefit from the SCM.

We talked to Bharat Badrinath, VP, product and solutions marketing at NetApp, to find out more about MAX Data.

MAX Data memory tier

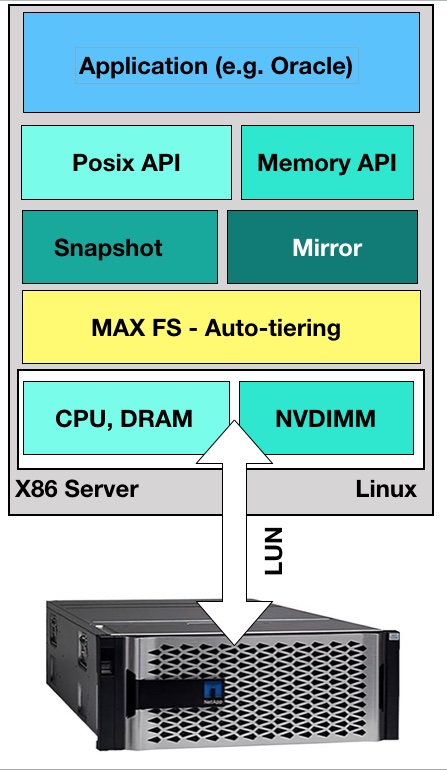

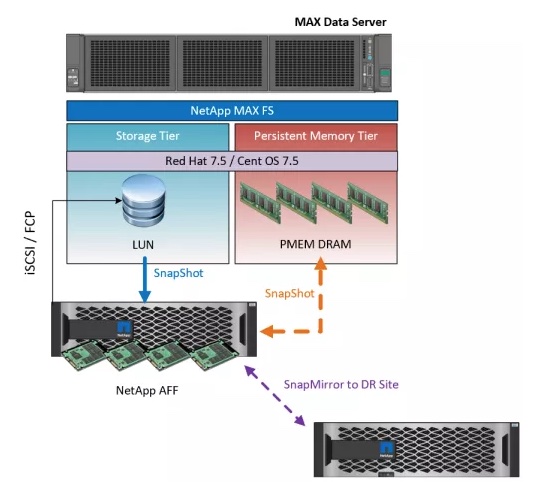

MAX Data supports a memory tier of DRAM, NVDIMMs and Optane DIMMs, backed by a storage tier of ONTAP 9.5 LUNs on a NetApp AFF array.

According to Badrinath, this setup “allows us to use a ratio of 1:25 between the memory tier and the storage tier so we can accelerate a large existing data set to memory speeds without requiring the full application fit into memory.”
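As a rough sizing aid, the Python sketch that follows reads the 1:25 figure as a maximum capacity ratio, so a memory tier can front a storage tier up to 25 times its size. That interpretation, and the helper names, are our assumptions; NetApp's own sizing guidance may differ.

```python
# "1:25" read as: storage tier capacity may be up to 25x the memory tier (assumption).
MEMORY_TO_STORAGE_RATIO = 25


def max_storage_tier_tb(memory_tier_tb: float, ratio: int = MEMORY_TO_STORAGE_RATIO) -> float:
    """Largest storage tier a given memory tier could front at the stated ratio."""
    return memory_tier_tb * ratio


def min_memory_tier_tb(storage_tier_tb: float, ratio: int = MEMORY_TO_STORAGE_RATIO) -> float:
    """Smallest memory tier needed to front a given storage tier at the stated ratio."""
    return storage_tier_tb / ratio


if __name__ == "__main__":
    print(max_storage_tier_tb(1.5))   # storage TB fronted by a 1.5 TB memory tier
    print(min_memory_tier_tb(50))     # memory TB needed for a 50 TB data set
```

Under this reading, a 1.5 TB memory tier fronts up to 37.5 TB, and a 50 TB data set needs at least 2 TB of memory tier, so at most 4% of the data sits in memory at any one time.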

NetApp can protect memory tier data with MAX Snap and MAX Recovery, which are aligned with ONTAP-based Snapshots, SnapMirror and the rest of the ONTAP data protection environment.

Server details

MAX Data runs on Linux today in bare-metal configurations: either a single- or dual-socket server with up to 128 vCPUs. Future MAX Data versions will support hypervisor configurations.

Any Intel x86 server can be used if DRAM forms the memory tier. If Optane DIMMs are used, the server CPU must support them, which for now means Cascade Lake AP processors.
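The server requirements above can be summarised as a checklist. The Python sketch that follows is a hypothetical pre-flight check written for illustration only: the `Server` fields and `check_max_data_support` function are our inventions, not part of any NetApp tool, and they encode only the constraints stated in this article.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical description of a candidate server (illustrative fields only)."""
    os: str                 # e.g. "Linux"
    bare_metal: bool        # False if running under a hypervisor
    intel_x86: bool         # Intel x86 CPU
    sockets: int
    vcpus: int
    cpu_family: str         # e.g. "Cascade Lake AP"
    memory_tier: set[str]   # e.g. {"DRAM"} or {"DRAM", "Optane DIMM"}


def check_max_data_support(s: Server) -> list[str]:
    """Return the stated requirements a server fails; an empty list means none."""
    problems = []
    if s.os.lower() != "linux":
        problems.append("MAX Data runs on Linux only")
    if not s.bare_metal:
        problems.append("hypervisor configurations are not yet supported")
    if not s.intel_x86:
        problems.append("an Intel x86 server is required")
    if s.sockets not in (1, 2):
        problems.append("server must be single- or dual-socket")
    if s.vcpus > 128:
        problems.append("at most 128 vCPUs are supported")
    if "Optane DIMM" in s.memory_tier and not s.cpu_family.lower().startswith("cascade lake ap"):
        problems.append("Optane DIMMs require a supporting CPU (Cascade Lake AP for now)")
    return problems
```

A dual-socket, bare-metal Cascade Lake AP server with DRAM and Optane DIMMs passes every check, while the same box run as a virtual machine would be flagged for the hypervisor restriction.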

MAX Data filesystem

MAX Data provides a file system, MAX FS, that spans the PMEM and the storage tier. Here the storage tier is an external NetApp array connected to the server via NVMe over Fabrics.

Applications that access this file system get near-instant reads and writes, with single-digit microsecond latency, as long as the data is in the memory tier.
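The "as long as the data is in the memory tier" condition is the classic behaviour of a small fast tier in front of a large slow one. The Python toy model that follows illustrates it; NetApp does not describe MAX FS's data placement policy here, so the least-recently-used (LRU) eviction, the block granularity and the class name are all assumptions for illustration.

```python
from collections import OrderedDict
from typing import Callable


class TwoTierStore:
    """Toy model of a memory tier in front of a storage tier.

    LRU eviction is an assumption for illustration, not MAX FS's documented policy.
    """

    def __init__(self, capacity_blocks: int, fetch: Callable[[int], bytes]):
        if capacity_blocks < 1:
            raise ValueError("capacity_blocks must be at least 1")
        self.capacity = capacity_blocks
        self.fetch = fetch                      # reads one block from the storage tier
        self.memory: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, block: int) -> bytes:
        if block in self.memory:
            # Fast path: block is resident in the memory tier.
            self.hits += 1
            self.memory.move_to_end(block)      # mark as most recently used
            return self.memory[block]
        # Slow path: go to the storage array, then keep a copy in memory.
        self.misses += 1
        data = self.fetch(block)
        self.memory[block] = data
        if len(self.memory) > self.capacity:
            self.memory.popitem(last=False)     # evict the least recently used block
        return data
```

With room for two blocks, the access sequence 1, 2, 1, 3, 2 yields one hit and four misses: block 2 is evicted to make room for block 3, so the final read of block 2 goes back to the array.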

MAX Data supports POSIX API integration as well as a memory API, so applications can use either block/file system semantics or memory semantics.

Applications that use the POSIX interface can run on MAX Data unmodified.
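The difference between the two access styles can be shown with standard Python calls. In the sketch that follows, the first function uses ordinary POSIX-style file calls, which is why an unmodified application needs no changes on a POSIX-compliant file system; the second maps the file with `mmap` and updates bytes in place, which is what memory semantics look like to a program. The functions are our own illustration and run on any Unix file system; whether MAX FS maps files directly onto PMEM is an assumption not confirmed here, and NetApp's own memory API is not shown.

```python
import mmap
import os


def posix_write_then_read(path: str, payload: bytes) -> bytes:
    """File semantics: plain POSIX-style calls that work unchanged on any POSIX file system."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        view = memoryview(payload)
        while view:                      # os.write may write fewer bytes than asked
            written = os.write(fd, view)
            view = view[written:]
        os.fsync(fd)                     # make the write durable
        return os.pread(fd, len(payload), 0)
    finally:
        os.close(fd)


def memory_update(path: str, offset: int, data: bytes) -> bytes:
    """Memory semantics: map the file and modify bytes in place with slice assignment."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0) as mm:   # length 0 maps the whole (non-empty) file
            if offset < 0 or offset + len(data) > len(mm):
                raise ValueError("update must fall inside the existing file")
            mm[offset:offset + len(data)] = data
            mm.flush()                         # push changes back to the file
            return mm[:]
```

For example, writing `b"hello world"` with the first function and then calling `memory_update(path, 0, b"HELLO")` leaves the file containing `b"HELLO world"`.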

Inside and outside

Server-side applications that do not access the MAX Data file system will neither see nor benefit from the PMEM that MAX Data owns in the server.

There is also no PMEM inside NetApp’s arrays with this scheme. We think the company will add PMEM to its arrays in due course, decreasing their data access latency. HPE has done this with its 3PAR arrays, and Dell EMC has built it into its 2019 plans for PowerMax arrays.

Note that MAX Data will support any Optane DIMM capacity available on the market.