GRAID has incrementally improved its NVMe RAID Card’s software, adding data corruption and correction checks. v1.2.2 of its SupremeRAID storage product enhances its RAID 6 erasure coding (EC) N+2 data consistency check capability with the ability to correct customer data when data corruption is detected for customers deploying SupremeRAID in a RAID 6 configuration. The new 1.2.2 feature set is available on both SupremeRAID SR-1000 for PCIe 3 and SupremeRAID SR-1010 for PCIe 4 servers and will be available worldwide on July 11, 2022.

…

HPE announced that Catharina Hospital, the largest hospital in the Netherlands’ Eindhoven region, providing care for over 150,000 patients, has selected the HPE Ezmeral software portfolio to build a cloud-native analytics and data lakehouse to provide more accurate diagnosis, early detection, and injury prevention for patients. Thomas Mast from the cardiology department at Catharina Hospital said: “The new data lakehouse that we’ve created using HPE Ezmeral will enable us to help accelerate model training and detect cardiogram anomalies among the 500,000 electrocardiograms (ECGs) already available for data analysis with higher precision and to identify the correct diagnosis and treatment.”

…

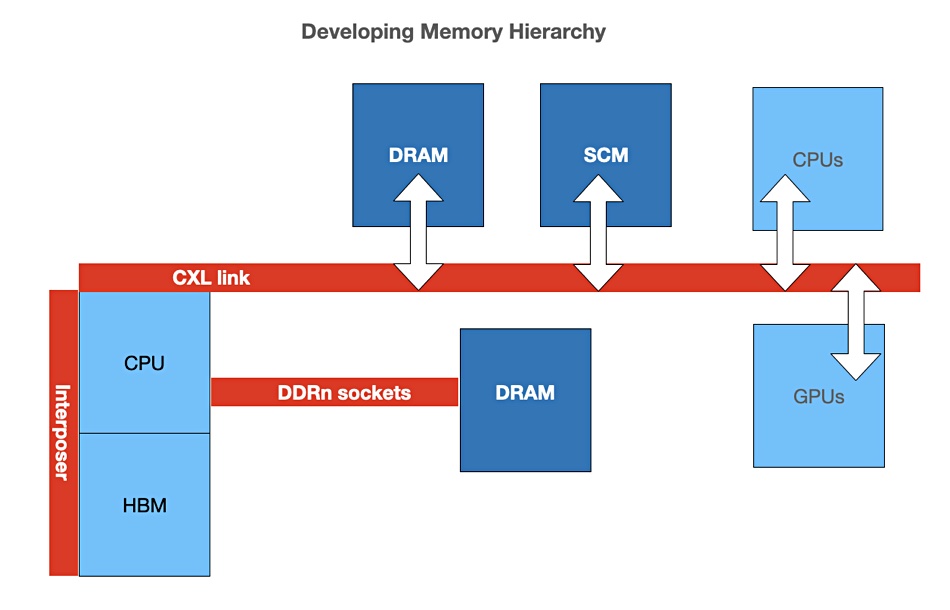

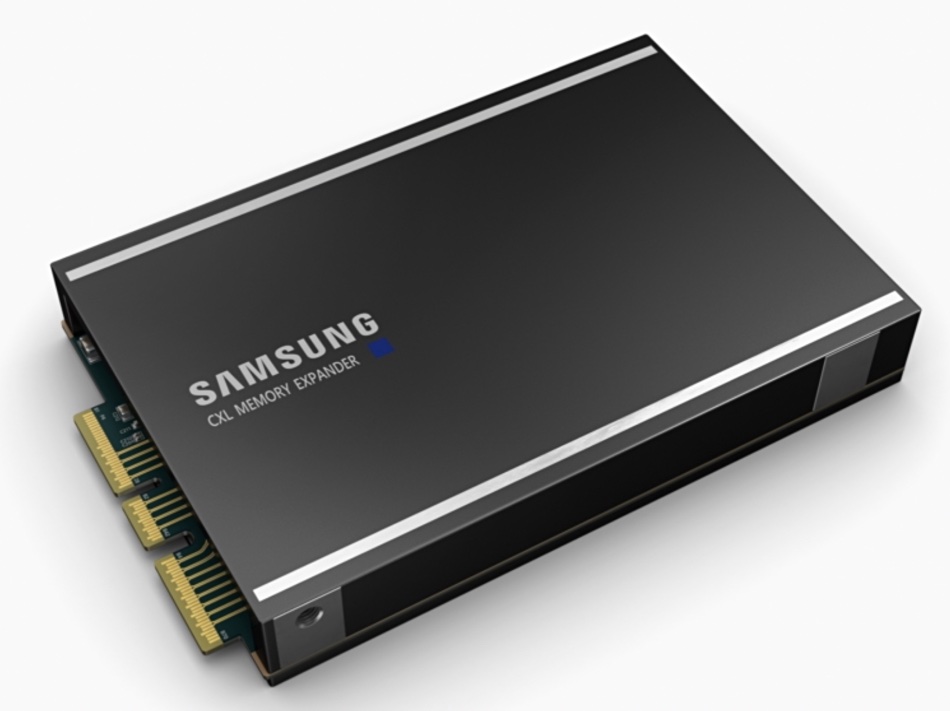

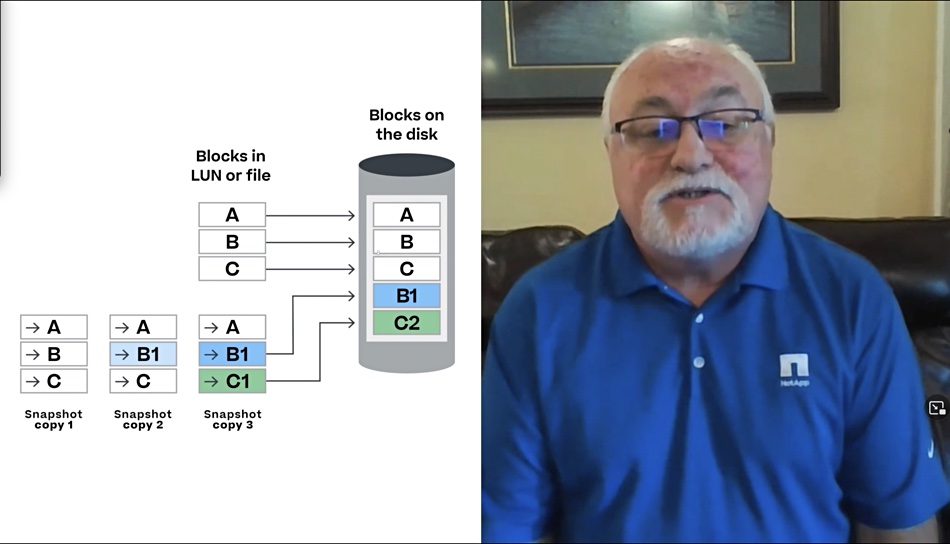

MemVerge Memory Machine software has achieved Red Hat OpenShift Operator and container certifications and is now listed in the Red Hat Ecosystem Catalog. This means cloud builders can deploy Memory Machine software with the confidence that it has completed rigorous certification testing with Red Hat Enterprise Linux and OpenShift. Memory Machine software lowers the cost of memory infrastructure by transparently delivering a pool of software-defined DRAM and lower-cost persistent memory. It provides higher availability to cloud-native OpenShift applications by providing ZeroIO In-Memory snapshots, replication, and instant recovery for terabytes of data.

…

Micron has announced commercial and industrial channel partner availability of its DDR5 server DRAM, with modules up to 64GB, in support of industry qualification of next-generation Intel and AMD DDR5 server and workstation platforms. The move to DDR5 memory enables up to an 85 percent increase in system performance over DDR4 DRAM. DDR5-enabled servers are being evaluated and tested in datacenter environments and are expected to be adopted at an increasing rate throughout the remainder of 2022. The introductory data rate for DDR5 is 4800MT/s but is anticipated to increase to meet future datacenter workload demands.

…

Peer Software has a strategic alliance with Pulsar Security and will use its team of cyber security experts to continuously monitor and analyze emerging and evolving ransomware and malware attack patterns on unstructured data. The PeerGFS distributed file system will use these attack patterns to enhance its Malicious Event Detection (MED) feature and enable an additional layer of cyber security detection and response. Pulsar Security will work with Peer Software to educate and inform enterprise customers on emerging trends in cyber security, and how to harden their systems against attacks through additional services like penetration testing, vulnerability assessments, dark web assessments, phishing simulations, red teaming, and wireless intrusion prevention.

…

NAND and DRAM chip maker Samsung has reported strong second quarter revenues and profits but smartphone memory demand was down, echoing part of Micron’s recent experience, which was also affected by a downturn in PC storage and memory demand. Reuters reported Samsung revenues rose 21 percent to ₩77 trillion, in line with estimates. It posted an operating profit of ₩14 trillion ($10.7 billion), up 11 percent from ₩12.57 trillion a year earlier. According to Counterpoint Research , estimated smartphone shipments by Samsung’s mobile business in the second quarter were about 62-64 million, about 5-8 percent lower than a March estimate.

…

Replicator WANdisco is constantly trumpeting its sales successes. Here’s another: a top five Canadian bank has made a three-year, $1.1 million contract to migrate its Hadoop workloads from an on-premises Cloudera cluster to the Google Cloud Platform (GCP). The initial migration will be of 5 petabytes of data with room for future expansion. This is WANdisco’s first Google Cloud win in North America and represents another contract win in the global financial services market.