CXL 2.0 could create external memory arrays in much the same way that Fibre Channel paved the way for external SAN arrays back in the mid-1990s.

The ability to dynamically compose servers with 10TB-plus memory pools will enable many more applications to run in memory and avoid external storage IO. Storage-class memory becomes the primary active data storage tier, with NAND and HDD being used for warm and inactive data, and tape for cold data.

That’s the view of Charles Fan, MemVerge CEO and co-founder, who spoke to Blocks & Files about how the CXL market is developing a year on from his initial briefing on the topic.

He says: “For people like us in the field, this is a major architectural shift. Maybe the biggest one for the last 10 years in this area. This could bring about a new industry, a new market of a memory fabric that can be shared across multiple servers.”

CXL is the Compute Express Link, the extension of the PCIe bus outside a server’s chassis, and is based on the PCIe 5.0 standard. CXL v1, released in March 2019, enables server CPUs to access shared memory on accelerator devices with a cache-coherent protocol.

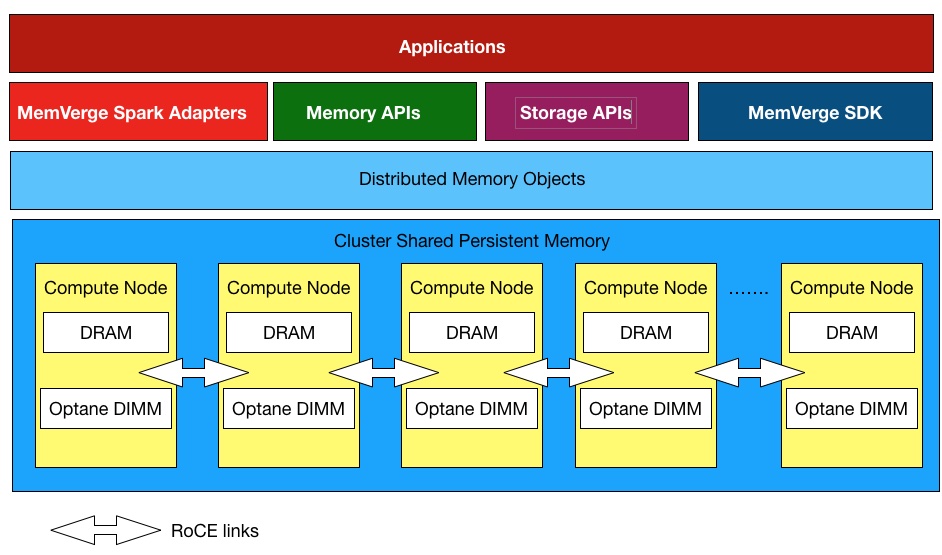

MemVerge software combines DRAM and Optane DIMM persistent memory into a single clustered storage pool for use by server applications with no code changes. In other words, the software already combines fast and slow memory.

CXL v1.1, which sets out how interoperability testing between the host processor and an attached CXL device can be performed, is supported by Intel’s Sapphire Rapids and AMD’s Genoa processors. CXL v2.0 adds support for CXL switching through which multiple CXL 2.0-connected host processors can use distributed shared memory and persistent (storage-class) memory.

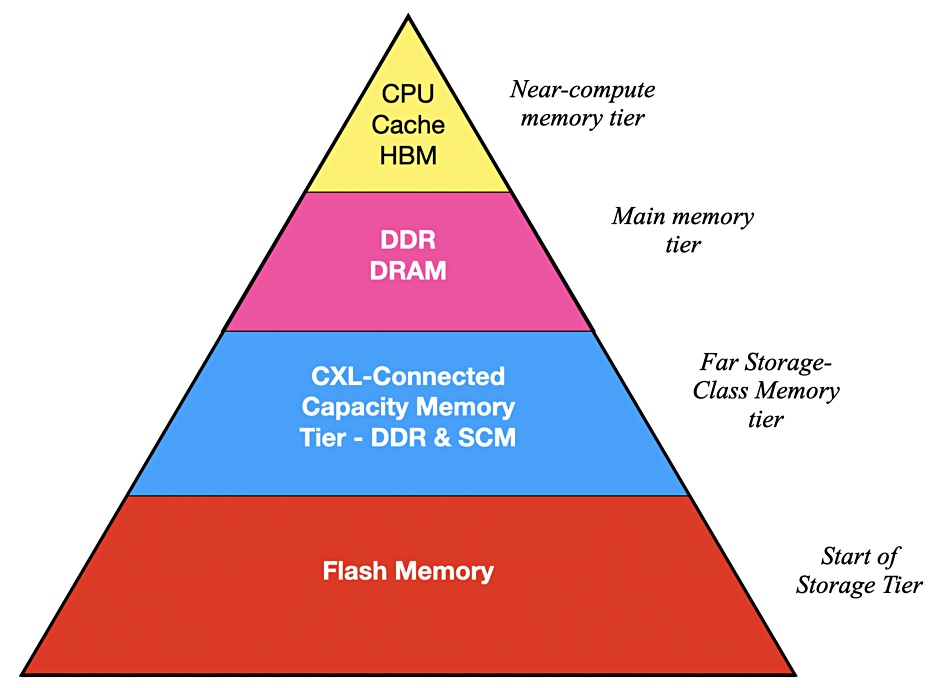

A CXL 2.0 host will have its own directly connected DRAM and the ability to access external DRAM across the CXL 2.0 link. Such external DRAM access will be slower, by nanoseconds, than the local DRAM access, and system software will be needed to bridge this gap. (System software which MemVerge supplies, incidentally.) Fan says he thinks the availability of CXL 2.0 switches and external memory boxes could first appear as early as 2024. We’ll see prototypes much earlier, though.

MemVerge is partnering with composable systems supplier Liqid so that MemVerge-created DRAM and Optane memory pools can be dynamically assigned in whole or part to servers across today’s PCIe 3 and 4 buses. CXL 2.0 should bring in external memory pooling and make that memory dynamically available to servers, which is exactly what composability software does.

Fan says: “With CXL, memory can become composable as well. And I think that’s highly synergistic to the cloud servicing model. And so they will have it and I think they will be among the first adopters of this technology.”

Blocks & Files’ thinking is that the hyperscalers, including public cloud suppliers, are utterly dependent on CXL for memory pooling. And they’ve not got pre-existing technology that they can use to supply external pooled memory resources. So they either build it themselves, or look for suitable suppliers, of which there are very, very few. And here is MemVerge with what looks like ready-to-use software.

For Fan, CXL 2.0 “is the best development in the macro industry for us in our short life of five years.”

His company will be helped by the rise of a CXL 2.0 ecosystem of switch, expander, memory card, and device suppliers. MemVerge’s software can already run in the public cloud. SeekGene, a biotech research firm focusing on single-cell technology, has significantly reduced processing time and cost by using MemVerge Memory Machine running on AliCloud i4p compute instances.

Fan says: “AliCloud was the first cloud service provider to deliver an Optane-enabled instance to their customers, and then our joint service lays on top of that, to allow encapsulation of the application, and use of our snapshot technology to allow rollback recovery.”

MemVerge will make its basic big memory software available in open source form to widen its adoption, and supply paid-for extensions such as snapshot and, possibly, checkpoint services.

External memory pooling example

Imagine a rack of 20 servers today, each with 2TB of memory. That’s 20 x 2TB memory chunks, 40TB, with any application limited to 2TB of memory. MemVerge’s software could be used to bulk up the memory address space in any one server to 3TB or so but each server’s DRAM slots are limited in number and once they are used up no more are available. CXL 2.0 removes that limitation.

Let’s now reimagine our rack of 20 servers, with each of them having, for example, 512GB of memory, and the rack housing a CXL 2.0-connected memory expander chassis with 30TB of DRAM. We still have roughly the same total amount of DRAM as before, about 40TB, but it is now distributed differently, with 20 x 512GB chunks, one for each server, and a 30TB shareable pool.

An in-memory application could consume up to 30.5TB of DRAM, about 15 times the previous 2TB per-server limit (or roughly 10 times the 3TB or so reachable with MemVerge’s software), radically increasing the working set of data it can address and reducing its storage IO. Alternatively, we could have three in-memory applications, each taking 10TB of the 30TB memory pool. Such applications should execute significantly faster.
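The rack arithmetic above can be checked with a short sketch. The `Rack` class and its field names are illustrative assumptions, not anything from MemVerge or the CXL specification, and the figures use decimal units (1TB = 1,000GB), which is why the pooled total comes out slightly above 40TB.

```python
from dataclasses import dataclass

GB_PER_TB = 1000  # decimal units, an assumption made for this illustration


@dataclass
class Rack:
    """Hypothetical model of a rack: N servers plus an optional shared pool."""
    servers: int
    local_gb: int      # DRAM installed inside each server
    pool_gb: int = 0   # CXL-attached DRAM shared by the whole rack

    def total_gb(self) -> int:
        # All DRAM in the rack, local and pooled
        return self.servers * self.local_gb + self.pool_gb

    def max_app_gb(self) -> int:
        # An application runs on one server: its local DRAM plus,
        # at most, the entire shared pool
        return self.local_gb + self.pool_gb


today = Rack(servers=20, local_gb=2 * GB_PER_TB)
pooled = Rack(servers=20, local_gb=512, pool_gb=30 * GB_PER_TB)

print(today.total_gb() / GB_PER_TB)               # 40.0 TB in the rack today
print(pooled.total_gb() / GB_PER_TB)              # 40.24 TB with the pool
print(pooled.max_app_gb() / GB_PER_TB)            # 30.512 TB for one application
print(pooled.max_app_gb() / today.max_app_gb())   # 15.256x the 2TB ceiling
```

Measured against the 2TB per-server ceiling, the single-application limit rises about 15-fold; measured against the 3TB or so mentioned above, it is closer to 10-fold.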

Fan says: “It lifts the upper limit, the ceiling, that you have to the application in terms of how much memory you can use, and you can dynamically provision it on demand. So that’s what I think is transformative.”

And it’s not just servers that could use this. In Fan’s view: “GPUs could also use a more scalable tier of memory.”

Freshly created DRAM content will still have to be persisted, and writing 30TB of data to NAND will take appreciable time, but Optane or similar storage-class memory, such as ReRAM, could be used instead with much faster IO. The most active data will then be stored in SCM devices, with less active data going first to NAND and then disk and finally tape as it ages and its activity profile gets lower and lower.
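The aging path described here, from SCM to NAND to disk to tape, amounts to a simple placement policy. The sketch below is one way to express it; the day thresholds and the `tier_for` function are hypothetical, chosen only to illustrate the idea, and do not come from Fan or from any product.

```python
from enum import Enum


class Tier(Enum):
    SCM = "storage-class memory"
    NAND = "NAND flash"
    HDD = "disk"
    TAPE = "tape"


# Hypothetical cut-offs in days since last access; real policies would
# be tuned per workload and are not specified in the article.
THRESHOLDS = [
    (7, Tier.SCM),    # most active data
    (90, Tier.NAND),  # warm data
    (365, Tier.HDD),  # inactive data
]


def tier_for(days_since_access: float) -> Tier:
    """Return the tier for data last touched `days_since_access` days ago."""
    for limit, tier in THRESHOLDS:
        if days_since_access < limit:
            return tier
    return Tier.TAPE  # anything older than the last cut-off is cold


for age in (1, 30, 200, 1000):
    print(age, tier_for(age).value)
```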

Such CXL-connected SCM could be in the same or a separate chassis and be dynamically composable as well. We could envisage hyperscalers using such systems of tiered external DRAM and Optane to get their services running faster and capable of supporting more users with higher utilization.

Application design could change as well. Fan adds: “The application general logic is to use as much memory as you have. And storage is only used if you don’t have enough memory. With other data-intensive application, it will be moving the same way, including the database. I think the memory database is a general trend.

“For many of the ISPs I think having the infrastructure delivering a more limitless memory will impact their application design – for it to be more memory-centric. Which in turn reduces their reliance on storage.”

CXL 2.0, hyperscalers, and the public cloud

The public cloud suppliers could set up additional compute instance types with significantly higher memory capacity, and SCM capacity as well. Their high customer counts and scale would enable them to amortize the costs of buying the DRAM and SCM more effectively than ordinary enterprises, and get more utilization from their servers.

Fan thinks that current block-level storage device suppliers may start producing external memory and SCM devices, and so too, B&F thinks, could server manufacturers. After all, they already ship DRAM and SCM in their current server boxes. Converged Infrastructure systems could start having CXL-memory shelves and software added to them.

Fan is convinced that we are entering a big memory computing era, and that the impact of CXL 2.0 will be as profound as that of Fibre Channel in the mid-1990s. In the SAN era, Fan says: “Storage can be managed and scaled independently to compute.”

Now the same could be true of memory. We are moving from the age of the SAN to an era of big memory and things may never be the same again.