Dominant private/public cloud abstraction layers could exclude independent vendors from suppliers’ customer bases.

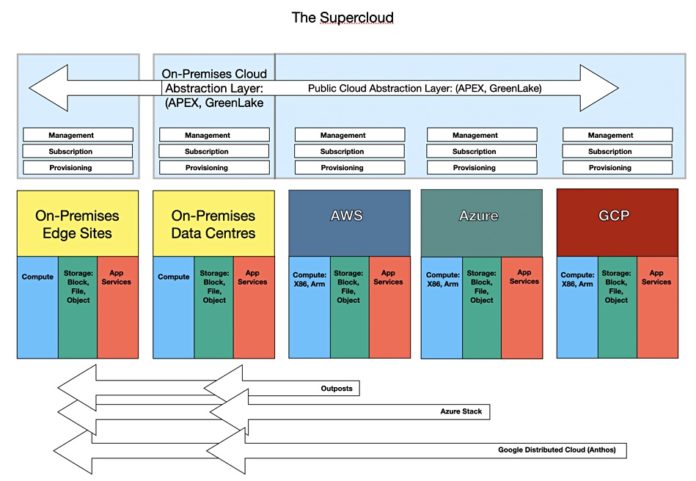

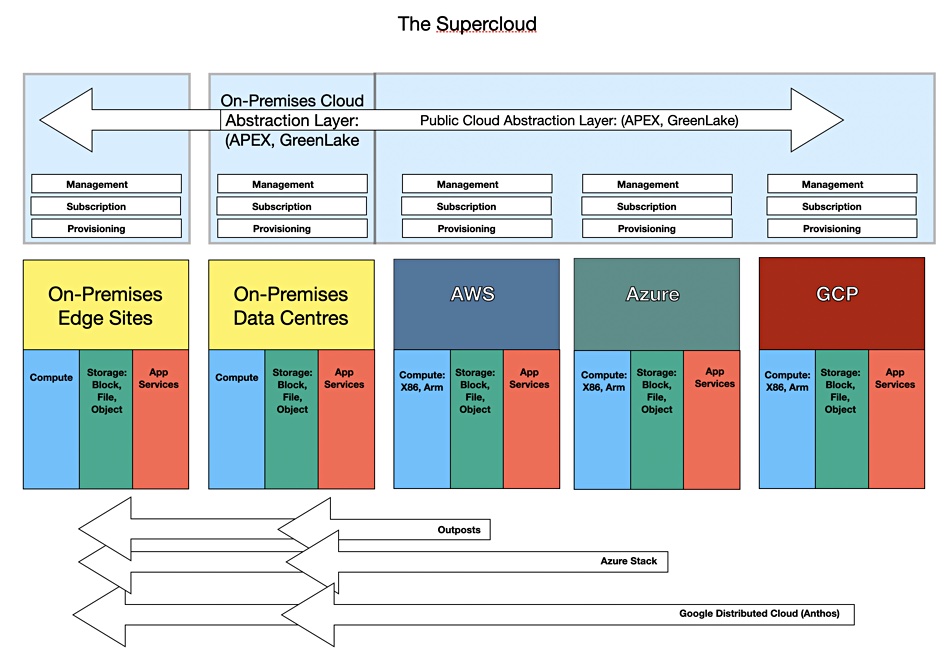

Dell and HPE are erecting public cloud-like abstraction layers over their on-premises hardware and software systems, transforming them into subscription-based services with a public cloud provisioning and consumption model. But APEX (Dell) and GreenLake (HPE) are clearly extensible to cover the three main public clouds as well – AWS, Azure, Google – forming an all-in-one cloud management, subscription, and provisioning service, providing one throat to choke across numerous cloud environments.

Research consultancy Wikibon suggests that the idea is evolving into a “supercloud” concept. An online research note says: “Early IaaS really was about getting out of the datacenter infrastructure management business – call that cloud 1.0 – and then 2.0 was really about changing the operating model and now we’re seeing that operating model spill into on-prem workloads. We’re talking here about initiatives like HPE’s GreenLake and Dell’s APEX.”

Analyst John Furrier suggests: “Basically this is HPE and Dell’s Outposts. In a way, what HPE and Dell are doing is what Outposts should be. We find that interesting because Outposts was a wake up call in 2018 and a shot across the bow at the legacy players. And they initially responded with flexible financial schemes but finally we’re seeing real platforms emerge.”

Wikibon says: “This is setting up Cloud 3.0 or Supercloud as we like to call it. An abstraction layer above the clouds that serves as a unifying experience across the continuum of on-prem, cross clouds and out to the near and far edge.”

We think that the impetus for this is coming from the two main on-premises system vendors: Dell and HPE. They have pretty complete hardware and software stacks on which they run legacy, virtual machine (VM), and containerized apps. Because of the efforts made by VMware and Nutanix, VMs can run on-premises (private cloud) and in the public cloud. With Kubernetes adopted as a standard, containerized apps can run in both the private and public cloud environments as well. Given the right public cloud APIs, GreenLake and APEX can transfer apps between these environments and provision the compute, storage, and networking resources needed. Transferring data is not so easy because of data gravity, which we’ll look at in a moment.

It is in Dell and HPE’s interests to support a hybrid and multi-cloud environment, as their enterprise customers are adopting this model. Both Dell and HPE can retain relevance by supporting that. They do that initially by replicating the public cloud operating model for their on-premises (now private cloud) products – Cloud 2.0 – and then by facilitating bursting or lift-and-shift to the public clouds. Their safeguard here is to campaign loudly against the lock-in attempts of each of the big three cloud service providers (CSPs), pointing out the cost disadvantages of being locked in to a single CSP.

They can also help manage unstructured data better by providing global file and object namespaces across the four environments: private cloud, AWS, Azure, and Google.

This is entering into Hammerspace territory and leads us straight into the independent storage and data services vendor problem. Does that vendor compete with Dell and HPE or partner with them?

A consideration for Dell and HPE is that they own the assets they supply to customers, and those assets are covered by service level agreements (SLAs). They will not want to supply partner products unless these fit in fully with the APEX and GreenLake SLAs and can be maintained and supported appropriately.

Considerations like this will encourage these two prime vendors to have restricted partnership deals. They also brand their services as APEX for this or GreenLake for that, and prior product brands – such as HPE Alletra 9000 – are de-emphasized. Thus we have GreenLake for Block Storage. If GreenLake supplied a WEKA-sourced high-speed file service, it would not necessarily be branded GreenLake for WEKA but GreenLake for High-Speed File.

If HPE did such a deal with WEKA then it would most likely preclude HPE doing similar deals with DDN, IBM (Spectrum Scale), Panasas, Qumulo, and ThinkParQ (BeeGFS). Customers adopting APEX and GreenLake-style private clouds could see a much-reduced choice of IT system software components.

In other words, independent storage and storage application suppliers could find themselves excluded from selling to Dell’s APEX and HPE’s GreenLake customers, unless the customers have a pressing need for products not included in the APEX or GreenLake catalogs.

This is going to present an interesting strategic and marketing challenge to independent vendors.

Data gravity

It’s a truism that large datasets take too long to transfer to or from the public cloud, so compute needs to come to the data and not the other way around. Arguably, the only reason data gravity exists is that networking is too slow. A 10TB dataset had gravity when 10Gbit networking was the norm. Assume that, after overheads, 10Gbit/sec equals 1GB/sec; it would then take 10,000 seconds to move a 10TB dataset, which is 166.7 minutes, or just over 2 hours 46 minutes.

Now we have 100Gbit networking. At 10GB/sec the transfer will take 1,000 seconds, or 16 minutes and 40 seconds. Now let’s amp up the speed to 400Gbit, 40GB/sec, and our 10TB data transfer takes 250 seconds, 4.2 minutes. The effect of data gravity diminishes as networking speed increases. If we reach 800Gbit/sec networking speed then our 10TB transfer would take 125 seconds, 2.1 minutes.

Data gravity will always exist as long as datasets carry on growing. So a data analytics app looking at a 1PB dataset or larger will surely be subject to data gravity for many years – but smaller datasets may well find that data gravity weakens substantially as networking speeds increase.
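
The transfer-time arithmetic in this section can be reproduced with a few lines of Python. This is a rough sketch, not a network model: it uses the same rule of thumb as the text (10Gbit/sec delivers about 1GB/sec after overheads), assumes decimal units (1TB = 1,000GB, 1PB = 1,000,000GB), and ignores latency, protocol behavior, and storage bottlenecks. The names `transfer_seconds` and `human` are ours, chosen for illustration.

```python
GB_PER_TB = 1_000        # decimal units, an assumption that matches the round numbers in the text
GB_PER_PB = 1_000_000


def transfer_seconds(dataset_gb: float, link_gbit: float) -> float:
    """Seconds needed to move dataset_gb over a link of link_gbit Gbit/sec.

    Uses the rule of thumb that 10Gbit/sec yields roughly 1GB/sec
    of usable throughput after overheads.
    """
    effective_gb_per_sec = link_gbit / 10
    return dataset_gb / effective_gb_per_sec


def human(seconds: float) -> str:
    """Format a duration in seconds as hours, minutes, and seconds."""
    minutes, secs = divmod(round(seconds), 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours}h {minutes:02d}m {secs:02d}s"


if __name__ == "__main__":
    for label, size_gb in (("10TB", 10 * GB_PER_TB), ("1PB", GB_PER_PB)):
        for link_gbit in (10, 100, 400, 800):
            t = transfer_seconds(size_gb, link_gbit)
            print(f"{label} over {link_gbit}Gbit/sec: {t:,.0f}s ({human(t)})")
```

Under these assumptions a 10TB dataset drops from 10,000 seconds at 10Gbit/sec to 125 seconds at 800Gbit/sec, while a 1PB dataset still needs 12,500 seconds (about 3.5 hours) even at 800Gbit/sec, which is why the larger datasets keep their gravity for longer.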