Interview: MemVerge co-founder and CEO Charles Fan believes we are transitioning to an era of very big, petabyte-level CXL-connected memory pools. CPUs, GPUs and other accelerators will access vast in-memory datasets, minimising data movement and speeding computation.

MemVerge provides Memory Machine software, an abstraction layer that pools DRAM and Storage-Class Memory such as Optane so that applications can run entirely in memory and avoid most storage IO. Fan thinks much larger memory pools are coming, and he briefed Blocks & Files on his views last month.

He said the main driver for the very big memory pool concept is the memory-CPU bottleneck. It is caused by the restriction on the number of memory sockets per CPU, and the resulting limit on memory capacity per CPU. There is a follow-on problem: when data is to be processed by a GPU, it has to be sent there, as with GPUDirect. If CPUs and GPUs could share a memory pool, the data could stay in place, with no time wasted sending it to the GPUs and back again.

Such big memory pooling is made possible by Compute Express Link (CXL), a bus based on the PCIe Gen 5 standard. Fan said of this: “The availability of CXL will be an inflexion point and bring in the era of big memory.”

He picked up on something Intel director of Technology Initiatives Jim Pappas said at the April SNIA Persistent Memory and Storage Summit. According to Fan, Pappas said Optane (3D XPoint storage-class memory) and CXL are a match made in heaven. Over the next two to three years, Intel will add a CXL interconnect to Optane. This would make its capacity accessible to any processing resource with a CXL link: non-Intel x86, Arm, FPGAs and GPUs, for example.

Fan said: “In 2 – 3 years we’ll see two to three CXL-interfaced SCM (Storage-Class Memory) products made at higher capacity and lower cost than DRAM.”

He cited Resistive RAM (ReRAM) as a potential technology for this.

Big name vendors

Who is making them? Fan said: “We’re under NDA and they have not publicly announced anything. They are major established players of Intel and Micron class.”

This hints at Samsung, SK Hynix and maybe Kioxia/Western Digital. Whoever they are, MemVerge plans to support their CXL-connected technology out of the gate.

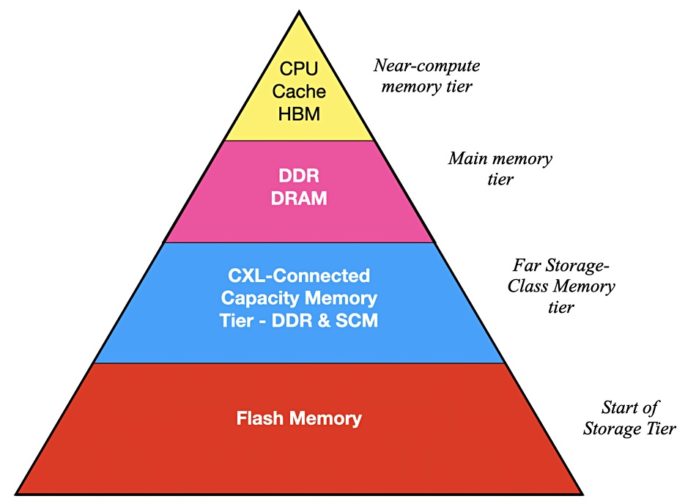

Fan said he thinks memory technology will diverge into three classes: near-CPU memory, main memory, and far memory:

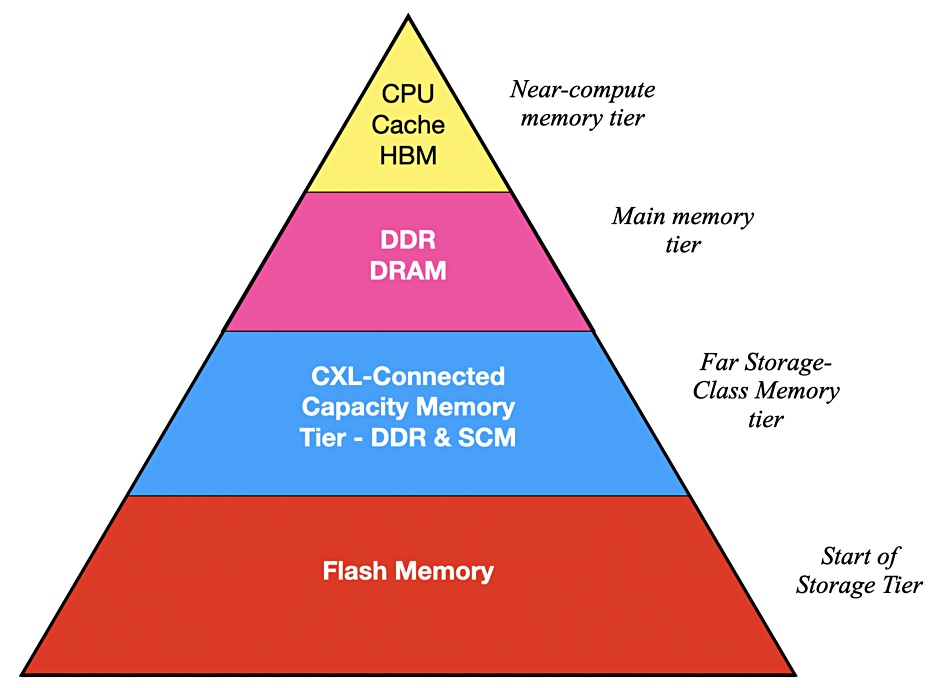

Another way of looking at the top three layers in this hierarchy is by interconnect technology.

In Fan’s view, near-CPU memory will use HBM, with its fast, high-bandwidth interposer link to the CPU. DDR socket links will be too slow for this role. He says rising CPU core counts will need more CPU-memory bandwidth, and HBM is a good fit: “HBM will turn into a caching layer.”

He also says DDR DRAM memory channels have 300 pins in their connectors, which results in very complicated wiring on the motherboard: “Moving to a serial [CXL] bus gives you the same bandwidth but at 80 pins per channel.” That makes connections simpler.
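To put Fan’s figures in perspective, here is a back-of-the-envelope calculation. It is a minimal sketch that takes his quoted per-channel pin counts at face value; the eight-channel server is a hypothetical configuration, not something from the interview.

```python
# Illustrative arithmetic only: the per-channel pin counts are the rough
# figures Fan quoted (300 for a DDR channel, 80 for a CXL channel).
DDR_PINS_PER_CHANNEL = 300
CXL_PINS_PER_CHANNEL = 80


def total_pins(channels: int, pins_per_channel: int) -> int:
    """Total connector pins needed for a given number of memory channels."""
    return channels * pins_per_channel


def pin_savings(channels: int) -> tuple[int, int, float]:
    """Return (DDR pins, CXL pins, fraction of pins saved) for `channels` channels."""
    ddr = total_pins(channels, DDR_PINS_PER_CHANNEL)
    cxl = total_pins(channels, CXL_PINS_PER_CHANNEL)
    return ddr, cxl, 1 - cxl / ddr


if __name__ == "__main__":
    # Eight channels is a hypothetical server configuration.
    ddr, cxl, saved = pin_savings(8)
    print(f"DDR: {ddr} pins, CXL: {cxl} pins, {saved:.0%} fewer")
```

Running it prints `DDR: 2400 pins, CXL: 640 pins, 73% fewer`. The percentage does not depend on the channel count, since it is simply 1 - 80/300.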

Unlike the existing DDR memory channel, the CXL bus can interconnect servers. Fan said this could “allow many petabytes of memory pooling – really big memory. … The compute-to-memory Von Neumann bottleneck gets fixed.”

It also fixes the memory-to-storage bottleneck, as you “keep most of the data in memory rather than in the storage layer. It doesn’t need to move. Really big memory will fundamentally change things over the next three to five years.”

Main memory will be DDR4 and DDR5 socket-linked memory. Far memory will be predominantly CXL-connected SCM, with some DRAM. His reasoning? CXL, with its latency of a few hundred nanoseconds, is too slow for DDR DRAM but OK for SCM. He sees servers accessing 100TB or more of CXL memory in two to three years’ time.

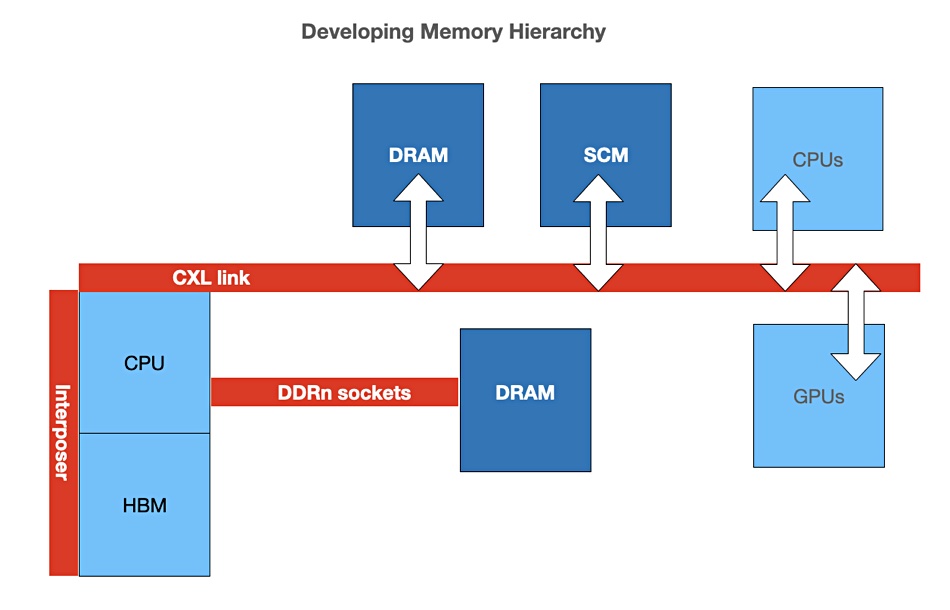

CXL is already here

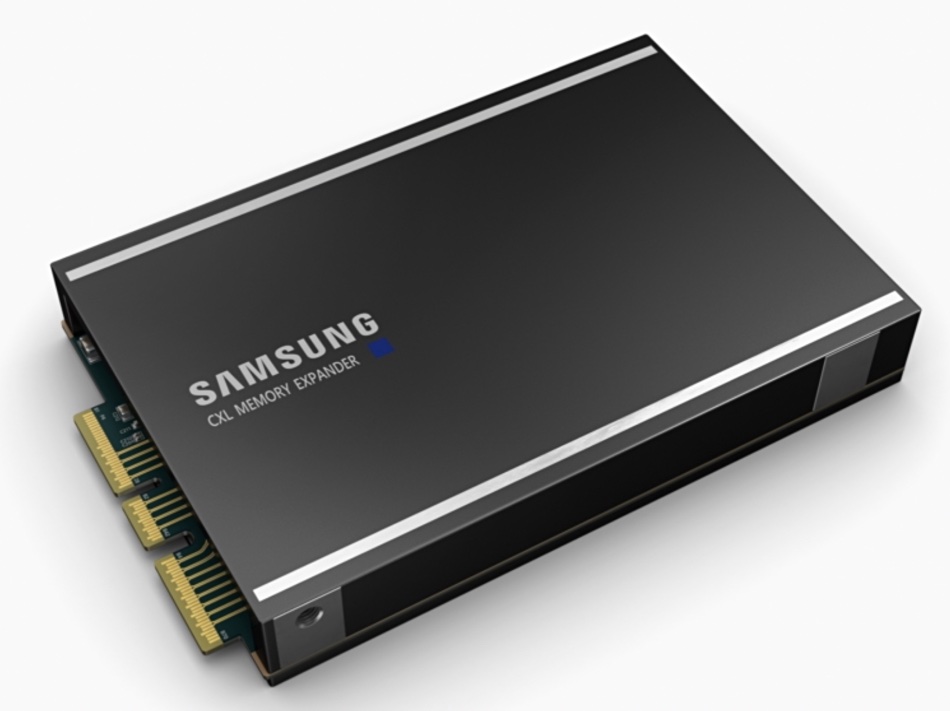

Samsung has just demonstrated a CXL-connected DDR5 memory module that could provide up to a terabyte of capacity. The module has memory mapping, interface conversion and error management technologies that enable CPUs and GPUs to use it as main memory.

Naturally there needs to be a software layer between applications and the three memory tiers. Software will compensate for the DRAM and CXL memory access speed differences and also use the non-volatile nature of CXL-connected SCM to protect data. This could use snapshotting (aka checkpointing) of DRAM contents to SCM, with periodic writes to storage for longer-term protection. That would enable fast rollbacks from SCM to previous points in time if there were problems.
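The checkpoint-and-rollback idea can be sketched in a few lines of Python. This is an illustration under loose assumptions, not how any shipping product works: the DRAM, SCM and storage tiers are modelled as plain Python containers, and the class name `TieredCheckpointer` and its `flush_every` parameter are hypothetical.

```python
import copy


class TieredCheckpointer:
    """Hypothetical sketch: snapshot live (DRAM) state into a simulated SCM
    tier, and copy every `flush_every`-th snapshot to a simulated storage tier."""

    def __init__(self, flush_every: int = 4):
        self.dram: dict = {}                       # live application state
        self.scm: list[dict] = []                  # fast, persistent checkpoints
        self.storage: list[tuple[int, dict]] = []  # slower, long-term copies
        self.flush_every = flush_every

    def checkpoint(self) -> int:
        """Copy DRAM contents into the SCM tier and return the checkpoint id."""
        self.scm.append(copy.deepcopy(self.dram))
        cp_id = len(self.scm) - 1
        if (cp_id + 1) % self.flush_every == 0:
            # Periodic write to storage for longer-term protection.
            self.storage.append((cp_id, copy.deepcopy(self.scm[cp_id])))
        return cp_id

    def rollback(self, cp_id: int) -> None:
        """Restore DRAM from an SCM checkpoint; deep-copied so the checkpoint stays intact."""
        self.dram = copy.deepcopy(self.scm[cp_id])


if __name__ == "__main__":
    cp = TieredCheckpointer(flush_every=2)
    cp.dram["balance"] = 100
    first = cp.checkpoint()      # id 0, kept in SCM only
    cp.dram["balance"] = -999    # a bad update
    cp.checkpoint()              # id 1, also written to storage
    cp.rollback(first)
    print(cp.dram)               # {'balance': 100}
```

Rolling back reads from the SCM copy rather than storage, which is where the speed advantage Fan describes would come from; the storage copies exist only for longer-term protection.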

This software layer will enable existing applications, whether bare-metal, virtualised or containerised, to run in a server environment that combines DDR DRAM and CXL memory.
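One way such a layer could present two tiers as a single pool is sketched below. It is a hypothetical illustration, not MemVerge’s design: capacities are counted in objects rather than pages, new objects fill DRAM first and spill to CXL memory, and a read from CXL promotes the object to DRAM while demoting the least recently used DRAM object. The LRU policy and the `TieredPool` name are assumptions.

```python
from collections import OrderedDict


class TieredPool:
    """Hypothetical sketch of one logical pool over a DRAM tier and a CXL tier.
    Capacities are object counts; dram_capacity must be at least 1."""

    def __init__(self, dram_capacity: int, cxl_capacity: int):
        self.dram_capacity = dram_capacity
        self.cxl_capacity = cxl_capacity
        self.dram: OrderedDict = OrderedDict()  # LRU order: oldest first
        self.cxl: dict = {}

    def put(self, key, value) -> None:
        """Store or update an object; new objects land in DRAM if there is room."""
        if key in self.dram:
            self.dram[key] = value
            self.dram.move_to_end(key)
        elif key in self.cxl:
            self.cxl[key] = value
        elif len(self.dram) < self.dram_capacity:
            self.dram[key] = value
        elif len(self.cxl) < self.cxl_capacity:
            self.cxl[key] = value  # spill to far memory
        else:
            raise MemoryError("both tiers are full")

    def get(self, key):
        """Read an object; a read from CXL promotes it to DRAM."""
        if key in self.dram:
            self.dram.move_to_end(key)
            return self.dram[key]
        value = self.cxl.pop(key)  # raises KeyError if the key is absent
        if len(self.dram) >= self.dram_capacity:
            # Demote the least recently used DRAM object into the freed CXL slot.
            old_key, old_value = self.dram.popitem(last=False)
            self.cxl[old_key] = old_value
        self.dram[key] = value
        return value

    def tier_of(self, key):
        """Report which tier holds a key, or None if it is not stored."""
        if key in self.dram:
            return "dram"
        if key in self.cxl:
            return "cxl"
        return None


if __name__ == "__main__":
    pool = TieredPool(dram_capacity=2, cxl_capacity=4)
    for k in "abc":
        pool.put(k, k.upper())      # a and b fill DRAM; c spills to CXL
    pool.get("c")                   # promotes c, demotes a
    print(pool.tier_of("a"), pool.tier_of("c"))  # cxl dram
```

The application only calls `put` and `get`; which tier an object sits in is the layer’s business, which is the point of treating DRAM and CXL memory as one resource.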

Fan sees MemVerge’s Memory Machine software as such a layer. The idea is that it would let applications treat DRAM main memory and CXL far memory as a single resource. He told us data services, such as snapshotting, will be offered for CXL memory pools. Another service could be metro-distance disaster recovery between two very big memory installations, using an asynchronous background replication process to move snapshot data to the second system.
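The asynchronous replication Fan describes can be sketched with a queue and a background thread. In this hypothetical illustration the second system is just a dictionary and the transfer is an in-process copy; the point is that the primary hands off a frozen snapshot and carries on without waiting. The `AsyncReplicator` class and its method names are assumptions.

```python
import copy
import queue
import threading


class AsyncReplicator:
    """Hypothetical sketch: snapshots are queued on the primary and copied to a
    secondary system (a dict here) by a background thread."""

    def __init__(self):
        self.secondary: dict[int, dict] = {}
        self._pending: queue.Queue = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def snapshot(self, snap_id: int, state: dict) -> None:
        """Freeze a copy of the state and queue it; returns without waiting."""
        self._pending.put((snap_id, copy.deepcopy(state)))

    def _run(self) -> None:
        while True:
            snap_id, frozen = self._pending.get()
            # A real deployment would send this over the network to the
            # metro-distance DR site; here it is just a dict assignment.
            self.secondary[snap_id] = frozen
            self._pending.task_done()

    def wait_until_replicated(self) -> None:
        """Block until every queued snapshot has reached the secondary."""
        self._pending.join()


if __name__ == "__main__":
    rep = AsyncReplicator()
    state = {"orders": 1}
    rep.snapshot(0, state)
    state["orders"] = 2          # the primary keeps running
    rep.snapshot(1, state)
    rep.wait_until_replicated()
    print(rep.secondary)         # {0: {'orders': 1}, 1: {'orders': 2}}
```

Deep-copying at snapshot time is what keeps each replicated snapshot consistent even though the primary goes on modifying its state while the copy is in flight.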

A third option would be to move the snapshot data to a storage system or S3 bucket: “You could then retrieve state and relaunch the app anywhere. It’s instance management with state. [Data] movement, which might take 15 minutes, happens in the background and doesn’t impact the primary system.”

He said: “We capture the entire app state and not just the data state. It’s a snap-mirror-like process.” The process supports layers of snapshots, up to 256 of them today.

Comment

MemVerge is flying the flag for its own technology, of course, but what Fan says about the need for an abstraction layer is interesting. Presenting disjoint memory/SCM pools as a single, logical memory resource makes sense. If what he says comes to pass, with major semiconductor memory/SCM players developing their own SCM products for the 2023/2024 period and Intel delivering CXL-connected Optane, our current server-based app environment is going to change substantially.