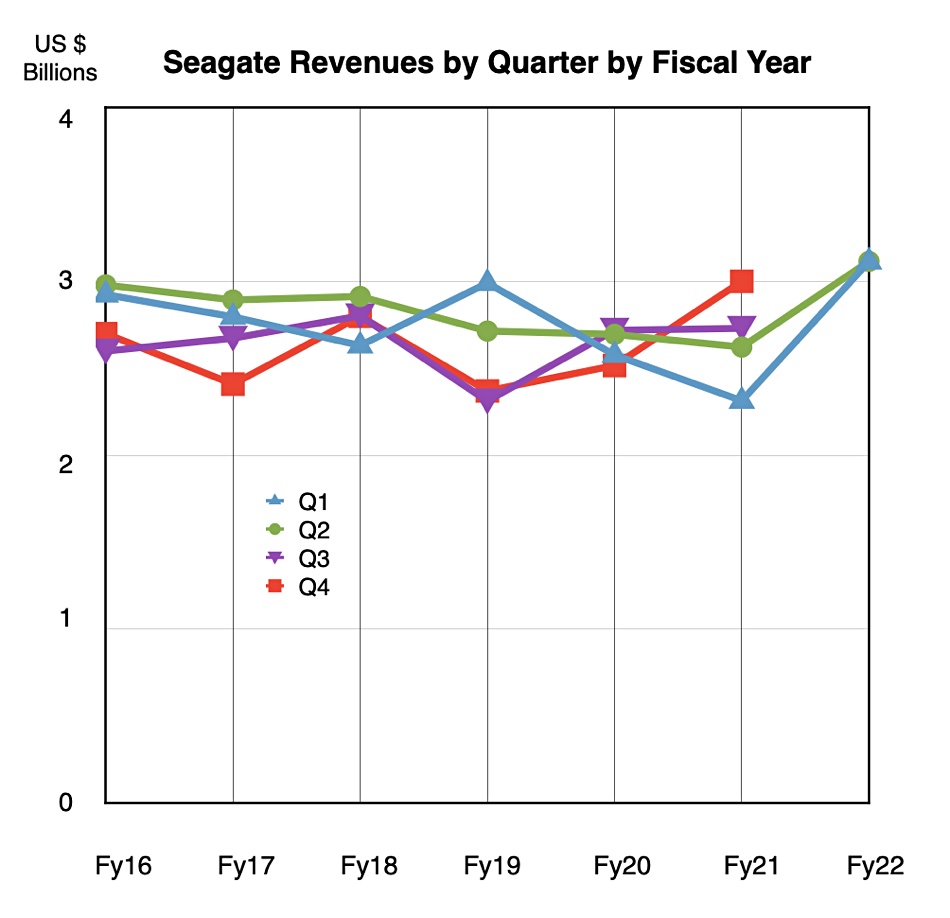

Disk drive manufacturer Seagate could be positioned for a breakout fiscal 2022. It reported revenues of $3.12 billion for its second fiscal 2022 quarter, ended December 31, up 18.8 per cent on the year-ago number. Profits were $501 million, up 78.9 per cent year-on-year.

CEO Dave Mosley’s results statement said “Seagate ended calendar year 2021 on a high note delivering another solid performance in the December quarter highlighted by revenue of $3.12 billion, our best in over six years. This performance is all the more impressive in light of the supply chain disruptions and inflationary pressures we are experiencing today.”

“In calendar year 2021, we achieved revenue of nearly $12 billion, up 18 per cent compared with the prior calendar year, we expanded non-GAAP EPS by more than 75 per cent and we grew free cashflow by nearly 40 per cent. Truly an outstanding year of growth that shows we are capitalising on the secular tailwinds driving long-term mass capacity storage demand.”

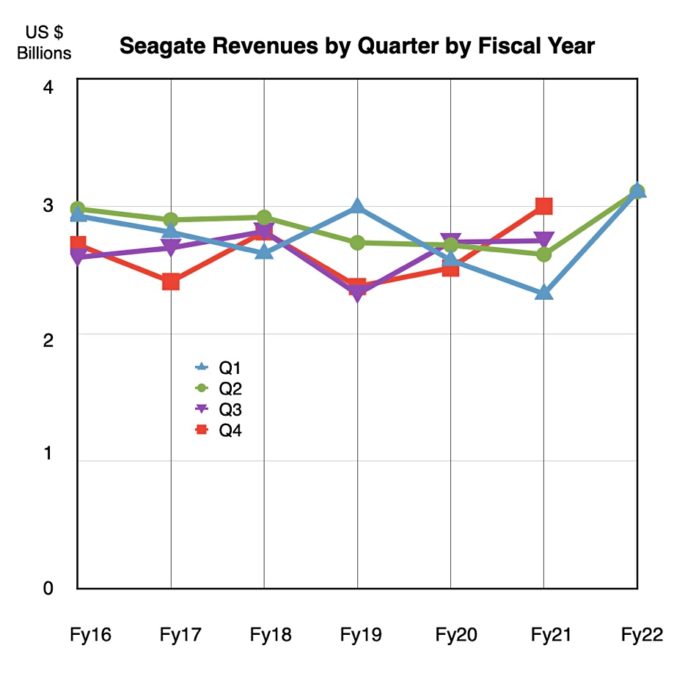

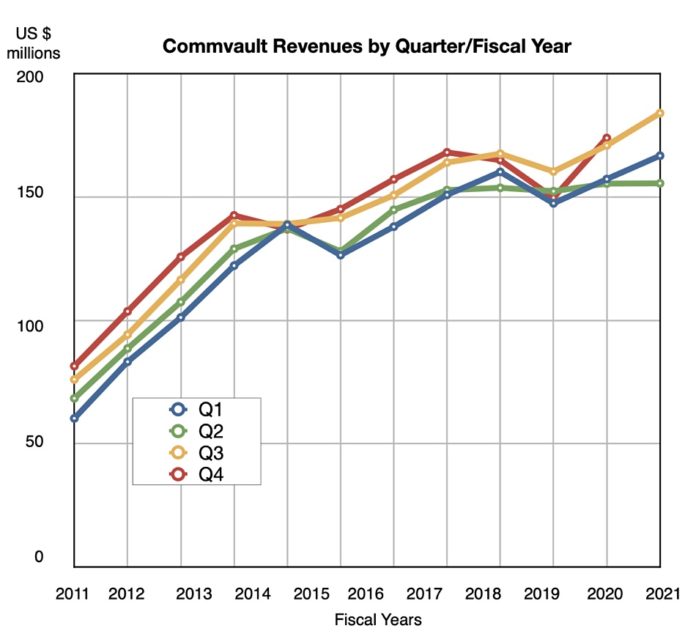

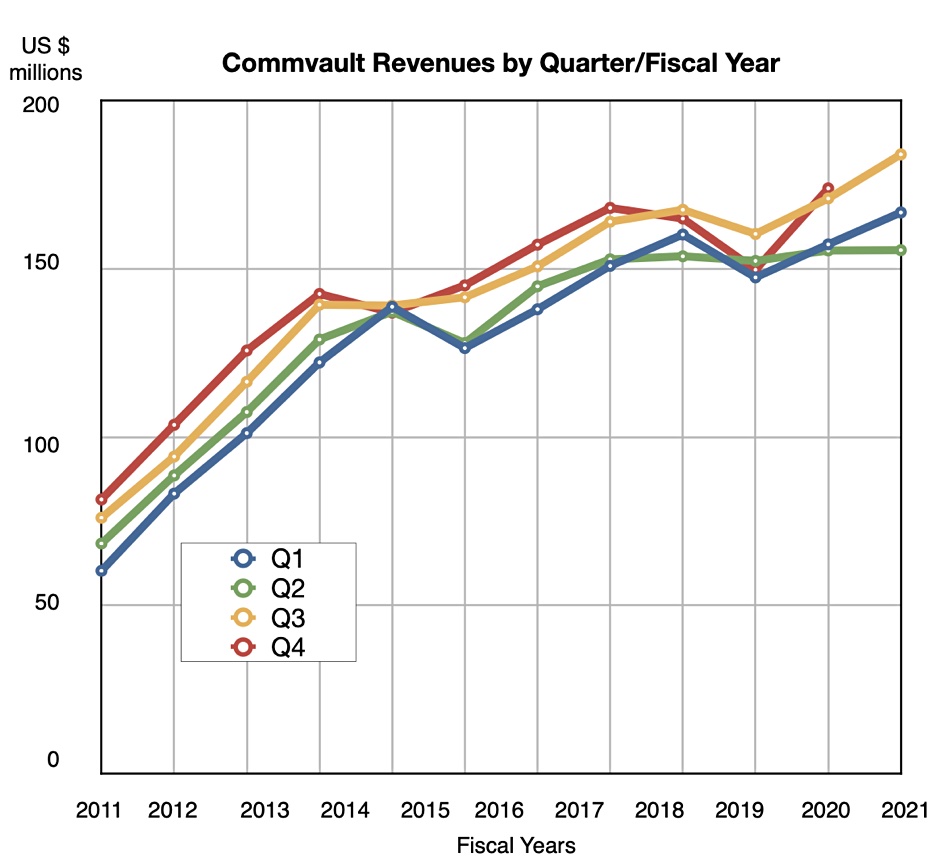

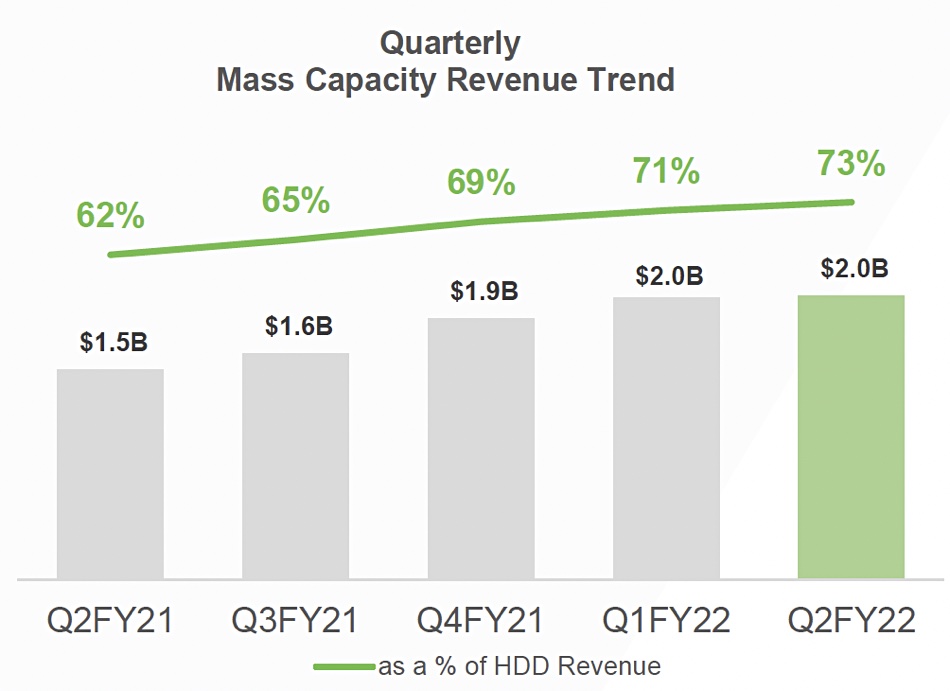

There is a hint here that fiscal 2022 could be outstanding as well. A chart of Seagate’s revenues by quarter per fiscal year shows the excellent start to its FY22.

Mosley said “Our execution and product momentum positions Seagate to deliver a third consecutive calendar year of top line growth … revenue to increase three per cent to six per cent with further growth beyond.” Surely he’s saying here fiscal 2022 will be a very good year too.

Wells Fargo analyst Aaron Rakers told subscribers “Seagate increased its FY2022 revenue outlook to grow in the +12–14 per cent y/y range vs prior guided growth in the low double-digit y/y range.”

Financial summary:

- Gross margin – 30.4 per cent (26.5 per cent a year ago);

- Diluted EPS – $2.23 compared to $1.12 a year ago;

- Cash from operations – $521 million;

- Free cash flow – $426 million.

The quarter saw high double-digit growth in Seagate 18TB drive shipments to cloud customers. Seagate said there is strong demand for its 20TB drives as well. In fact, it was Seagate’s fifth consecutive quarter of record capacity shipments.

Mosley said in the earnings call: “Sequential growth from the cloud in the December quarter was somewhat counterbalanced by lower revenue in the enterprise, OEM and legacy PC markets, that we attribute primarily to the COVID-related supply challenges.”

CFO Gianluca Romano said the SSD business was in good shape too. “Non-HDD revenue increased 17 per cent sequentially and 48 per cent year-over-year to a record $294 million boosted by strong SSD demand.”

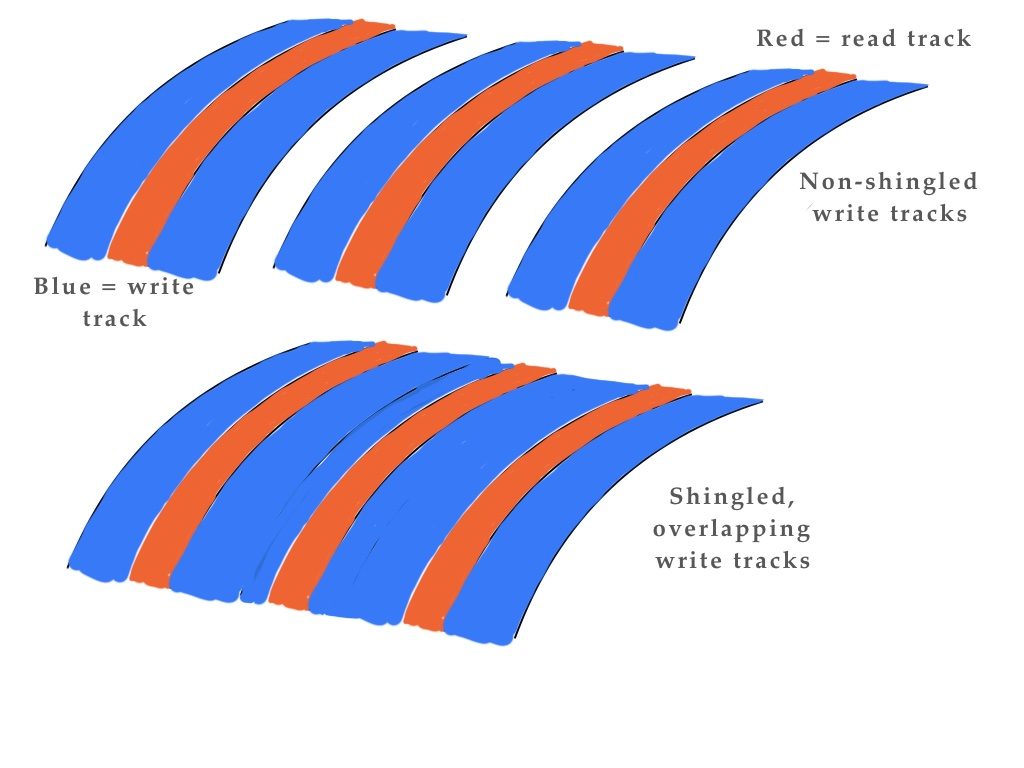

Mosley was enthusiastic about the 20TB drives. “The 20 terabyte ramp is going to be a very, very aggressive ramp.” It’s also shipping 22TB SMR versions of these drives.

The Q3 outlook is for revenues of $2.9 billion +/- $150 million, a 6.2 per cent rise year-on-year at the mid-point. Shares responded positively to the results and the outlook, rising from $95.70 before the results release to $112.47 today.
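For readers who want to check the arithmetic behind the outlook, here is a minimal sketch in Python. It uses only the figures quoted above; the function names are hypothetical helpers made up for illustration, and the implied year-ago revenue is back-calculated from the rounded 6.2 per cent figure, so it is an approximation rather than a reported number.

```python
def guidance_range(midpoint, tolerance):
    """Return the (low, high) ends of a 'midpoint +/- tolerance' outlook."""
    return midpoint - tolerance, midpoint + tolerance


def implied_prior(current, growth_pct):
    """Back out the earlier value implied by a percentage growth rate."""
    return current / (1 + growth_pct / 100)


def pct_change(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Q3 outlook in $ millions: $2.9 billion +/- $150 million
low, high = guidance_range(2900, 150)

# Year-ago Q3 revenue implied by a 6.2 per cent rise at the mid-point
prior_q3 = implied_prior(2900, 6.2)

# Share price move from before the results release to the quoted price
share_move = pct_change(95.70, 112.47)

print(f"Outlook range: ${low}m to ${high}m")
print(f"Implied year-ago Q3 revenue: ${prior_q3:.0f}m")
print(f"Share price change: {share_move:.1f} per cent")
```

On these numbers the outlook spans $2.75 billion to $3.05 billion, the implied year-ago quarter is roughly $2.73 billion, and the share price move works out to about 17.5 per cent.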

We’ll keep a watch on Seagate and look for it to complete a great fiscal 2022.