Sponsored

Tucked away in his files, HubStor CEO Geoff Bourgeois still has the scribbled notes in which he and co-founder Greg Campbell first sketched out the idea for their business back in 2015. He recently looked those notes out, he says, and was gratified to see that their vision hasn’t changed that much.

“There may have been some twists and turns along the way,” he says, “but my main impression was, ‘Wow. We’ve really stuck to plan.’”

That plan, from the start, was for the Kanata, Ontario-based business to build a unified platform that enables companies to tap into the scalability and cost-efficiency of the cloud to meet their backup and archiving needs.

Along the way, HubStor’s promise to help them protect their data, simplify their IT infrastructure and achieve compliance, all via a software-as-a-service (SaaS) offering, has won over many corporate customers, including a handful from the Fortune 100.

“While we bootstrapped the company ourselves in its early days,” Bourgeois reports, “the business is now customer-funded by a recurring revenue model and seeing healthy organic growth.”

A gap in the market

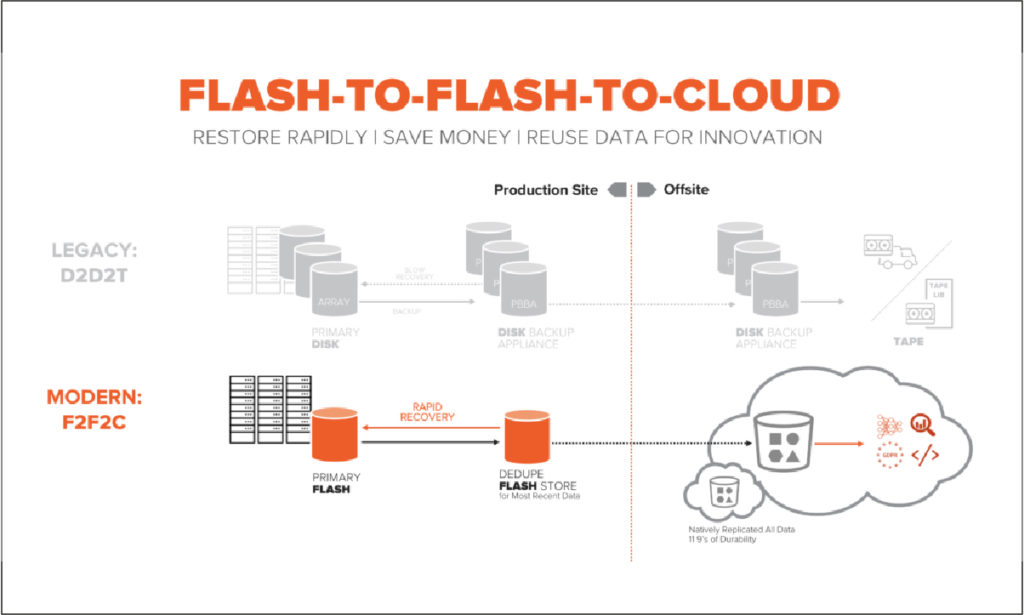

The key to this success, as with so many businesses, came from spotting a gap in the market. Back in 2015, Bourgeois says, there was a great deal of interest among IT decision-makers in using the cloud for backup and archiving. Many were looking to escape the costly and time-consuming trap of having to provision and manage more and more on-premises infrastructure to meet their storage needs. But when they looked into the cloud, they often complained, their options were limited to commodity infrastructure-as-a-service (IaaS) offerings.

These presented significant challenges. While IaaS might provide a bare-bones cloud location in which to store data, these services didn’t connect easily to the workloads or applications that organisations were running; nor did they help IT admins to apply data management rules and policies around backup and archiving to the data sets they were looking to migrate. As Bourgeois puts it: “There was no easy on-ramp to the cloud.”

“That’s when a lightbulb came on for us,” he continues. “There was a big disconnect here – and what was missing was a SaaS layer that would make the cloud more consumable for backup and archiving.”

The value of a ‘bridge’ connecting company data sets to low-cost, scalable cloud storage, in a logical, easy-to-manage way, has only increased over the intervening five years, according to Bourgeois.

The HubStor interface gives IT administrators a single console via which they can view all backup and archive workloads, handle each one according to its particular data-management needs, and migrate it to the cloud region of their choice, or to multiple regions where appropriate.

They can allocate data to different storage tiers in the cloud, in order to get the most from their storage budget, and apply special rules for specific enterprise use cases, such as security, regulatory compliance and litigation preparedness.

The data involved may be structured or unstructured, database or blob. It might originate from on-premises systems, or from other private or public clouds. For example, the backup of corporate SaaS applications, including Microsoft 365, Slack and Box, is now a high-growth revenue stream for the business, says Bourgeois.

Twists and turns

The rapid growth of backup for SaaS applications is just one of those “twists and turns” in the company’s history, and an area where it continues to expand. Backup for files stored in Box, a cloud content management provider, was added in November 2019, for example.

A more recent launch is backup for virtual machines (VMs). Until as recently as last year, even Bourgeois doubted there would be sufficient demand for a new product in this market, given that Commvault, Veeam and Veritas are all active and established players there.

“I thought those guys had this market sewn up, but we’ve been asked again and again at trade shows whether we offer backup for virtual environments. And the people asking would say they were customers of those other companies, but looking for something different, something more cloud-based, something more modern in terms of user experience. So, some time around the end of 2019 we were like, ‘OK, it’s time for us to do this.’”

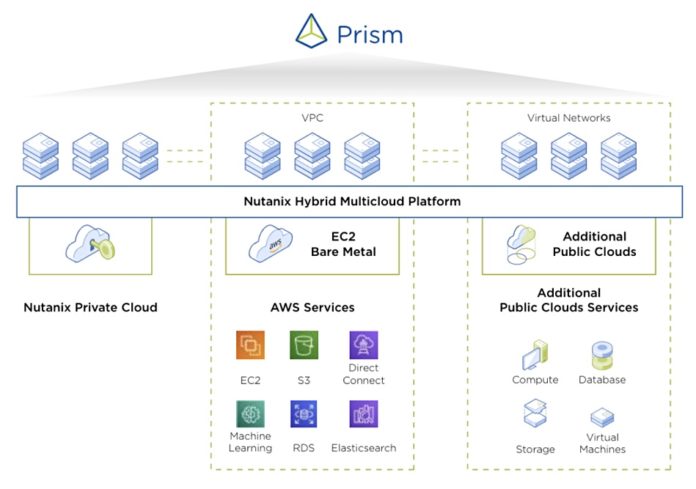

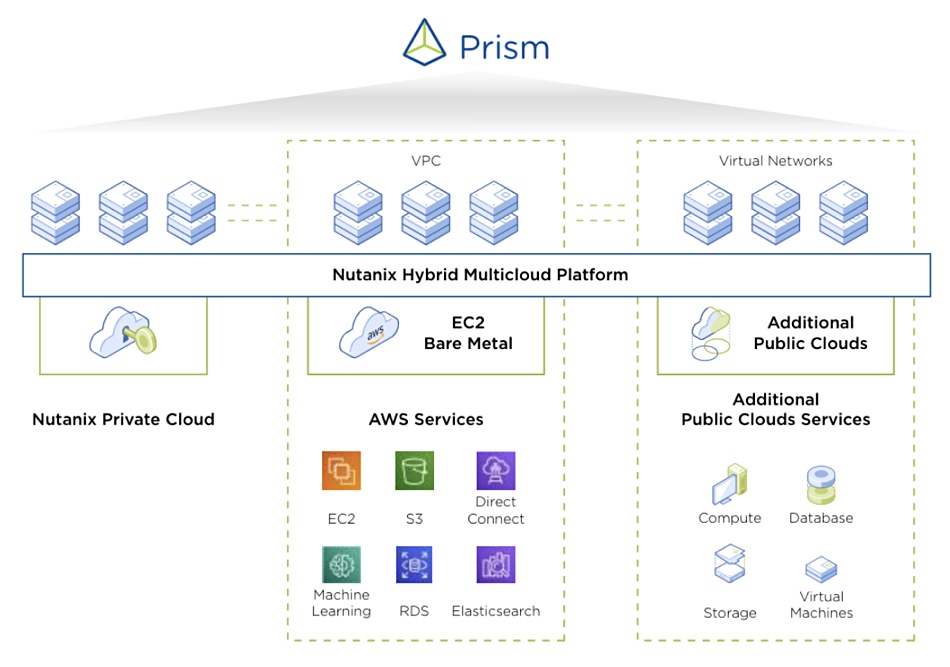

In May 2020, HubStor announced the release of its backup-as-a-service (BaaS) solution for VMware vSphere environments. Customers can use either a dedicated or shared BaaS model, with pricing based mainly on their cloud storage consumption, rather than the number of VMs protected, as is more typically the case with competitors. HubStor also plans to support other virtual infrastructures, including those based on Microsoft Hyper-V and Nutanix, in the near future.

Customer expansion opportunities

This new offering could be a valuable way to attract new customers, who might then go on to explore HubStor’s other offerings: file system tiering, Office 365 backup, journaling or content archiving, for example.

That progression makes a lot of sense to customers and is a trend HubStor sees regularly, says Bourgeois. “It’s a win-win for customers when they throw multiple workloads at us. I mean, it’s fine if a customer signs up to HubStor and just uses it for one particular workload or one particular use case. But there’s a very small, incremental uptick in cost to them when they want to leverage it for other things, and so they start to achieve very significant economies of scale quite quickly with the compute investment they’ve made to use our SaaS.”

A great example, he says, is Los Angeles-based Virgin Hyperloop One, which is working to make this thoroughly modern form of mass transportation a reality, using electric propulsion and electromagnetic levitation in near-vacuum conditions. It may not be HubStor’s largest corporate customer from a revenue perspective, says Bourgeois, but it’s one that is taking advantage of close to the full scope of its platform’s capabilities.

“So for example, Virgin Hyperloop One is backing up SaaS applications, including Microsoft 365, and doing long-term retention of audit log data from SaaS applications. They’re doing virtual machine backup. They’re tiering file systems and they’re leveraging HubStor for legal discovery and security audits, too,” he says.

Conversely, existing HubStor customers may be keen to adopt the new VM backup product. Among them is Axon Corporation, a Scottsdale, Arizona-based industrial machinery company. According to Axon’s manager of infrastructure systems Ryan Reichart, the company is looking forward to replacing its existing backup product, which he describes as “expensive and extraordinarily complex.”

Says Reichart: “One exciting feature is that [HubStor’s VM backup] integrates directly with the SaaS management dashboard and storage accounts of our other HubStor products in use – file tiering and Office 365 backup.”

In other words, HubStor will bring to Axon’s backup and archiving a cost-efficiency and simplicity that the company has previously lacked.

A new simplicity

It’s a similar story with other customers, says Bourgeois, and simplicity has been a mantra for him and the HubStor team from the start. With a user experience refresh planned for this year, the team will be looking to make HubStor’s SaaS interface even easier to use.

At its heart, a SaaS platform “gets customers out of the weeds”, he says. They no longer have to manage on-premises tape libraries or backup servers or deal with licensing issues. Above all, they get a true-to-cloud consumption pricing model, typically for the first time.

“With HubStor in place, they can see exactly what underlying cloud resources they’re consuming, and the costs associated with that, plus a simple subscription fee on top for using our SaaS, which is a totally transparent mark-up on the use of cloud resources,” he explains.

This means that customers can move data to lower-cost storage tiers. And if a cloud provider introduces sharper pricing, they can reassess their approach and shrink the underlying cloud costs still further. “In some cases,” Bourgeois concludes, “we’ve been able to help customers achieve a million-dollar a year budget relief from escaping some of the legacy approaches they were using. That’s a good feeling and it’s a big source of pride to us.”
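To make the arithmetic of that consumption model concrete, here is a minimal sketch in Python. It is illustrative only: the tier names, per-gigabyte prices and mark-up rate are hypothetical, and it assumes the subscription fee is a flat percentage of the underlying cloud cost, which the article does not confirm. It is not HubStor’s actual pricing or API.

```python
from dataclasses import dataclass


@dataclass
class TierUsage:
    """Data held in one cloud storage tier (all figures hypothetical)."""
    name: str
    stored_gb: float
    price_per_gb_month: float  # assumed cloud provider list price


def monthly_bill(usage: list[TierUsage], markup_rate: float) -> dict[str, float]:
    """Return cloud cost, subscription fee and total for one month.

    Assumes the subscription fee is a simple percentage mark-up on the
    underlying cloud cost; this is an illustrative assumption.
    """
    if markup_rate < 0:
        raise ValueError("markup_rate must be non-negative")
    cloud_cost = sum(t.stored_gb * t.price_per_gb_month for t in usage)
    subscription_fee = cloud_cost * markup_rate
    return {
        "cloud_cost": cloud_cost,
        "subscription_fee": subscription_fee,
        "total": cloud_cost + subscription_fee,
    }


# Compare keeping everything in a hot tier with moving most data to an
# archive tier. Prices and volumes are made up for the example.
all_hot = [TierUsage("hot", 10_000.0, 0.02)]
tiered = [TierUsage("hot", 1_000.0, 0.02), TierUsage("archive", 9_000.0, 0.002)]

print(monthly_bill(all_hot, markup_rate=0.25))
print(monthly_bill(tiered, markup_rate=0.25))
```

Because the assumed fee scales with the cloud cost, any saving from re-tiering data flows through to the total bill as well, which is the effect Bourgeois describes.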

This article was sponsored by HubStor.