A flurry of add-on processor startups think there is a gap between X86 CPUs and GPUs that DPUs (data processing units) can fill.

Advocates claim DPUs boost application performance by offloading storage, networking and other dedicated tasks from the general-purpose X86 processor.

Suppliers such as ScaleFlux, NGD, Eideticom and Nyriad are building computational storage systems – in essence, drives with on-board processors. Pensando has developed a networking and storage processing chip, which is used by NetApp, and Fungible is building a composable security and storage processing device.

A processing gap has opened up, according to Pradeep Sindhu, Fungible co-founder and CEO. GPUs have demonstrated that certain specialised processing tasks can be better carried out by dedicated hardware which is many times faster than an X86 CPU. The idea of Smart NICs, host bus adapters and Ethernet NICs with on-board offload processors for TCP/IP offload and data plane acceleration has taught us all that X86 servers can do with help in handling certain workloads.

In a press briefing this week, Sindhu outlined a four-pronged general data centre infrastructure problem.

- Moore’s Law is slowing, and with it the rate of X86 speed improvement, while data centre workloads are increasing.

- The volume of internal, east-west networking traffic in data centres is increasing.

- Data sets are increasing in size as people realise that, with AI and analysis tasks, the bigger the data set, the better the results. Big data needs to be sharded across hundreds of servers, which leads to even more east-west traffic.

- Security attacks are growing.
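To make the sharding point concrete, here is a minimal Python sketch of why a sharded data set generates east-west traffic. It assumes simple hash-based placement; the function names `shard_for` and `servers_touched` and the key format are hypothetical illustrations, not anything Fungible described.

```python
import hashlib


def shard_for(key: str, num_servers: int) -> int:
    """Map a record key to a server index using a stable hash (assumed scheme)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_servers


def servers_touched(keys, num_servers: int) -> set:
    """Return the set of servers a job must contact to read every key."""
    return {shard_for(key, num_servers) for key in keys}


if __name__ == "__main__":
    # Hypothetical data set: 1,000 records spread over 200 servers.
    keys = [f"record-{i}" for i in range(1000)]
    touched = servers_touched(keys, 200)
    print(len(touched), "of 200 servers hold part of this data set")
```

Any job that scans the whole data set has to pull records from almost every server, and each of those reads is node-to-node traffic inside the data centre.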

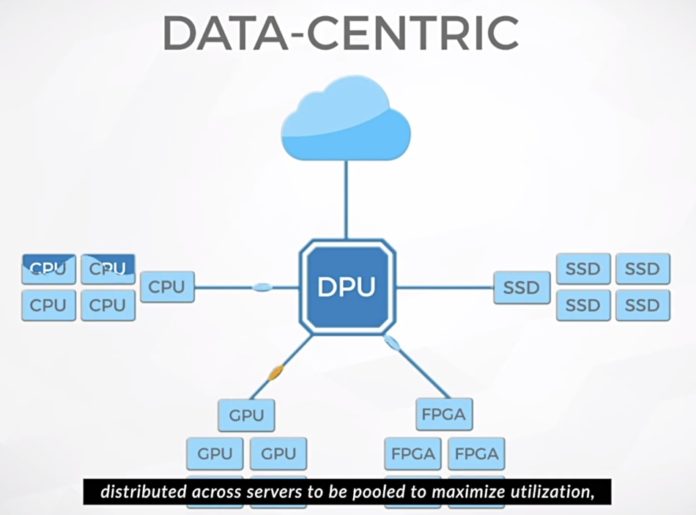

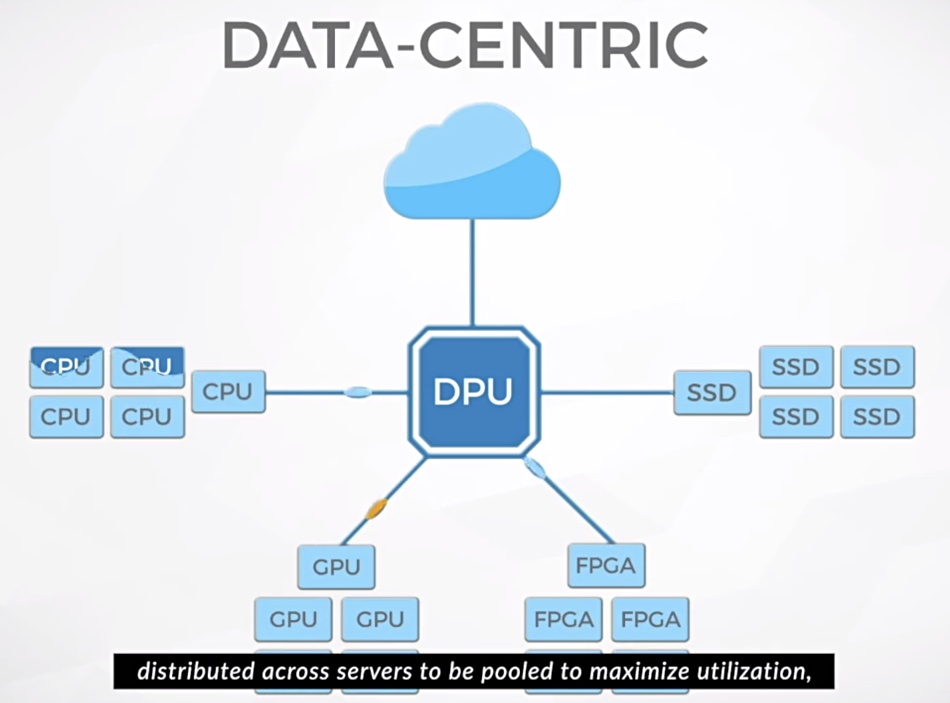

These issues are making data interchange between data centre nodes inefficient. For instance, an X86 server is good at application processing but not at data-centric processing, Sindhu argues. The latter is characterised by all of the work coming across network links, by IO dominating arithmetic and logic, and by multiple contexts which require a much higher rate of context-switching than typical applications.

Also, many analytics and columnar database workloads involve streaming data that needs filtering for joins and map-reduce sorting – data-centric computation, in other words. Neither GPUs nor X86 nor ARM CPUs can do this data-centric processing efficiently. Hence the need for a dedicated DPU, Sindhu said.
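As a rough illustration of the kind of work meant here, the Python sketch below filters a stream of rows and then joins it against a second table. It is an assumed, generic filter-then-hash-join, not Fungible's implementation, and the names `filter_rows` and `hash_join` and the sample tables are hypothetical. Note how little arithmetic is involved: almost all the work is moving, testing and matching rows.

```python
from typing import Callable, Dict, Iterable, Iterator

Row = Dict[str, object]


def filter_rows(rows: Iterable[Row], column: str,
                predicate: Callable[[object], bool]) -> Iterator[Row]:
    """Stream rows through, keeping only those whose column passes the test."""
    for row in rows:
        if predicate(row[column]):
            yield row


def hash_join(build: Iterable[Row], probe: Iterable[Row], key: str) -> Iterator[Row]:
    """Join two row streams on `key`: index the build side, stream the probe side."""
    table: Dict[object, list] = {}
    for row in build:
        table.setdefault(row[key], []).append(row)
    for row in probe:
        for match in table.get(row[key], []):
            yield {**match, **row}


if __name__ == "__main__":
    # Hypothetical sample data.
    customers = [{"cust_id": 1, "region": "EU"}, {"cust_id": 2, "region": "US"}]
    orders = [
        {"order_id": 10, "cust_id": 1, "amount": 5},
        {"order_id": 11, "cust_id": 2, "amount": 50},
        {"order_id": 12, "cust_id": 1, "amount": 70},
    ]
    large_orders = filter_rows(orders, "amount", lambda amount: amount >= 50)
    for joined in hash_join(customers, large_orders, "cust_id"):
        print(joined)
```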

DPUs can be pooled for use by CPUs and GPUs and should be programmable in a high-level language like C, according to Sindhu. They can also disaggregate server resources such as DRAM, Optane and SSDs and pool them for use in dynamically-composed servers. Fungible is thus both a network/storage/security DPU developer and a composable systems developer.

It’s as if Fungible architected a DPU to perform data-centric work and then realised that composable systems were instantiated and torn down using data-centric instructions too.

A Fungible white paper explains its ideas in more detail and a video provides an easy-to-digest intro.

We understand the company will launch its first products later this year and look forward to finding out more about their design, performance and route to market.