Computational storage is an emerging trend that sees some data processing carried out at the storage layer, rather than moving the data into a computer’s main memory for processing by the host CPU.

The notion behind computational storage is that it takes time and resources to move data from where it is stored. For applications such as AI and data analytics, where the data sets are very large or the task is latency-sensitive, it may be more efficient to do some of the processing in situ, where the data lives.
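
The trade-off can be put into rough numbers. The sketch below is a back-of-envelope model, not a benchmark: every figure in it (data set size, link speeds, the fraction of data a query needs) is a hypothetical value chosen for illustration, and it ignores the time the drive's own processor spends on the computation.

```python
def host_side_seconds(dataset_bytes: float, host_link_bps: float) -> float:
    """Host-side processing: the whole data set crosses the host link."""
    return dataset_bytes / host_link_bps


def in_situ_seconds(dataset_bytes: float, internal_bps: float,
                    host_link_bps: float, selectivity: float) -> float:
    """In-situ processing: scan inside the drive, ship only the results."""
    scan = dataset_bytes / internal_bps                  # read flash internally
    ship = dataset_bytes * selectivity / host_link_bps   # send matches to host
    return scan + ship


# Hypothetical figures, for illustration only.
DATASET_BYTES = 4e12   # 4 TB held on one drive
HOST_LINK_BPS = 4e9    # drive-to-host link, bytes per second
INTERNAL_BPS = 12e9    # assumed internal flash bandwidth, bytes per second
SELECTIVITY = 0.01     # assumed fraction of the data the query returns

host = host_side_seconds(DATASET_BYTES, HOST_LINK_BPS)
local = in_situ_seconds(DATASET_BYTES, INTERNAL_BPS, HOST_LINK_BPS, SELECTIVITY)
print(f"host-side: {host:.0f} s, in situ: {local:.0f} s")
```

The model only favours in-situ processing when the drive can read its own flash faster than the host link can carry it, or when the result is much smaller than the data scanned.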

As with many emerging trends, different vendors and startups are developing a number of different technologies and approaches to computational storage, often with little or no standardisation between them.

To address this, the Storage Networking Industry Association (SNIA) has set up a technical working group to promote device interoperability and to define interface standards for deployment, provisioning, management and security of computational storage systems. The group is co-chaired by NGD and SK Hynix, and over 20 companies are actively participating.

A report from 451 Research containing an overview of computational storage will be available on the SNIA website from June 17.

Blocks & Files has been given access to information from 451 Research on the current players in the computational storage field, and we list some of the front runners and detail their respective offerings.

NGD Systems

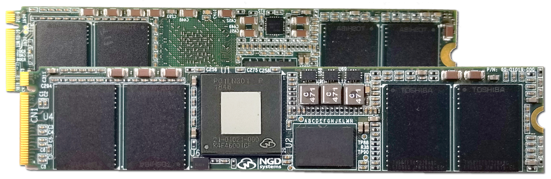

NGD Systems achieves in-situ processing through the simple approach of integrating an ARM Cortex-A53 processor into the controller of an NVMe SSD.

The data still needs to be moved from the NAND flash chips to the processor, but that is accomplished using a Common Flash Interface (CFI), which has three to six times the bandwidth of the host interface.

The advantage of this approach is that the processor can run a standard operating system (Ubuntu Linux), so any software that runs on Ubuntu can be used for in-situ computing in NGD’s drives. The drive itself can also be used as a standard SSD.

NGD has not specified the expected performance boost for applications using the latest Newport generation of its hardware, but said the previous generation accelerated image recognition by two orders of magnitude and some Hadoop functions by over 40 per cent.

Samsung

Samsung announced the SmartSSD in October 2018. It describes the devices as a smart subsystem rather than a storage device: a server loaded with multiple SmartSSDs will behave like a clustered computing device.

Each SmartSSD is based on Samsung’s 3D V-NAND TLC flash plus a Xilinx Zynq FPGA with ARM cores. Samsung targets two types of workload: analytics, and storage services such as data compression, de-duplication and encryption.

Unlike NGD’s platform, the SmartSSD cannot run standard software, but Samsung and Xilinx have jointly developed a runtime library for the FPGA in the SmartSSD.

The devices are currently being tested by potential customers such as hyperscalers and storage system makers.

Bigstream, which develops tools for analytics and ML applications, demonstrated its software working with Samsung’s SmartSSDs and Apache Spark, providing a performance boost of threefold to fivefold.

ScaleFlux

ScaleFlux is another vendor combining processing with a flash drive. It is currently shipping the CSS 1000 series, sold as PCIe cards or U.2 drives with raw flash capacities of 2TB-8TB. A third generation is due later this year.

Each ScaleFlux CSS drive is based on a Xilinx FPGA that processes data as well as acting as the flash controller. It integrates into a host server and storage environment via a ScaleFlux software module, with compute functions made accessible through APIs exposed from the software module.

This software includes the flash translation layer (FTL), which manages IO and the flash storage and which, in a standard SSD, runs in the drive’s controller.

This means it consumes some host CPU cycles, but ScaleFlux claims this is outweighed by the advantages of running the FTL as host software, such as the ability to optimise it to suit specific workloads.

Moving some processing from servers to the CSS devices requires changes to code, and ScaleFlux offers off-the-shelf code packages to accelerate applications such as Aerospike, Apache HBase, Hadoop and MySQL, the OpenZFS file system, and the Ceph storage system.

According to ScaleFlux, China’s Alibaba is set to use CSS devices to accelerate its PolarDB application, which is a combined transactional and analytic database. Alibaba is believed to have modified applications itself, using APIs and code libraries from ScaleFlux.

Eideticom

Eideticom’s NoLoad accelerators are unusual in that they fit into a 2.5in U.2 NVMe SSD format, but contain a Xilinx FPGA accelerator and a relatively small amount of memory instead of flash storage.

The concept behind this architecture is that the PCIe bus can be used to move data between the NoLoad accelerator and NVMe SSD storage at high speed, with little or no host CPU involvement.

This allows the compute element of the computational storage to be scaled independently of storage capacity, and even beyond a single server node by using NVMe-oF.

In a demo at SuperComputing 2018, Eideticom showed six NoLoad devices compressing data fed to them by 18 flash drives at a total of 160 GB/sec, with less than five per cent host CPU overhead.

Eideticom said a U.2 PCIe Gen3x4 NoLoad device can zlib-compress or decompress data at over 3 GB/sec.
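
For readers unfamiliar with zlib, the work being offloaded here is the same lossless compression that Python exposes through its standard `zlib` module. The sketch below runs it on the host CPU purely to show the operation; the payload is made up, and nothing in it reflects NoLoad’s own interface or performance.

```python
import zlib

# Made-up, highly repetitive payload so the size reduction is easy to see.
payload = b"computational storage " * 4096

# Compress on the host CPU at zlib level 6, then reverse the operation.
compressed = zlib.compress(payload, 6)
restored = zlib.decompress(compressed)

print(f"original: {len(payload)} bytes, compressed: {len(compressed)} bytes")
print("round trip intact:", restored == payload)
```

An accelerator of this kind aims to take exactly this sort of CPU-bound loop off the host.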

Eideticom touts NoLoad for storage services such as data compression and deduplication, plus RAID and erasure coding. Long-term plans include accelerating applications such as analytics.

Nyriad

The New Zealand company Nyriad originally developed its technology for the massive data processing requirements of the Square Kilometre Array (SKA) radio telescope.

Nyriad’s product, NSULATE, is software rather than hardware: a Linux block device that functions as a software-defined alternative to RAID for high-performance, large-scale storage solutions. It uses Nvidia GPUs as storage controllers to perform erasure coding with very deep parity calculations for very high levels of data protection.
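
To give a feel for what a parity calculation involves, here is a minimal sketch of the simplest possible erasure code: a single XOR parity block that can rebuild any one lost data block. This is not Nyriad’s algorithm. NSULATE is described as using far deeper parity computed on GPUs, and the function names and sample data below are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of a list of equal-length byte strings."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_parity(data_blocks):
    """Compute one parity block covering all data blocks."""
    return xor_blocks(data_blocks)


def recover(surviving_blocks, parity):
    """Rebuild the single missing data block from the survivors and parity."""
    return xor_blocks(surviving_blocks + [parity])


# Hypothetical stripe of four equal-length data blocks.
data = [b"comp", b"utat", b"iona", b"l st"]
parity = make_parity(data)

# Simulate losing block 2, then rebuild it from the rest.
lost_index = 2
survivors = data[:lost_index] + data[lost_index + 1:]
rebuilt = recover(survivors, parity)
print("rebuilt block matches original:", rebuilt == data[lost_index])
```

A single parity block tolerates only one failure. Surviving several simultaneous failures needs more parity blocks and heavier arithmetic, which is the kind of work Nyriad hands to the GPU.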

According to Nyriad, the GPUs can be used concurrently for other workloads such as machine learning and blockchain calculations.

Nyriad partners include Boston Limited, which has an NSULATE-based system that also uses NVDIMMs, and ThinkParQ, which has developed a storage server that incorporates NSULATE. Oregon State University uses that system for computational biology work.

Overall take

Computational storage is an emerging technology, but it will become commonplace in one form or another, 451 Research analyst Tim Stammers forecasts.

One reason for this is that new workloads using machine learning and analytics require faster access to data than conventional storage systems can provide, even those entirely based on flash. Computational storage looks to be one answer to this, according to 451, and its benefits could be amplified further by using it in combination with storage-class memories (SCM).