WekaIO has devised a “production-ready” framework to help artificial intelligence installations speed up their storage data transfers. The basic deal is that WekaIO supports Nvidia’s GPUDirect Storage with its NVMe file storage. Weka says its solution can deliver 73 GB/sec of bandwidth to a single GPU client.

The Weka AI framework comprises customisable reference architectures and software development kits, centred on Nvidia GPUs, Mellanox networking, Supermicro servers (other server and storage hardware vendors are also supported) and Weka Matrix parallel file system software.

Paresh Kharya, director of product management for accelerated computing at Nvidia, said: “End-to-end application performance for AI requires feeding high-performance Nvidia GPUs with a high-throughput data pipeline. Weka AI leverages GPUDirect storage to provide a direct path between storage and GPUs, eliminating I/O bottlenecks for data intensive AI applications.”

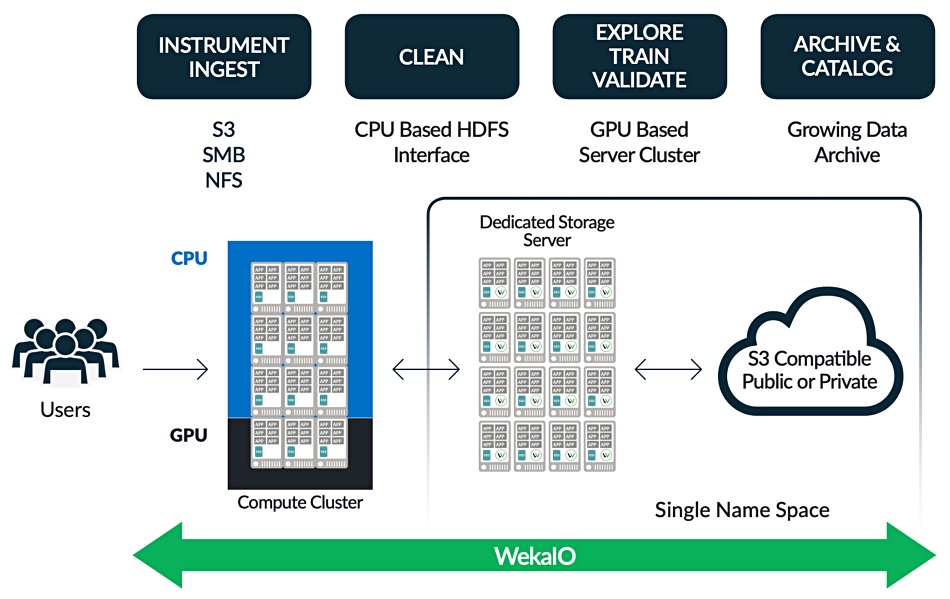

Nvidia and Weka say AI data pipelines have a sequence of stages with distinct storage I/O requirements: massive bandwidth for ingest and training; mixed read/write handling for extract, transform, and load (ETL); and low latency for inference. They argue that a single namespace is needed to give visibility across the entire data pipeline.

Weka AI bundles are available now through Weka’s channel partners. You can check out a Weka AI white paper (registration required).