Quobyte has developed v4 of its eponymous parallel file system software with an AI focus in mind.

Quobyte v4 adds cloud object storage, Arm support, and end-to-end observability. Previous point releases have introduced RDMA, using Infiniband, Omni-Path and RoCE, and a File Query Engine.

Co-founder and CEO Bjorn Kolbeck said: “AI is what HPC used to be – really the most demanding workload that drives the requirements for hardware and software.”

Arm support from Quobyte initially came out in 2018-2019, porting both its client and server software to Cavium Arm. Kolbeck told us: “Then AMD came out with the EPYC processors and no one wanted to talk about Arm anymore … And now they’ve changed completely with Apple Silicon and Nvidia completely switching to Arm. And then we see the AWS Graviton, Google, Ampere, so suddenly there’s significant investment in Arm.”

“We’ve revived our Arm support, updated it, and we both support the client and the server on Arms. You can mix and match. You can have x86 servers next to Arm servers or clients, whatever you like. It’s really completely transparent.”

The AI focus has not resulted in Quobyte supporting Nvidia’s GPUDirect protocol, with Kolbeck saying: “And I don’t know if we will because, how do I say this very diplomatically? GPUDirect is more or less a marketing point. It’s not really important for performance. One of the reasons is that the CPU is basically there just to shovel data into the GPUs. The other thing is that latency doesn’t matter in AI workloads.

“There’s still people touting that. But if you look, for example, at the MLPerf benchmark, it’s not about latency, it’s about consistent high performance in terms of rating the link and delivering enough data for the GPU. Latency is not really the key there. It’s really about this consistent high throughput across a large number of devices [and] RDMA is the key.”

He claimed: “You can use GPUDirect with NFS and then it’s just an emulation layer that does shovel it directly from the NFS current module to the GPU. But I don’t think you win much by that.”

How about GPUDirect for objects? Kolbeck opined: “That is even more weird. I think that is just a translation layer as well, because your bottleneck is the HTTP request and the expensive parsing of the protocol. So if you look at how much CPU or overhead you create from that, so when you send HTTP requests, you’re parsing text. It’s very expensive compared to binary protocols. And this parsing needs to be done by the CPU. So in that case, the HTTP overhead is gigantic for parsing it and extracting the data and whatever. I don’t know what GPU Direct has there.”

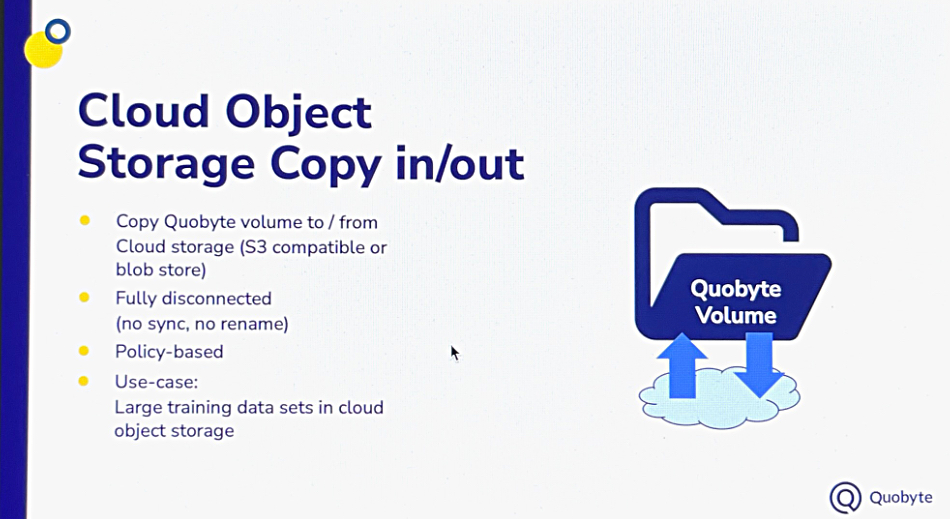

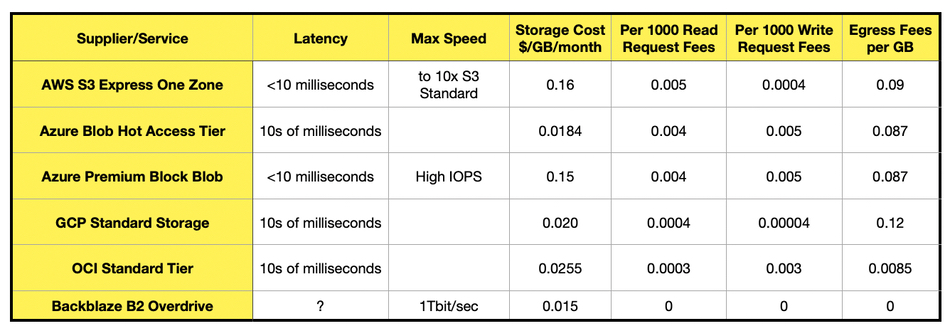

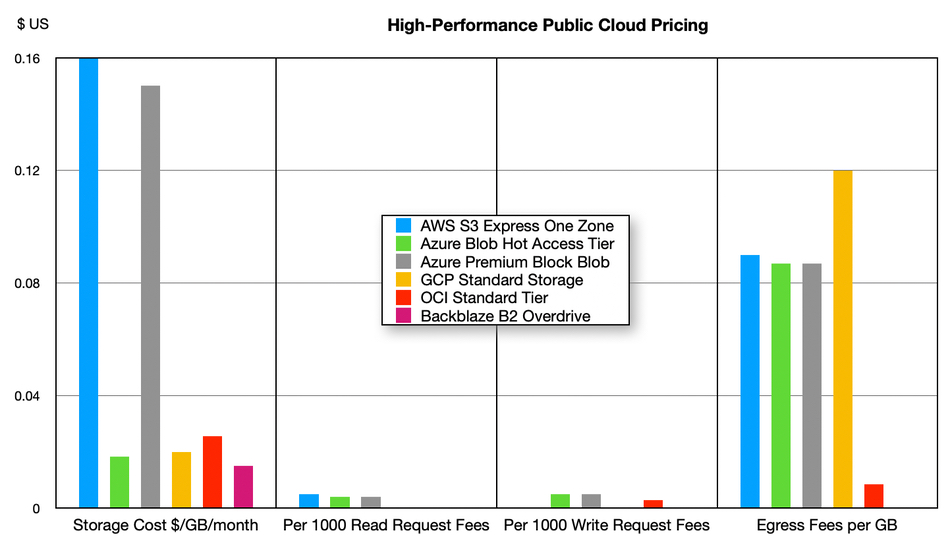

The Cloud Tiering means that you can store petabytes of data more cost-efficiently. With AI workloads like training, “we’re talking about tens of petabytes and the cost factor is just insane on the cloud. So this is why we decided to integrate the tiering both to S3 but also Azure Blob store. Pretty straightforward, typical external tiering with the stub in the HPC world, we’ve known this for probably 30 years with all the pros and cons, but on the cloud it’s mandatory.”

Kolbeck said: ”We can tier to anything that speaks S3. So basically AWS, Google Cloud, and a long list. We also support the Microsoft Azure Blob store protocol, which is different.”

“I’m more excited about what we call copy-in, copy-out.” He talked about a scenario where “customers that use AI for the cloud;, they often have their entire dataset in S3 or some object store that’s cheaper because they started in the cloud. Suddenly they have tons of petabytes there and know how it’s locked in and is so hard to get off it. So what we offer here is the ability to say, ‘I have a Quobyte cluster and this data set – create a volume from it that I can access with high performance for the training phase. Now work on this data set. And then, once I’m done, throw it away.’ So the copy-in basically allows you to say, ‘Transform this bucket or this part of the bucket and this and this and this into a volume that I can use for my high performance training.’”

“You can also copy-out from Quobyte. It works in both directions; very convenient. And this was actually one of the features where we had customer input. Because we have customers doing this kind of training with a mix of on-prem and in the cloud, and that was a common request.”

He added: ”We are not crippling our customers here. They can choose between the tiering or the copy-in and out; they have all the options there. So that’s basically a cloud part of v4 to make that cost-effective. And another thing here is [that] some of our customers use Lustre in the cloud [which] is not easy to use [with] its drivers, kernel drivers and so on. So this makes Quobyte a very easy replacement for those FSX for Lustre workloads.”

The end-to-end observability feature “was developed with a lot of input from our customers with large infrastructures. And one of the big problems that large infrastructures have is there’s always something that doesn’t work, right? There might be this or that which is problematic. It might be a networking issue or the … the application isn’t working properly.”

He talked about typical scenarios involving “a data scientist running training workload with PyTorch or … a typical HPC job” and says that the E2E observability empowers storage admin staff.

“The user says the storage is slow … basically pointing at the admins, and then the storage admins are like, ‘Oh, we have to find out what’s going on because management is on our backs.’ And with this end-to-end observability, we basically track the requests coming from the application on a profile basis and monitor it over the network down to the disk. So you basically have the whole path and back monitored because we have our native client. Not only does this alert admins when there is slowness, or the client cannot communicate properly with the network, there are network congestion problems, all those kind of things. It alerts them that an application is waiting for IO or for file system operations that aren’t completing. That now alerts them before the users say anything.”

“It’s a huge game changer for them. We have this in production with a few customers and the feedback has been overwhelming because that’s really a problem. Now they’re ahead, they can see the problem, they can tell the users there’s a networking issue or there’s something wrong with your client. Or even more important, they can look at the file and see that how many requests on flight, what was the IO depth, the maximum I/O depth on the file. And then you see that the application [is] requesting, I don’t know, four kilobytes at a time. And then you can tell them: ‘Well, it’s your application, not the storage.’”

“This completely turns the game around for them because now they know ahead of time. They can advise the users instead of reacting. They are proactive. And that’s a much nicer position to be in rather than finding a big fat finger pointing in your direction and having to react as best you can.”

Kolbeck said that, in v4, “we also did performance improvements for NVMe. Depending on the drive and the configuration, we get up to 50 percent better throughput with our new release. Part of it is that we optimized, for example, for the new EPYC 5 CPUs that have significantly more memory bandwidth; it doubled between f4 and f5, which is a game changer for storage.”

“Quobyte also has an interface where you can use Quobyte like an object store to access the file system … We built this all on our own, tightly integrated with the file system. Everything is mapped on the file system layer. We extended this with S3 versioning mapped onto file versions that you can access from the file system. So in Linux with relinks you can see different file versions that access them. The object lock in S3, when you set that, it’s stored as a file retention policy in Quobyte So you can see it on the file, you can modify it on the file and, if you set a retention policy, when you create a file, you can see there’s an object like S3.”

v4 has an integrated DNS Server. Kolbeck said: “The integrated DNS server makes it easier to do things like load balancing, high-availability for services that rely on IP addresses like S3. You need round robin across all available gateways for NFS. We have virtual IPs. Just configure a pool, point to Quobyte or point your DNS sub domain to Quobyte and then NFS so cluster name just points at the right interfaces.”

It also has single sign-on. “We built Quobyte as a solution that enables you to provide cloud-like services so that you, a storage admin, a storage group, and a company can act like a cloud provider. We have strong multi-tenancy built in with isolation, optional hardware isolation on, and our web interface and CLI are also multi-tenancy ready. So users can log in, create their own access keys, run file queries, and tenant admins can log in, create volumes, and so on.”

We asked Kolbeck for his views on DAOS. He said: “DAOS is first of all an object store; so not the file system semantics. It has a very different goal, being designed for what an HPC system was like 10, 15 years ago [with] burst buffers [and] super high performance.”

“The problem with the burst buffer is that there are only a handful of supercomputing centres at the very high end that can afford the high end infrastructure for buffering. … you need to put in substantial expensive hardware just for a burst buffer.”

As for Quobyte, “we wouldn’t go after this market because it’s in the high end in storage. It is always this kind of weird attitude for the super high performance … it’s not really for a larger market.”

“If we talk about AI now, no one cares about the lowest latency or the fastest … server. What you care about is that your farm of a thousand GPUs can have a massive pipe to read lots of data. So it’s a scale-out world.”

“What people forget is GPUs are very expensive. Data scientists are very expensive. The most important thing after delivering the performance [and] equally as important is availability and uptime. It’s the dirty secret of the supercomputers. If you look at a lot of them they don’t publish down times. It’s a dirty secret that the Lustre systems sometimes cause downtime where availability is less than 60 percent.”

“We all know the challenges of keeping Lustre up and you have to have downtime when you update kernel modules on the driver’s side and so on.”

“So with GPUs being really crazy expensive and … the power consumption is insane. So you have this energy bill for idle GPUs, you have the frustrated data scientists and it doesn’t matter that you have the fastest storage if your uptime isn’t great.”

Quobyte v4 is publicly available and in production. The v3 to v4 upgrade is non-disruptive.