Hyperscalers who use SQL processing units (SPUs) to offload x86 CPUs running analytics workloads could get a 100x or more acceleration of their jobs, enabling lower costs and more efficient CPU core use, says in-memory computing chip and hardware startup NeuroBlade.

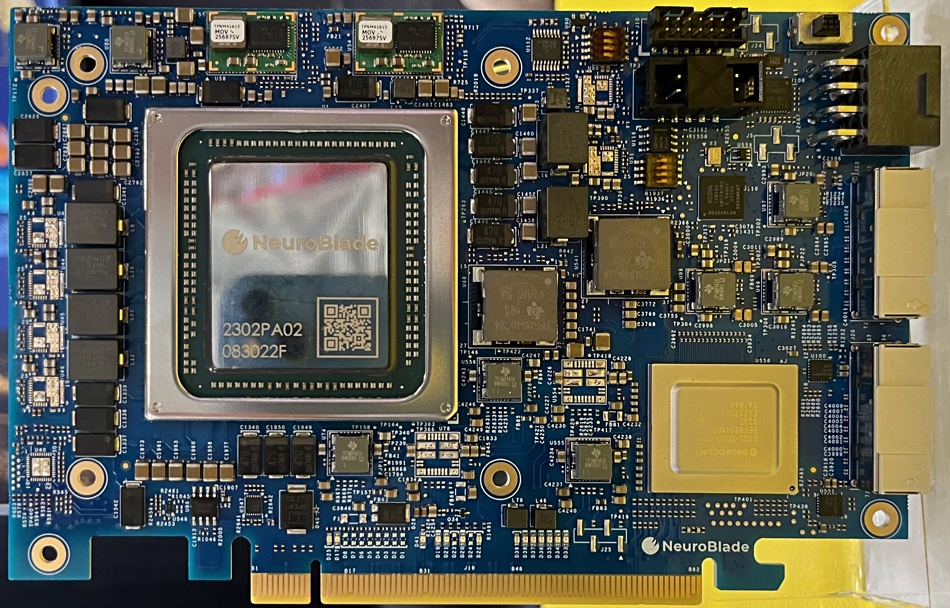

The startup has developed a specialized semiconductor chip to accelerate SQL instruction processing. It plugs into a host server’s PCIe bus and transparently takes over the SQL-related processing with no host application software changes. The device is emphatically not a DPU (data processing unit), which offloads general infrastructure processing, including security, networking and storage, from a host x86 server. Nor is it a SmartNIC that speeds up networking.

Lior Genzel Gal, NeuroBlade chief business officer, briefed us on the company’s hyperscaler focus at the Big Data London conference, where NeuroBlade had a stand. He said that NeuroBlade is talking to all the big hyperscalers and has won a contract for several thousand SPU cards with one of them.

The company has a partnership deal with Dell to distribute SPU card products in PowerEdge servers, and potential customers for these servers thronged the aisles and lecture theaters of what was a very popular event, with crowds queuing for talks and not a face mask to be seen.

Gal was CEO and co-founder of NVMe storage array startup Excelero, which was acquired by Nvidia in March last year. Gal left Excelero in September 2020 and worked on strategic business development at DPU developer arcastream, which was acquired by Kalray in 2022. He joined NeuroBlade as CBO in February 2021 and covered strategic business development for Kalray from April last year.

Gal says the Excelero tech accelerated storage, but that was not enough on its own to accelerate application workloads, which need end-to-end acceleration. DPUs can’t provide that because they work only at the infrastructure level. Accelerating SQL processing with a purpose-built processor enables end-to-end SQL analytics acceleration.

We asked about using the SPU for computational storage. It could be done, with a subset of the SPU’s firmware code running in an FPGA or Arm CPU inside a drive chassis, but the return on investment would not be great. The SPU is a parallel processing design, intended to speed queries looking at data spread across many, many drives and not just one. NeuroBlade has talked to drive manufacturers, such as Samsung, but decided not to focus on this market, Gal told us.

That spurred us to ask about deploying an SPU card inside a storage array, plugged into its controllers and operating on data spread across many drives. This is relevant to NeuroBlade but the payoff from hyperscaler sales would be much higher than that from deploying SPUs inside storage arrays.

Our understanding is that a hyperscaler sale could potentially involve tens of thousands of SPUs, whereas a storage array vendor ships hundreds or low thousands of units a year. NeuroBlade has talked to storage vendors such as VAST Data about SPU usage inside their arrays.

Gal said: “The path forward includes storage arrays but it is not our current focus.”

He reckons that NeuroBlade’s technology is unique and highly effective. Hyperscaler customers and others will be able to lower costs and release CPU cores for other work, saving millions of dollars a year.

Server x86 CPUs can be used for general application work. GPUs can be used for AI, while SPUs are for SQL-based analytics. “We are,” Gal said, “the Nvidia of data analytics.”