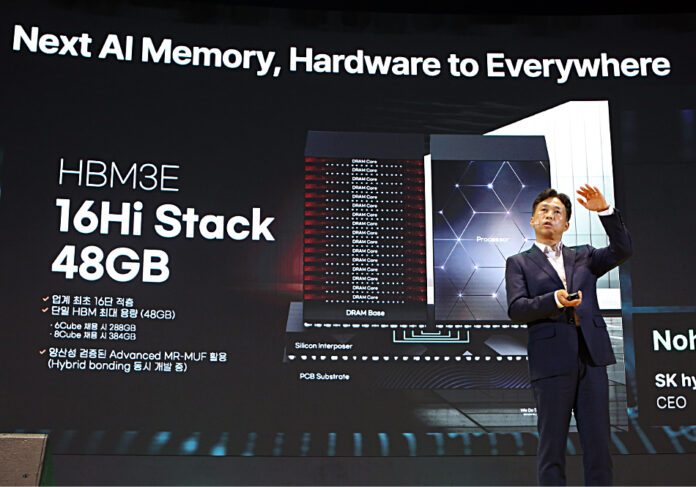

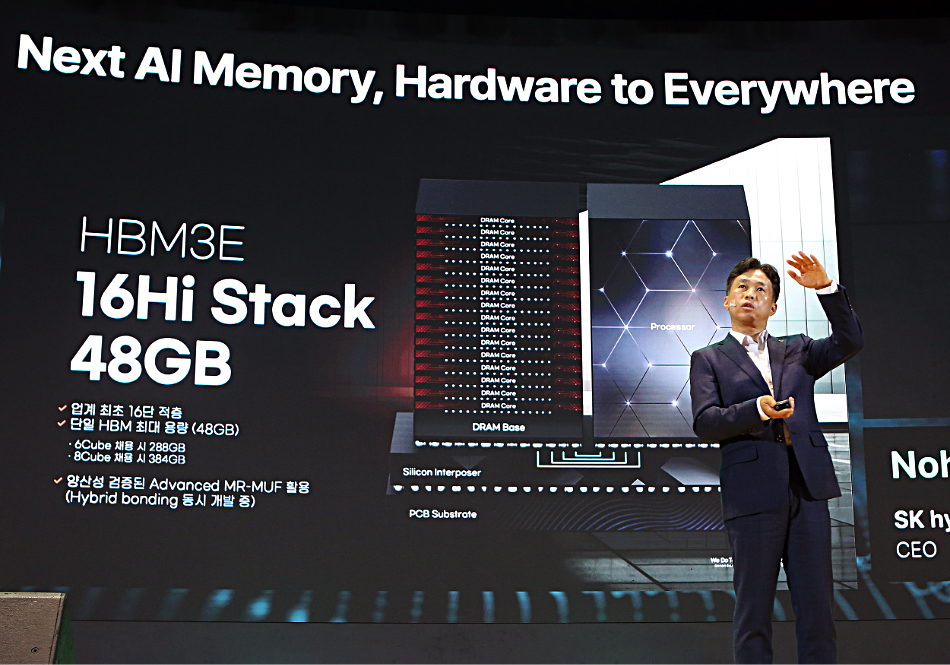

SK hynix has added another four layers to its 12-Hi HBM3e memory chips to increase capacity from 36 GB to 48 GB and is set to sample this 16-Hi product in 2025.
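The capacity figures imply a fixed per-die capacity. As a rough sketch of that arithmetic (it assumes every layer in the stack is an identical DRAM die, which the announcement does not state explicitly, and the function names are purely illustrative):

```python
def die_capacity_gb(total_gb: float, layers: int) -> float:
    """Capacity of one DRAM die, assuming all layers in the stack are identical."""
    return total_gb / layers


def stack_capacity_gb(die_gb: float, layers: int) -> float:
    """Total stack capacity for a given per-die capacity and layer count."""
    return die_gb * layers


# 12-Hi HBM3e: 36 GB across 12 layers
die_gb = die_capacity_gb(36, 12)
print(f"Per-die capacity: {die_gb:.0f} GB")

# 16-Hi HBM3e: the same die, four more layers
print(f"16-Hi stack capacity: {stack_capacity_gb(die_gb, 16):.0f} GB")
```

Under that assumption each die holds 3 GB, and four extra layers account for the additional 12 GB.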

Up until now, all HBM3e chips have had a maximum of 12 layers, with 16-layer HBM understood to be arriving with the HBM4 standard in the next year or two. The 16-Hi technology was revealed by SK hynix CEO Kwak Noh-Jung during a keynote speech at the SK AI Summit in Seoul.

High Bandwidth Memory (HBM) stacks memory dice and connects them to a processor via an interposer rather than a socket, which increases memory-to-processor bandwidth. The latest generation of this standard is extended HBM3 (HBM3e).

The coming HBM4 standard is expected to run at 10-plus Gbps per pin versus HBM3e’s maximum of 9.2 Gbps per pin. This would mean a stack bandwidth of around 1.5 TBps compared to HBM3e’s 1.2-plus TBps. HBM4 will likely support higher capacities than HBM3e, possibly up to 64 GB, and also have lower latency. A report says Nvidia’s Jensen Huang has asked SK hynix to deliver HBM4 chips six months earlier than planned. SK Group Chairman Chey Tae-won said the chips would be delivered in the second half of 2025.
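The link between per-pin speed and stack bandwidth can be sketched as follows. This is a back-of-the-envelope calculation, not a vendor formula: it assumes a 1,024-bit data interface per HBM3e stack, a figure that does not appear in this article, and it ignores any protocol overhead. The function name and its default are illustrative only.

```python
def stack_bandwidth_gbytes_per_s(pin_rate_gbps: float,
                                 interface_width_bits: int = 1024) -> float:
    """Peak stack bandwidth in GB/s.

    pin_rate_gbps: data rate per pin in gigabits per second.
    interface_width_bits: number of data pins per stack (assumed 1,024 for HBM3e).
    """
    total_gbits_per_s = pin_rate_gbps * interface_width_bits
    return total_gbits_per_s / 8  # 8 bits per byte


# HBM3e at its quoted maximum of 9.2 Gbps per pin
hbm3e = stack_bandwidth_gbytes_per_s(9.2)
print(f"HBM3e peak: {hbm3e:.1f} GB/s (~{hbm3e / 1000:.2f} TB/s)")
```

With those assumptions the result comes out at roughly 1.2 TBps per stack, in line with the HBM3e figure quoted above. The HBM4 number is not computed here because its interface width is not given in the article.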

The 16-Hi HBM3e chips have generated performance improvements of 18 percent in GenAI training and 32 percent in inference against 12-Hi products, according to SK hynix’s in-house testing.

SK hynix’s 16-Hi product is fabricated using MR-MUF (mass reflow-molded underfill) technology. This combines a reflow step and a molding step: the chips are attached to circuits by melting the bumps between them, and the gaps between the chips and around the bumps are then filled with liquid epoxy molding compound (EMC). This increases stack durability and heat dissipation.

The SK hynix CEO spoke of more memory developments by the company:

- LPCAMM2 module for PCs

- 1c nm-based LPDDR5 and LPDDR6 memory

- PCIe gen 6 SSD

- High-capacity QLC enterprise SSD

- UFS 5.0 memory

- HBM4 chips with logic process on the base die

- CXL fabrics for external memory

- Processing near Memory (PNM), Processing in Memory (PIM), and Computational Storage product technologies

All of these are being developed by SK hynix in the face of what it sees as a serious and sustained increase in memory demand from AI workloads. We can expect its competitors, Samsung and Micron, to develop similar-capacity HBM3e technology.