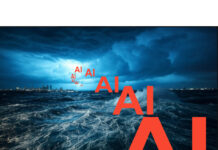

Box CEO Aaron Levie posted a chart tracking historical storage costs on X/Twitter.

He said: “When we started Box, the hard drives in our servers were ~80 GB, and we prayed that the economics would improve over time. Now, we’ll have hard drives with 50 TB in a couple years. A 600x improvement. Storage is becoming too cheap to meter.”
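As a rough check on the quoted figure, the capacity ratio can be worked out directly. This is a minimal sketch that assumes decimal units (1 TB = 1,000 GB), as drive vendors typically use; the function name is illustrative and not from the post.

```python
# Decimal storage units (assumption: vendor-style, powers of ten).
GB = 10**9
TB = 10**12


def capacity_ratio(new_bytes: int, old_bytes: int) -> float:
    """Return how many times larger the new capacity is than the old one."""
    return new_bytes / old_bytes


# ~80 GB drives then, 50 TB drives expected in a couple of years.
ratio = capacity_ratio(50 * TB, 80 * GB)
print(f"{ratio:.0f}x")  # 625x, which the quote rounds to 600x
```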

…

An X/Twitter post from semiconductor commentator @Jukanlosreve, known for accurate leaks of storage tech, claims that 3D DRAM could follow the path of 3D NAND, which regressed to older NAND process technology with coarser line widths than the then-current planar NAND. If so, Chinese fabber CXMT could potentially build 3D DRAM with its current DRAM process technology, even though it can no longer import the advanced lithography equipment covered by US export bans. @Jukanlosreve suggests “seeing China’s development as being blocked by US sanctions is too myopic.”

…

A Tom’s Hardware report says a 1 TB QLC Huawei eKitStore Xtreme 200E PCIe Gen 4 SSD is on sale in South Korea for $32.00. This is cheap: by comparison, a 1 TB Kioxia Exceria Plus Portable SSD external drive with a USB 3.2 Gen 2 interface is available on Amazon for $63.99.

…

IBM has published a 300-page PDF guide for Ceph, “IBM Storage Ceph for beginners.” It’s intended as an introduction to Ceph and IBM Storage Ceph in particular from installation to deployment of all unified services including block, file, and object, and is suited to help customers evaluate the benefits of IBM Storage Ceph in a test or POC environment. Access it here.

…

Taipei-based analyst Dan Nystedt posts on X/Twitter that Micron reckons the HBM market will grow strongly between now and 2030, increasing from $16 billion in 2024 to $64 billion in 2028 and reaching $100 billion by 2030. This means the 2030 HBM market would be larger than the entire DRAM industry (including HBM) in 2024. Micron HBM chip production at its fab in Hiroshima, Japan, will hit 25,000 wafers by the end of 2024, he said. Its Taiwan fabs, Fab 11 in Taoyuan and A3 in Taichung, are ramping up 1β process technology, and Micron is expected to increase its HBM wafer start capacity to 60,000 in 12 months’ time.
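To put the forecast in perspective, the implied compound annual growth rates can be derived from the three data points in the post. This is a back-of-the-envelope sketch, not Micron's own calculation; the `cagr` helper is hypothetical and assumes smooth year-over-year compounding.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a span of years."""
    return (end_value / start_value) ** (1 / years) - 1


# HBM market size in $ billions, as quoted in the post.
rate_2024_2028 = cagr(16, 64, 4)   # roughly 41% a year
rate_2028_2030 = cagr(64, 100, 2)  # 25% a year
rate_2024_2030 = cagr(16, 100, 6)  # roughly 36% a year

for label, rate in [
    ("2024-2028", rate_2024_2028),
    ("2028-2030", rate_2028_2030),
    ("2024-2030", rate_2024_2030),
]:
    print(f"{label}: {rate:.1%}")
```

On these numbers, the forecast implies growth slowing after 2028 rather than accelerating.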

…

Wedbush analyst Matt Bryson wrote about reports from the Korea Herald claiming Samsung may have picked Micron to be the primary supplier of LPDDR5 memory for the Galaxy S25 in lieu of Samsung’s own DRAM. “If accurate, the shift of Samsung’s handset division away from internal memory supply would be another damaging statement regarding the current state of Samsung DRAM production, albeit in line with concerns we have been encountering through 2024 with both DDR5 and HBM products.”

…

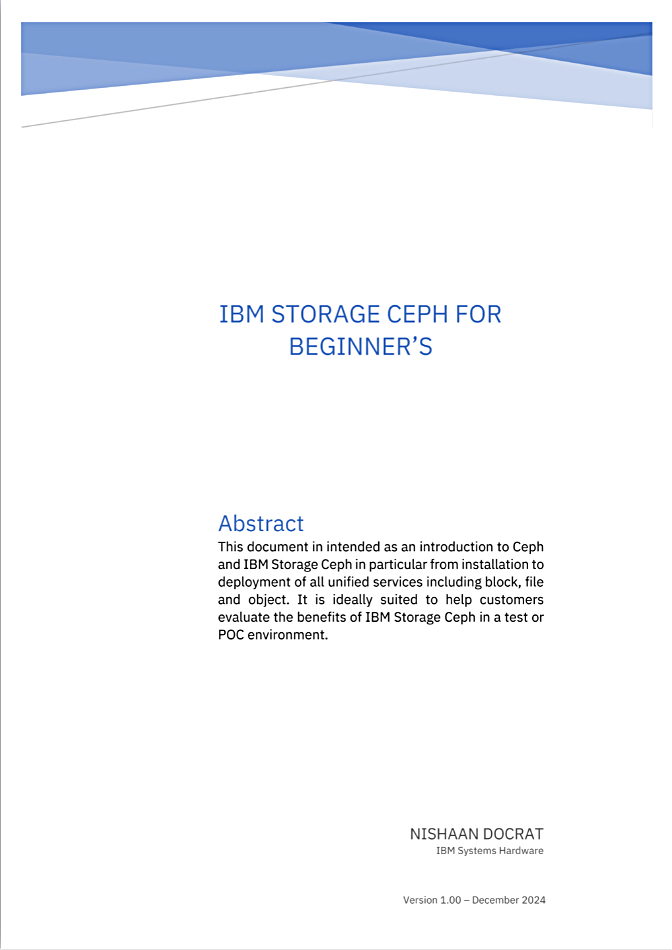

NeuroBlade’s SQL analytics acceleration is now accessible via Amazon Elastic Compute Cloud (EC2) F2 instances. By using AMD FPGAs and EPYC CPUs, “this integration brings blazing-fast query performance, reduced costs, and unmatched efficiency to AWS customers.”

The card integrates with popular open source query engines like Presto and Apache Spark, delivering “market-leading query throughput efficiency (QpH/$).” NeuroBlade also provides reference integrations of its accelerator technology with Prestissimo (Presto + Velox) and Apache Spark. These setups enable customers to run industry-standard benchmarks such as TPC-H and TPC-DS or test their workloads with their own datasets. This facilitates apples-to-apples comparisons between accelerated and non-accelerated implementations on the same cloud infrastructure. It should demonstrate “the performance gains and cost efficiencies of NeuroBlade’s Acceleration technology in comparison to state of the art, native vectorized processing on the CPU.”
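For readers who want to run such a comparison themselves, one plausible way to compute a throughput-per-dollar figure is sketched below. NeuroBlade does not define QpH/$ in the announcement, so the formula (queries per hour divided by the hourly instance price) is an assumption, and every number is hypothetical rather than a measured result.

```python
def queries_per_hour(num_queries: int, elapsed_seconds: float) -> float:
    """Throughput of a benchmark run, in queries per hour."""
    return num_queries * 3600.0 / elapsed_seconds


def qph_per_dollar(num_queries: int, elapsed_seconds: float,
                   price_per_hour: float) -> float:
    """Assumed definition: queries per hour divided by instance $/hour."""
    return queries_per_hour(num_queries, elapsed_seconds) / price_per_hour


# Hypothetical runs of the 22 TPC-H queries; times and prices are made up.
baseline = qph_per_dollar(22, elapsed_seconds=1800.0, price_per_hour=4.0)
accelerated = qph_per_dollar(22, elapsed_seconds=600.0, price_per_hour=6.0)

print(f"baseline:    {baseline:.1f} QpH/$")
print(f"accelerated: {accelerated:.1f} QpH/$")
print(f"ratio:       {accelerated / baseline:.1f}x")
```

Under this definition, a pricier instance still comes out ahead if it shortens the run by more than the price difference.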

…

Other World Computing (OWC) has appointed Matt Dargis as CRO reporting directly to OWC founder and CEO Larry O’Connor. Prior to joining OWC, Dargis served as SVP US Sales at ACCO Brands and VP of North America Sales at Kensington where he rebuilt the North America sales team and created a new go-to-market strategy and three-year plan to double sales while improving the bottom line.

Dargis said: “OWC is well positioned for rapid expansion and growth due to an obsessive commitment to product quality and its customers. My goal is to ensure our products are sold in all relevant commercial and consumer channels around the globe and that all customers can experience the OWC quality and brand commitment. I expect to immediately hire some new roles to double down on our efforts and support of the channel.”

…

OWC has launched a ThunderBlade X12 professional-grade RAID product, and the USB4 40 Gbps Active Optical Cable, for long-distance connectivity. It also announced GA of the Thunderbolt 5 Hub with “unparalleled connectivity.” We’re told that the ThunderBlade X12 features speeds up to 6,500 MBps – twice the performance of its predecessor – and capacities from 12 to 96 TB with RAID 0, 1, 5, and 10 configurations. It’s intended for workflows involving 8K RAW, 16K video, or VR production, and will be available in March.

The Active Optical Cable, with universal USB-C connectivity and optical fiber technology, provides up to 40 Gbps of stable bandwidth, up to 240 W of power delivery, and up to 8K video resolution at distances of up to 15 feet. It can connect to Thunderbolt 4/3 and USB 4/3/2 USB-C equipped docks, displays, eGPUs, PCIe expansions, external SSDs, RAID storage, and accessories.

The Thunderbolt 5 Hub can turn a single cable connection from your machine into three Thunderbolt 5 ports and one USB-A port. It has up to 80 Gbps of bi-directional data speed – up to 2x faster than Thunderbolt 4 and USB 4 – and up to 120 Gbps for higher display bandwidth. It’s generally available for $189.99.

…

OWC and Hedge announced an alliance whereby every OWC Archive Pro purchase will now include a license for Hedge’s Canister software, for macOS and Windows, supporting streamlined Linear Tape-Open (LTO) backups – a $399 value at no additional cost.

…

The US Department of Commerce awarded Samsung up to $4.745 billion in direct funding under the CHIPS Incentives Program’s Funding Opportunity for Commercial Fabrication Facilities. This follows the previously signed preliminary memorandum of terms, announced on April 15, 2024, and the completion of the department’s due diligence. The funding will support Samsung’s investment of over $37 billion in the coming years to turn its existing presence in central Texas into a comprehensive ecosystem for the development and production of leading-edge chips in the United States, including two new leading-edge logic fabs and an R&D fab in Taylor, as well as an expansion to the existing Austin facility.

…

SK hynix will unveil its “Full Stack AI Memory Provider” vision at CES 2025. It will showcase samples of HBM3E 16-layer products, Solidigm’s D5-P5336 122 TB SSD, and on-device AI products such as LPCAMM2 and ZUFS 4.0. It will also present CXL and PIM (Processing in Memory) technologies, along with modularized versions, CMM (CXL Memory Module)-Ax and AiMX, designed to be core infrastructures for next-generation datacenters. CMM-Ax adds computational functionality to CXL. AiMX is SK hynix’s Accelerator-in-Memory card product that specializes in large language models using GDDR6-AiM chips.

Referring to Solidigm’s 122 TB SSD, Ahn Hyun, CDO at SK hynix, said: “As SK hynix succeeded in developing QLC (Quadruple Level Cell)-based 61 TB products in December, we expect to maximize synergy based on a balanced portfolio between the two companies in the high-capacity eSSD market.”

…

Wedbush analyst Matt Bryson wrote to subscribers about reports that SK hynix and Samsung are slowing down development of new NAND technology (e.g. 10th gen and beyond), and taking older generation facilities offline to convert production to newer processes (8th and 9th generation output), effectively moderating NAND output in the near term. Bryson said NAND market conditions are soft but should recover over the next few quarters.

…

Cloud data warehouser Snowflake has released Arctic Embed L 2.0 and Arctic Embed M 2.0, new versions of its frontier embedding models, which now allow multilingual search “without sacrificing English performance or scalability.” In this Arctic Embed 2.0 release, there are two variants available for public usage: a medium variant focused on inference efficiency built on top of Alibaba’s GTE-multilingual with 305 million parameters (of which 113 million are non-embedding parameters), and a large variant focused on retrieval quality built on top of a long-context variation of Facebook’s XLM-R Large, which has 568 million parameters (of which 303 million are non-embedding parameters). Both sizes support a context length of up to 8,192 tokens. They deliver top-tier performance in non-English languages, such as German, Spanish, and French, while also outscoring their English-only predecessor Arctic Embed M 1.5 at English-language retrieval.

The chart shows single-vector dense retrieval performance of open source multilingual embedding models with fewer than 1 billion parameters. Scores are average nDCG@10 on MTEB Retrieval and the subset of CLEF (ELRA, 2006) covering English, French, Spanish, Italian, and German.
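For context on the metric, nDCG@10 rewards a retriever for placing relevant documents near the top of its first ten results. The sketch below uses linear gain and a log2 rank discount, which is one common convention; it is an illustration, not the MTEB or CLEF evaluation harness, and the function names are our own.

```python
import math


def dcg_at_k(relevances: list[float], k: int = 10) -> float:
    """Discounted cumulative gain over the top-k results (linear gain)."""
    return sum(
        rel / math.log2(rank + 1)
        for rank, rel in enumerate(relevances[:k], start=1)
    )


def ndcg_at_k(ranked: list[float], judged: list[float], k: int = 10) -> float:
    """nDCG@k: DCG of the returned ranking divided by the ideal DCG.

    ranked -- relevance grades of the returned documents, in rank order
    judged -- relevance grades of all judged documents for the query
    """
    ideal_dcg = dcg_at_k(sorted(judged, reverse=True), k)
    return dcg_at_k(ranked, k) / ideal_dcg if ideal_dcg > 0 else 0.0


# Two relevant documents exist; the system returns them at ranks 1 and 3.
score = ndcg_at_k(ranked=[1, 0, 1], judged=[1, 1])
print(f"nDCG@10 = {score:.3f}")
```

A benchmark score like the ones charted is then the average of this per-query value across all queries and datasets.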

…

Xinnor and NEC Deutschland GmbH have supplied over 4 PB of NVMe flash storage operated by the Lustre file system and using Xinnor’s xiRAID software, configured with RAID 6, to one of Germany’s top universities for AI research. The university’s AI research center required a storage system capable of handling the computational needs of over 20 machine learning research groups. We understand it is not the Technical University of Darmstadt (TU Darmstadt), which has more than 20 research groups focused on machine learning, artificial intelligence, and related fields. The deployment includes a dual-site infrastructure:

- First Location: 1.7 PB of storage with NDR 200 connectivity, supporting 15 x Nvidia DGX H100 supercomputers.

- Second Location: 2.8 PB of storage with 100 Gbps connectivity, supporting 28 nodes with 8 x Nvidia GeForce RTX 2080Ti each and 40 nodes with 8 x Nvidia A100 each.
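The two sites can be tallied to check the "over 4 PB" headline figure and to gauge how many GPUs the storage has to feed. This is a quick sketch using the numbers above; the figure of eight GPUs per DGX H100 comes from Nvidia's published system specification rather than from Xinnor, and the variable names are illustrative.

```python
# Capacity per site in TB (1 PB = 1,000 TB), kept as integers.
site_capacity_tb = {"first": 1_700, "second": 2_800}

# (node count, GPUs per node) for each GPU type across both sites.
# Assumption: a DGX H100 system holds 8 H100 GPUs (Nvidia spec).
gpu_nodes = {
    "H100": (15, 8),
    "RTX 2080Ti": (28, 8),
    "A100": (40, 8),
}

total_pb = sum(site_capacity_tb.values()) / 1_000
gpus_by_type = {name: n * per for name, (n, per) in gpu_nodes.items()}
total_gpus = sum(gpus_by_type.values())

print(f"Total capacity: {total_pb} PB")
print(f"GPUs by type: {gpus_by_type}")
print(f"Total GPUs: {total_gpus}")
```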

The center’s previous setup consisted of five servers connected to a 48-bay JBOF with 1.92 TB SATA SSDs from Samsung, protected by Broadcom hardware RAID controllers, and the Lustre file system.

The AI center’s compute infrastructure consists of 15 x Nvidia DGX H100 supercomputers, 28 nodes with 8 x Nvidia GeForce RTX 2080Ti each, and 40 nodes with 8 x Nvidia A100 each. These compute nodes were previously often waiting for the storage subsystem, leading to a waste of compute resources, delays in the project’s execution, and a reduced number of projects that the AI center could handle.

The deployment of Xinnor’s xiRAID+Lustre with the NVMe SSDs was up and running in a few hours and has boosted the center’s capabilities, we’re told by Xinnor. Specific models taking tens of minutes to load with the previous storage infrastructure are now available within a few seconds, the company added. The full case study is available here.