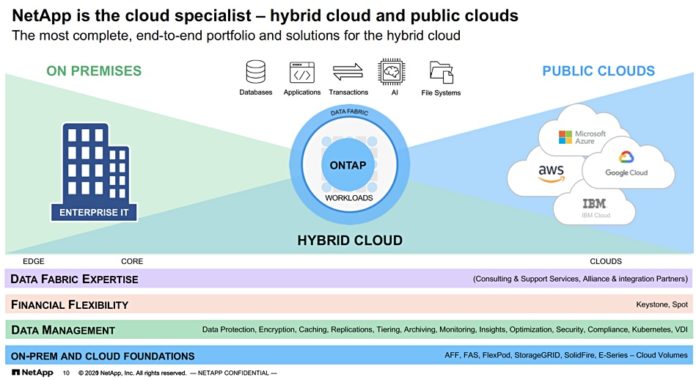

NetApp appears to be making hybrid cloud a core focus, having recently made a slew of product announcements covering core storage products, FlexPod converged infrastructure, Keystone subscriptions and many cloud management services.

It briefed Blocks and Files that its customers wanted to embrace the hybrid cloud by extending their IT capabilities to include the public clouds, and/or migrating workloads and data to them over time. It said users want a unified operational scheme, cloud-style flexible financial arrangements, a choice of where to deploy applications, and help from incumbent suppliers.

Adam Fore, NetApp’s senior director for portfolio marketing, told us: “Every customer has at least one workload in the public cloud. … Most customers expect to stay in the hybrid environment for the foreseeable future.”

NetApp talked up four groups of products:

- Core hardware and software: ONTAP v9.9, StorageGRID v11.5, FlexPod with Cisco Intersight,

- Subscription pricing: Keystone with Equinix,

- Cloud management with Astra, Backup, Data Sense, Manager, Insights and Tiering,

- Professional services and ActiveIQ.

Core hardware and software

ONTAP is the operating system for NetApp’s FAS (hybrid flash-disk) and AFF (all-flash) arrays.

V9.9 adds:

- Automatic backup and tiering of on-premises data to StorageGRID and public clouds,

- Better multilevel file security and remote access management,

- Continuous data availability for 2x larger MetroCluster configurations,

- More replication options for backup and disaster recovery (DR) of large NAS data containers,

- Up to 4x performance increase for single LUN applications such as VMware datastores.

How was the 4x performance gain achieved? A NetApp spokesperson said: “We converted the single-threaded LUN access in distributed SCSI architecture into a multi-threaded stack per LUN. This applies to single-LUN and low-LUN count situations where ONTAP will multi-thread reads/writes per LUN on AFF systems, AFF All SAN Arrays, and FAS systems.

“When the LUN count is greater than the number of active SCSI threads, then ONTAP returns to single thread per LUN. For the AFF A800, we measured random read performance of up to +400 [per cent] performance gain for a single LUN running on ONTAP 9.9 compared to ONTAP 9.8.”
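To make the spokesperson's description concrete, here is a toy Python model of the policy as described: a LUN can be served by several threads while there are spare SCSI threads, and the system falls back to one thread per LUN once LUNs outnumber active threads. NetApp has not published how ONTAP actually distributes its threads, so the function name `plan_lun_threads`, the idea of a fixed pool of active threads, and the even-split rule are all hypothetical assumptions made for illustration.

```python
def plan_lun_threads(lun_count: int, active_threads: int) -> list[int]:
    """Hypothetical model: return the number of threads serving each LUN.

    Not ONTAP code; a sketch of the behaviour described in the quote above.
    """
    if active_threads <= 0:
        raise ValueError("active_threads must be positive")
    if lun_count <= 0:
        return []
    if lun_count > active_threads:
        # Described fallback: more LUNs than active SCSI threads,
        # so each LUN's I/O is handled by a single thread.
        return [1] * lun_count
    # Assumed policy: share the threads as evenly as possible, giving
    # any leftover threads to the first LUNs in the list.
    base, extra = divmod(active_threads, lun_count)
    return [base + (1 if i < extra else 0) for i in range(lun_count)]


if __name__ == "__main__":
    print(plan_lun_threads(1, 8))   # single LUN: all 8 threads
    print(plan_lun_threads(3, 8))   # low LUN count: threads shared
    print(plan_lun_threads(10, 8))  # many LUNs: one thread each
```

In this model the single-LUN case (for example, one VMware datastore) gains the most parallelism, which matches the scenario where NetApp says the 4x improvement applies.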

An ONTAP blog has more detail.

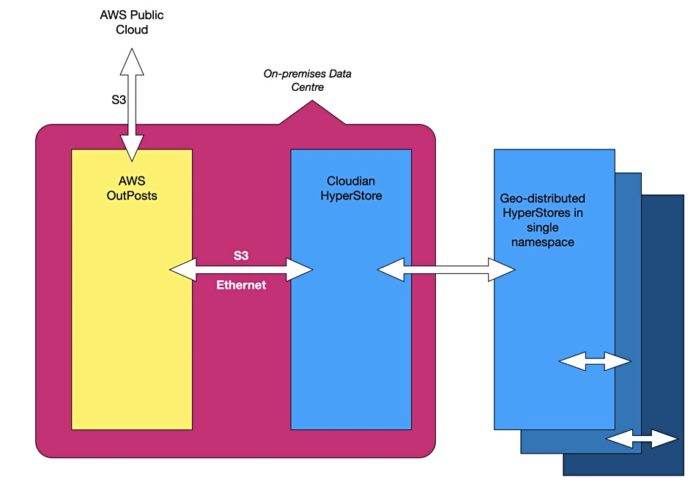

StorageGRID 11.5 delivers various incremental improvements: S3 Object Lock, support for KMIP encryption of data, usability improvements to information lifecycle management (ILM), a redesigned Tenant Manager user interface, support for decommissioning a StorageGRID site, and an appliance node clone procedure.

A new FlexPod (converged NetApp/Cisco infrastructure reference architecture) generation is coming this summer, using the Cisco Intersight (SaaS cloud operations management platform) integration announced in May. This provides full-stack monitoring, including ONTAP. New capabilities will be rolled out over several months, such as intelligent application placement across on-premises and cloud, automated hybrid cloud data workflows, and the ability to consume FlexPod as a fully managed, cloud-like service.

The system will provide automated firmware upgrades and configuration monitoring, workload profiling and guidance, and backup to the public cloud. Supported public clouds are AWS, Azure and GCP.

A FlexPod blog provides more information.

Keystone Equinix

NetApp is placing its arrays in Equinix co-location data centres, as Pure Storage and Seagate have done, and so providing storage services with fast connectivity to public cloud regional data centres.

The idea is that customers keep their normal ONTAP storage environment, with public cloud compute instances accessing data on the NetApp arrays as if it were local. NetApp claims data access latency of approximately 1ms, without the data having to be moved into the public clouds.

The kit can be paid for under NetApp’s Keystone Flex Subscription scheme, which provides a single contract, a single invoice, and support for both storage and colocation services through NetApp. The supported clouds are AWS, Azure and GCP, and NetApp claims customers can centralise hybrid cloud data management (protection, tiering, visibility and optimisation) across these clouds.

This NetApp Equinix facility is available in 21 Equinix IBX data centres located in 11 countries. Another NetApp blog provides background details.

Cloud management

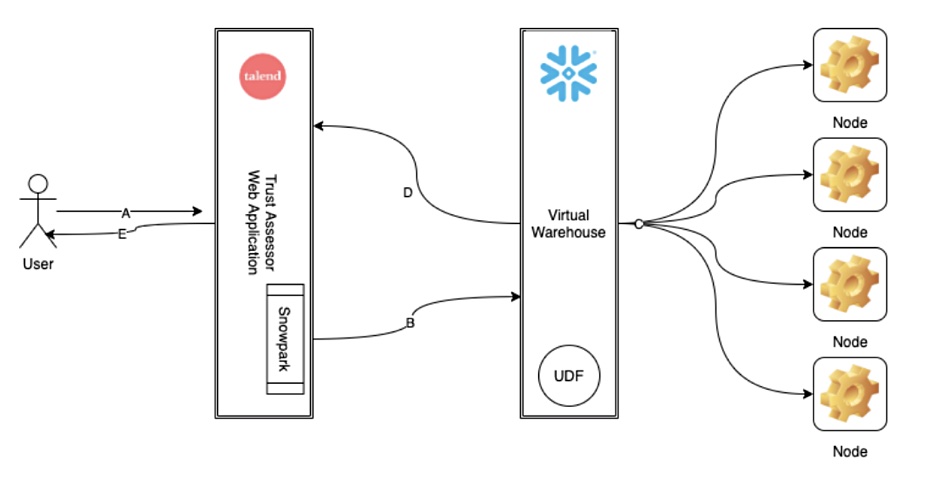

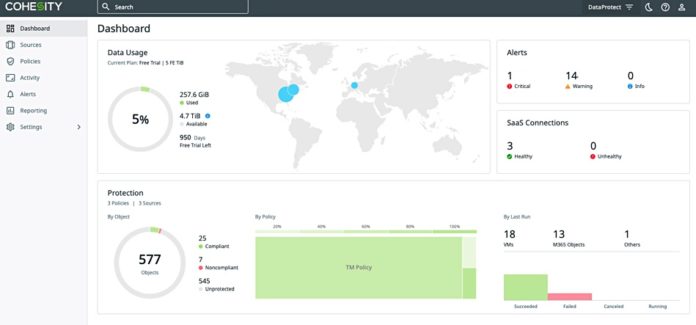

NetApp is providing a central Cloud Manager console through which six hybrid cloud services can be accessed:

- Cloud Volumes – ONTAP Cloud Volumes in AWS, Azure and GCP,

- Cloud Backup as a service for on-premises and in-cloud ONTAP data, with StorageGRID supported both as a source and a target,

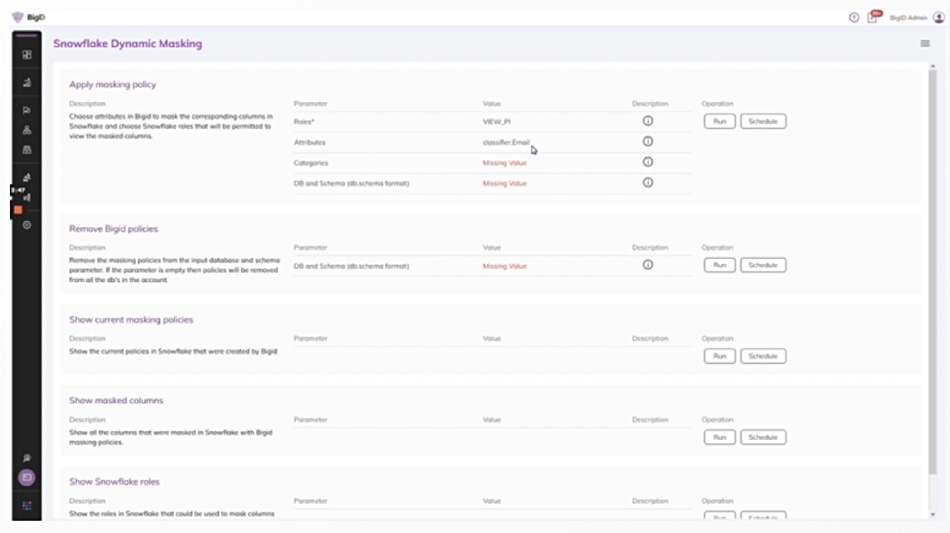

- Cloud Data Sense for data discovery, classification and governance in NetApp’s hybrid cloud,

- Cloud Insights to visualise and optimise hybrid cloud deployments,

- Cloud Tiering to move cold data to lower-cost storage, including on-premises StorageGRID,

- Astra, which now supports on-premises Kubernetes-orchestrated container workloads as well as the original in-cloud ones.

Astra has expanded support for ONTAP Cloud Volumes, and NetApp says a blog will be available to discuss the Astra enhancements.

Comment

Here NetApp is presenting itself as a storage specialist in hybrid and public clouds.

The company has always supplied external, shared data storage: first file, then block, and then object. It has not historically been a compute supplier, albeit with one diversion into disaggregated HCI via Supermicro servers.

NetApp is effectively betting that its customers will continue to treat compute and storage separately, and is building a hybrid cloud, cloud-operating model and Kubernetes-handling infrastructure on top of its core storage HW/SW systems base. It is building hybrid cloud control and data planes, which feed data to on-premises and in-cloud application compute instances.

These planes also optimise data placement, protection, security and cost, and they represent a bet-the-company strategy. It appears that NetApp thinks it cannot grow solely as an on-premises external storage system vendor. It sees its future in the hybrid cloud and has to treat the major public cloud players as partners, even though each one would prefer customers to use its own in-cloud storage facilities.

NetApp’s advantages over AWS, Azure, GCP and IBM Cloud include its multi-cloud, honest-broker approach and its years of storage software experience. It will need to ensure customers see it as a safer data storage bet than its public cloud partners.