Memory and NAND manufacturer SK hynix has demonstrated processing-in-memory (PIM) technology in a sample graphics memory chip.

Its GDDR6-AiM chip (AiM meaning Accelerator in Memory) adds computational functions to GDDR6 memory chips. GDDR6 is Graphics Double Data Rate 6 Random Access Memory, which transfers data at 16Gbit/sec per pin. GDDR, as opposed to DDR, was originally intended for use by GPUs. GDDR prioritises bandwidth over latency, whereas low latency is a main focus of DDR memory design.

The company says GDDR chips are among the most popular memory chips for AI and big data applications.

SK hynix says GDDR6-AiM paired with a CPU or GPU can run certain computations 16 times faster than the same CPU or GPU paired with typical DRAM. We’re told SK hynix’s PIM reduces data movement to the CPU or GPU, lowering power consumption by up to 80 per cent. The GDDR6-AiM chip runs on 1.25V, lower than the 1.35V operating voltage of SK hynix’s existing GDDR6 product.

SK hynix does not say which processing instructions have been added to the graphics memory or how the processing elements are distributed among the memory cells. This is in contrast to Samsung, which provided more information about its MRAM-based PIM technology in January. MRAM is faster than DRAM and uses less electricity in its operations.

An SK hynix-sponsored EE Times article by Dae-han Kwon PhD, project leader of custom design at SK hynix, published in October 2021, adds a lot of extra information.

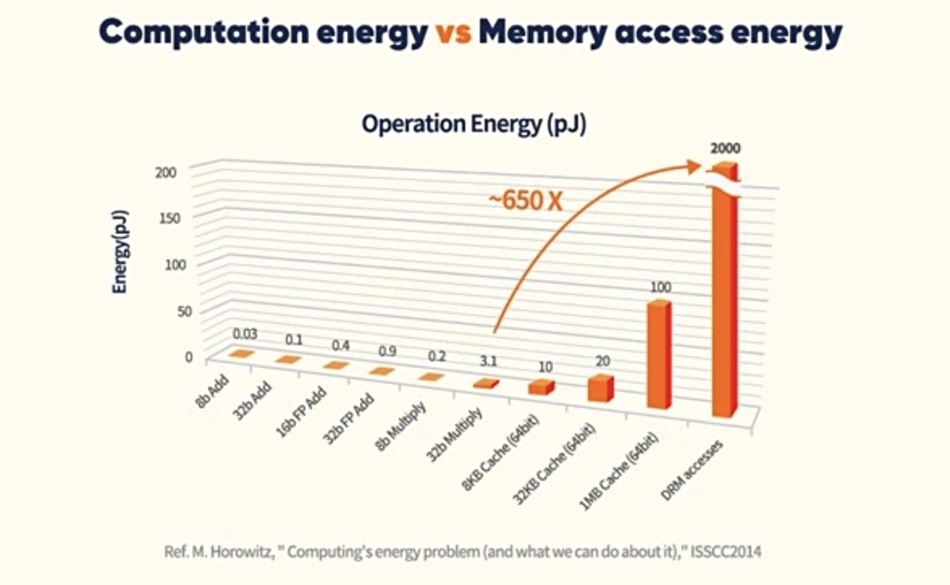

He shows a chart of the energy consumed by each element in CPU processing of data. Moving data from DRAM into the CPU cache consumes vastly more energy than any other operation shown:

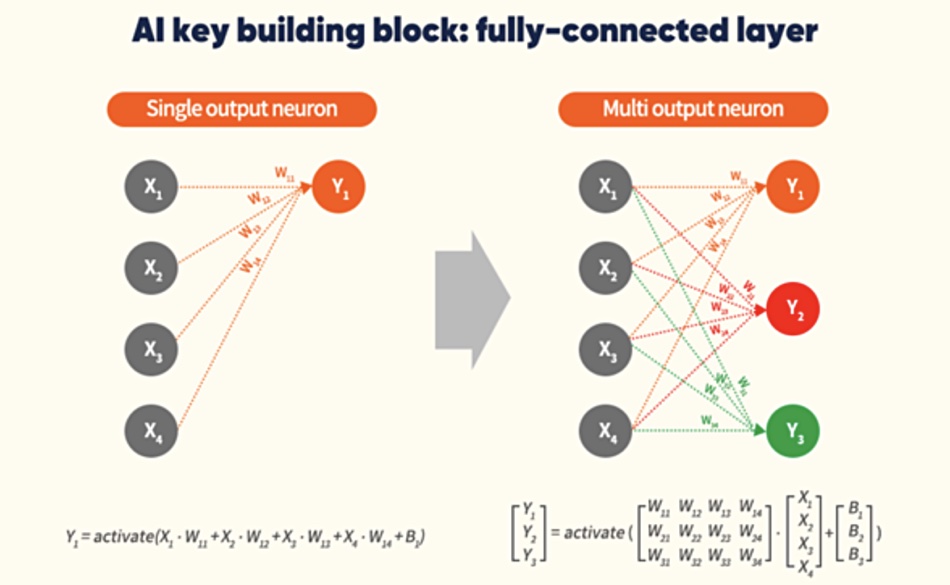

Then he discusses typical mathematical operations used in neural network processing, singling out the multiplication and addition of matrices when computing multi-output neuron operations.
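As a rough illustration of the matrix multiply-and-add pattern mentioned above, here is a minimal Python sketch of a layer with several output neurons. The function name `neuron_layer` and the sample values are hypothetical and chosen only for illustration; nothing here is taken from SK hynix's design or from the EE Times article.

```python
def neuron_layer(weights, inputs, biases):
    """Compute y = W x + b for a layer with several output neurons.

    weights: list of rows, one row of weights per output neuron
    inputs:  list of input activations
    biases:  list with one bias per output neuron
    """
    outputs = []
    for row, bias in zip(weights, biases):
        acc = bias
        for w, x in zip(row, inputs):
            acc += w * x  # one multiply-accumulate (MAC) step
        outputs.append(acc)
    return outputs


# Illustrative values only: two output neurons, three inputs.
W = [[1.0, 2.0, 3.0],
     [0.5, -1.0, 0.0]]
x = [2.0, 1.0, 0.0]
b = [0.0, 1.0]

y = neuron_layer(W, x, b)
print(y)
```

Every output neuron needs one weight per input, so the weight matrix is by far the largest operand, and in a conventional system it must be fetched from memory each time it is used.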

The author writes: “If circuits for these operations are added to the memory, data does not need to be transferred to the processor, and only results need to be processed in the memory and delivered to the processor.” This is very likely what SK hynix has done.

He continues: “For memory-bound applications such as RNN (Recurrent Neural Networks), significant improvements in performance and power efficiency are expected when the application is performed with a computational circuit in the DRAM. Considering that the amount of data to be processed will increase tremendously, PIM is expected to be a strong candidate to improve the performance limit of the current computer system.”
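The data-movement argument in these quotes can be made concrete with a toy model. The sketch below counts the bytes that cross the memory interface for one matrix-vector product under two assumptions: a conventional system, where the processor reads the weights and the input vector from memory, and a hypothetical PIM arrangement, where the weights stay in memory and only the input vector and the results cross the interface. The function `bytes_moved`, the 1,024 x 1,024 matrix size, and the 2-byte values are all assumptions for illustration; the model ignores caches, batching, and command overhead, and says nothing about how GDDR6-AiM is actually built.

```python
def bytes_moved(rows, cols, bytes_per_value=2, in_memory=False):
    """Bytes crossing the memory interface for one product y = W x (toy model)."""
    weight_bytes = rows * cols * bytes_per_value
    vector_bytes = cols * bytes_per_value
    result_bytes = rows * bytes_per_value
    if in_memory:
        # Hypothetical PIM: weights never leave the memory chip;
        # the input vector is sent in and the results are sent back.
        return vector_bytes + result_bytes
    # Conventional: the processor reads the weights and the input vector.
    return weight_bytes + vector_bytes


rows, cols = 1024, 1024  # assumed layer size, for illustration only

conventional = bytes_moved(rows, cols)
pim = bytes_moved(rows, cols, in_memory=True)

# Each weight takes part in one multiply and one add.
operations = 2 * rows * cols
ops_per_byte = operations / conventional

print(f"conventional: {conventional} bytes")
print(f"in-memory:    {pim} bytes")
print(f"operations per byte moved (conventional): {ops_per_byte:.2f}")
```

In this model the conventional case performs roughly one arithmetic operation per byte moved, which is the sense in which such workloads are called memory-bound: the processor spends most of its time and energy waiting on data rather than computing.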

SK hynix intends to exhibit its PIM development at the 2022 International Solid-State Circuits Conference (ISSCC) in San Francisco, 20–24 February. It hopes its GDDR6-AiM chips will be used in machine learning, high-performance computing, big data computation and storage applications.

Ahn Hyun, SK hynix head of solution development, who spearheaded the chip’s development, said: “SK hynix will build a new memory solution ecosystem using GDDR6-AiM.” The company plans to introduce an artificial neural network technology that combines GDDR6-AiM with AI chips in collaboration with SAPEON, an AI chip company recently spun off from SK Telecom. Ryu Soo-jung, CEO of SAPEON, said: “We aim to maximise efficiency in data calculation, costs, and energy use by combining technologies from the two companies.”