Lakehouse – A data lakehouse aims to combine the attributes of a data warehouse and a data lake, and is used for both business intelligence, like a data warehouse, and machine learning workloads, like a data lake. Databricks’ Delta Lake is an example. It has its own internal ETL functions, referencing internal metadata, to feed its data warehouse component.

Data Lake

Data Lake – A data lake combines a large amount of structured, semi-structured, and unstructured data. Reading the data generally means filtering it through a structure defined at read time, and can involve an ETL process to get the read data into a data warehouse. An S3 or other object store can be regarded as a data lake whose contents can be used by data scientists and for machine learning.

Data Warehouse

Data Warehouse – Real-time, current records of structured data are stored in a database. A data warehouse, such as Teradata, introduced in the late 1970s, stores older records in its structured data store with fixed schema for historical data analysis. Data is typically loaded into a data warehouse through an extract, transform and load (ETL) process applied to databases. Business intelligence users query the data, using structured query language (SQL), for example, and the warehouse code is designed for fast query processing.

Dell talks up the green datacenter

Dell has written a post on sustainability that swerves away from direct energy consumption numbers and says a comprehensive strategy is needed to create a green datacenter.

The blog by Travis Vigil, Dell’s SVP for Infrastructure Solutions Group Portfolio Management, follows VAST Data’s recent public claims that its all-flash Universal Storage filer uses less electricity than Dell’s PowerScale storage and PowerProtect backup.

Vigil says: “At Dell, we believe strongly that it’s every technology provider’s responsibility to prioritize going green and to deliver technology that will drive human progress.

“On occasion, we see our peers challenge our position on the sustainability features of one solution or another. While we welcome the increased focus on creating a sustainable future, we also know that looking at a single solution, or even one aspect of a solution, will not drive the results that are critically important to get to a green datacenter and more profitable bottom line.”

The blog cites International Energy Agency (IEA) estimates that in 2018, 1 percent of all electricity was used by datacenters and an IEA report warning a “tsunami of data could consume one-fifth of global electricity by 2025.”

Vigil says: “Creating a green datacenter requires a strategic, all-encompassing approach, inclusive of the right partners, to drive the desired results.”

He says Dell partners with “customers on reducing carbon footprint in the datacenter, factoring in how to optimize things like power, energy efficiency, cooling and thermals, rack space and performance per watt. And that’s just the hardware. We also focus on other important factors like datacenter design, hot/cold aisle containment, cooling methods and energy sources.”

Vigil adds: “When it comes to creating a green datacenter, the strategy must be comprehensive, and not with just a single solution, in mind. A photograph of trees can provide a façade of greenery but it lacks the depth and substance that exist within a real forest.”

He admits legacy hardware is a key contributor to high carbon footprints in the datacenter and says Dell is designing hardware to increase energy efficiency. It has reduced energy intensity in HCI products (VxRail and PowerFlex) by up to 83 percent since 2013. PowerEdge server efficiency has increased by 29 percent over the previous generation. PowerMax storage is 40 percent more efficient than the previous generation.

These comparisons are of energy intensity and not raw consumption, although they can mean reduced electricity consumption as well. For example, an all-flash PowerMax will use less electricity than an equivalent capacity all-disk array. But another example could be a 4,000W storage array with a 100TB capacity being replaced by an 8,000W array with a 1,000TB capacity. Electricity consumption has doubled but energy intensity has improved fivefold, from 40 watts/TB to 8 watts/TB (equivalently, from 0.025 to 0.125 TB per watt).
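The arithmetic in that hypothetical replacement can be checked with a short sketch. The function names are our own, invented for illustration; the power and capacity figures are the two example arrays described above, not measurements of any real product.

```python
def watts_per_tb(power_watts: float, capacity_tb: float) -> float:
    """Energy intensity as watts per terabyte (lower is better)."""
    return power_watts / capacity_tb


def tb_per_watt(power_watts: float, capacity_tb: float) -> float:
    """The inverse view: terabytes served per watt (higher is better)."""
    return capacity_tb / power_watts


# Hypothetical arrays from the example: (power in watts, capacity in TB)
old_array = (4_000, 100)
new_array = (8_000, 1_000)

old_intensity = watts_per_tb(*old_array)
new_intensity = watts_per_tb(*new_array)

# Raw consumption goes up...
print("Consumption ratio (new/old):", new_array[0] / old_array[0])
# ...while energy intensity comes down.
print("Watts/TB old vs new:", old_intensity, new_intensity)
print("Intensity improvement factor:", old_intensity / new_intensity)
print("TB/watt old vs new:", tb_per_watt(*old_array), tb_per_watt(*new_array))
```

The point of keeping both functions is that the same improvement reads as a falling number in watts/TB and a rising number in TB/watt, so a vendor claim is only meaningful once the unit is stated.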

Vigil says of PowerMax that energy intensity improvements come through the use of things like flash storage, data deduplication, and compression. These enable “customers to consolidate their hardware and save energy, reducing their physical footprint.” One such customer, Fresenius Medical Care, said: “PowerMax has allowed us to reduce our datacenter footprint by 50 percent, while decreasing power and cooling costs by more than 35 percent.”

Vigil mentions Dell’s OpenManage Enterprise (OME) Power Manager software, which enables customers to monitor and manage server power based on consumption and workload needs, as well as keep an eye on the thermal conditions. OME Power Manager provides:

- Monitoring and managing power and thermal consumption for individual servers and groups of servers

- Reporting to help meet sustainability goals:

  - CO2 emissions from the PowerEdge servers as they relate to usage

  - Idle servers

- Utilizing policies to set power caps at the rack or group level

- Utilizing enhanced visualization of datacenter racks with power and thermal readings

- Reporting power on virtual machine usage for a physical device

- Viewing and comparing server performance metrics

This could usefully be extended to other products in Dell’s portfolio, such as storage nodes in a cluster.

Vigil says: “We believe our advantage is that we not only work to lower the complete carbon footprint of each product in our portfolio, but that we also provide solutions for the entire ecosystem that contribute to the datacenter footprint.”

Bootnote

The term “energy intensity” is used to indicate the inefficiency of an economy or product. In economic terms it’s calculated as units of energy per unit of GDP. In product terms it’s calculated as units of energy per product output or activity, such as per TB or per IO.

Pure Storage defends sustainability credentials

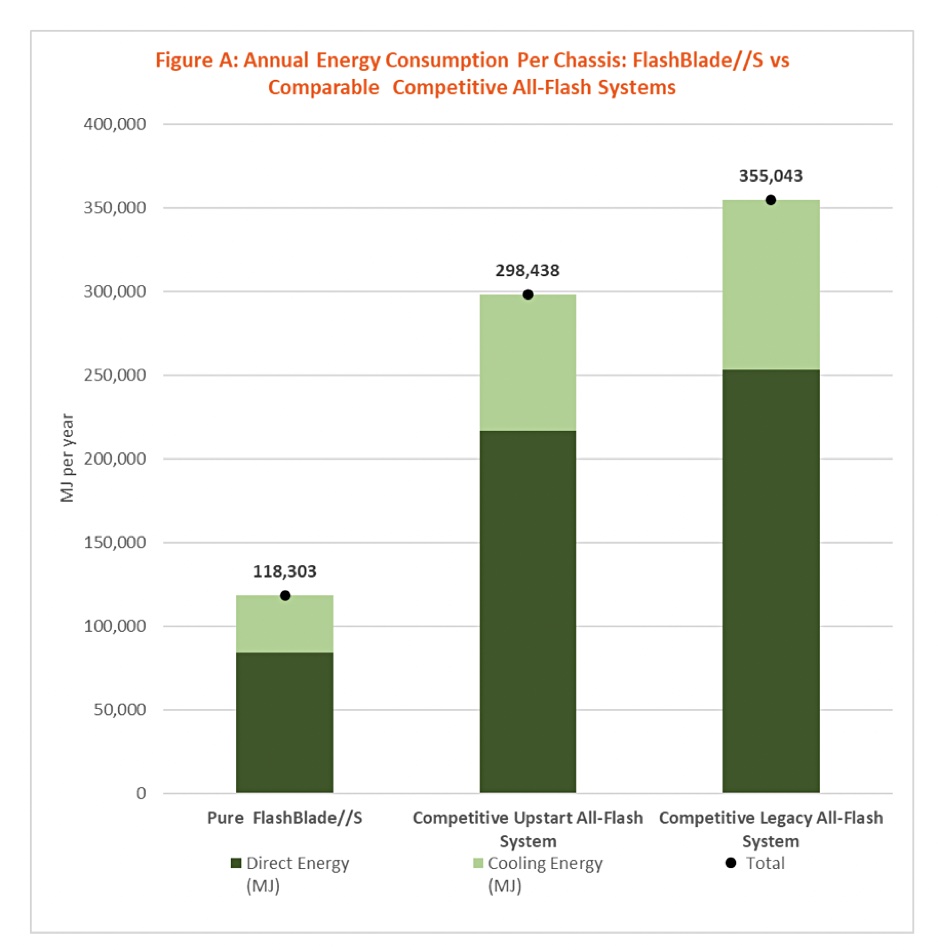

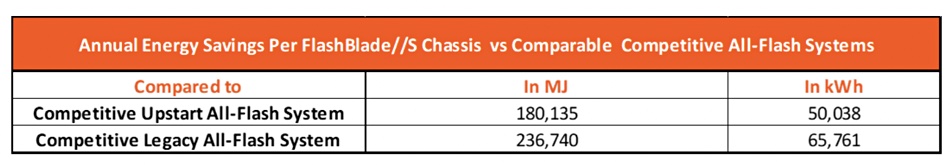

Pure Storage says its arrays are more efficient than legacy or upstart all-flash arrays so it has better sustainability credentials.

VAST Data earlier this month claimed its arrays were more efficient than those from Dell and Pure Storage. It said its products were better in terms of electricity usage and e-waste.

Pure Storage’s Biswajit Mishra, Senior Product Marketing Manager for FlashBlade, and FlashBlade Technical Evangelist Justin Emerson say: “Reducing the carbon footprint of storage systems while delivering on business’ digital transformation initiatives, and supporting the fight against climate change and pollution to make the world a better place for everyone are goals we should all get behind.”

Pure says that customers can dramatically reduce their energy use and environmental footprint.

It is providing a Pure1 Sustainability Assessment giving customers visibility of their environmental impact and suggesting optimization actions. The features include:

- Power savings analysis: Pure1 will show the power used compared to the appliance’s nominal power load. Customers can monitor power consumption efficiency for an entire fleet, by data center site, or at the individual array.

- Greenhouse gas emissions monitor: Pure1 provides direct carbon usage estimates based on power used.

- Assessment: Customers can use Pure1 to assess how to improve power efficiency by metering Watts per unit of data on the array that can be read back.

- Recommendations: Pure1 provides proactive insights and guidance on improving direct carbon footprint.

Pure makes a blanket claim that “because of unique design decisions – including Pure’s DirectFlash technology, built-for-flash software, always-on deep compression, and Evergreen architecture – both FlashBlade and FlashArray can achieve energy savings unlike any competitive storage systems on the market.”

Pure Storage has performed use phase sustainability analyses following the application of the third party-audited Life Cycle Assessments (LCA) formula across its portfolio of storage arrays. It says it delivers:

- 84.7 percent in direct energy savings vs. competitive all-flash for FlashArray//X

- 80 percent in direct energy savings vs. competitive all-flash for FlashArray//XL

- 75 percent in direct energy savings vs. competitive hybrid for FlashArray//C

- 67 percent in direct energy savings vs. competitive legacy all-flash for FlashBlade//S

- 60 percent in direct energy savings vs. competitive upstart all-flash for FlashBlade//S

VAST claims a 5.4PB configuration of its Universal Storage consumes 5,530 watts whereas an equivalent 3-chassis FlashBlade//S consumes 8,000 watts.

A Pure blog says FlashBlade//S delivers:

- 67 percent in energy and emission savings vs competitive legacy all-flash systems

- 60 percent in energy and emission savings vs competitive upstart all-flash systems

We can perhaps assume that the legacy all-flash system is Dell’s PowerStore and the upstart one is VAST Data’s Universal Storage. The blog provides a chart of energy consumption per chassis:

It also provides a table showing calculated annual energy savings:

This will give the marketeers at VAST and Dell something to get their teeth into.

Pure says it’s committed to a 3x reduction in direct carbon usage per petabyte by FY30. Read Pure’s inaugural ESG report here.

Comment

We think these sustainability marketing initiatives by suppliers are creditable but could go further. By making claims that don’t furnish direct comparisons with other named vendors and products, such suppliers could be accused of being defensive and striving for marketing advantage rather than helping the storage industry, its customers, and the IT industry generally produce more sustainable products for the good of us all.

As the Pure bloggers say: “Reducing the carbon footprint of storage systems while delivering on business’ digital transformation initiatives, and supporting the fight against climate change and pollution to make the world a better place for everyone are goals we should all get behind.”

The dictionary definition of sustainability is the ability of something to be maintained at a certain rate or level. The concept is used to convey the notion of an avoidance of the depletion of natural resources in order to maintain an ecological or environmental balance. Yet there is no precise definition of environmental sustainability and, therefore, no standard way of measuring it.

The general IT industry use of sustainability centers on combating global warming by reducing greenhouse gas emissions from a process or thing such as a datacenter, server or storage array. For example, a storage array’s electricity use (measured in watt-hours) can be converted into some measure of CO2 emissions.
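As a rough illustration of such a conversion, the sketch below turns an average power draw into annual energy and then into an emissions estimate. Everything specific here is an assumption made for the example: the function names are hypothetical, the 8,000W array is not a real product, and the grid emission factor is a placeholder that varies widely by country and energy source.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years

# ASSUMPTION: placeholder grid emission factor in kg CO2 per kWh.
# Real values depend on the local electricity mix.
ASSUMED_KG_CO2_PER_KWH = 0.4


def annual_energy_kwh(avg_power_watts: float) -> float:
    """Energy used in a year by a device running continuously."""
    return avg_power_watts * HOURS_PER_YEAR / 1000


def annual_co2_kg(avg_power_watts: float,
                  kg_co2_per_kwh: float = ASSUMED_KG_CO2_PER_KWH) -> float:
    """Estimated yearly emissions for a given grid emission factor."""
    return annual_energy_kwh(avg_power_watts) * kg_co2_per_kwh


# Hypothetical array drawing a steady 8,000 watts
energy = annual_energy_kwh(8_000)
print(f"Annual energy: {energy:,.0f} kWh")
print(f"Estimated emissions: {annual_co2_kg(8_000):,.0f} kg CO2")
```

Because the emission factor dominates the result, two vendors quoting CO2 figures for identical hardware could differ substantially simply by assuming different grids, which is one reason a shared benchmark would need to fix it.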

Blocks & Files thinks there is an opportunity here for an independent research and analysis house to produce named vendor and product sustainability comparisons, using, say, the LCA formula. In other words, we need a transparent industry standard sustainability benchmark.

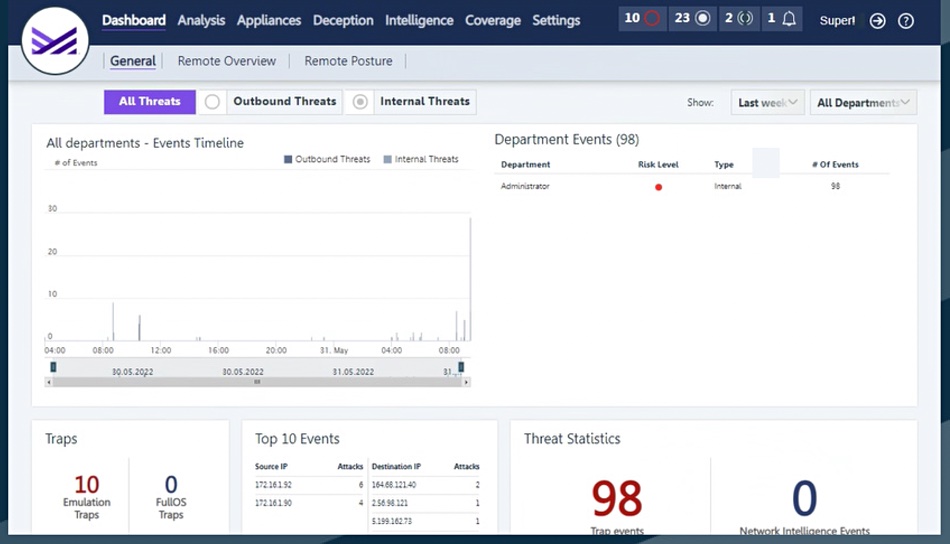

Commvault adds malware honeypots to Metallic

Commvault has added ThreatWise malware deception and detection technology to its Metallic SaaS product.

ThreatWise is based on TrapX software acquired by Commvault in January. The idea is to detect and proactively reject malware attacks before they can do damage. This is one half of a balanced malware response strategy, the other being data immutability and recoverability.

Commvault SVP for products Ranga Rajagopalan said: “Data recovery is important, but alone it’s not enough. Just a few hours with an undetected bad actor in your systems can be catastrophic. By integrating ThreatWise into the Metallic SaaS portfolio, we provide customers with a proactive, early warning system that bolsters their zero-loss strategy by intercepting a threat before it impacts their business.”

ThreatWise’s TrapX technology sets up honeypot virtual targets for attackers that lead them away from a customer’s real corporate assets. They are lured towards virtual assets that are actually pre-configured and specialized traps or threat sensors across on-premises and SaaS environments. Customers can fingerprint any highly specific assets and deploy a deceptive replica in minutes.

These traps help discover the attack origin and TTPs (Tactics, Techniques, and Procedures), trigger alerts, and enable faster responses. TrapX said its customers could rapidly isolate, fingerprint, and disable new zero-day attacks and APTs (Advanced Persistent Threats) in real-time.

Once initiated, TrapX’s scalable technology can deploy more than 500 unique traps per appliance in less than five minutes, Commvault said.

Commvault has also updated its general protection software’s security capabilities, extending its machine learning and threat detection. This has a Zero Loss Strategy featuring end-to-end data visibility, broad workload protection, and faster business response, built on a Zero Trust foundation. It has expanded its file anomaly framework to detect malicious applications that may evade traditional detection methods by posing as safe file types.

The updated software expands data governance capabilities to profile object storage for Azure, AWS, and GCP, helping to eliminate data leakage.

Metallic ThreatWise, along with Commvault’s latest platform update features, is available now. Read an Early Warning Threat Detection datasheet here.

TrapX was founded in 2012, raising around $50 million in four funding rounds. It has amassed a 300-plus worldwide customer count, with thousands of users.

Storage news ticker – September 21

Data catalog and data intelligence supplier Alation has announced a partnership with data integrator Fivetran that is intended to enable joint customers to find and understand the full context of their data. Fivetran’s tech is designed to let data and analytics teams securely move data from operational systems to analytics platforms. Alation is built to enhance visibility into the data to speed pipeline development. Their partnership provides shared customers with visibility into enterprise data as it moves through Fivetran-managed pipelines, the pair said. The partnership simplifies data consolidation and pipeline creation, while aggregating governed, reliable data so organizations can get more value from their data, the duo added.

…

Bluesky has formally launched its first product designed to provide more visibility into Snowflake workload usage and costs as well as actionable insights and workload specific recommendations for maximum optimization. Bluesky analyzes query workloads to detect similar groupings, using query patterns technology. By watching for similar query patterns, Bluesky said it can detect complex situations that simplistic visibility tools miss. It can suggest high-impact tuning options for valuable workloads, increasing efficiency, it added, while also looking out for clear savings hiding inside the noise of regular operations, such as long-running queries that fail repeatedly without providing any value. Bluesky also announced that it has raised $8.8 million in seed funding. The company was founded by Google and Uber data and machine learning tech leads.

…

SSD firmware developer Burlywood has hired Jay Bradley as its Chief Revenue Officer and VP of Customer Success. He will oversee global sales and marketing teams to ensure customer success and be responsible for managing all global sales activities spanning direct, OEM, and channel sales engagements. Most recently, Bradley was Director of OEM Business Development at Brocade Storage Networking. His experience also includes work at Micron Technology and LSI Corporation, where he worked in various engineering, product marketing, and business development roles over his more than 15 years of service.

…

CDS, a provider of multi-vendor services (MVS) for datacenters worldwide, today launched its new Data Center Modernization Assessment Service, aimed at helping organizations understand and realize their potential for cost savings as hardware refresh cycles become longer and modernization initiatives need funding. According to the Uptime Institute, 50 percent of all data center system refreshes occur after five years. More than 90 percent of systems in the average data center today are at least three years old. While server units outnumber storage arrays, storage is the largest area for savings because of its much higher acquisition cost, accounting for nearly 70 percent of the potential savings in hardware maintenance. MVS can save customers at least 20 percent on systems that are between three and four years old and 50 percent or greater on systems that are five years old or older, CDS claimed.

…

Object storage vendor Cloudian said it has enhanced its Managed Service Provider (MSP) program to help MSPs and Cloud Service Providers (CSPs) capitalize on new market opportunities by leveraging the company’s expanded object storage solutions portfolio and increased program benefits, including go-to-market and event support, joint business planning, and additional training. Cloudian has over 300 MSP/CSP customers around the world – including 30 added over the past year. To learn more about the Cloudian program, click here.

…

Druva has released a joint survey with IDC highlighting the unpreparedness of businesses against the threat of ransomware. The research, featured in Druva’s blog and an IDC white paper, shows that while 92 percent of respondents said their data resiliency tools were efficient or highly efficient, 67 percent of those hit by ransomware were forced to pay the ransom, and nearly 50 percent experienced data loss. Some 93 percent of respondents also claimed to be using automated tools to find the ideal recovery point yet the inability to find the correct recovery point was cited as the number one reason for data loss. For IDC’s report, click here.

…

Purpose-built backup appliance target ExaGrid has achieved a +81 Net Promoter Score (NPS). NPS measures the loyalty of customers to a company with scores measured with a single-question survey and reported with a number from the range -100 to +100. It’s a measure of whether current customers would recommend a supplier to a friend or colleague. ExaGrid claimed it offers the largest scale-out system in the industry – comprised of 32 EX84 appliances that can take in up to a 2.7PB full backup in a single system, which is 50 percent larger than any other solution with aggressive deduplication.

…

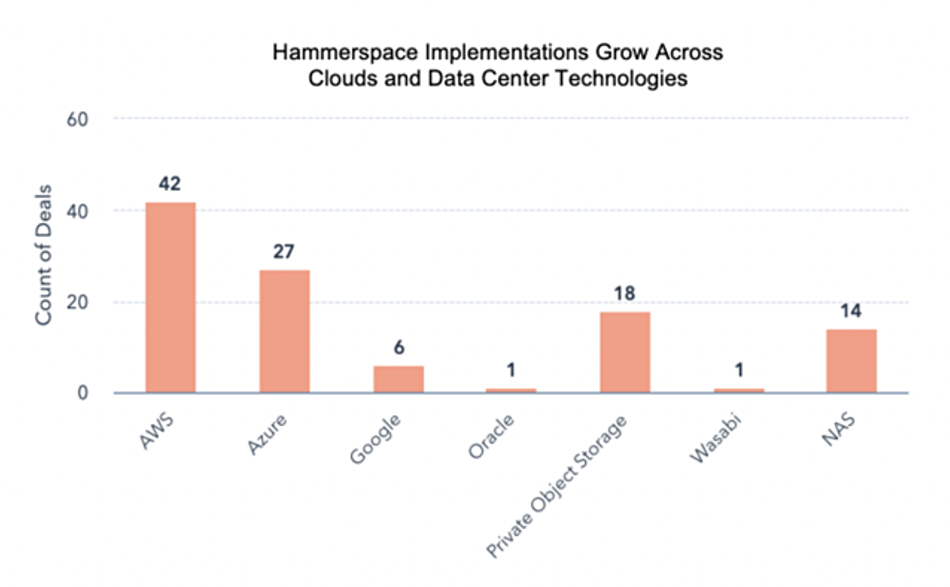

Global Data Environment software developer Hammerspace says it has more than 100 implementations, demonstrating “massive momentum across enterprise, finance, government, higher education, retail, and research organizations” in datacenters and public and private clouds. It distributed a chart showing this:

David Flynn, founder and CEO, said: “Adoption of the Hammerspace Global Data Environment is fueled by the ability to unify the user and application access to data across silos and to automate data placement at the point of decision or processing without interruption.”

…

The HPE GreenLake for Databases service was updated with expert-designed configurations of optimized infrastructure for leading databases such as SQL Server, Oracle, and EDB Postgres. HPE said Microsoft SQL Server customers will appreciate the lab-tested infrastructure proven to increase SQL Server performance. Performance testing of Microsoft SQL Server on the latest all-NVMe HPE Alletra 6000 storage vs previous-gen storage infrastructure showed a 19x write performance improvement, with latency reductions as high as 94 percent.

…

HPE has updated its SimpliVity HCI software to version 4.1.2 with dual disk resiliency, unified continuous hardware monitoring, and the ability to view VM copy locations more easily. Also, with SimpliVity InfoSight Server Integration, all the benefits that are available in HPE InfoSight for Servers will be available on SimpliVity infrastructure. The SimpliVity hardware will be continuously monitored in a unified manner, to help protect the SimpliVity infrastructure. When SimpliVity creates a secondary copy of data for added resilience, you can now view the virtual machine copy locations on the vCenter UI in a new tab.

…

Immuta has sponsored a report by IDC InfoBrief that highlights some challenges facing businesses as they attempt to ramp up their data-driven digital strategies. While as many as 90 percent of CEOs surveyed said they consider it crucial to have a digital-first strategy to achieve business value from their data, two thirds of European organizations admitted to having issues leveraging their data because they lack the right kind of data access governance. The report looks at the reasons for the bottleneck, highlighting how data access strategy across a business is an issue for 61 percent of organizations, with 14 percent citing it as a “critical challenge.”

…

Memory cached array provider Infinidat has added Italian distributor Computer Gross to its channel to boost its business in the country.

…

NVMe/TCP storage supplier Lightbits has been assigned a patent (11,442,658) covering a “system and method for selecting a write unit size for a block storage device.” Different SSDs behave better under different conditions depending on their model and internal architecture. A parameter affecting their behavior is the write unit size, i.e. the amount of data written to the SSD as one “chunk.” Using the methods described in this patent, Lightbits determines the optimal write unit size for a given SSD, writing to that SSD in a way that induces it to work as close as possible to its optimal working point. To read the patent abstracts and full detail, click here.

…

Greg Knieriemen, NetApp’s Director for Technology Evangelism, Influencer Relations and Community Engagement, has resigned for an opportunity he couldn’t turn down. We don’t know what it is yet.

…

Scality announced the results of an independent survey of IT decision makers across France, Germany, UK, and the US about their data sovereignty strategies. Some 98 percent of organizations already have policies in place or have plans to implement them. To achieve data sovereignty, 49 percent of IT decision makers are using hybrid cloud or regional cloud service providers as an alternative to the public cloud.

…

Backup and archive systems vendor Spectra Logic says its customer, Imperial War Museums (IWM), has won the 2022 IBC Innovation Award in Content Distribution for a “prodigious” project to build a digital asset management and storage ecosystem with multi-site implementation across datacenters in Cambridge and London. This project brought together software and hardware from multiple vendors including Spectra Logic, Axiell, and Veritas to enable IWM to collect and preserve real stories of modern war, conflicts, and their impact. The infrastructure includes Spectra T950 Tape Libraries; BlackPearl NAS with high-density drive capability allowing for expansion; BlackPearl object storage platform to provide migration capability between tape generations; and StorCycle software, enabling data migration from primary storage, saving IWM storage capacity and money.

…

Real-time data integration and streamer Striim has joined the Databricks Technology Partner Program. The Databricks Lakehouse Platform combines the elements of data lakes and data warehouses so users can unify their data, analytics, and AI, build on open source technology, and maintain a consistent platform across clouds. Striim’s integration enables enterprises to leverage the Databricks Lakehouse Platform while deriving insights in real time via Striim’s streaming capabilities.

…

Talend has released the results of its second annual Data Health Barometer, a survey conducted globally among nearly 900 independent data experts and leaders. It revealed that while a majority of respondents believe data is important, 97 percent face challenges in using data effectively and nearly half say it’s not easy to use data to drive business impact. The Data Health Barometer explores the disconnect between data and decision, which can impede enterprises and executives from supporting their strategic objectives through any economic conditions. Major findings from the survey include:

- Companies’ ability to manage data is worsening

- There is a data literacy skills gap

- Businesses are preparing for economic turbulence

- Data trust and data quality remain top challenges to using data effectively

…

Veeam Software has today announced the appointment of Rick Jackson as Chief Marketing Officer. He joins from Qlik, where he led the global marketing organization and was part of the leadership team that transformed Qlik into an end-to-end data and analytics SaaS company. Jackson also previously served as CMO at Rackspace and at VMware, where he helped drive the transformation from virtualization to cloud infrastructure.

…

Live data replicator WANdisco has signed its largest ever contract, valued at $25 million, with a top 10 global communications company. As a Commit-to-Consume contract, revenue will be recognized over time and has the potential to grow further as the customer’s data requirements grow. This is the fourth consecutive contract with the customer, following the $11.6 million order announced on June 28. The customer had previously used WANdisco’s solutions to migrate smart meter data from an on-premises Hadoop cluster to multiple cloud providers. This follow-on deal comes as the customer has seen a proliferation of smart meter data and has new Internet of Things (IoT) data needs in the automotive sector. The cumulative contracts from this customer now total $39.3 million during 2022, demonstrating the opportunity for WANdisco to land and expand deals using its Commit-to-Consume model.

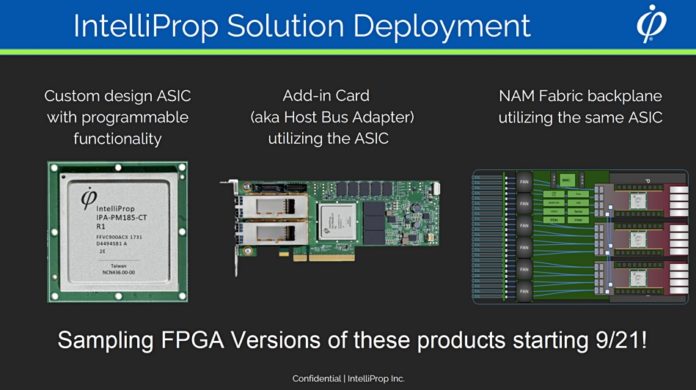

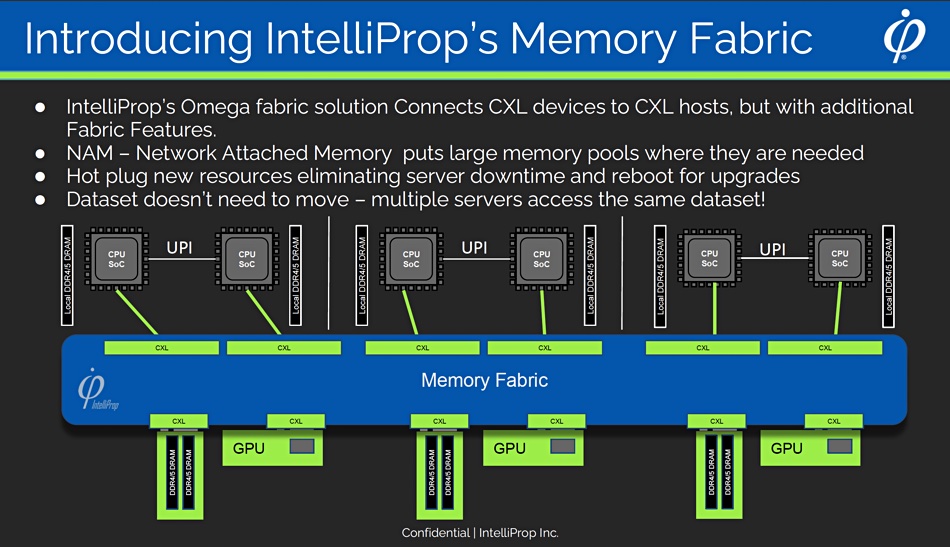

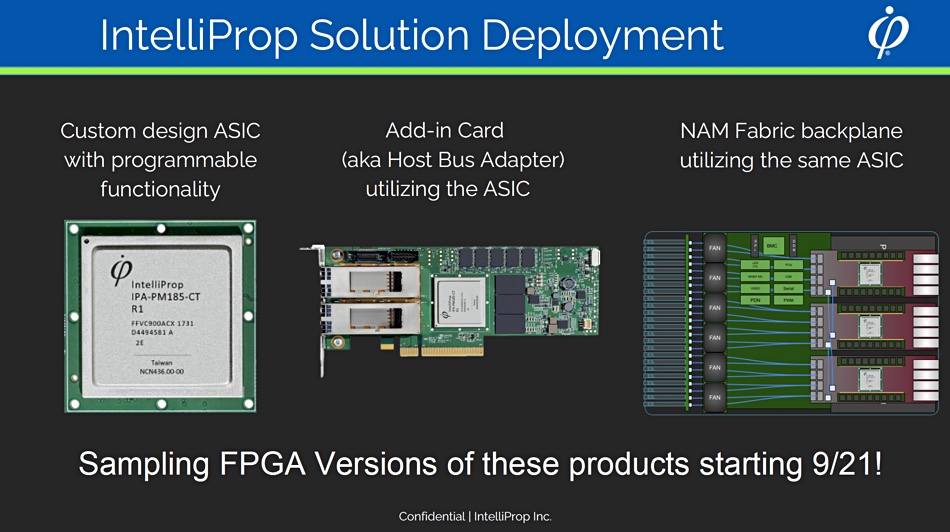

IntelliProp has chips to build CXL fabric

Startup IntelliProp has announced its Omega Memory Fabric chips so servers can share internal and external memory with CXL, going beyond CPU socket limits and speeding memory-bound datacenter apps. It has three field-programmable gate array (FPGA) products using the chips.

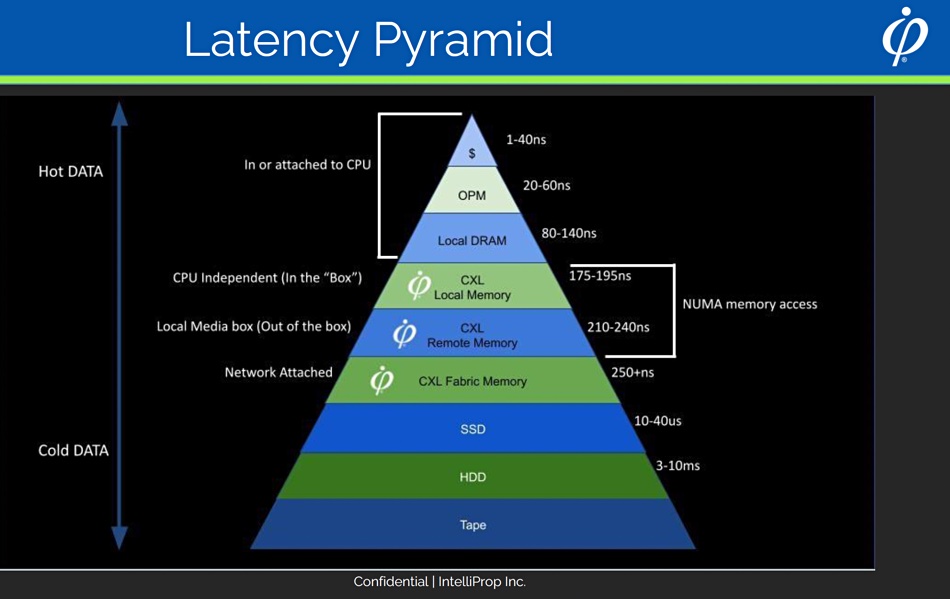

CXL will enable servers to use an expanded pool of memory, meaning applications can fit more of their code and data into memory to avoid time-consuming storage IO. They will need semiconductor devices to interface between and manage the CXL links running from server CPUs to external devices and IntelliProp is providing this plumbing.

IntelliProp CEO John Spiers said in a statement: “History tends to repeat itself. NAS and SAN evolved to solve the problems of over/under storage utilization, performance bottlenecks and stranded storage. The same issues are occurring with memory.

“For the first time, high-bandwidth, petabyte-level memory can be deployed for vast in-memory datasets, minimizing data movement, speeding computation and greatly improving utilization. We firmly believe IntelliProp’s technology will drive disruption and transformation in the datacenter, and we intend to lead the adoption of composable memory.”

IntelliProp says its Omega Memory Fabric allows for dynamic allocation and sharing of memory across compute domains both in and out of the server, delivering on the promise of Composable Disaggregated Infrastructure (CDI) and rack scale architecture. Omega Memory Fabric chips incorporate the Compute Express Link (CXL) standard, with IntelliProp’s Fabric Management Software and Network-Attached Memory (NAM) system.

Currently servers have a static amount of DRAM which is effectively stranded inside a server and not all of it used. A recent Carnegie Mellon/Microsoft report quoted Google stating that average DRAM utilization in its datacenters is 40 percent, and Microsoft Azure said that 25 percent of its server DRAM is idle and unused. That’s a waste of the dollars spent buying it.

Memory disaggregation from server CPUs increases memory utilization and reduces stranded or underutilized memory. It also means server CPUs can access more memory than they currently are able to use because the number of CPU sockets is no longer a limiting factor in memory capacity. The CXL standard offers low-overhead memory disaggregation and provides a platform to manage latency, which slower RDMA-based approaches cannot do.

The Omega chips are ASICs and IntelliProp says its memory fabric features include:

- Dynamic multi-pathing and allocation of memory

- End-to-end (E2E) security using AES-XTS 256 with the addition of integrity

- Support for non-tree topologies for peer-to-peer

- Direct path from GPU to memory

- Management scaling for large deployments using multi-fabrics/subnets and distributed managers

- Direct memory access (DMA) allows data movement between memory tiers efficiently and without locking up CPU cores

- Memory agnostic and up to 10x faster than RDMA

The three Omega FPGA devices are:

- Omega Adapter

  - Enables the pooling and sharing of memory across servers

  - Connects to the IntelliProp NAM array

- Omega Switch

  - Enables the connection of multiple NAM arrays to multiple servers through a switch

  - Targeted for large deployments of servers and memory pools

- Omega Fabric Manager (open source)

  - Enables key fabric management capabilities:

    - End-to-end encryption over CXL to prevent applications from seeing the contents in other applications’ memory along with data integrity

    - Dynamic multi-pathing for redundancy in case links go down with automatic failover

    - Supports non-tree topologies for peer-to-peer for things like GPU-to-GPU computing and GPU direct path to memory

    - Enables Direct Memory Access for data movement between memory tiers without using the CPU

IntelliProp says its Omega NAM is well suited for AI, ML, big data, HPC, cloud and hyperscale/enterprise datacenter environments, and specifically targets applications requiring large amounts of memory.

DragonSlayer analyst Marc Staimer said: “IntelliProp’s technology makes large pools of memory shareable between external systems. That has immense potential to boost datacenter performance and efficiency while reducing overall system costs.”

Samsung and others are introducing CXL-supporting memory products. It’s beginning to look as if the main event holding up CXL adoption will be the delayed arrival of Intel’s Sapphire Rapids.

The IntelliProp Omega Memory Fabric products are available as FPGA versions and will have the full features of the Omega Fabric architecture. The IntelliProp Omega ASIC based on CXL technology will be available in 2023.

Bootnote – CXL

CXL, the Compute Express Link, aims to link server CPUs with memory pools across PCIe cabling. CXL v1, based on PCIe 5.0, enables server CPUs to access shared memory on accelerator devices with a cache coherent protocol. CXL v1.1 was the initial productized version of CXL with each CXL-attached device only able to connect to a single server host.

CXL v2.0 has support for memory switching so each device can connect to multiple hosts. These multiple host processors can use distributed shared memory and persistent (storage-class) memory. CXL 2.0 enables memory pooling by maintaining cache coherency between a server CPU host and three device types:

- Type 1 devices are I/O accelerators with caches.

- Type 2 devices are accelerators fitted with their own DDR or HBM (High Bandwidth Memory).

- Type 3 devices are memory expander buffers or pools.

CXL 2.0 will be supported by Intel Sapphire Rapids and AMD Genoa processors.

Complementary and partially overlapping memory expansion standards OpenCAPI and Gen-Z have been folded into the CXL consortium.

Bootnote – IntelliProp

IntelliProp is focused on CXL-powered systems to enable the composing and sharing of memory to disparate systems. The company was founded in 1999 to provide ASIC design and verification services for the data storage and memory industry. In August it recruited John Spiers from Liqid as its CEO and president, with co-founder Hiren Patel stepping back from his CEO role to become CTO.

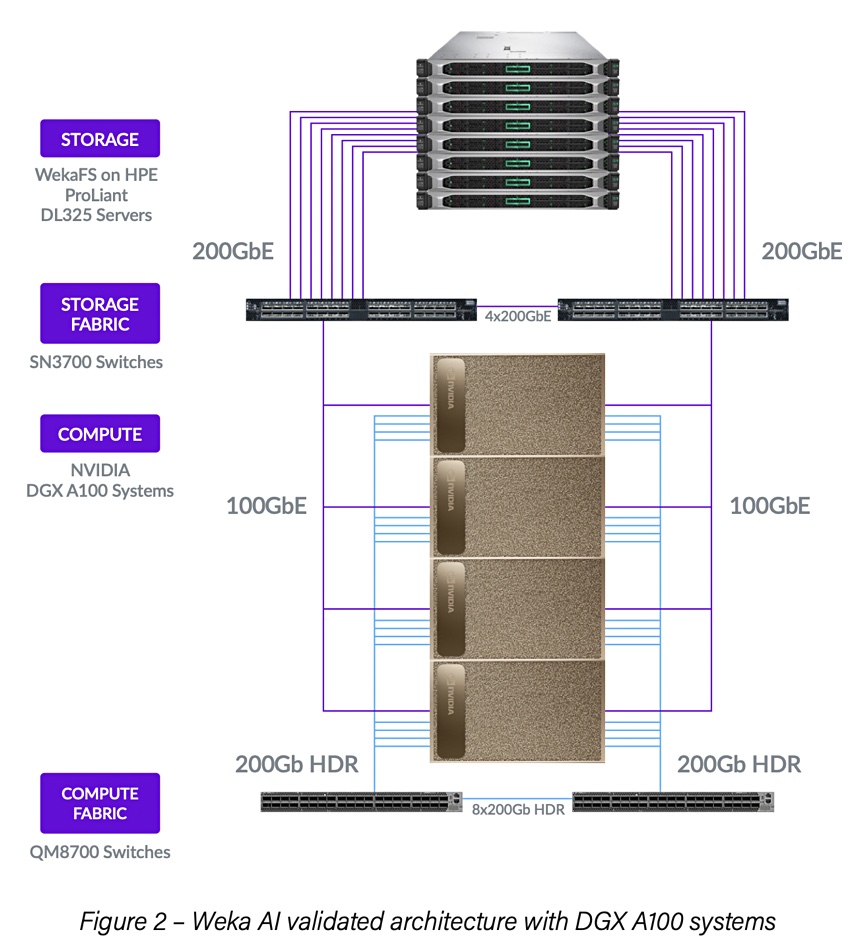

Nvidia unveils DGX BasePOD and partners

Nvidia has revealed the DGX BasePOD, a variant on its DGX POD, with six storage partners so that AI-using customers can start small and grow large.

Nvidia’s basic DGX POD reference architecture specifies up to nine Nvidia DGX-1 or DGX A100 servers, 12 storage servers (from Nvidia partners), and three networking switches. The DGX systems are GPU server-based configurations for AI work and combine multiple Nvidia GPUs into a single system.

A DGX A100 groups eight A100 GPUs together using six NVSwitch interconnects supporting 4.8TB per second of bi-directional bandwidth and 5 petaflops. There is 320GB HBM2 memory, and Mellanox ConnectX-6 HDR links to external systems. The DGX SuperPOD is composed of between 20 and 140 such DGX A100 systems.

The DGX BasePOD is an evolution of the POD concept and incorporates A100 GPU compute, networking, storage, and software components, including Nvidia’s Base Command. Nvidia says BasePOD includes industry systems for AI applications in natural language processing, healthcare and life sciences, and fraud detection.

Configurations range in size, from two to hundreds of DGX systems, and are delivered as integrated, ready-to-deploy offerings, with certified storage through Nvidia partners DDN, Dell, NetApp, Pure Storage, VAST Data, and Weka. They support Magnum IO GPUDirect Storage technology, which bypasses host x86 CPU and DRAM to provide low-latency, direct access between GPU memory and the storage.

Weka

Weka has announced its Weka Data Platform Powered by DGX BasePOD, saying it delivers linearly scaling, high-performance infrastructure for AI workflows. The design implements up to four Nvidia DGX A100 systems, Mellanox Spectrum Ethernet and Mellanox Quantum InfiniBand switches, and the WekaFS filesystem software.

By adding additional storage nodes, the architecture can grow to support more DGX A100 systems. WekaFS can expand the global namespace to support a data lake with the addition of any Amazon S3 compliant object storage.

It says that scaling capacity and performance is as simple as adding DGX systems, networking connectivity, and Weka nodes. There is no need to perform complex sizing exercises or invest in expensive services to grow. Weka’s reference architecture is available for download (registration required).

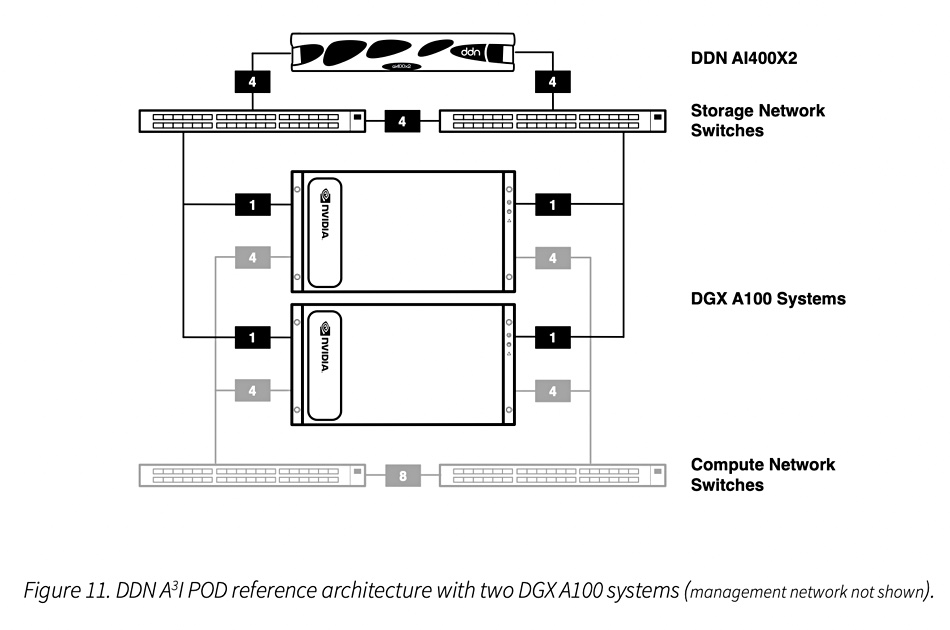

DDN

DDN’s BasePOD configurations use its A3I AI400X2 all-NVMe storage appliance. These are EXAScaler arrays running Lustre parallel filesystem software. They are configurable as all-flash or hybrid flash-disk variants in the BasePOD architecture. DDN claims recent enhancements to the EXAScaler Management Framework have cut deployment times for appliances from eight minutes to under 50 seconds.

Standard DDN BasePOD configurations start at two Nvidia DGX A100 systems and a single DDN AI400X2 system, and can grow to hundreds. Customers can start at any point in this range and scale out as needed with simple building blocks.

Nvidia and DDN are collaborating on vertical-specific DGX BasePOD systems tailored specifically to financial services, healthcare and life sciences, and natural language processing. Customers will get software tools including the Nvidia AI Enterprise software suite, tuned for their specific applications.

DDN’s reference architectures for BasePOD and SuperPOD are available for download (registration required).

DDN says it supports thousands of DGX systems around the globe and deployed more than 2.5 exabytes of storage overall in 2021. At the close of the first half of 2022, it had 16 percent year-over-year growth, fueled by commercial enterprise demand for optimized infrastructure for AI. In other words, Nvidia’s success with its DGX POD architectures is proving to be a great tailwind for storage suppliers like DDN.

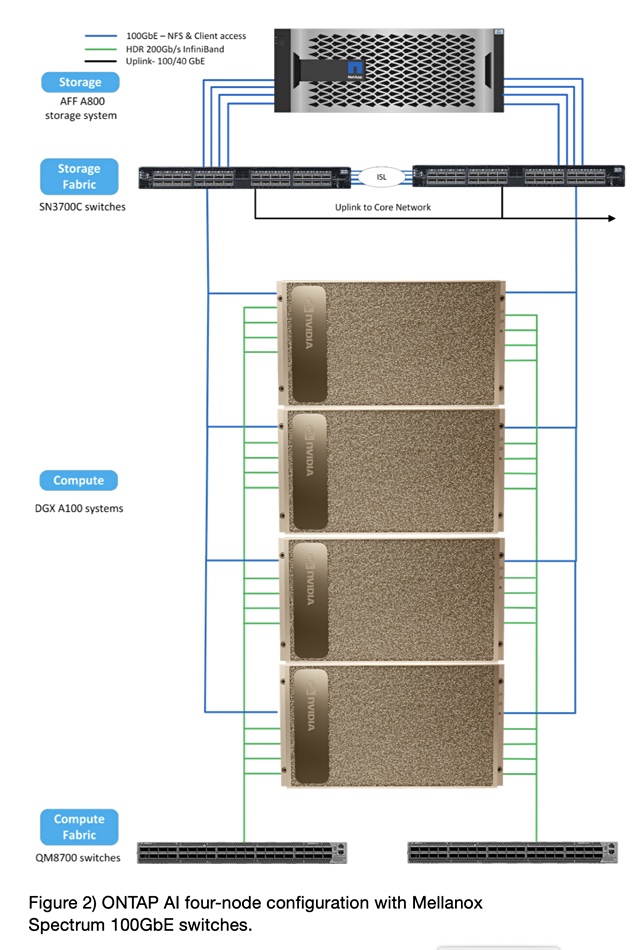

NetApp

NetApp’s portfolio of Nvidia-accelerated solutions includes ONTAP AI, which is built on the DGX BasePOD. It includes Nvidia DGX Foundry, which features the Base Command software and NetApp Keystone Flex Subscription.

The components are:

- Nvidia DGX A100 systems

- NetApp AFF A-Series storage systems with ONTAP 9

- Nvidia Mellanox Spectrum SN3700C, Nvidia Mellanox Quantum QM8700, and/or Nvidia Mellanox Spectrum SN3700-V

- Nvidia DGX software stack

- NetApp AI Control Plane

- NetApp DataOps Toolkit

The architecture includes NetApp’s all-flash AFF A800 arrays with 2, 4 and 8 DGX A100 servers. Customers can expect to get more than 2GB/sec of sustained throughput (5GB/sec peak) with under 1 millisecond of latency, while the GPUs operate at over 95 percent utilization. A single NetApp AFF A800 system supports throughput of 25GB/sec for sequential reads and 1 million IOPS for small random reads, at latencies of less than 500 microseconds for NAS workloads.

NetApp has released specific ONTAP AI reference architectures for healthcare (diagnostic imaging), autonomous driving, and financial services.

Blocks & Files expects Nvidia’s other storage partners – Dell, Pure Storage, and VAST Data – to announce their BasePOD architectures promptly. In case you were wondering about HPE, its servers are included in Weka’s BasePOD architecture.

CSAL

CSAL – The Cloud Storage Acceleration Layer is an open-source, host-based Flash Translation Layer (FTL) project under the Storage Performance Development Kit (SPDK). It uses high-performance SSDs as an ultra-fast cache and write buffer, shaping workload writes into the large, sequential writes that NAND prefers. It is designed to sit between the application and high-density NAND SSDs, intercepting write requests to reduce write amplification and improve performance. CSAL uses advanced algorithms to dynamically optimize the use of compute resources, enabling multi-tenancy, reducing latency, improving throughput, and enabling faster access to data.
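The write-shaping idea can be sketched in a few lines. This is a simplified illustration and not the SPDK or CSAL API: the `WriteShaper` class, its `flush_threshold` parameter, and the in-memory lists standing in for the cache SSD and the capacity SSD are all assumptions made for the example.

```python
class WriteShaper:
    """Toy log-structured write buffer (hypothetical, not the SPDK/CSAL API)."""

    def __init__(self, flush_threshold: int):
        self.flush_threshold = flush_threshold  # blocks per sequential flush
        self.buffer = {}       # logical block address -> latest data (fast tier)
        self.backend_log = []  # sequential batches written to the capacity SSD
        self.l2p = {}          # logical address -> (batch index, offset in batch)

    def write(self, lba: int, data: bytes) -> None:
        self.buffer[lba] = data  # repeated writes to one address are absorbed here
        if len(self.buffer) >= self.flush_threshold:
            self.flush()

    def flush(self) -> None:
        if not self.buffer:
            return
        batch = list(self.buffer.items())
        batch_index = len(self.backend_log)
        # One large append replaces many small random writes.
        self.backend_log.append([data for _, data in batch])
        for offset, (lba, _) in enumerate(batch):
            self.l2p[lba] = (batch_index, offset)
        self.buffer.clear()

    def read(self, lba: int) -> bytes:
        if lba in self.buffer:
            return self.buffer[lba]
        batch_index, offset = self.l2p[lba]
        return self.backend_log[batch_index][offset]


shaper = WriteShaper(flush_threshold=4)
for lba, data in [(90, b"a"), (7, b"b"), (90, b"c"), (512, b"d"), (33, b"e")]:
    shaper.write(lba, data)
```

Here five scattered host writes, one of them an overwrite, reach the backing store as a single sequential batch of four blocks. The logical-to-physical map (`l2p`) plays the role an FTL plays in finding data after it has been relocated.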

Cirrus Data: Migration for blockheads

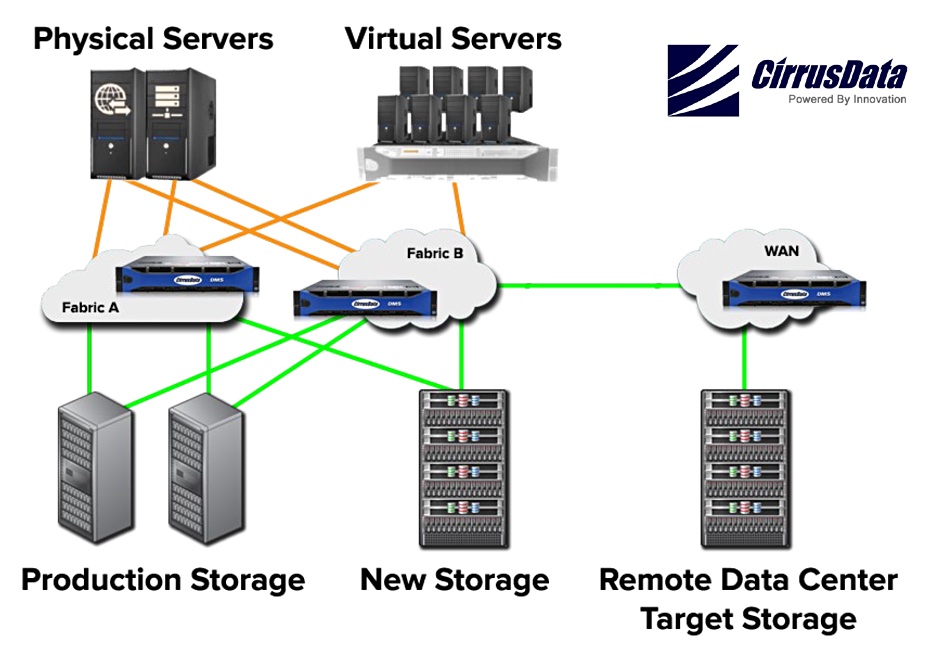

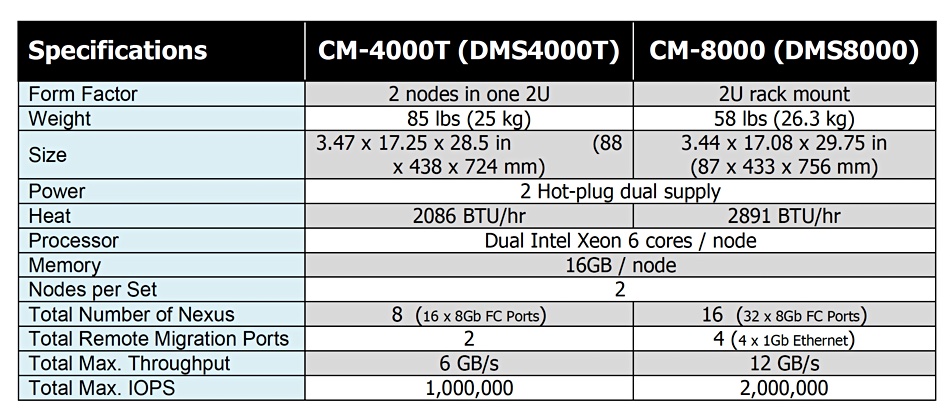

Block data migration provides an exact copy of data from a source storage system to a target system. Blocks & Files has written about file and object-level migrations with Datadobi, Komprise, and WANdisco, but little about block-level migrations. Enter Cirrus Data Solutions, which offers Cirrus Migrate On-Premises to move data at block level between Storage Area Network (SAN) arrays.

There’s no need to reboot, change multi-path drivers or modify existing SAN zoning when migrating data. A second Cirrus Migrate Cloud product moves block data from an on-premises system to the public clouds including AWS, Azure, GCP and Oracle, and is present in the marketplaces for each of these clouds.

Block-level data migrations can involve complexities such as installing drivers at the hosts, changing FC zones, and LUN masking, with SAN downtime required to sort them out. Cirrus has its own patented Transparent Datapath Intercept (TDI) software technology to fix these problems.

This is embodied in a physical appliance, the DMS (Data Migration Server), that is hooked up in the data path, on the Fibre Channel or iSCSI Ethernet cable between the SAN target and the Fibre Channel/Ethernet switch. Cirrus Data says: “The appliance should look like a wire when installed to both the hosts and storage. The installation process is a matter of removing each FC connection between the switch and storage and connecting it on the appliance; a process that takes just 5 seconds per path! To install an appliance with 4 paths, the total time is less than a minute, and in an HA solution, less than 2 minutes. And if there is ever a problem, the appliances can be uninstalled just as fast.”

Cirrus says there are three steps in the migration process: inserting the appliance, migrating the data, and cutover. It has four named elements as part of its software offering:

- TDI – transparently intercepts SAN traffic without downtime and enables a copy to be made and transmitted to the new array or cloud. It eliminates, Cirrus Data says, all the pain points associated with inserting an in-band appliance to a live FC or iSCSI SAN. It can be inserted logically or physically.

- iQoS (Intelligent Quality of Service) – monitors individual I/Os to ensure the migration has no impact on host applications, and dynamically uses breaks in production traffic to migrate more data through to the new array.

- pMotion – emulates the old array to the hosts, which are actually running on the new storage array. While this is being used the old array can be removed.

- cMotion – carries out storage-level cut-over from a source to the target system without downtime to the source host, once the old array is removed, if not before. It swings the workload over from the original FC or iSCSI source system to the destination array.
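The iQoS behaviour described in the list above, migrating only in the gaps left by production traffic, can be approximated with a simple throttling policy. This is a sketch under stated assumptions and not Cirrus Data's algorithm: the function names, the fixed `headroom` fraction, and the per-second load samples are all hypothetical.

```python
def migration_budget(production_mbps: float, link_capacity_mbps: float,
                     headroom: float = 0.25) -> float:
    """Return the MB/s the migration may use in this interval.

    Assumed policy: keep `headroom` of the link free for production bursts
    and give the migration only what production traffic leaves idle.
    """
    usable = link_capacity_mbps * (1.0 - headroom)
    return max(0.0, usable - production_mbps)


def plan_migration(production_samples, link_capacity_mbps, total_mb):
    """Walk per-second production load samples and return how many seconds
    it takes to move total_mb, or None if the samples run out first."""
    remaining = total_mb
    for second, load in enumerate(production_samples, start=1):
        remaining -= migration_budget(load, link_capacity_mbps)
        if remaining <= 0:
            return second
    return None


# Assumed example: a 1,000 MB/s link with production load falling over time.
seconds_needed = plan_migration([750, 650, 50, 0], 1000, total_mb=1000)
```

With these sample values the migration moves nothing in the first second, when production traffic fills the usable link, and finishes in the fourth second as production traffic drops away.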


Cirrus Data’s technology can also be used in Cirrus Protect On-Premises to continuously protect a SAN array by building and maintaining a copy in a remote data center.

Alternatives

Pure Storage offers a block data migration service moving data from other suppliers’ SAN systems to its own FlashArray storage.

DataCore offers a similar service with its SANsymphony software. RiverMeadow also offers block data migration services to the cloud, providing live migration of database and message queue-related application workloads. Its service uses an application-consistent snapshot to enable live migration without open file concerns.

Background

Cirrus Data Solutions was started in New York in 2011 by brothers Wayne Lam, chairman and CEO, and Wai Lam, CTO and VP of Engineering. It was funded by a $480,000 seed round and then $3.2 million in debt financing. A $2.5 million venture round took place in January this year. That funding is paying for new sales and marketing activities.

The company developed and patented its Transparent Datapath Intercept (TDI) technology to enable customers to bring new storage architectures online without downtime. This formed the basis of product offerings which culminated in the Cirrus Migrate Cloud introduced in 2021. Cirrus holds 30 patents, 11 concerning TDI.

Wayne and Wai Lam were co-founders at FalconStor Software in 2000. Wai Lam served as CTO and VP of Engineering, was FalconStor’s chief architect, and was responsible for 23 of 34 FalconStor patents.

Wayne Lam was, Cirrus Data says, largely responsible for most of FalconStor’s successful storage products (think IPStor). He was a senior executive at CA Technologies before that, and at Cheyenne Software prior to its acquisition by CA.

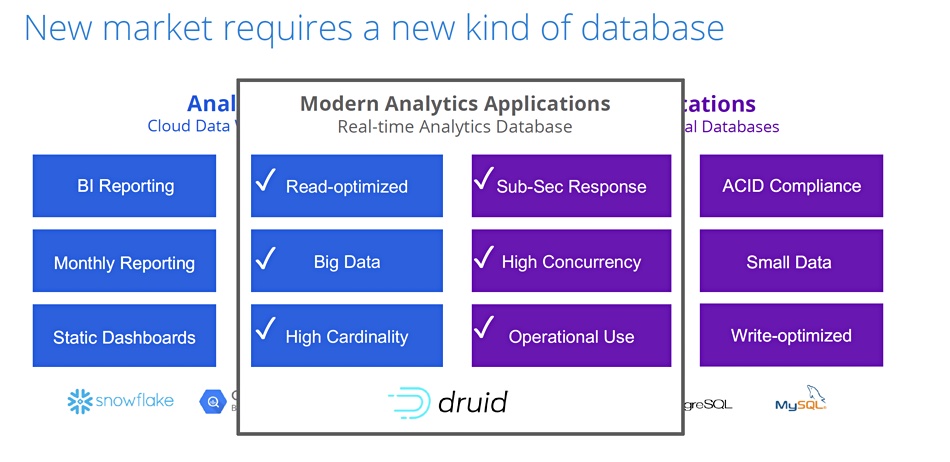

Imply accelerates massive real-time analytics

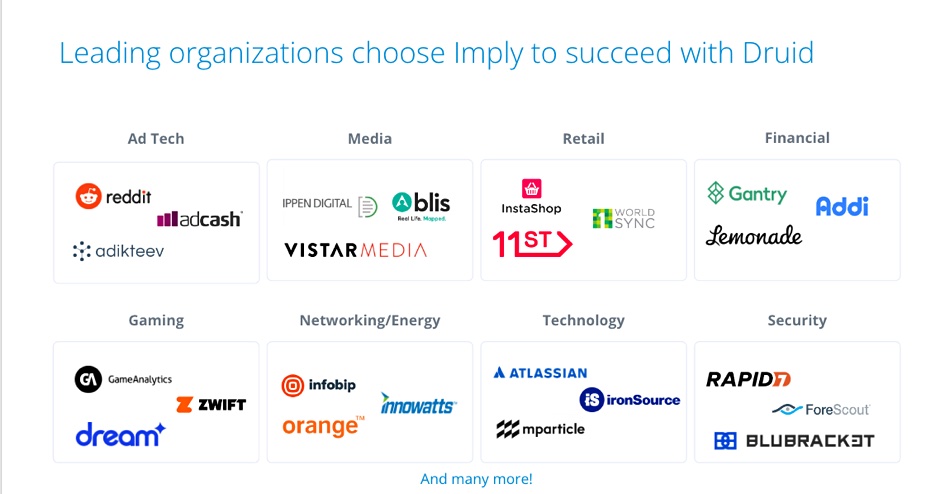

Startup Imply has made its parallel query engine generally available to speed reporting from massive real-time Apache Druid databases. It has also added SQL ingestion, introduced a total cost of ownership pledge, and announced 250 customers for its Polaris Druid-based cloud database service.

The open source Apache Druid database stores huge sets of streaming and historical data, delivering real-time answers from analytics queries. Druid’s code originators founded Imply and put a multi-stage query engine into a private preview in March. Queries are split into stages and run across distributed servers in parallel with a so-called shuffle mesh framework. This software engine is now generally available.
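The general shape of a multi-stage query, with a per-partition scan stage, a shuffle, and a merge stage, can be sketched as follows. This is a minimal illustration of the pattern and not Druid's engine or API: the function names, the thread pool standing in for distributed servers, and the sample rows are all assumptions.

```python
from collections import Counter, defaultdict
from concurrent.futures import ThreadPoolExecutor


def stage_scan(partition):
    """Stage 1: each worker pre-aggregates its own partition of rows."""
    counts = Counter()
    for row in partition:
        counts[row["country"]] += row["clicks"]
    return counts


def shuffle(partials, num_reducers):
    """Route every key to one reducer so all its partial sums meet there."""
    buckets = [defaultdict(list) for _ in range(num_reducers)]
    for partial in partials:
        for key, value in partial.items():
            # A simple deterministic hash; Python's built-in hash() of a
            # string varies between processes.
            buckets[sum(key.encode()) % num_reducers][key].append(value)
    return buckets


def stage_merge(bucket):
    """Stage 2: each reducer sums the partials for the keys it owns."""
    return {key: sum(values) for key, values in bucket.items()}


def run_query(partitions, num_reducers=2):
    # Threads stand in for the distributed servers in this sketch.
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(stage_scan, partitions))
        merged = list(pool.map(stage_merge, shuffle(partials, num_reducers)))
    result = {}
    for part in merged:
        result.update(part)
    return result


partitions = [
    [{"country": "US", "clicks": 3}, {"country": "DE", "clicks": 1}],
    [{"country": "US", "clicks": 2}, {"country": "FR", "clicks": 5}],
]
totals = run_query(partitions)
```

Because the shuffle sends each key to exactly one reducer, the merge stage can run in parallel without any reducer needing to see another's data.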

Gian Merlino, Imply CTO and co-founder and PMC chair for Apache Druid, said: “We always thought of Druid as a shapeshifter when we originally built it to support analytics apps of any scale. Now we’re excited to show the world just how nimble it can be with the addition of multi-stage queries and SQL-based ingestion.”

Apache Druid v24.0, with its multi-stage query engine, also features:

- Easier and up to 65 percent faster data ingestion with common SQL queries. This counts when Druid databases can ingest hundreds of terabytes a day

- Druid supports any in-database transformation without tuning or expertise using SQL, enabling data enhancement, data enrichment, experimentation with aggregates, approximations (including hyperloglogs and theta sketches), and more
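To give a feel for the approximations mentioned above, here is a minimal K-minimum-values (KMV) distinct-count sketch, a simplified relative of theta sketches. It is an illustrative sketch and not the Apache DataSketches or Druid implementation: the `KMVSketch` class, the choice of SHA-1 as the hash, and `k=256` are assumptions made for the example.

```python
import hashlib
import heapq


class KMVSketch:
    """K-minimum-values distinct-count estimator (illustrative only)."""

    def __init__(self, k: int = 256):
        self.k = k
        self._heap = []        # negated values: a max-heap of the k smallest hashes
        self._members = set()  # hash values currently retained

    @staticmethod
    def _hash(item: str) -> float:
        digest = hashlib.sha1(item.encode()).digest()
        return int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)

    def add(self, item: str) -> None:
        h = self._hash(item)
        if h in self._members:
            return  # duplicates never change the sketch
        if len(self._heap) < self.k:
            heapq.heappush(self._heap, -h)
            self._members.add(h)
        elif h < -self._heap[0]:
            # Replace the largest retained hash with the new, smaller one.
            evicted = -heapq.heappushpop(self._heap, -h)
            self._members.discard(evicted)
            self._members.add(h)

    def estimate(self) -> float:
        if len(self._heap) < self.k:
            return float(len(self._heap))  # fewer than k distinct items: exact
        # The k-th smallest hash sits at about k / n for n distinct items.
        return (self.k - 1) / -self._heap[0]


sketch = KMVSketch(k=256)
for i in range(10000):
    sketch.add(f"user-{i % 5000}")  # 5,000 distinct users, each seen twice
approx_users = sketch.estimate()
```

The sketch keeps only 256 hash values regardless of input size, trading a few percent of accuracy for constant memory, which is the kind of trade-off that makes approximate queries fast.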

Imply says Druid now has a foundation for integration with open source and commercial data tools, covering transformation (dbt), data integration (Informatica, FiveTran, Matillion, Nexla, Ascend.io), data quality (Great Expectations, Monte Carlo, Bigeye), and others.

Total value guarantee

Imply claims that Apache Druid users have a total cost of ownership which includes software, support, and infrastructure. It is introducing a Total Value Guarantee for qualified participants that it says guarantees the total cost of ownership (TCO) to run Druid with Imply will be less than this. What is a qualified participant? A web page should provide that information (it wasn’t live when we checked).

Vadim Ogievetsky, Imply CXO and co-founder, said: “Now with Imply’s Total Value Guarantee, developers can get a partner for Druid that will help them get all the advantages of Imply’s products and services and be there in the middle of the night if needed – with Imply effectively for free.”

Imply said it now has more than 250 customers for its Polaris cloud Druid database service, which was introduced last March. Polaris has been updated with:

- Support for schemaless ingestion to accommodate nested columns, allowing for arbitrary nesting of typed data like JSON or Avro.

- DataSketches supported at ingestion for faster sub-second approximate queries

- Performance monitoring alerts to ensure consistent performance for ultra-low latency queries and greater security with resource-based access control and row-level security

- Updates to Polaris’ built-in visualization enable faster slicing and dicing

- New node types to flexibly meet price/performance requirements at any scale

- Hibernate services for savings

- Comprehensive consumption and billing metrics for instant usage visibility

Imply said its short-term roadmap includes reports about very large result sets with long-running queries and CPU-heavy but infrequent runs, and an alerting function. This will track large numbers of objects with complex conditions and scale to millions of alerts.