Startup IntelliProp has announced its Omega Memory Fabric chips so servers can share internal and external memory with CXL, going beyond CPU socket limits and speeding memory-bound datacenter apps. It has three field-programmable gate array (FPGA) products using the chips.

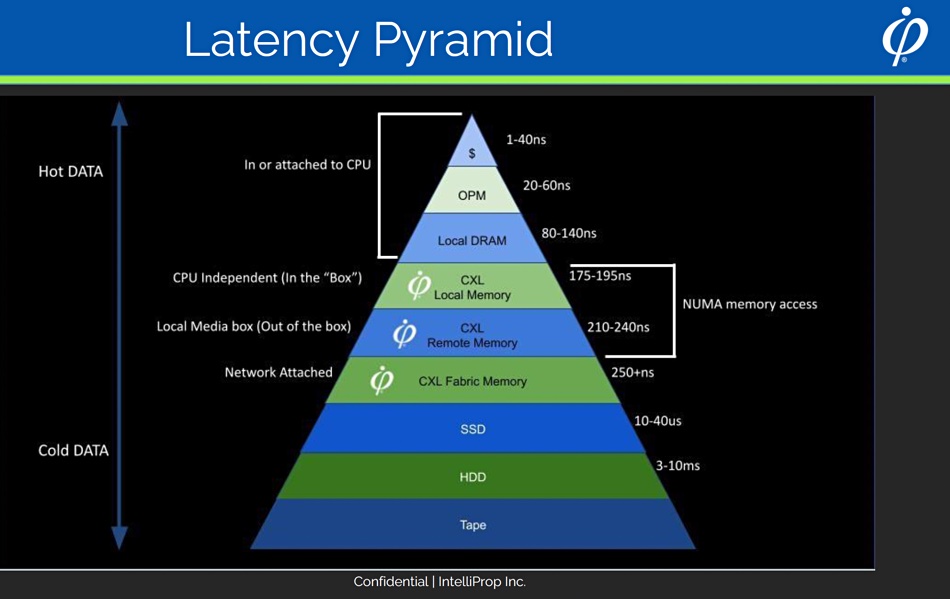

CXL will enable servers to use an expanded pool of memory, meaning applications can fit more of their code and data into memory to avoid time-consuming storage IO. Servers will need semiconductor devices to interface with and manage the CXL links running from their CPUs to external devices, and IntelliProp is providing this plumbing.

IntelliProp CEO John Spiers said in a statement: “History tends to repeat itself. NAS and SAN evolved to solve the problems of over/under storage utilization, performance bottlenecks and stranded storage. The same issues are occurring with memory.

“For the first time, high-bandwidth, petabyte-level memory can be deployed for vast in-memory datasets, minimizing data movement, speeding computation and greatly improving utilization. We firmly believe IntelliProp’s technology will drive disruption and transformation in the datacenter, and we intend to lead the adoption of composable memory.”

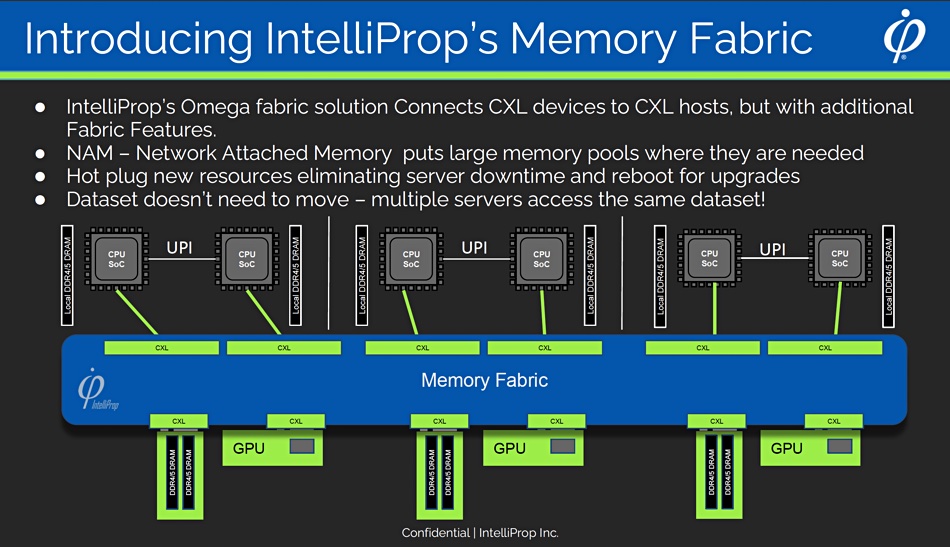

IntelliProp says its Omega Memory Fabric allows for dynamic allocation and sharing of memory across compute domains both in and out of the server, delivering on the promise of Composable Disaggregated Infrastructure (CDI) and rack scale architecture. Omega Memory Fabric chips incorporate the Compute Express Link (CXL) standard, with IntelliProp’s Fabric Management Software and Network-Attached Memory (NAM) system.

Currently, servers have a static amount of DRAM, which is effectively stranded inside the server, and not all of it is used. A recent Carnegie Mellon/Microsoft report quoted Google stating that average DRAM utilization in its datacenters is 40 percent, and Microsoft Azure said that 25 percent of its server DRAM is idle and unused. That’s a waste of the dollars spent buying it.

Memory disaggregation from server CPUs increases memory utilization and reduces stranded or underutilized memory. It also means server CPUs can access more memory than they currently are able to use because the number of CPU sockets is no longer a limiting factor in memory capacity. The CXL standard offers low-overhead memory disaggregation and provides a platform to manage latency, which slower RDMA-based approaches cannot do.
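The utilization argument can be illustrated with a small back-of-the-envelope sketch. The server names, capacities, and demand figures below are hypothetical and chosen only to show the effect; the model also ignores fabric latency, bandwidth, and allocation granularity, so it is a simplification and not a description of how Omega allocates memory.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    local_dram_gb: int  # DRAM installed in the server (hypothetical figure)
    demand_gb: int      # memory its workload would like to use (hypothetical)


def static_served(servers):
    """Memory in use when each server can only reach its own DRAM."""
    return sum(min(s.local_dram_gb, s.demand_gb) for s in servers)


def pooled_served(servers):
    """Memory in use when all DRAM is treated as one shared pool."""
    capacity = sum(s.local_dram_gb for s in servers)
    demand = sum(s.demand_gb for s in servers)
    return min(capacity, demand)


servers = [
    Server("a", local_dram_gb=512, demand_gb=128),  # mostly idle DRAM
    Server("b", local_dram_gb=512, demand_gb=896),  # memory-starved
    Server("c", local_dram_gb=512, demand_gb=256),
]

total = sum(s.local_dram_gb for s in servers)
static = static_served(servers)
pooled = pooled_served(servers)
print(f"static: {static} of {total} GB in use ({static / total:.0%})")
print(f"pooled: {pooled} of {total} GB in use ({pooled / total:.0%})")
```

In this toy setup, server "b" is capped by its own DRAM in the static case while DRAM in "a" and "c" sits idle; treating the same DRAM as a pool lets the idle capacity absorb the overflow.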

The Omega chips are ASICs and IntelliProp says its memory fabric features include:

- Dynamic multi-pathing and allocation of memory

- End-to-end (E2E) security using AES-XTS 256 with the addition of integrity

- Support for non-tree topologies for peer-to-peer

- Direct path from GPU to memory

- Management scaling for large deployments using multi-fabrics/subnets and distributed managers

- Direct memory access (DMA) allows data movement between memory tiers efficiently and without locking up CPU cores

- Memory agnostic and up to 10x faster than RDMA

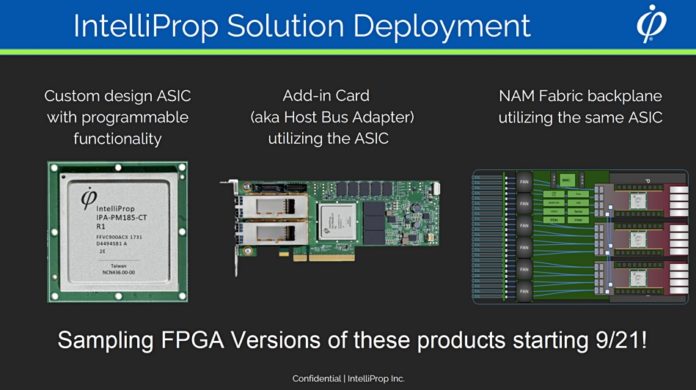

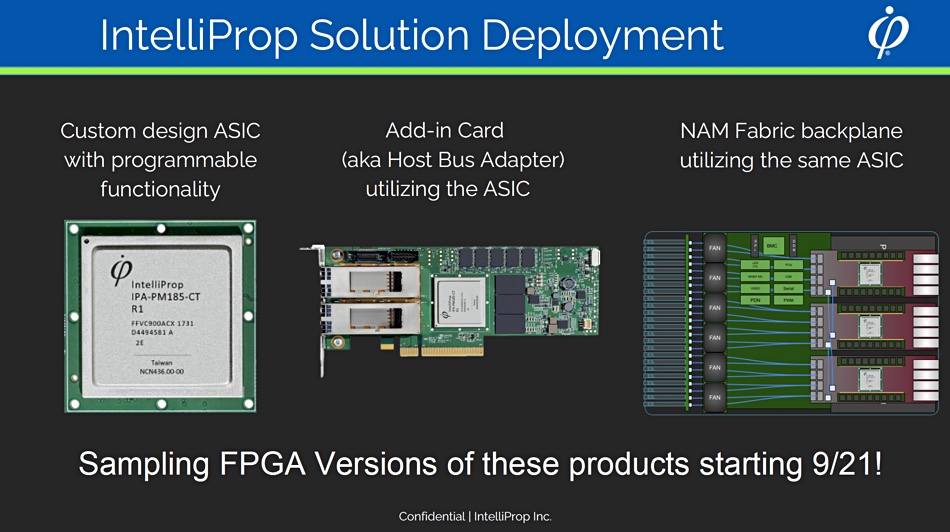

The three Omega FPGA devices are:

- Omega Adapter

  - Enables the pooling and sharing of memory across servers

  - Connects to the IntelliProp NAM array

- Omega Switch

  - Enables the connection of multiple NAM arrays to multiple servers through a switch

  - Targeted for large deployments of servers and memory pools

- Omega Fabric Manager (open source)

  - Enables key fabric management capabilities:

    - End-to-end encryption over CXL to prevent applications from seeing the contents in other applications’ memory along with data integrity

    - Dynamic multi-pathing for redundancy in case links go down with automatic failover

    - Supports non-tree topologies for peer-to-peer for things like GPU-to-GPU computing and GPU direct path to memory

    - Enables Direct Memory Access for data movement between memory tiers without using the CPU
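The multi-pathing and failover idea in the list above can be sketched generically. The topology, node names, and the `find_path` helper below are hypothetical illustrations of path selection in a non-tree fabric using a plain breadth-first search; they are not IntelliProp's Fabric Manager API or its actual routing algorithm.

```python
from collections import deque


def find_path(links, src, dst, down=frozenset()):
    """Breadth-first search from src to dst, skipping links marked as down."""
    adjacency = {}
    for a, b in links:
        if frozenset((a, b)) in down:
            continue  # failed link: do not route over it
        adjacency.setdefault(a, []).append(b)
        adjacency.setdefault(b, []).append(a)

    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == dst:
            return path
        for nxt in adjacency.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no surviving route


# Hypothetical non-tree fabric: one server reaches one NAM array via two switches.
LINKS = [
    ("server1", "switchA"),
    ("server1", "switchB"),
    ("switchA", "nam1"),
    ("switchB", "nam1"),
]

primary = find_path(LINKS, "server1", "nam1")
failed = {frozenset(("server1", "switchA"))}
backup = find_path(LINKS, "server1", "nam1", down=failed)
print("primary:", primary)
print("after link failure:", backup)
```

Because the fabric has two independent routes to the memory array, losing one link still leaves a usable path; in a strict tree there would be no alternative.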

IntelliProp says its Omega NAM is well suited for AI, ML, big data, HPC, cloud and hyperscale/enterprise datacenter environments, and specifically targets applications requiring large amounts of memory.

DragonSlayer analyst Marc Staimer said: “IntelliProp’s technology makes large pools of memory shareable between external systems. That has immense potential to boost datacenter performance and efficiency while reducing overall system costs.”

Samsung and others are introducing CXL-supporting memory products. It’s beginning to look as if the main factor holding up CXL adoption will be the delayed arrival of Intel’s Sapphire Rapids.

The IntelliProp Omega Memory Fabric products are available as FPGA versions and will have the full features of the Omega Fabric architecture. The IntelliProp Omega ASIC based on CXL technology will be available in 2023.

Bootnote – CXL

CXL, the Compute Express Link, aims to link server CPUs with memory pools across PCIe cabling. CXL v1, based on PCIe 5.0, enables server CPUs to access shared memory on accelerator devices with a cache coherent protocol. CXL v1.1 was the initial productized version of CXL, with each CXL-attached device only able to connect to a single server host.

CXL v2.0 has support for memory switching so each device can connect to multiple hosts. These multiple host processors can use distributed shared memory and persistent (storage-class) memory. CXL 2.0 enables memory pooling by maintaining cache coherency between a server CPU host and three device types:

- Type 1 devices are I/O accelerators with caches.

- Type 2 devices are accelerators fitted with their own DDR or HBM (High Bandwidth Memory).

- Type 3 devices are memory expander buffers or pools.
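The device types and the single-host versus multi-host distinction described above can be captured in a short sketch. The `CxlDeviceType` enum and `can_attach_host` function are hypothetical names used for illustration only, and the rule for v2.0 is deliberately simplified: it places no upper bound on the number of hosts, which a real deployment would have.

```python
from enum import Enum


class CxlDeviceType(Enum):
    TYPE_1 = "I/O accelerator with cache"
    TYPE_2 = "accelerator with its own DDR or HBM memory"
    TYPE_3 = "memory expander buffer or pool"


def can_attach_host(cxl_version: str, hosts_already_attached: int) -> bool:
    """Simplified rule from the text: v1.1 devices connect to a single host,
    while v2.0 memory switching lets a device connect to multiple hosts."""
    if cxl_version == "1.1":
        return hosts_already_attached == 0
    if cxl_version == "2.0":
        return True  # simplification: real fabrics have finite limits
    raise ValueError(f"unhandled CXL version: {cxl_version}")


pool = CxlDeviceType.TYPE_3
print(pool.name, "-", pool.value)
print("v1.1, second host allowed:", can_attach_host("1.1", 1))
print("v2.0, second host allowed:", can_attach_host("2.0", 1))
```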

CXL 2.0 will be supported by Intel Sapphire Rapids and AMD Genoa processors.

Complementary and partially overlapping memory expansion standards OpenCAPI and Gen-Z have been folded into the CXL consortium.

Bootnote – IntelliProp

IntelliProp is focused on CXL-powered systems to enable the composing and sharing of memory to disparate systems. The company was founded in 1999 to provide ASIC design and verification services for the data storage and memory industry. In August it recruited John Spiers from Liqid as its CEO and president, with co-founder Hiren Patel stepping back from his CEO role to become CTO.