Nvidia has revealed the DGX BasePOD, a variant of its DGX POD, with six storage partners, so that customers running AI workloads can start small and grow large.

Nvidia’s basic DGX POD reference architecture specifies up to nine Nvidia DGX-1 or DGX A100 servers, 12 storage servers (from Nvidia partners), and three networking switches. DGX systems are GPU servers built for AI work, each combining multiple Nvidia GPUs into a single system.

A DGX A100 groups eight A100 GPUs together using six NVSwitch interconnects, which support 4.8TB per second of bi-directional bandwidth, and delivers 5 petaflops. It has 320GB of HBM2 memory and Mellanox ConnectX-6 HDR links to external systems. The DGX SuperPOD is composed of between 20 and 140 such DGX A100 systems.
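To put those figures in perspective, the short Python sketch below works out the HBM2 capacity per GPU and the aggregate GPU count and memory at the two ends of the SuperPOD range. It uses only the numbers quoted above; the function and constant names are illustrative, not Nvidia terminology.

```python
# Figures quoted in the article for a DGX A100 system and the DGX SuperPOD.
GPUS_PER_DGX_A100 = 8
HBM2_GB_PER_DGX_A100 = 320
SUPERPOD_MIN_SYSTEMS = 20
SUPERPOD_MAX_SYSTEMS = 140


def pod_totals(num_systems: int) -> dict:
    """Return aggregate GPU count and HBM2 capacity for num_systems DGX A100s."""
    if num_systems < 1:
        raise ValueError("num_systems must be at least 1")
    return {
        "systems": num_systems,
        "gpus": num_systems * GPUS_PER_DGX_A100,
        "hbm2_gb": num_systems * HBM2_GB_PER_DGX_A100,
    }


if __name__ == "__main__":
    # 320GB shared across eight GPUs works out to 40GB per GPU.
    print("HBM2 per GPU (GB):", HBM2_GB_PER_DGX_A100 // GPUS_PER_DGX_A100)
    for n in (SUPERPOD_MIN_SYSTEMS, SUPERPOD_MAX_SYSTEMS):
        print(pod_totals(n))
```

On these numbers, a SuperPOD spans 160 to 1,120 GPUs.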

The DGX BasePOD is an evolution of the POD concept and incorporates A100 GPU compute, networking, storage, and software components, including Nvidia’s Base Command. Nvidia says BasePOD includes industry-specific systems for AI applications in natural language processing, healthcare and life sciences, and fraud detection.

Configurations range in size from two to hundreds of DGX systems and are delivered as integrated, ready-to-deploy offerings, with certified storage from Nvidia partners DDN, Dell, NetApp, Pure Storage, VAST Data, and Weka. They support Magnum IO GPUDirect Storage technology, which bypasses the host x86 CPU and DRAM to provide low-latency, direct access between GPU memory and the storage.

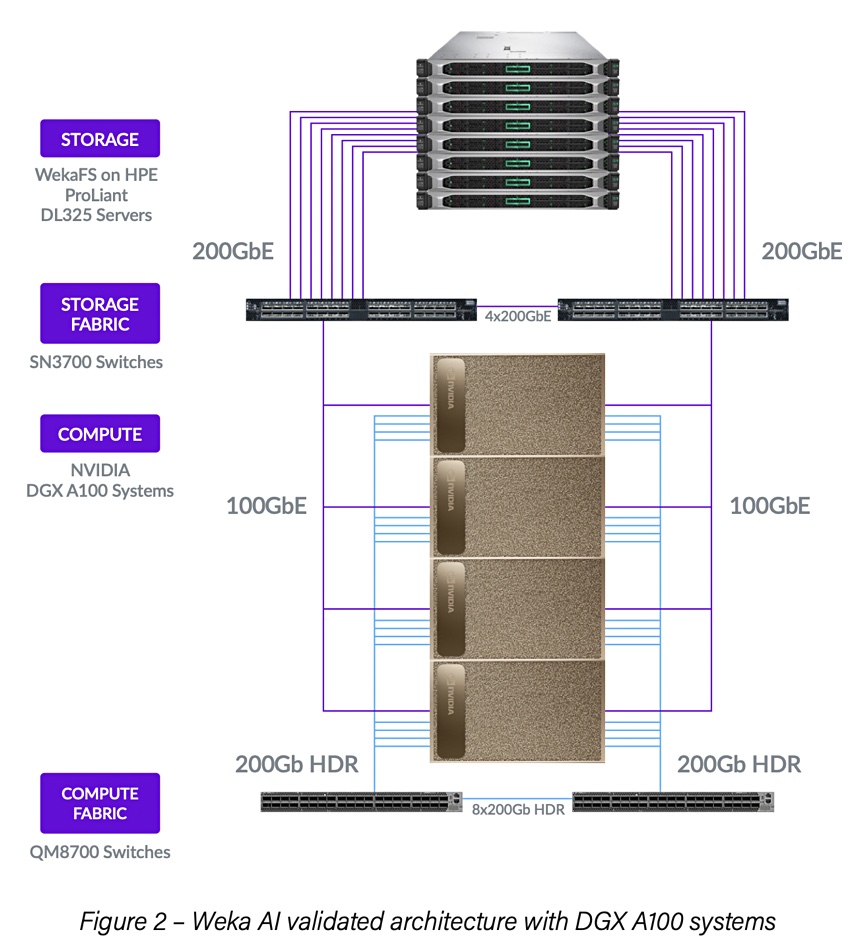

Weka

Weka has announced its Weka Data Platform Powered by DGX BasePOD, saying it delivers linearly scaling, high-performance infrastructure for AI workflows. The design implements up to four Nvidia DGX A100 systems, Mellanox Spectrum Ethernet and Mellanox Quantum InfiniBand switches, and the WekaFS filesystem software.

By adding storage nodes, the architecture can grow to support more DGX A100 systems. WekaFS can expand the global namespace to support a data lake with the addition of any Amazon S3-compatible object storage.

Weka says that scaling capacity and performance is as simple as adding DGX systems, networking connectivity, and Weka nodes, with no need to perform complex sizing exercises or invest in expensive services to grow. The reference architecture is available for download (registration required).

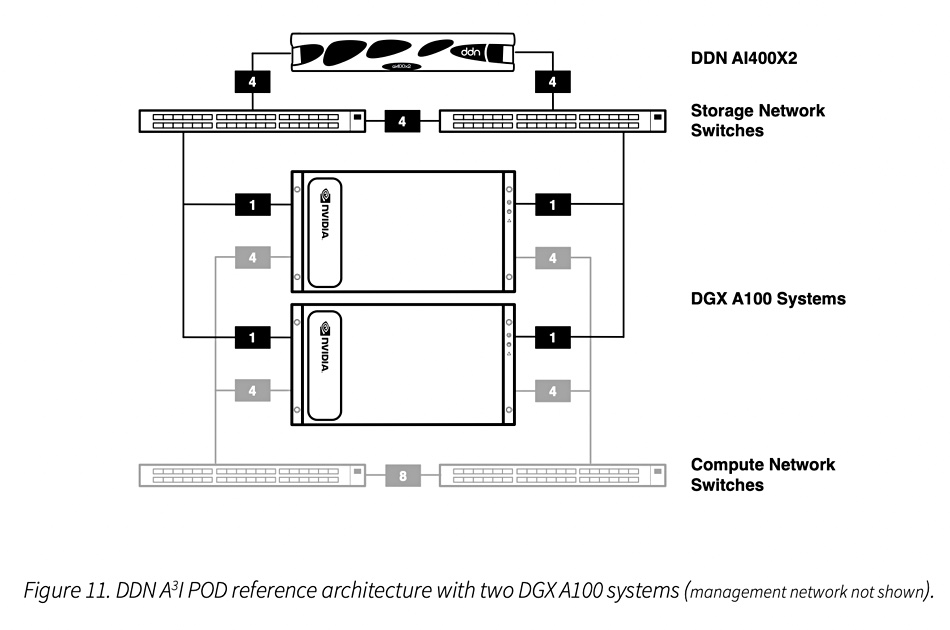

DDN

DDN’s BasePOD configurations use its A3I AI400X2 all-NVMe storage appliances. These are EXAScaler arrays running the Lustre parallel filesystem software. They are configurable as all-flash or hybrid flash-disk variants in the BasePOD architecture. DDN claims recent enhancements to the EXAScaler Management Framework have cut deployment times for appliances from eight minutes to under 50 seconds.

Standard DDN BasePOD configurations start at two Nvidia DGX A100 systems and a single DDN AI400X2 system, and can grow to hundreds. Customers can start at any point in this range and scale out as needed with simple building blocks.

Nvidia and DDN are collaborating on vertical-specific DGX BasePOD systems for financial services, healthcare and life sciences, and natural language processing. Customers will get software tools, including the Nvidia AI Enterprise software suite, tuned for their specific applications.

DDN’s reference architectures for BasePOD and SuperPOD are available for download (registration required).

DDN says it supports thousands of DGX systems around the globe and deployed more than 2.5 exabytes of storage overall in 2021. At the close of the first half of 2022, it had 16 percent year-over-year growth, fueled by commercial enterprise demand for optimized infrastructure for AI. In other words, Nvidia’s success with its DGX POD architectures is proving to be a great tailwind for storage suppliers like DDN.

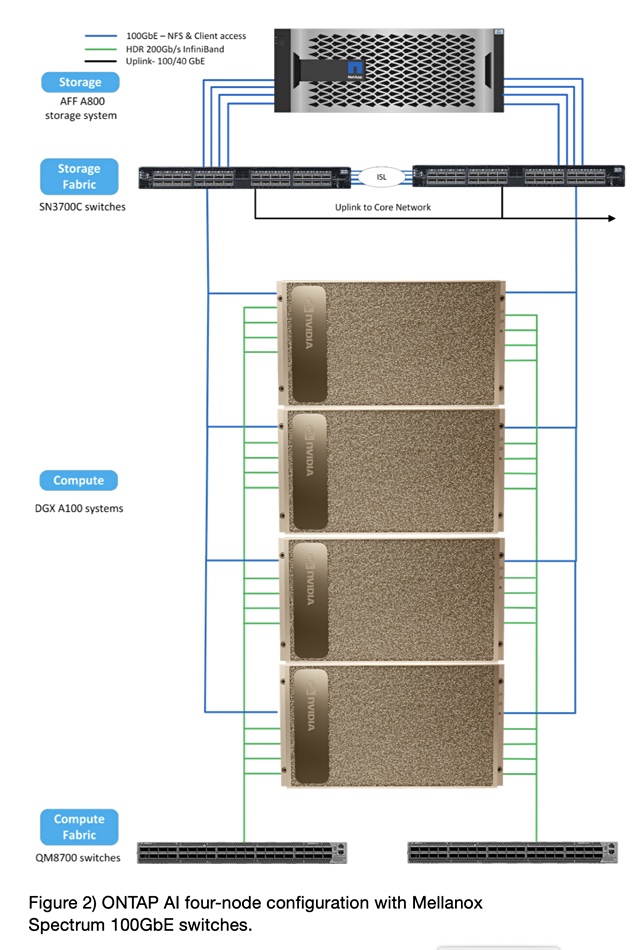

NetApp

NetApp’s portfolio of Nvidia-accelerated solutions includes ONTAP AI, which is built on the DGX BasePOD. The portfolio also includes Nvidia DGX Foundry, which features the Base Command software and the NetApp Keystone Flex Subscription.

The components are:

- Nvidia DGX A100 systems

- NetApp AFF A-Series storage systems with ONTAP 9

- Nvidia Mellanox Spectrum SN3700C, Nvidia Mellanox Quantum QM8700, and/or Nvidia Mellanox Spectrum SN3700-V switches

- Nvidia DGX software stack

- NetApp AI Control Plane

- NetApp DataOps Toolkit

The architecture pairs NetApp’s all-flash AFF A800 arrays with two, four, or eight DGX A100 servers. Customers can expect to get more than 2GB/sec of sustained throughput (5GB/sec peak) with under 1 millisecond of latency, while the GPUs operate at over 95 percent utilization. A single NetApp AFF A800 system supports throughput of 25GB/sec for sequential reads and 1 million IOPS for small random reads, at latencies of less than 500 microseconds for NAS workloads.
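As a rough sizing illustration, the Python sketch below compares the demand from a group of DGX systems against the 25GB/sec sequential-read figure for a single AFF A800. It rests on an assumption the figures above do not confirm: that the 2GB/sec sustained and 5GB/sec peak numbers apply per DGX A100 system. The names are hypothetical, and this is not a NetApp sizing method.

```python
# Figures quoted in the article for NetApp ONTAP AI.
A800_SEQ_READ_GB_PER_SEC = 25.0   # single AFF A800, sequential reads
SUSTAINED_GB_PER_SEC = 2.0        # "more than 2GB/sec" sustained
PEAK_GB_PER_SEC = 5.0             # 5GB/sec peak
DGX_COUNTS = (2, 4, 8)            # DGX A100 counts in the architecture


def a800_headroom(num_dgx: int, per_dgx_gb_per_sec: float,
                  array_gb_per_sec: float = A800_SEQ_READ_GB_PER_SEC) -> float:
    """Array read throughput left over after num_dgx systems draw their share.

    ASSUMPTION: per_dgx_gb_per_sec is demand per DGX system; the article
    does not say whether its throughput figures are per system.
    A negative result means demand exceeds a single array's quoted rate.
    """
    if num_dgx < 0:
        raise ValueError("num_dgx must be non-negative")
    return array_gb_per_sec - num_dgx * per_dgx_gb_per_sec


if __name__ == "__main__":
    for n in DGX_COUNTS:
        print(n, "DGX:",
              "sustained headroom", a800_headroom(n, SUSTAINED_GB_PER_SEC),
              "| peak headroom", a800_headroom(n, PEAK_GB_PER_SEC))
```

Under that assumption, one A800 would cover eight systems at the sustained rate with room to spare, but not all eight drawing the peak rate at once.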

NetApp has released specific ONTAP AI reference architectures for healthcare (diagnostic imaging), autonomous driving, and financial services.

Blocks & Files expects Nvidia’s other storage partners – Dell, Pure Storage, and VAST Data – to announce their BasePOD architectures promptly. In case you were wondering about HPE, its servers are included in Weka’s BasePOD architecture.