It’s all change in Gartner’s distributed file systems and object storage Magic Quadrant, with three suppliers leaving, one arriving, and major and minor movements across the board.

The leaders have all done well, as have niche players Huawei and DDN. Gartner said end users have been reporting unstructured data growth of between 30 per cent and 60 per cent year over year.

Its MQ report states: “The steep growth of unstructured data for emerging and established workloads is now requiring new types of products and cost-efficiencies. Most products in this market are driven by infrastructure software-defined storage (iSDS), capable of delivering tens of petabytes of storage.

“iSDS can also potentially leverage hybrid cloud workflows with public cloud IaaS to lower total cost of ownership (TCO) and improve data mobility. New and established storage vendors are continuing to develop scalable storage clustered file systems and object storage products to address cost, agility and scalability limitations in traditional, scale-up storage environments.”

A Gartner MQ is like a doubles tennis court, with niche players and visionaries on one side of the net and challengers and leaders on the other. The objective, rather than to blast balls across the net and out of court, is to move into the leaders’ section of the court, as far from the net and as close to the edge of the court as possible.

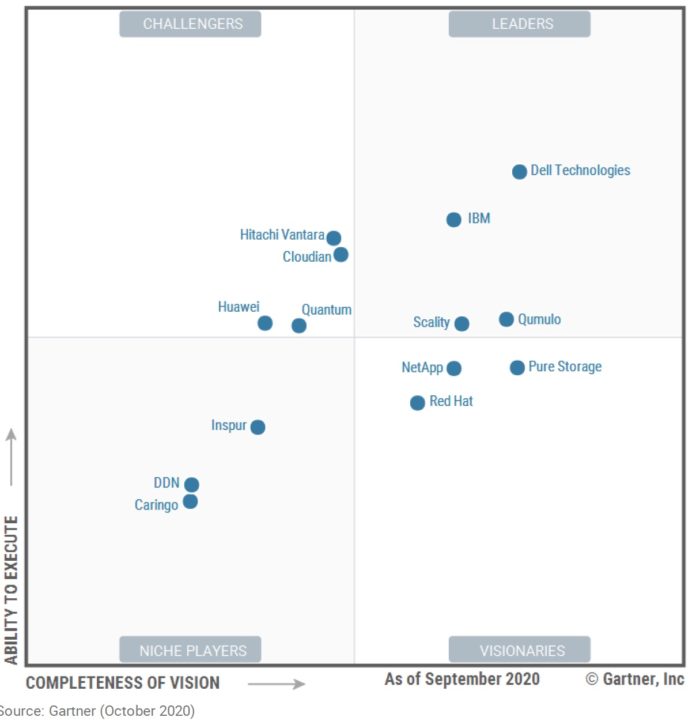

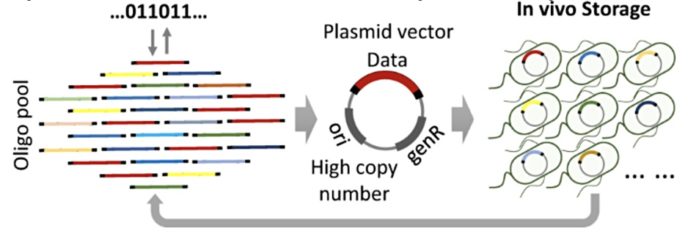

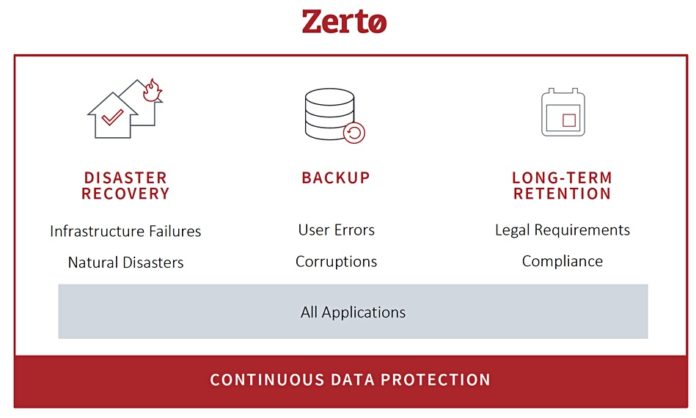

Here is the October 2020 MQ:

Pretty straightforward, with 14 suppliers, but there is an oddity: IBM has bought Red Hat, so there should really be a single IBM entry with the Red Hat one removed. A look at last year’s MQ will show who’s left the court, who has arrived, and how players have moved:

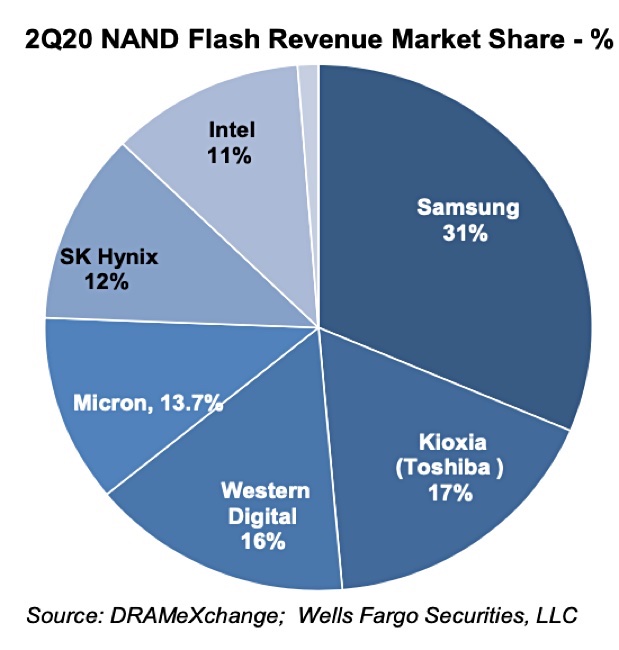

The leavers include Western Digital, with Quantum acquiring its ActiveScale product and appearing for the first time. SUSE has departed as has SwiftStack, which was bought by Nvidia.

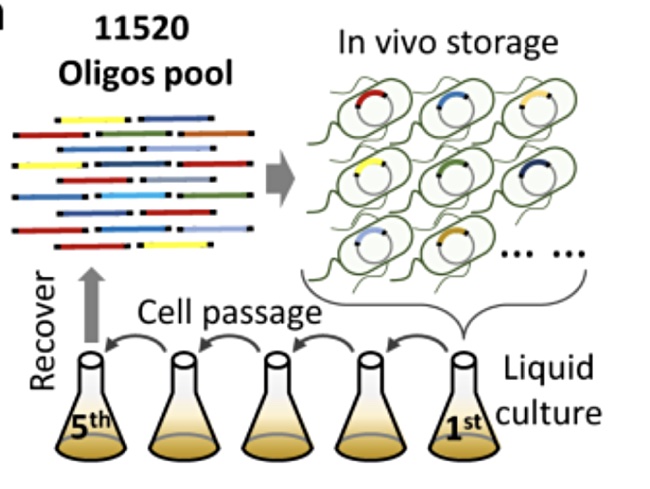

By doing some image magic we can overlay the two charts and see how players have moved:

The 2019 entries are in grey while the 2020 entries are in blue.

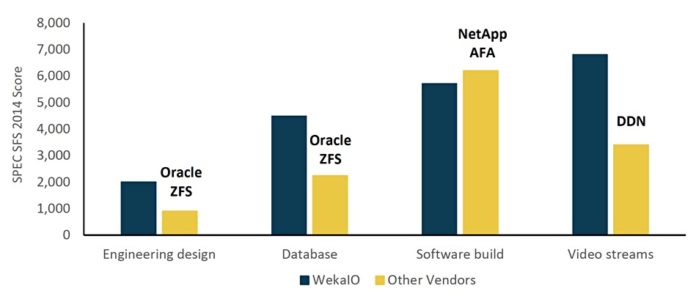

In the leaders’ box, leading leader Dell has moved a good way to the right, improving its completeness of vision, as has second-placed IBM. Both Scality and Qumulo have also moved significantly to the right.

Huawei has migrated from the niche players’ box to become a challenger. Cloudian and Hitachi Vantara have both moved slightly, while Quantum’s position shows less ability to execute compared to Western Digital, whose position it inherited/acquired.

The niche players’ box shows DDN moving up and to the right, improving on both the vision and execution axes. Caringo has moved in the opposite direction and Inspur has gained some so-called “vision completeness”.

What do the vendors say?

A Qumulo spokesperson positioned the company in David and Goliath terms: “Companies like us … are rocket ships of growth and taking customers from all over the world out of the hands of all kinds of competitors, but most often, they are coming from the dinosaur vendors.”

IBM said it had been in the leaders’ box for five years.