Analysis: Like the Roman god Janus, Intel’s Optane technology faces two ways, towards memory and towards storage. That has made adoption harder, because it is neither memory fish nor storage fowl, but both.

Why should this matter?

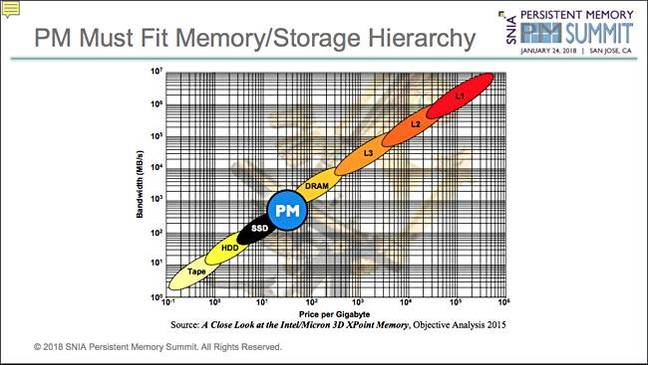

There is a memory/storage hierarchy forming a kind of continuum, from high-capacity, slow-access, low-cost tape at the bottom to low-capacity, extremely fast, expensive level 1 processor cache at the top. Jim Handy, a semiconductor analyst at Objective Analysis, has created a log-scale diagram to show this, placing each technology in a space defined by bandwidth and cost axes.

The technologies fall into two groups: storage (tape, disk drives and SSDs) and memory (DRAM and three levels of CPU cache). The chart places PM (persistent memory, or 3D XPoint) at the point where storage devices give way to memory devices.

Memory devices are accessed by application and system software at the byte level using load/store instructions, with no recourse to the operating system’s IO stack. DRAM is packaged in DIMMs (Dual Inline Memory Modules).

Storage devices are accessed at the block, file or object level, meaning groups of bytes, through the operating system’s IO stack. Going through that stack takes a lot of time compared with a memory access.
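To make the contrast concrete, here is a minimal Python sketch, assuming a Unix-like system (`os.pread` is Unix-only). It uses an ordinary temporary file as a stand-in for the device: on a DAX-mounted file system backed by persistent memory, the same `mmap` call would map the media itself, whereas here the stores land in the page cache. The helper names `make_zeroed_file`, `store_via_mapping` and `load_via_io_stack` are hypothetical.

```python
import mmap
import os
import tempfile


def make_zeroed_file(size: int = 4096) -> str:
    """Hypothetical stand-in 'device': a temporary file full of zero bytes."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(b"\x00" * size)
    return path


def store_via_mapping(path: str, offset: int, data: bytes) -> None:
    """Memory-style access: map the file, then update it with plain stores."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0) as mm:
            # Slice assignment copies the bytes into the mapped pages;
            # no write() system call is issued for the update itself.
            mm[offset:offset + len(data)] = data
            # flush() calls msync() so the change is written back to the file.
            mm.flush()


def load_via_io_stack(path: str, offset: int, length: int) -> bytes:
    """Storage-style access: a pread() system call through the kernel IO path."""
    fd = os.open(path, os.O_RDONLY)
    try:
        return os.pread(fd, length, offset)
    finally:
        os.close(fd)


if __name__ == "__main__":
    path = make_zeroed_file()
    store_via_mapping(path, 100, b"hello")
    print(load_via_io_stack(path, 100, 5))  # b'hello'
    os.remove(path)
```

The write goes in as ordinary memory stores, while the read back has to ask the kernel for the bytes; that second path is the IO-stack overhead the article describes.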

Whenever new storage devices come along, such as a new type of disk or a new type of 3D NAND SSD, they are accessed in the same way, through the storage IO stack. Storage devices are generally accessed using the SATA, SAS or NVMe protocols, either across a cable between the device and the host’s PCIe bus or, with NVMe, over a direct link to the PCIe bus.

New types of memory, such as DDR5, are accessed with the same memory protocol, the load/store instructions, as their predecessors DDR4, DDR3 and so on.

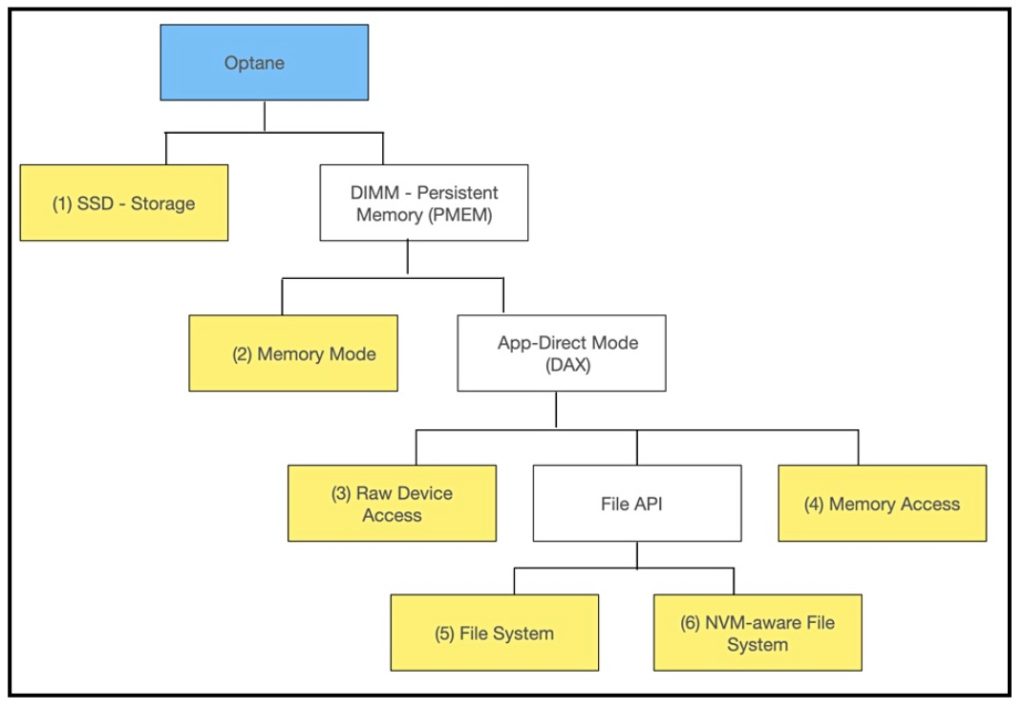

3D XPoint can be accessed either as storage, with Optane SSDs, or as memory, with Optane Persistent Memory (PMEM) products built in the DIMM form factor. In effect, that makes it two products.

Accessing it as storage is simple: an Optane SSD is just another NVMe drive, albeit a faster one than flash SSDs.

Accessing it as memory is harder because it can be done in five different ways. The first choice is between Memory Mode and App Direct Mode (also called DAX, or Direct Access Mode). DAX can be subdivided into three options: Raw Device Access, access via a File API, or Memory Access. The File API option then has two further sub-options: via a standard file system or via a Non-volatile Memory-aware (NVM-aware) file system.
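The five routes are easier to count when written out as a tree. The sketch below simply encodes the taxonomy described above as a nested Python dictionary; the names `PMEM_ACCESS_MODES` and `leaf_paths` are hypothetical, and each leaf is one access method a developer could pick.

```python
from typing import Dict, Iterator, Tuple

# The PMEM access-mode taxonomy described above; an empty dict marks a leaf.
PMEM_ACCESS_MODES: Dict[str, dict] = {
    "Memory Mode": {},
    "App Direct Mode (DAX)": {
        "Raw Device Access": {},
        "File API": {
            "File System": {},
            "NVM-aware File System": {},
        },
        "Memory Access": {},
    },
}


def leaf_paths(tree: Dict[str, dict], prefix: Tuple[str, ...] = ()) -> Iterator[Tuple[str, ...]]:
    """Yield the path from the root to every leaf, i.e. every concrete access method."""
    for name, children in tree.items():
        path = prefix + (name,)
        if children:
            yield from leaf_paths(children, path)
        else:
            yield path


if __name__ == "__main__":
    for p in leaf_paths(PMEM_ACCESS_MODES):
        print(" > ".join(p))
```

Run as a script, it prints the five routes in order: Memory Mode; App Direct Mode (DAX) > Raw Device Access; App Direct Mode (DAX) > File API > File System; App Direct Mode (DAX) > File API > NVM-aware File System; and App Direct Mode (DAX) > Memory Access.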

With so many PMEM access modes, developers of applications that use Optane PMEM have to decide which mode to use and then write and test the code, which takes months of effort.

Let’s make a couple of extra points. As storage, an Optane SSD is neither fast enough nor cheap enough (in cost/GB) to replace NAND NVMe SSDs. The 64-layer and 96-layer 3D NAND SSDs currently shipping are less expensive to make than 2-layer Optane SSDs. The coming 4-layer Optane drives should help to narrow the cost gap between Optane and NAND SSDs.

Optane PMEM is slower than DRAM. Its appeal is that a server fitted with Optane has more total memory capacity (4.5TB vs 1.5TB) and can run more applications or VMs, faster, than a maxed-out DRAM-only server. That makes Optane PMEM a high-end, application-centric choice and not a generic I-want-my-servers-to-go-faster one.

Application support for Optane PMEM is expanding all the time, and this will broaden its market appeal.

In effect, Optane PMEM is not fast enough to be a generic DRAM substitute, and getting the best out of it means choosing and tuning the right access mode.

Note. Janus was the Roman god of beginnings and transitions, dualities and doorways. He is often depicted as having two faces looking in opposite directions.