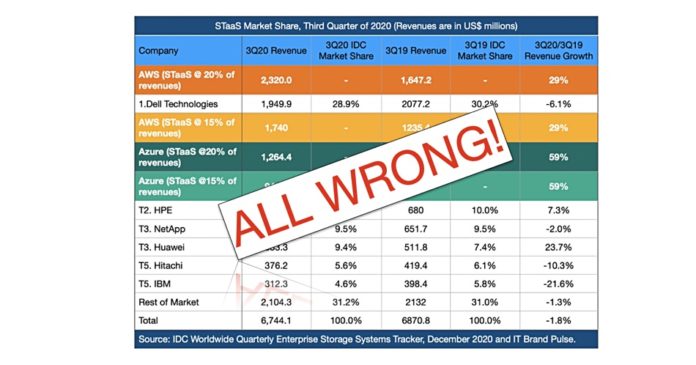

A couple of weeks ago, we reported IT Brand Pulse’s estimates that placed AWS as the world’s biggest – or second biggest – enterprise storage supplier. HPE’s Neil Fleming thought the comparison was flawed and now Dell has waded in.

A Dell spokesperson who declined to be named said that if the company’s storage sales are measured on the same basis as that implied by the IT Brand Pulse study, “One would find that Dell Technologies’ Q3 2020 storage sales were assessed as external storage hardware, storage software and internal storage for a total IDC estimates exceeds $6 billion… roughly 3-4 times larger than the assumed AWS STaaS revenue.”

To recap, IT Brand Pulse compared IDC Storage Tracker supplier storage sales with AWS and Azure storage service revenues, calculated as a percentage of the cloud giants’ published IaaS revenues. Dell said this is “truly an apples and oranges comparison” that misunderstands the basis of the two sets of numbers.
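For readers who want to check the arithmetic, here is a rough sketch of the two calculations in play. It assumes the spokesperson's figures can be treated as round numbers ($6 billion as a floor and a ratio of 3 to 4), and the function names and the `storage_share` parameter are our own illustrations, not anything published by IT Brand Pulse, IDC or Dell.

```python
# Figures quoted by Dell's spokesperson, treated here as round numbers.
DELL_STORAGE_SALES_BN = 6.0       # "exceeds $6 billion", so this is a floor
RATIO_LOW, RATIO_HIGH = 3.0, 4.0  # "roughly 3-4 times larger"


def implied_aws_staas_range(dell_sales_bn, ratio_low, ratio_high):
    """Return the (low, high) AWS STaaS revenue implied by Dell's ratio."""
    return dell_sales_bn / ratio_high, dell_sales_bn / ratio_low


def storage_share_estimate(iaas_revenue_bn, storage_share):
    """Estimate storage revenue as a fraction of published IaaS revenue.

    storage_share is a hypothetical input; the article does not state
    the percentage IT Brand Pulse applied.
    """
    return iaas_revenue_bn * storage_share


low, high = implied_aws_staas_range(DELL_STORAGE_SALES_BN, RATIO_LOW, RATIO_HIGH)
print(f"Implied AWS STaaS revenue: ${low:.1f}bn to ${high:.1f}bn")
```

On those assumptions, Dell's statement implies an assumed AWS storage-as-a-service figure of roughly $1.5 billion to $2 billion for the quarter.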

The spokesperson noted the IT Brand Pulse calculations do not factor in Dell’s storage and service-based storage revenues. “The [IDC] tracker used for the comparison focuses only on external storage hardware sales and does not include storage software, internal server storage or storage services/support.”

Furthermore, “this Dell Tech figure also does not account for storage service and support revenue. Further, considering that the public cloud storage service is mostly delivered from server-based internal storage, the comparison is off by a huge measure.”

The spokesperson liked HPE senior manager Neil Fleming’s comparison of AWS with Avis car rental, and of HPE and Dell with Ford and Chevy car dealerships, enough to extend the car-ownership analogy. “Consider a ride sharing service vs. owning a car. When you use a ride share service, you’re paying more for the gas, maintenance, mileage, operator, etc. Using the service full time costs a lot more than the cost of acquiring a vehicle.”

The AWS and Azure public clouds have to buy their storage drives and enclosures of course, but “AWS will have more associated costs built into their storage service that are substantially more than the cost of hardware alone [and] the costs of running the systems includes space, power, networking, operation services, etc.”

IDC and Gartner view

There is a general problem here in how the storage industry’s output is measured, and Dell is talking to IDC about resolving it. “We’re discussing this with the firm and looking to better understand their methodology for both AWS and IDC-tracked storage vendors,” the company spokesperson said.

IDC’s Matt Eastwood, SVP Enterprise Infrastructure, Cloud, Developers and Alliances, told us: “It is true that the delivery of an as-a-service offering includes a number of costs such as datacenter facility, power, cooling, utilities, taxes, administration, etc. that are included in the OPEX pricing which of course are not included in CAPEX product pricing.

“And there are a number of intangibles such as workload optimisation, utilisation, media density, scale, admin ratios and even location (land cost, construction costs, taxes, utility costs, labour costs, etc.) that weigh on the full cost of delivery.

“We have been discussing what IDC might do to help showcase some of these considerations. That said, we don’t have any plans to mix a CAPEX view of storage (aka Storage Tracker) with an OPEX view of cloud storage services (aka cloud service tracker) as the number of intangibles is too broad and variable to do this fairly and accurately.”

Ashish Nadkarni, IDC’s Group VP in its Worldwide Infrastructure Practice, added: “At IDC, we take care not to mix and match revenue types. It is for this reason that we have a separate software, public cloud services and enterprise infrastructure trackers (or data products) – each with its own revenue collection and attribution model. Amazon Web Services shows up as a vendor in the public cloud services tracker but is not even listed in the enterprise infrastructure tracker.”

Regarding discussions with Dell and others, he said: “We have been discussing how best to capture flexible consumption (as-a-service) revenue from OEMs. We need to be careful not to lump it in the public cloud services trackers (even though on the face of it they are as-a-service revenues). As such a hasty comparison would be flawed, and warrants a very nuanced approach at the very least.”

A Gartner spokesperson told Blocks & Files: “While we do track overall storage market share, we do not currently track the combination of storage segments that you are looking at and it is not on our 2021 research agenda to begin tracking it. Because we don’t currently measure these segments, we aren’t able to speak about methodology and measurement related to storage sales to enterprises from the public cloud and on-premises vendors.”