Fungible Inc. has launched the FS1600, a storage appliance driven by its DPU microprocessor, as the next step in its quest for composable data centre domination.

Fungible’s DPU is a dedicated microprocessor that handles millions of repetitive IT infrastructure-related processing events every second. It does this many times faster than an x86 CPU or even a GPU.

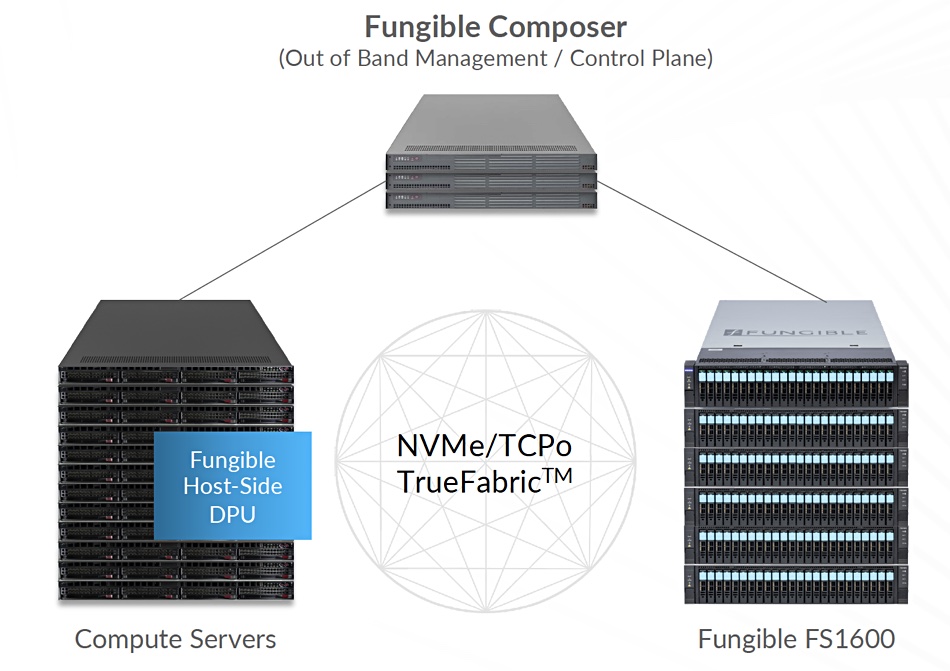

The lavishly funded startup also intends to deploy DPUs in servers. Its goal is to link its products across a TrueFabric network, which is still in development, using its Composer control plane to build massively scalable and efficient data centres linking GPU and x86 servers. Fungible GPU server cards are expected by the end of 2021 and should deliver data from FS1600 storage servers faster than GPUDirect storage systems.

Pradeep Sindhu, CEO and founder of Fungible, said in a launch statement: “Today, we demonstrate how the breakthrough value of the Fungible DPU is realised in a storage product. The Fungible Storage Cluster is not only the fastest storage platform in the market today, it is also the most cost-effective, reliable, secure and easy to use.”

He added: “This is truly a significant milestone on our journey to realise the vision of Fungible Data Centres, where compute and storage resources are hyper-disaggregated and then composed on-demand to dynamically serve application requirements.”

Fungible Storage Cluster

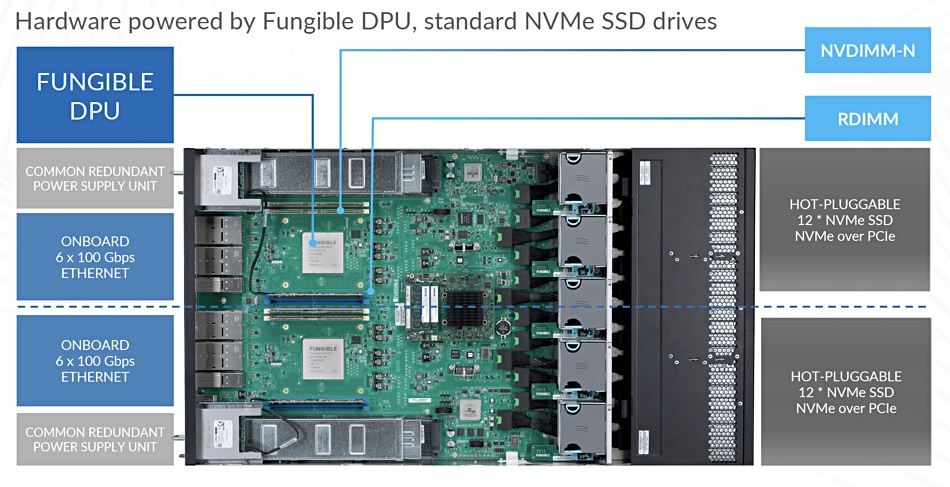

The Fungible FS1600 storage server is a data plane system. It is managed from a separate control plane, Fungible Composer, which runs on a standard x86 server.

Fungible Composer is an orchestration engine and provides services for storage, network, telemetry, NVMe discovery, system and device management. Storage, network and telemetry agents in the FS1600 storage server link to these services.

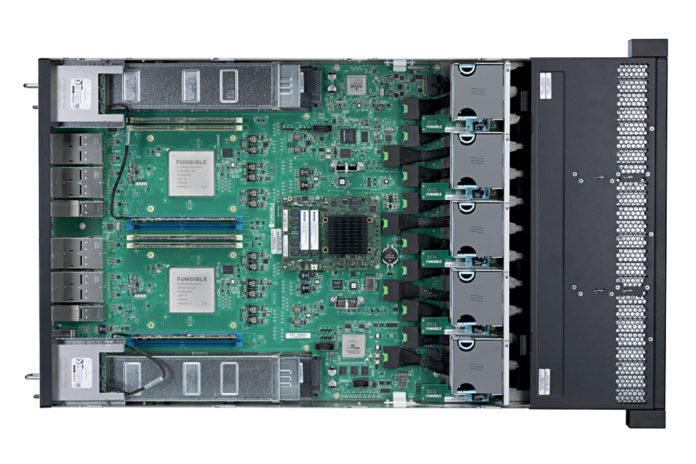

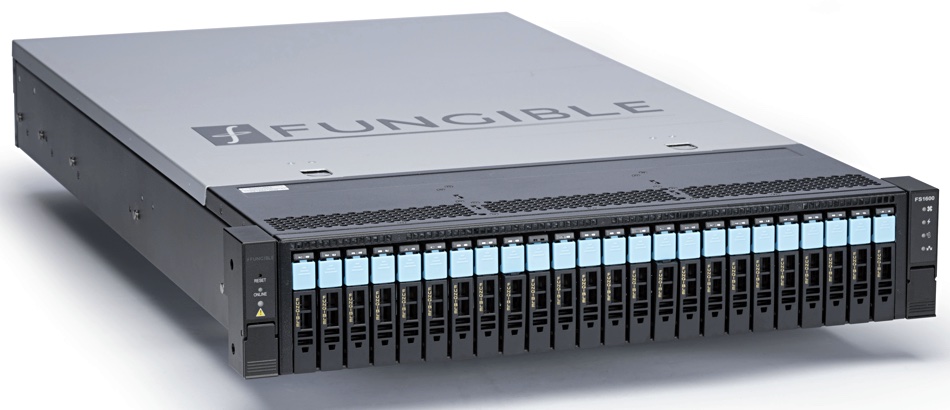

The FS1600 is a scale-out block storage server in a 2RU, 24-slot NVMe SSD box, with two F1 variant DPUs controlling its internal activities. It is accessed via NVMe-over-Fabrics across TCP.

The FS1600 can be deployed as a single node or clustered, with or without node failure protection, out to hundreds or even thousands of nodes.

It differs from any other 24-slot, 2RU box filled with SSDs because it is substantially faster, offering 15 million IOPS compared to the more typical two to three million IOPS of other 24-slot boxes. That’s with 110μs latency, 576TB capacity, and inline compression, encryption and erasure coding. It means a rack full of FS1600s could output 300 million IOPS.
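The rack figure can be sanity-checked with a little arithmetic. The sketch below assumes IOPS scale linearly with node count, which the article does not state, and simply works out how many 2RU nodes the 300 million IOPS figure implies.

```python
# Figures quoted in the article.
IOPS_PER_NODE = 15_000_000
RACK_UNITS_PER_NODE = 2
RACK_IOPS_CLAIM = 300_000_000


def nodes_for_iops(target_iops: int, iops_per_node: int = IOPS_PER_NODE) -> int:
    """Nodes needed to reach target_iops, ASSUMING perfectly linear scaling."""
    return -(-target_iops // iops_per_node)  # ceiling division


nodes = nodes_for_iops(RACK_IOPS_CLAIM)
rack_units = nodes * RACK_UNITS_PER_NODE

print(f"{nodes} nodes occupying {rack_units}RU")
```

Under that assumption the claim corresponds to 20 nodes in 40RU, which would fit in a common 42U rack with a little space left for switching.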

Fungible claims it offers a 5x media cost reduction versus hyperconverged infrastructure (HCI) in a 1PB effective capacity deployment. That’s because it has better SSD utilisation, meaning less over-provisioning, and efficient erasure coding protection, equivalent to 3-copy replication.
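To illustrate why erasure coding uses media less wastefully than replication, here is a minimal sketch comparing the raw capacity each scheme needs for 1PB of effective capacity. The article does not say which erasure coding scheme the FS1600 uses; the 4+2 layout below is a hypothetical example chosen because, like 3-copy replication, it survives two simultaneous failures.

```python
def raw_capacity_replication(effective_tb: float, copies: int) -> float:
    """Raw capacity needed when every block is stored `copies` times."""
    return effective_tb * copies


def raw_capacity_erasure(effective_tb: float, data: int, parity: int) -> float:
    """Raw capacity needed for a data+parity erasure coding layout."""
    return effective_tb * (data + parity) / data


effective_tb = 1000.0  # 1PB effective capacity, as in the article's comparison

replicated = raw_capacity_replication(effective_tb, copies=3)
# 4+2 is an ASSUMED layout for illustration, not a published FS1600 setting.
erasure = raw_capacity_erasure(effective_tb, data=4, parity=2)

print(f"3-copy replication: {replicated:.0f}TB raw")
print(f"4+2 erasure coding: {erasure:.0f}TB raw")
print(f"Media ratio: {replicated / erasure:.1f}x")
```

With these assumed parameters, protection overhead alone gives a 2x difference in raw media, so the rest of the claimed 5x would have to come from the other factors Fungible cites, such as better SSD utilisation and less over-provisioning.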

According to Fungible, the FS1600 substantially outperforms competing systems and is much cheaper at petabyte scale because it uses the media less wastefully. A 2-node FS1600 cluster can deliver 18 million IOPS, which is about 10 times faster than an equivalent Dell EMC Unity-style system and 30 times faster than Ceph.

There are three configurations:

- Fast (7.6TB SSDs, 81 IOPS/GB, 326MB per sec/GB)

- Super Fast (3.8TB SSDs, 163 IOPS/GB, 651MB per sec/GB)

- Extreme (1.9TB SSDs, 326 IOPS/GB, 1,302MB per sec/GB)
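The IOPS density figures for the three configurations can be cross-checked against the headline 15 million IOPS per box. This sketch assumes all 24 slots hold the same drive size, that the IOPS/GB figure is relative to raw capacity, and that units are decimal (1TB = 1,000GB); none of these is confirmed by the article.

```python
SLOTS = 24  # NVMe SSD slots per FS1600, from the article

# name: (SSD size in TB, IOPS per GB), as listed in the article
CONFIGS = {
    "Fast": (7.6, 81),
    "Super Fast": (3.8, 163),
    "Extreme": (1.9, 326),
}


def node_iops(ssd_tb: float, iops_per_gb: float, slots: int = SLOTS) -> float:
    """Implied IOPS for a fully populated node (ASSUMES raw, decimal capacity)."""
    capacity_gb = ssd_tb * slots * 1000
    return capacity_gb * iops_per_gb


results = {name: node_iops(tb, density) for name, (tb, density) in CONFIGS.items()}

for name, iops in results.items():
    raw_tb = CONFIGS[name][0] * SLOTS
    print(f"{name}: {raw_tb:.1f}TB raw, about {iops / 1e6:.1f} million IOPS")
```

Under these assumptions each configuration works out to just under 15 million IOPS, which suggests the three options trade capacity for performance density while the per-box IOPS ceiling stays the same.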

The FS1600 will support 15TB SSDs at a later date, along with deduplication, snapshots, cloning and NVMe over RoCE.

Potential customers

Fungible is targeting tier 2 cloud service providers. It says its technology gives them four to five times more performance than AWS and Google Cloud.

The company cites Uber, Dropbox and FlipCard as examples of tier 2 CSPs. Fungible also hopes to sell DPU chips to hyperscalers of the AWS, Azure and Facebook class. It is staying away from the enterprise market, but we don’t know if that is a for-now or forever thing.

FS1600 clusters are available today from Fungible and partners.