…

A partnership between Robin.io, Blue Arcus and KloudSpot will combine the technologies needed to enable end-to-end 4G and 5G services and provide a platform to accelerate deployment of 5G and edge applications. The consortium will bring together capabilities such as location-aware, AI-led video analytics, surveillance, data analysis, hyper automation, network slicing, cloud-native application lifecycle management and hyperscale orchestration. The partners hope this will halve the time-to-market and operating costs for evolving digital businesses at the edge.

…

WeRide, China’s leading L4 autonomous driving company, is using Alluxio’s Data Orchestration software as a hybrid cloud storage gateway so that on-premises applications can access public cloud storage such as AWS S3. Each location has its own local cache, which eliminates redundant requests to S3. As well as removing the complexity of manual data synchronisation, Alluxio serves data directly to engineers in the same office who are working with the same data, avoiding the transfer costs associated with S3 and improving end-user work efficiency several-fold.
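The per-location cache described above follows the read-through pattern: the first read of an object goes to S3, and later reads of that object from the same office are served locally. The sketch below illustrates only that general pattern. It is not Alluxio’s implementation or API; `LocationCache` and `fetch_from_s3` are hypothetical names, and the fetch function is a stand-in for a real object-store client.

```python
from typing import Callable, Dict


class LocationCache:
    """Hypothetical per-office read-through cache in front of an object store."""

    def __init__(self, fetch_remote: Callable[[str], bytes]) -> None:
        self._fetch_remote = fetch_remote  # called only on a cache miss
        self._store: Dict[str, bytes] = {}
        self.remote_requests = 0  # counts round trips to the remote store

    def read(self, key: str) -> bytes:
        if key not in self._store:
            # Miss: fetch once from the remote store and keep a local copy.
            self._store[key] = self._fetch_remote(key)
            self.remote_requests += 1
        # Hit (or freshly filled entry): serve from the local copy.
        return self._store[key]


def fetch_from_s3(key: str) -> bytes:
    # Hypothetical stand-in for a real S3 GET; returns dummy data.
    return f"contents of {key}".encode()


cache = LocationCache(fetch_from_s3)
for _ in range(3):  # three engineers in one office read the same object
    cache.read("drives/2021-06-01/lidar.bin")
print(cache.remote_requests)  # only the first read reached the remote store
```

Three reads of the same object produce a single remote request. A production gateway also has to deal with eviction, consistency with the bucket and writes back to S3, none of which this sketch attempts.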

…

A Cycle and Backblaze B2 Cloud Storage integration enables companies that use containers to automate and control stateful data across multiple providers from one dashboard. The partnership lets developers deploy containers without dealing with Kubernetes complexity and unify their application management, including automating or scheduling backups through an API-connected portal. They can choose the microservices and technologies they need without, the two claim, compromising on functionality.

…

Panzura has appointed Brian Brogan as its new VP of Global Sales Channels, looking after system integrators, value-added resellers, technology alliance partners, and OEMs. He comes to Panzura with more than two decades’ experience in the IT channel, having served in leadership roles at Automation Anywhere, SAP, EMC (now Dell EMC), and IBM.

…

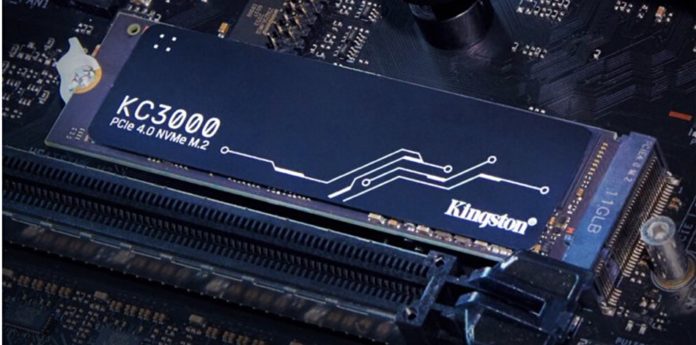

Kingston Technology’s forthcoming KC3000 NVMe PCIe Gen-4 SSD promises extraordinary performance. Read and write bandwidth is said to be up to 7GB/sec, and both read and write IOPS reach up to 1,000,000. This M.2 2280 format drive comes in 512GB, 1TB, 2TB and 4TB capacities. It uses TLC 3D NAND and a Phison PS5018-E18 controller, and has a graphene heat-spreading layer atop the card. Endurance, in terabytes written (TBW), is 400TBW for the 512GB model, 800TBW for 1TB, 1,600TBW for 2TB and 3,200TBW for 4TB.
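The endurance ratings scale roughly linearly with capacity. As an illustration, dividing each TBW figure by the drive’s capacity gives the number of full-drive writes that model is rated for. The sketch below does that arithmetic; `full_drive_writes` is a hypothetical helper, and it assumes decimal units (1TB = 1,000GB). It stops short of drive writes per day, which would also need the warranty period, not stated here.

```python
# Rated endurance from the announcement: capacity in GB -> TBW.
ENDURANCE_TBW = {512: 400, 1000: 800, 2000: 1600, 4000: 3200}


def full_drive_writes(capacity_gb: int, tbw: int) -> float:
    """How many times the whole drive can be overwritten within its TBW rating.

    Assumes decimal units (1TB = 1,000GB).
    """
    return tbw * 1000 / capacity_gb


for capacity_gb, tbw in ENDURANCE_TBW.items():
    print(f"{capacity_gb}GB: {full_drive_writes(capacity_gb, tbw):.0f} full-drive writes")
```

On this basis the 512GB model is rated for about 781 full-drive writes and the 1TB, 2TB and 4TB models for 800 each.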

…

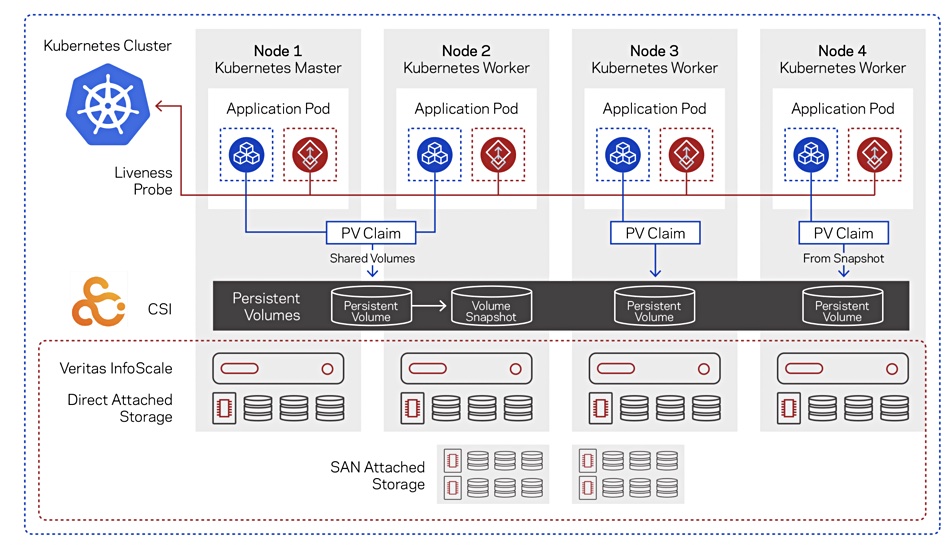

Zerto for Kubernetes (Z4K) delivers disaster recovery, backup, and data mobility to containerized applications. The new version adds support for VMware Tanzu (6.7 and 7.0) and Rook Ceph; protects stateless applications for both backup and disaster recovery, alongside the existing support for stateful applications and persistent volumes; and can restore to a separate namespace, so you can test recovery without affecting production namespaces. A Zerto blog discusses all this.

…

Tape is green, at least compared to disk, and brings green ($) to Fujifilm. The company has announced its Sustainable Data Storage Initiative to highlight how tape technology can reduce the electricity consumption and CO2 emissions associated with data storage. The initiative launches with a sponsored white paper from technology research firm IDC, Accelerating Green Datacenter Progress with Sustainable Storage Strategies, which analyses the energy savings and resulting environmental benefits of moving more data to tape storage. And moving more data to tape, of course, contributes to Fujifilm’s business. Self-serving? Moi? Mais non!

…

Quantum has updated its CatDV 2021 products with major new features, performance enhancements, and a range of new deployment options. The update introduces a review and approval framework with real-time messaging, support for clip stacking and meta-folders to organise content flexibly, and versioning, all intended to make team-based collaboration faster and more focused.

New features include:

- StorNext file system metadata integration for dramatically more efficient file system operations on StorNext systems;

- Faster duplicate file detection on file moves, copies, and renames;

- Improved playback and export, including rendering pipelines powered by Nvidia RTX GPUs;

- Extended support for image sequences, DPX and EXR content;

- Better performance and broader support for camera RAW and native formats, including Canon, Blackmagic, and RED;

- Proxy and mezzanine creation from Avid DNx media;

- Docker deployment support via XML deployment configuration for rapid testing, evaluation, and cloud deployments;

- Precision web playback in Google Chrome and Firefox clients, with support for scrubbing and clip annotation;

- Extended theming and customisation of the web client;

- Multiple User Roles support and User Directory integration improvements for LDAP/SAML environments;

- Native CatDV support for Two-Factor Authentication using authenticator applications.

…

Storage Made Easy has released a new version of its secure multi-cloud data management software, the Enterprise File Fabric. It features SMBStream office-to-office file acceleration, an updated Microsoft Teams app, an AutoCAD previewer, Data Automation Rule enhancements and a Secure Link Sharing update, and File Fabric’s SMB Connector can now use SAML for delegated authentication. The integrated content search engine has been upgraded to Solr 8, and the audit event log sees numerous improvements. Also included are M-Stream Fast File Transfer WAN acceleration and enhancements to all connectors, including RStor, SoftNAS, Qumulo, Pixit Storage and Seagate Lyve. NetApp Object Storage, NetApp Global File Cache and LucidLink have been added as certified storage connectors.

…