Interview; Storage IO slows down applications and there is nothing you can do about it, except by not doing it. In-memory computing accelerates applications to sprint speed by having them run entirely in memory. This eliminates storage IO, except when the application is first loaded into memory.

However, memory is expensive and capacity per server is limited by the number of sockets and channels up to 1.5TB. If an application’s code and data is larger than 1.5TB it can’t all run in memory. Some storage IO Is necessary.

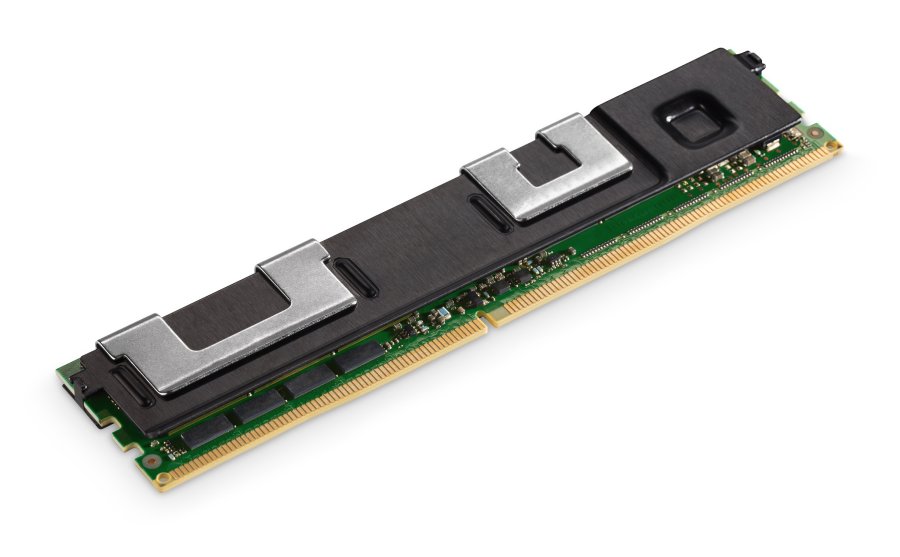

The use of Optane persistent memory increases server memory capacity to a maximum of 4.5TB per server, greatly increasing the space available for in-memory applications. Newer memory technologies such as DDR5 and HBM should enable in-memory applications to run even faster. But how much faster?

John DesJardins, CTO of Hazelcast, the developer of an in-memory computing platform, has shared his thoughts with us about Intel Optane PMem, HBM and DDR5 DRAM. Check out our interview below.

Blocks & Files: DRAM is expensive so an in-memory app needs costly memory. Is storage-class memory, like Optane, worth the money?

John DesJardins: Hazelcast has benchmarked with Intel Optane and found it performs well, offering an attractive, lower cost alternative to DRAM.

Blocks & Files: How does Hazelcast extend memory by using persistent memory like Optane or Samsung’s Z-SSD or Kioxia’s X SSD?

DesJardins: Hazelcast is optimised as a pure in-memory platform, meaning that our data, indexes and metadata all are retained in-memory for ultra-low-latency performance. So, we don’t use SSDs or other storage to extend memory. We do offer persistence features for ultra-fast restarts or optionally for data structures such as within our CP Subsystem. These persistence features can leverage either Optane PMem or SSDs including Intel Optane SSD, OCZ Octane SSD, Samsung Z-SSD, etc.

Blocks & Files: How does it compare and contrast these different storage-class memory devices?

DesJardins: Intel Optane PMem dramatically outperforms other storage class memory in our benchmarks. We see nearly 3X faster restart times with Optane PMem, for example.

Blocks & Files: How does it use Optane PMem such that no app code changes are needed?

DesJardins: Hazelcast provides advanced Native Memory features that extend beyond Java to fully leverage memory and storage interactions available from the Operating System level, and this has been extended to leverage Intel libraries for Optane PMem. These capabilities are all abstracted and managed via simple configurations within our platform, eliminating any need for application code changes to leverage Optane PMem.

Blocks & Files: Can Hazelcast show price performance data to justify PMem use?

DesJardins: We are working with Intel on a paper that will include the price/performance TCO figures to justify Optane PMem. We expect to release this paper within the next few weeks and are happy to share it.

Blocks & Files: What does Hazelcast think of DDR5?

DesJardins: Hazelcast tracks and evaluates all advances in hardware technology to assess their impact and the benefits to our customers. As they become available on the market, we will do more detailed benchmarks. We see good potential in DDR5 to improve performance.

However, as the standard for DDR5 DIMMs was only released on July 14, 2020, we have not yet done benchmarks to know what that level of impact will be. Until now, DDR5 was only available integrated into GPUs and other specialised processors.

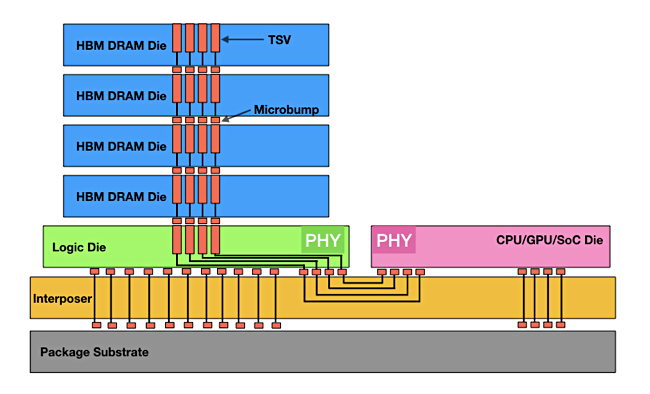

Blocks & Files: What does Hazelcast think of High Bandwidth Memory technology?

DesJardins: We are tracking High Bandwidth Memory technology but have not yet seen it mad available in server class hardware or from Cloud Service Providers outside of being integrated with GPUs or other specialised processors. The first availability of HBM in general purpose DIMMs seems to be with DDR5. We will continue to track demand and availability, as well as assess how these technologies apply to our customers’ use-cases. As technology is available, we will benchmark with it, and where it makes sense, optimise our platform to leverage it.

Blocks & Files: Does in-memory processing benefit containerised apps? Can Hazelcast work with Kubernetes?

DesJardins: Yes, in-memory platforms work very well within containerised applications. Hazelcast is Cloud-Native and supports Kubernetes, as well as Red Hat OpenShift, VMWare Tanzu and Kubernetes on major Cloud Service Providers.

Comment

The net:net here is that in-memory applications will get larger and execute in less time. They have an inherent advantage over traditional applications that can’t fit into memory and have to use storage IO.

But in-memory compute will still only be available to a minority of applications overall, until memory capacity limits such as CPU sockets and memory channels are swept away, and until DRAM becomes more affordable.