Independent cloud file services supplier Nasuni is seeking a spot at the top cloud file services table, citing an improved technology that doesn’t require lock-in, and claimed lower costs than rivals NetApp, AWS, Azure and Google Cloud Platform.

Update. Nasuni’s mistaken 192TB filesystem limit for AWS FSx ONTAP corrected. 18 February 2022.

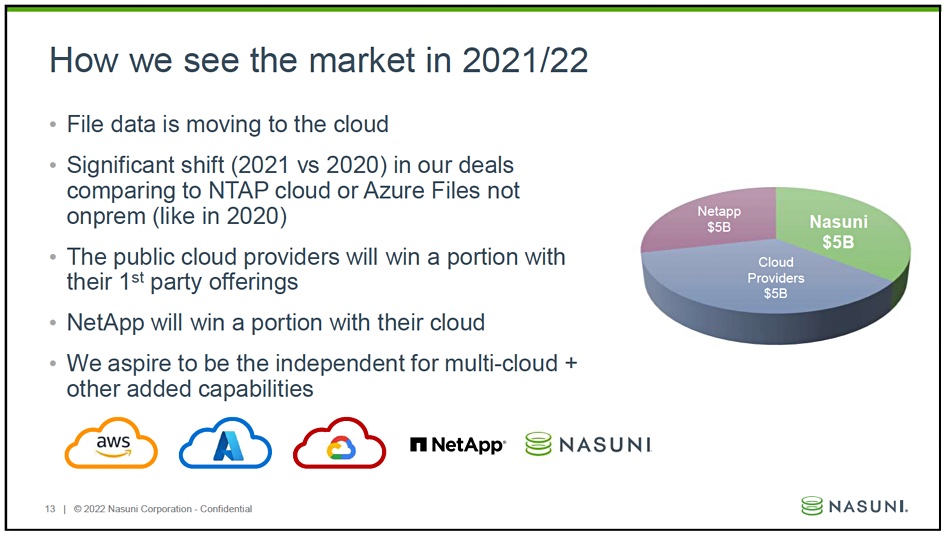

Its ambition is to grab a $5 billion share of the cloud file market, which is quite audacious seeing as it would equal the share of cloud filer giant NetApp – or the combined share of the top public cloud trio of hyperscalers AWS, Azure, and Google Cloud Platform.

Nasuni, unsurprisingly, sees NetApp and its data fabric offerings as its biggest competition. Nasuni believes it can grow and prosper by similarly working with the titan cloud trio – it has co-sell deals with them – and, in theory, offering simpler technology, lower costs and better services.

It’s a competitive sphere, with not much new growth, it seems. Nasuni president and CEO Paul Flanagan said “40 per cent of our business is business we’ve taken” from competitors.

Co-selling

COO David Grant said “We believe that the cloud companies, Azure, and AWS, will win their fair share of this market with their own first-party offerings. We also believe that NetApp will win a fair share of the portion of this market, because they have a lot of happy customers that have been using them for 20 years.

“But we also believe that there is room for an independent vendor, being us in this case. We aspire to be that independent. … We think it’s essentially a race right now that when you look at pure cloud file storage sales, Azure, AWS, NetApp and Nasuni are roughly the same size, maybe Azure’s a little bit bigger.”

He said “We have co-sell relationships with all three cloud providers. Last year, they brought us about 30 per cent of our business.”

He said Microsoft brings Nasuni into accounts when scale is needed. “Azure Files is a good product, but it’s a good product for small and medium sized businesses. It’s not a product suitable for scale.”

It’s different with AWS. “They have a lot of high-end HPC type offerings [like] FSx for NetApp, but in the middle, they don’t cover a lot of the general NAS with that product, because … it’s extremely costly [and] they have a lot of limits on how big it can scale.”

Google has “been a great relationship for us. They brought us a lot of good accounts in 2021. And they continue to sell us as an option, as … they do not have a file storage product except for on the high end side of things with Filestore.”

Nasuni’s growth

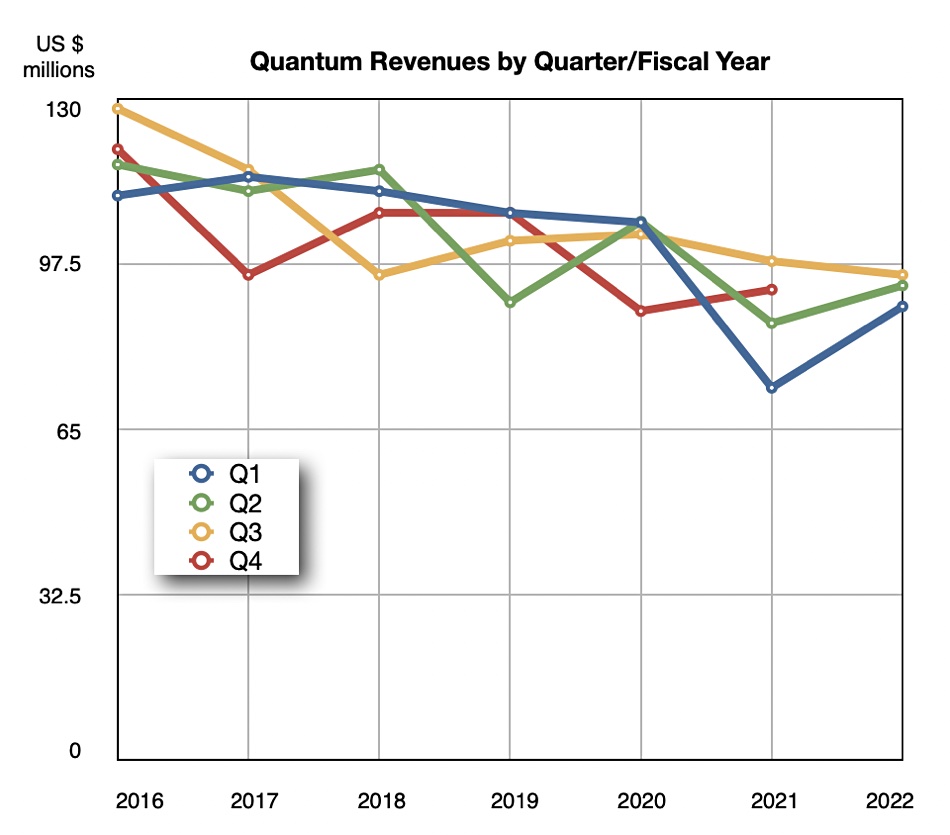

Flanagan said “We’re building a file services platform that is servicing some of the largest companies in the world. … our revenues continue to grow,” with a 30 per cent year-on-year annual recurring revenue growth rate in both 2020 and 2021. The new customer growth rate was 82 per cent in 2021, and the year ended with around 600 enterprise customers. Between 98 and 99 per cent of its customers renew their yearly subscriptions.

He claimed the company has a “120 per cent revenue expansion rate.”

The Nasuni boss added “So that means if a customer is spending $1, this year, next year, they’re going to spend $1.20. In the year after that, they’re going to spend $1.50, and the year after that they’re going to spend $1.85.”
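As a quick check on that arithmetic, here is a minimal sketch that compounds a 120 per cent expansion rate year over year. It assumes the rate applies uniformly every year, which the quote does not state, and the function name `projected_spend` is ours. Strict compounding gives $1.44 and about $1.73 for years two and three, so the quoted $1.50 and $1.85 read as loose illustrations rather than exact figures.

```python
def projected_spend(initial: float, expansion_rate: float, years: int) -> list[float]:
    """Spend per year, assuming the same expansion rate compounds annually."""
    return [round(initial * expansion_rate ** n, 2) for n in range(years + 1)]


# $1 today, expanding at 120 per cent a year for three years.
print(projected_spend(1.00, 1.20, 3))
```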

How the technology works

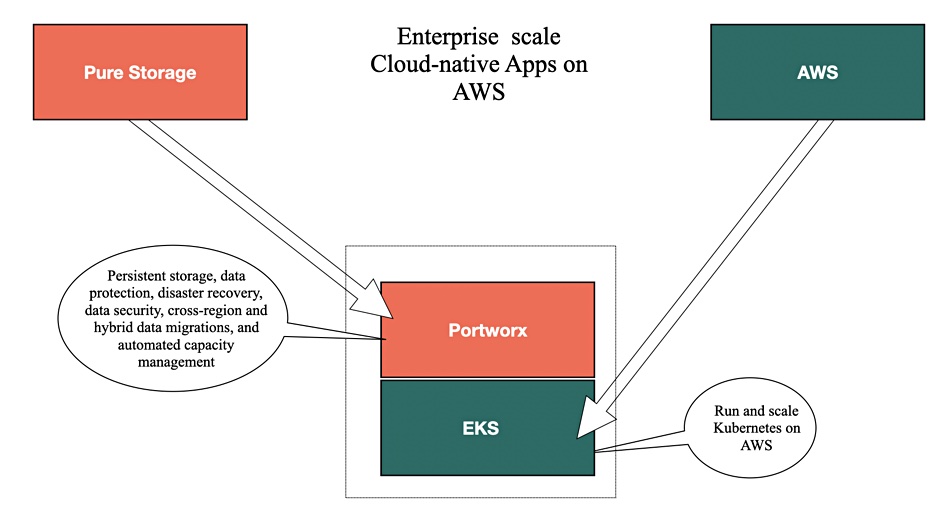

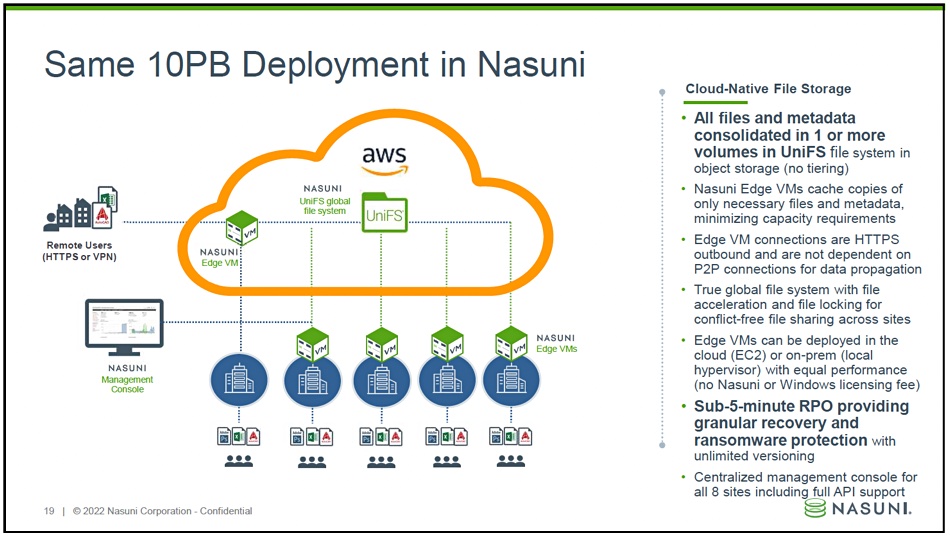

Nasuni presents a file system to its users who access it via so-called edge appliances. These are typically virtual machines or actual x86 appliances running Nasuni’s software on-premises or in the public clouds and offered in a SaaS business model. They provide local caching and access to Nasuni’s global UniFS filesystem data and metadata stored in object form in the public clouds.

Flanagan said “We have fundamentally changed the entire architecture by writing to object storage.”

UniFS has built-in global locking, file backup, disaster recovery and file synchronisation – reflecting Nasuni’s origin as a file sync and sharer – and helps with ransomware recovery through its versioning system. Because it is cloud-native, Nasuni says it is limitlessly scalable – unlike its main competitor, NetApp.

This point was hammered home by Nick Burling, Nasuni’s product management VP, who said “The edge caching is fundamental” and is backed up by limitless cloud object storage and limitless numbers of immutable snapshots, with single-pane-of-glass management. This then “leads into being able to displace other services that customers are having to leverage, like file backup [and] disaster recovery.”

The edge VMs or appliances have Nasuni software connectors that enable file migration off existing NAS systems into Nasuni’s UniFS system. Nasuni’s edge software also deduplicates, compresses and encrypts locally written data before sending it up to the customer’s chosen cloud object store.
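To make the deduplicate, compress, encrypt sequence concrete, here is a rough sketch of what such an edge-side pipeline could look like. It is not Nasuni's implementation: the fixed-size chunking, the SHA-256 content keys, the in-memory `store` dictionary standing in for a cloud bucket, and the function names are all assumptions for illustration. Encryption is left as a pluggable placeholder (identity by default) because Python's standard library has no built-in cipher.

```python
import hashlib
import zlib


def prepare_for_upload(data: bytes, store: dict, encrypt=lambda b: b,
                       chunk_size: int = 4) -> list[str]:
    """Split data into chunks, skip chunks already stored, compress then encrypt the rest.

    Returns a manifest: the ordered list of chunk keys needed to rebuild the data.
    """
    manifest = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        key = hashlib.sha256(chunk).hexdigest()  # content address of the chunk
        if key not in store:  # deduplication: identical chunks are stored once
            store[key] = encrypt(zlib.compress(chunk))
        manifest.append(key)
    return manifest


def restore(manifest: list[str], store: dict, decrypt=lambda b: b) -> bytes:
    """Rebuild the original bytes by reversing encryption and compression per chunk."""
    return b"".join(zlib.decompress(decrypt(store[key])) for key in manifest)


bucket = {}  # hypothetical stand-in for the customer's object store
keys = prepare_for_upload(b"abcdabcdwxyz", bucket)
print(len(keys), "chunks referenced,", len(bucket), "chunks stored")
```

A real system would use much larger chunks and a real cipher passed in through the `encrypt` and `decrypt` hooks; the tiny chunk size here only makes the deduplication visible.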

There are separate control and data planes and “Nasuni is never in the data path for our customers. They own and manage their own object storage account.”

Nasuni’s software is built to be generic in the way it provides gets, puts and versioning to the cloud object stores. It does its own versioning rather than relying on each cloud vendor’s scheme. Burling said “We made a decision early on to build out our own versioning system independent of any object store – it took a lot of time. It’s a very, very big piece of IP for us. But it gives us the flexibility we need.”

He told us “If you use the versioning of the [CSP’s] object store, we’ll never get the performance that you can get from our custom versioning. … They built versioning for lots of different use cases. We built our versioning specifically for a file system. And that really changes the game in terms of how you construct, where you convert from, how you construct, where you convert back to, and the relationships between objects.”
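Nasuni's versioning scheme is proprietary and not described in detail, but the general idea of layering file-system versions on top of plain gets and puts can be sketched. In the toy example below, which is our own illustration and not Nasuni's design, every version is an immutable manifest object mapping file paths to content-addressed data objects. The class name `VersionedStore` and its methods are hypothetical, and a dictionary stands in for the bucket.

```python
import hashlib
import json


class VersionedStore:
    """Toy file versioning that needs only put and get on an object store."""

    def __init__(self):
        self.objects = {}   # stand-in for a bucket: key -> immutable bytes
        self.versions = []  # ordered keys of the manifest objects

    def _put(self, payload: bytes) -> str:
        key = hashlib.sha256(payload).hexdigest()
        self.objects.setdefault(key, payload)  # never overwrite existing objects
        return key

    def snapshot(self, files: dict[str, bytes]) -> int:
        """Store the files and a manifest describing them; return the version number."""
        manifest = {path: self._put(content) for path, content in files.items()}
        self.versions.append(self._put(json.dumps(manifest, sort_keys=True).encode()))
        return len(self.versions) - 1

    def read(self, version: int, path: str) -> bytes:
        """Fetch a file as it existed in the given version."""
        manifest = json.loads(self.objects[self.versions[version]])
        return self.objects[manifest[path]]


fs = VersionedStore()
v0 = fs.snapshot({"report.txt": b"original"})
v1 = fs.snapshot({"report.txt": b"overwritten"})
print(fs.read(v0, "report.txt"))  # the earlier version is still retrievable
```

Because nothing is ever overwritten, rolling back to an earlier version is just a matter of reading an older manifest, which is the property that makes this style of versioning useful for ransomware recovery.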

Object Lambda

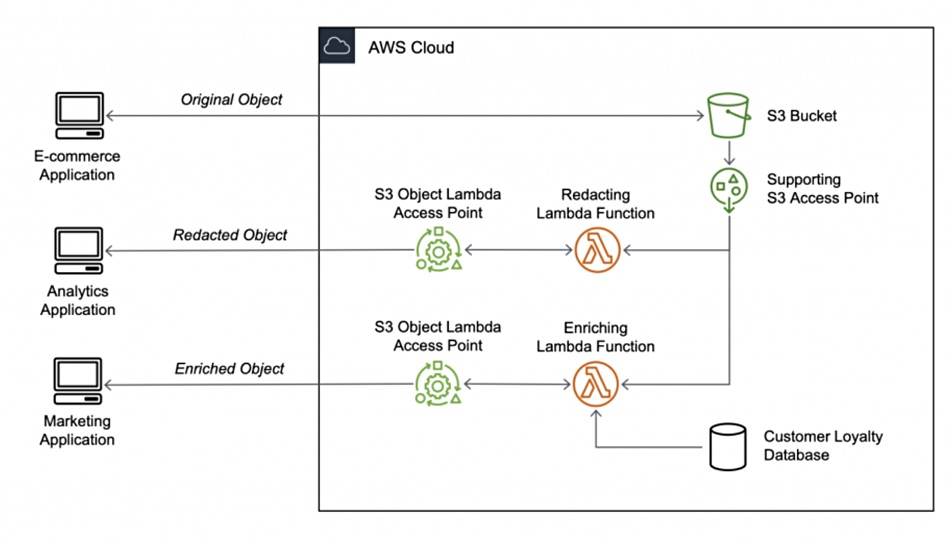

An aspect of this cross-CSP approach affects added data services, as Burling explained. “When we see the opportunity to implement new features of the object store, we look for opportunities where we see a large scale change across different object stores. And one that we see on the horizon is this idea of every protocol being enhanced by custom code.”

An example of this is “The S3 protocol being able to be extended with custom code inside of AWS, which is called Object Lambda.” The Object Lambda idea is to apply filtering functions to a data set before it is passed on to destination applications for e-commerce, analytics, marketing, etc., so that the same source dataset can be used for each application with no dataset copying involved.

Burling said “There are other things they’re developing inside of Google and Azure, as far as we understand, that have a lot of potential to really open up new possibilities for us and for other customers.” Although the actual services in each CSP’s environment will be specific to that CSP, “Underneath all of that will be the same Python code. And the implementation of the function is different per different platform. But having the flexibility to have your own get or your own put that does a transformation is the part where we see independent value across the different providers.”

Further, “We see Python as a function … just think of it as an algorithm that we can easily transport to different platforms. And it just happens to get wrapped up in either Lambda, or it’s in Cloud Functions or it’s an Azure function on the other platforms.”
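The portability argument can be sketched as a platform-neutral Python function plus a thin per-platform wrapper. The example below is an illustration under our own assumptions, not Nasuni code: `drop_columns` is a hypothetical transformation that filters columns out of CSV data, and `object_lambda_handler` wraps it for S3 Object Lambda using the event fields (`getObjectContext`, `inputS3Url`, `outputRoute`, `outputToken`) and the `write_get_object_response` call from AWS's documentation. Equivalent wrappers for Google Cloud Functions or Azure Functions would call the same core function and are not shown.

```python
from __future__ import annotations

import csv
import io


def drop_columns(data: bytes, drop: frozenset[str]) -> bytes:
    """Platform-neutral transformation: return the CSV without the named columns."""
    reader = csv.DictReader(io.StringIO(data.decode("utf-8")))
    kept = [name for name in (reader.fieldnames or []) if name not in drop]
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=kept, extrasaction="ignore",
                            lineterminator="\n")
    writer.writeheader()
    for row in reader:
        writer.writerow(row)  # columns not in `kept` are ignored
    return out.getvalue().encode("utf-8")


def object_lambda_handler(event, context):
    """AWS-specific wrapper: fetch the original object, transform it, return it."""
    import urllib.request

    import boto3  # imported lazily; provided by the AWS Lambda Python runtime

    ctx = event["getObjectContext"]
    with urllib.request.urlopen(ctx["inputS3Url"]) as response:
        original = response.read()
    boto3.client("s3").write_get_object_response(
        Body=drop_columns(original, frozenset({"email"})),  # hypothetical column name
        RequestRoute=ctx["outputRoute"],
        RequestToken=ctx["outputToken"],
    )
    return {"status_code": 200}


print(drop_columns(b"name,email\nann,a@x.com\n", frozenset({"email"})))
```

Only the wrapper knows anything about AWS; the transformation itself can be unit-tested locally and reused behind a different provider's function service.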

Nasuni vs NetApp

Even in a single cloud use case, Burling claimed, “Because of our architecture, we’re still better than the first-party services that our customers are looking at, because of those same things: scale, simplicity, and cost.”

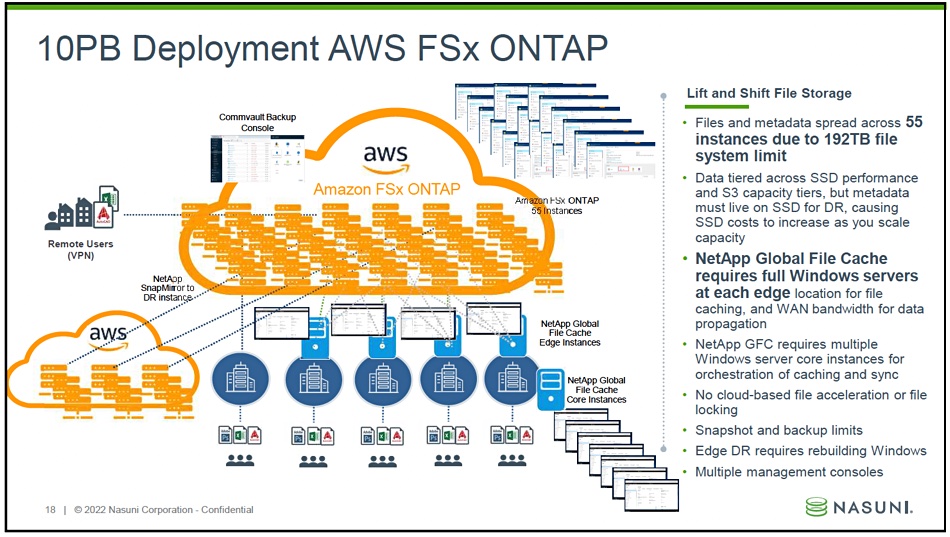

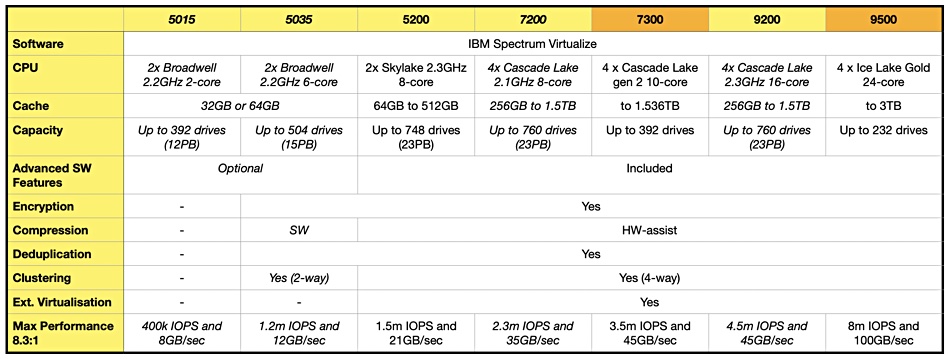

He singled out a bid against NetApp where a $32 billion global manufacturer that designs and distributes engines, filtration and power generation products wanted to deploy 10PB of file data in AWS and looked at both NetApp (FSx for ONTAP) and Nasuni.

According to Nasuni’s slide, the NetApp option would have needed 55 instances of FSx for ONTAP because of a 192TB filesystem limit.

Update: The 192TB filesystem limit mentioned on the Nasuni slide is incorrect. An AWS spokesperson told us: “Each Amazon FSx for NetApp ONTAP file system can scale to petabytes in size.” 18 February 2022.

Burling said “They’re going to have to tier across SSD and S3, because the metadata has to remain on SSD, if they want to be able to have disaster recovery. So [they’re] not able to benefit from the full cost savings of object storage with a first-party AWS service.”

The Nasuni alternative featured a single tier, with no SSDs needed for metadata, and comparatively slimmed-down edge access, as full Windows Server edge systems are not needed. Nasuni hopes to win the bid on the basis of its simplicity and cost advantage, with Burling explaining “This is very early in the process for us and so we’re not at the point of being able to do a full public case study.”

Nasuni roadmap

Nasuni wants to build out file data services in five areas: ransomware protection, data mobility, data intelligence, anywhere access, and SaaS app integration. We can expect an announcement about enhanced ransomware protection in a few weeks.

Data mobility means the ability to write to multiple clouds simultaneously, not just to one. Data intelligence refers to the custom functions for clouds, such as the Object Lambda approach already mentioned. It also refers to better management of chargeback and showback. The latter provides IT resource usage information to departments and lines of business without the cost or charging information associated with chargeback.

On the SaaS integration front, Burling said “We have customers that have workflows with critical third-party SaaS services, and they want them to be better integrated into their overall experience with Nasuni.”

Nasuni is also setting up a Nasuni Labs operation – a GitHub account managed by Nasuni for the supply of open source additions to its base product. Examples are tools for the Nasuni Management Console API, a Nasuni Analytics Connector, and integrations with Amazon OpenSearch and Azure Cognitive Search.

Comment

Nasuni is fired up on the strength of how its co-sell agreements are working, its place in the marketing strategies of the public cloud providers, and its experience winning bids against NetApp and other filer incumbents such as Dell EMC’s PowerScale/Isilon.

NetApp users can be ferociously loyal to NetApp, which has built a multi-cloud data fabric strategy that AWS, Azure and GCP view positively – witness its OEM deals. It’s also building a CloudOps set of functions with its Astra and Spot product sets which differentiate it from Nasuni and are winning it new customers.

There’s no quick win here for Nasuni. It faces hard work to progress, but it is confident of progressing. It has loyal customers of its own, and the cross-cloud added functions like Object Lambda look a useful idea. Let’s see how it pans out this year and next.