NAKIVO has extended its backup software to support SharePoint Online, add ransomware protection facilities, and give managed service providers (MSPs) a way to manage tenant resources.

NAKIVO’s new Backup & Replication 10.2 backs up SharePoint Online sites and sub-sites, and recovers document libraries and lists to the original or a different location. Search functionality enables users to locate items for compliance and e-discovery purposes. Microsoft Azure does not back up SharePoint users’ data; that’s the responsibility of the users.

AWS S3 Object Lock support lets users set retention periods for immutable S3 buckets, with no subsequent change to the retention period allowed. Ransomware can’t alter or delete the locked data in these buckets while the retention period is in force.
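To make the retention idea concrete, here is a minimal Python sketch of a default-retention rule in the shape the S3 Object Lock API expects, plus the date arithmetic that decides when a backup object becomes deletable. It is not NAKIVO's implementation: the function names, the 30-day period and the choice of compliance mode are assumptions made for illustration only.

```python
from datetime import datetime, timedelta, timezone


def default_retention_config(days: int, mode: str = "COMPLIANCE") -> dict:
    """Build an ObjectLockConfiguration structure for a bucket default.

    Hypothetical helper: the dict layout follows the S3 Object Lock API,
    but the helper itself is not part of any product or SDK.
    """
    if days <= 0:
        raise ValueError("retention period must be a positive number of days")
    if mode not in ("COMPLIANCE", "GOVERNANCE"):
        raise ValueError("mode must be COMPLIANCE or GOVERNANCE")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": days}},
    }


def retain_until(written_at: datetime, days: int) -> datetime:
    """Date before which a locked object version cannot be deleted."""
    return written_at + timedelta(days=days)


def is_locked(written_at: datetime, days: int, now: datetime) -> bool:
    """True while the object version is still inside its retention period."""
    return now < retain_until(written_at, days)


# With boto3 installed and credentials configured, the structure could be
# applied to a bucket that was created with Object Lock enabled
# (the bucket name here is a placeholder):
#
#   import boto3
#   boto3.client("s3").put_object_lock_configuration(
#       Bucket="example-backup-bucket",
#       ObjectLockConfiguration=default_retention_config(30),
#   )
```

Compliance mode is used in the sketch because it is the S3 mode in which a retention period cannot be shortened or removed, which seems closest to the behaviour described above; governance mode allows suitably privileged users to override the lock.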

NAKIVO Backup & Replication 10.2 allows MSPs to provide a more reliable service by organising and scheduling infrastructure resources to prevent noisy neighbours. MSPs can allocate data protection resources, such as hosts, clusters, VMs, Backup Repositories and Transporters, to tenants.

NAKIVO provides perpetual license and subscription license options in six configurations, ranging from Basic to Enterprise Plus. The company says it is typically 50 per cent cheaper than competitors. Microsoft 365 subscription pricing is $10/user/year. Per-workload subscription pricing is also available. A perpetual license for a virtual server costs $99/socket at the Basic level and $899/socket at the Enterprise Plus level.

A bit more about NAKIVO

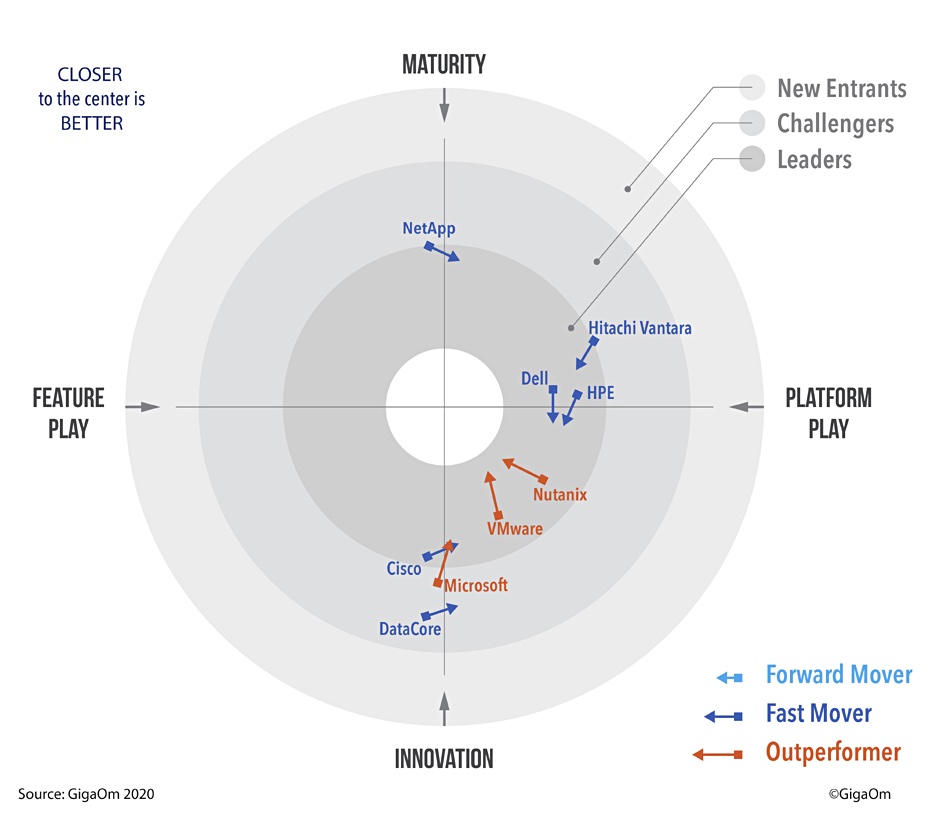

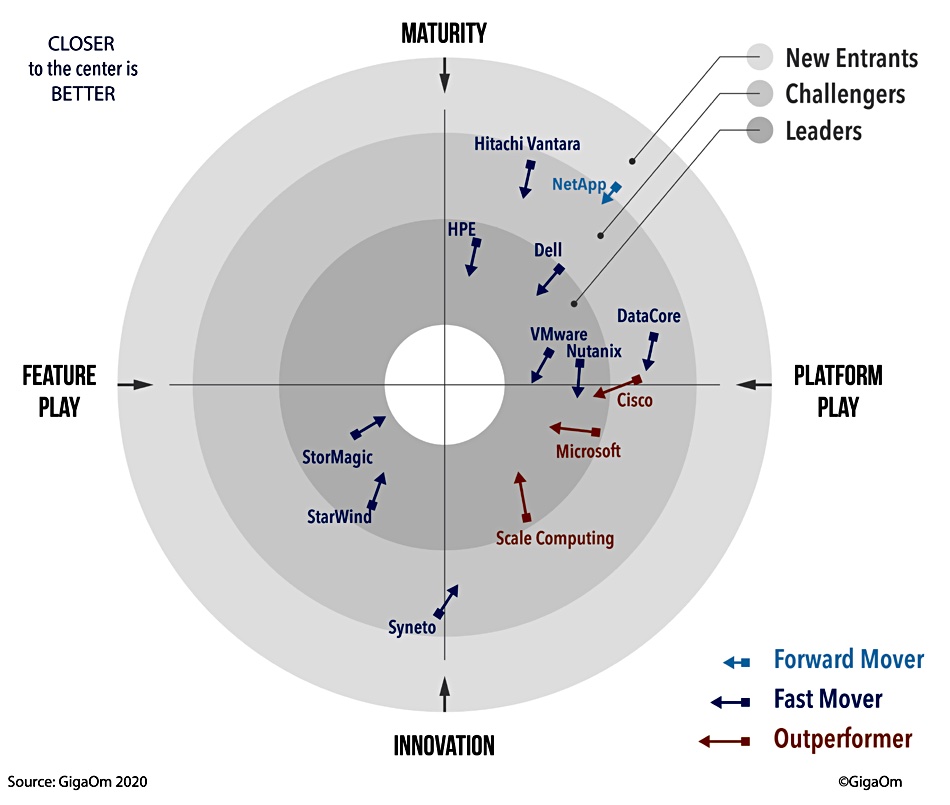

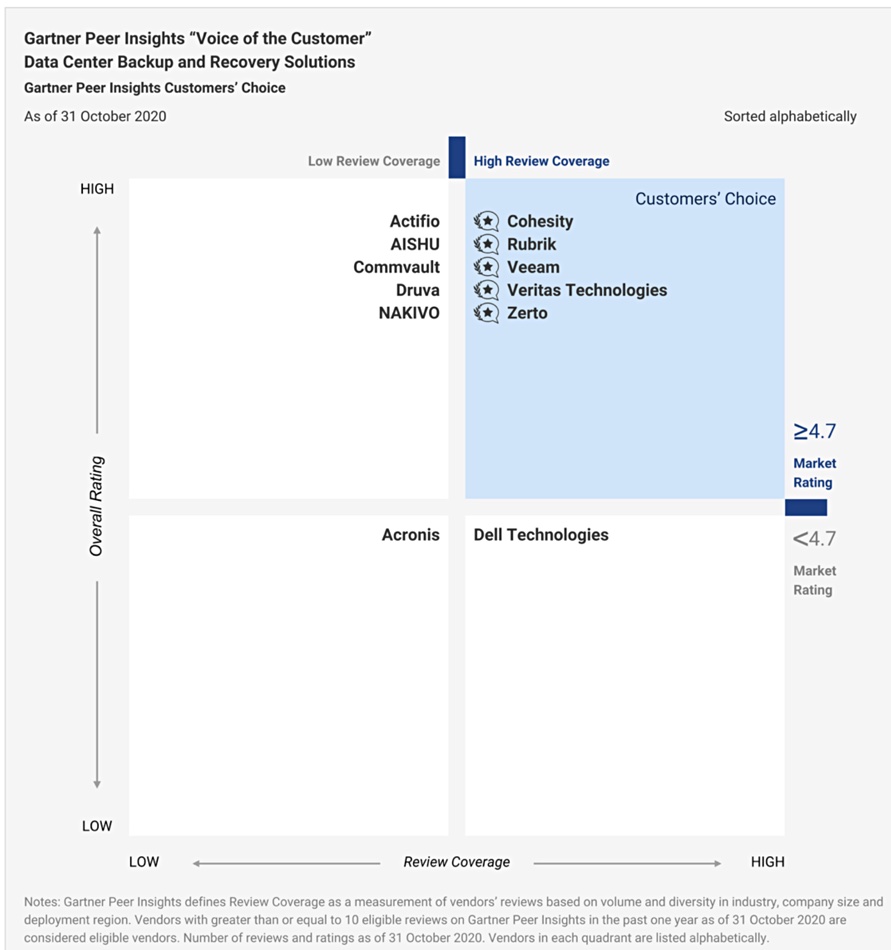

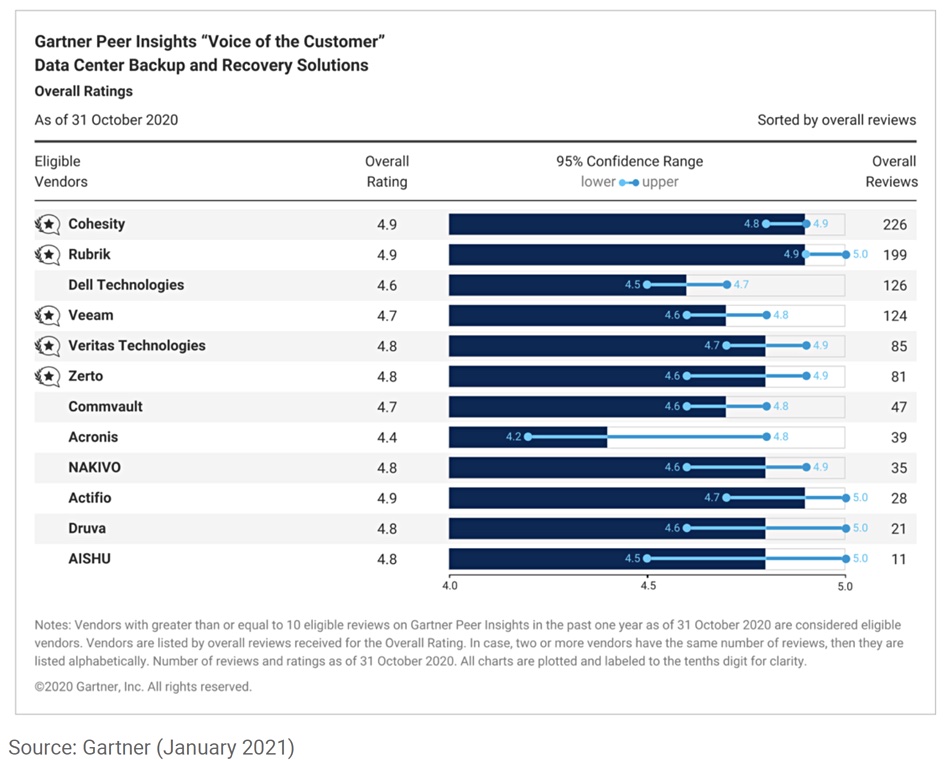

NAKIVO says it has more than 5,000 resellers and 16,000 customers, which include Coca-Cola, DHL, Honda, Radisson, SpaceX, and the US Army and Navy. The US-headquartered company competes against mainstream backup suppliers such as Commvault, Dell EMC and Veritas. It is also up against blitzscaling competitors such as Clumio, Cohesity, Rubrik and Veeam. So, to date, it has been overshadowed on the marketing front.

NAKIVO’s founders have self-funded the company with the proceeds from the sale of a previous US-based startup. Sergei Serdyuk, VP product management, told us: “For the first five years we spent zero on marketing. We lived on what we made.”

Its actual US footprint is slim, comprising the CEO and a few other staff. The company conducts the bulk of its R&D and engineering in Ukraine, with help from a Vietnamese office. Serdyuk said it would be cost-prohibitive to have engineering located in the USA.

Expect to hear more about NAKIVO. It has now grown large enough to set aside some money for marketing.
