Microsoft has released Azure Purview, a tool to uncover hidden data silos wherever they live – on-premises, across multiple public clouds or within SaaS applications.

Julia White, Corporate VP for Azure, blogged: “For decades, specialised technologies like data warehouses and data lakes have helped us collect and analyse data of all sizes and formats. But in doing so, they often created niches of expertise and specialised technology in the process.”

She added: “This is the paradox of analytics: the more we apply new technology to integrate and analyse data, the more silos we can create.”

Microsoft’s Alym Rayani, GM for Compliance Marketing, wrote in a blog: “To truly get the insights you need, while keeping up with compliance requirements, you need to know what data you have, where it resides, and how to govern it. For most organisations, this creates arduous ongoing challenges.” Purview is designed to make those challenges less arduous.

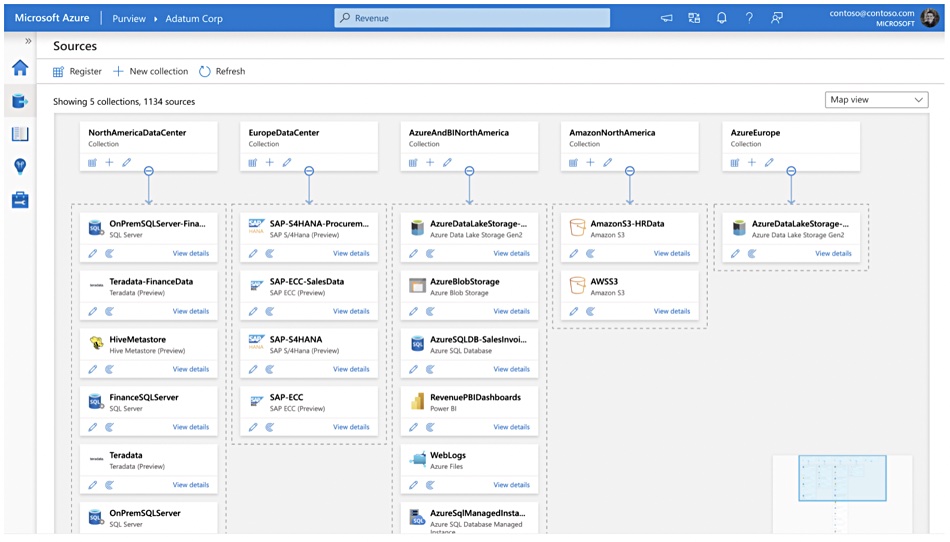

Azure Purview is a unified data governance service with automated metadata scanning. Users can find and classify data using built-in and custom classifiers, and apply sensitivity labels such as Public, General, Confidential and Highly Confidential. They can also build a glossary of business terms.

Metadata about discovered data goes into the Purview Data Map. A Purview Data Catalog lets users search the Data Map for particular data, understand how sensitive it is, and use data lineage to see where it came from and how it is being used across the organisation.

Purview contains 100 AI classifiers that automatically look for personally identifiable information (PII) and other sensitive data. It also flags data that is out of compliance.

Microsoft’s existing Information Protection software uses sensitivity labels to classify data, helping to keep it protected and to prevent data loss. This applies on premises or in the cloud, across Microsoft 365 Apps, services such as Microsoft Teams, SharePoint, Exchange and Power BI, and third-party SaaS applications.

Azure Purview extends the sensitivity label approach to a broader range of data sources, such as SQL Server, SAP, Teradata, Azure Data Services and Amazon S3, helping to minimise compliance risk.

Using Purview, admins can scan their Power BI environment and Azure Synapse Analytics workspaces with a few clicks. All discovered assets and their lineage are entered into the Data Map. Purview can also connect to Azure Data Factory instances to collect data integration lineage automatically. Users can then see which analytics and reports already exist, rather than reinventing the wheel.

B&F thinks Purview’s ultimate usefulness will depend on how many data sources it can explore and interrogate. The fewer the black holes in an enterprise’s data universe, the more effective Purview’s data governance will be.

Azure Purview is free to use in preview mode until January 1, 2021.