Nvidia is adding storage controller functions to its BlueField-2 SmartNIC card and is gunning for business from big external storage array vendors such as Dell, Pure and VAST Data.

Update: Fungible comment added; 3 December 2020.

Kevin Deierling, Nvidia SVP marketing for networking, outlined a scheme in a phone interview yesterday whereby external storage array suppliers, many of which are already customers for Nvidia ConnectX NICs, migrate to SmartNICs to increase array performance, lower costs and improve security. The BlueField SmartNIC incorporates ConnectX NIC functionality, which makes adoption simpler.

Deierling said Nvidia is already having conversations with array suppliers: “BlueField is a superb storage controller. … We’ve demo’d it as an NVMe-oF platform … This is very clearly a capability we are able to support, at a cost point that’s a fraction of the other guys.”

As BlueField technology and functionality progress, array suppliers could consider recompiling their controller code to run on the BlueField Arm CPUs. At this point the dual Xeon controller setup changes to a BlueField DPU system, which is more than a SmartNIC, and the supplier says goodbye to Xeons, Deierling declared. “It’s software-defined and hardware-accelerated. Everything runs the same, only faster.”

SmartNICs and DPUs

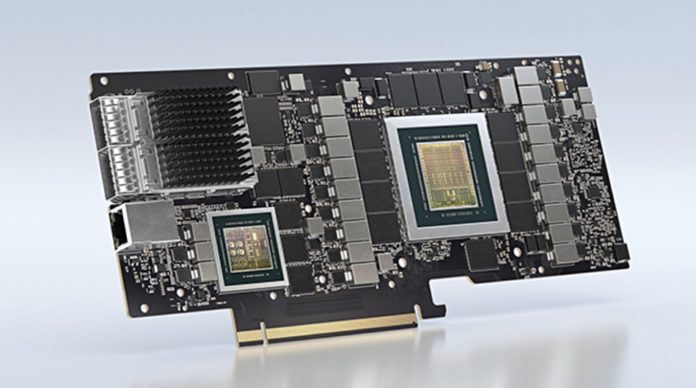

SmartNICs are an example of DPU (Data Processing Unit) technology. Nvidia positions the DPU as one of three classes of processors, sitting alongside the CPU and the GPU. In this worldview, the CPU is a general-purpose processor. The GPU is designed to process graphics-type instructions much faster than a CPU. The DPU acts as a co-ordinator and traffic cop, routing data between the CPU and GPU.

Deierling told B&F that growth in AI use by enterprises was helping to drive DPU adoption: “One server box can no longer run things. The data centre is the new unit of computing. East-west traffic now dominates north-south traffic. … The DPU will ultimately be in every data centre server.”

He thinks the DPU could appear in external arrays as well, replacing the traditional dual x86 controller scheme.

In essence, an external array’s controllers are embedded servers with NICs (network interface cards) that link to accessing servers and may also link to drives inside the array. Conceptually, it is easy to envisage their replacement by SmartNICs that offload and accelerate functions like compression from the array controller CPUs.

DPU discussion

Today, the DPU runs repetitive network and storage functions within the data centre. North-south traffic is characterised as network messages that flow into and out of a data centre from remote systems. East-west traffic refers to network messages flowing across or inside a data centre.
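The distinction can be sketched in a few lines of Python. This is only an illustration: the address range standing in for "the data centre" and the function names are assumptions made for the example, not anything Nvidia described.

```python
import ipaddress

# Hypothetical address ranges for one data centre (an assumption for this sketch).
DATA_CENTRE_NETS = [ipaddress.ip_network("10.0.0.0/8")]


def is_internal(addr: str) -> bool:
    """Return True if the address falls inside the data centre's ranges."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in DATA_CENTRE_NETS)


def classify_flow(src: str, dst: str) -> str:
    """Label a flow by where its two endpoints sit."""
    inside = (is_internal(src), is_internal(dst))
    if all(inside):
        return "east-west"    # both endpoints inside the data centre
    if any(inside):
        return "north-south"  # one endpoint is a remote system
    return "outside"          # neither endpoint belongs to this data centre
```

For example, `classify_flow("10.1.2.3", "10.9.8.7")` labels a server-to-server flow as east-west, while a flow between `10.1.2.3` and a public address is labelled north-south.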

East-west traffic grows as the size of the datasets increases. It therefore makes increasing sense to offload repetitive functions from the CPU, and accelerate them at the same time, by using specialised processors. This is what the DPU is designed to do.

The DPU can run server-controlling software, such as a hypervisor. For example, VMware’s Project Monterey ports vSphere’s ESXi hypervisor to the BlueField-2’s Arm CPU and uses it to manage aspects of storage, security and networking in this east-west traffic flow. VMware functions such as vSAN and NSX could then run on the DPU and use specific hardware engines to accelerate performance.

SoCs not chips

The DPUs will help feed the CPUs and GPUs with the data they need. Deierling sees them as multiple SoCs (systems on chip) rather than a single chip. A controlling Arm CPU would use various accelerator engines to handle packet shaping, compression, encryption or deduplication, which could run in parallel.

Fungible, a composable systems startup, aggregates this work in a single-chip approach, but this does not allow the engines to work in parallel, according to Deierling.

In rebuttal, Eleena Ong, VP of Marketing at Fungible, gave us her view: “The Fungible DPU has a large number of processor elements that work in parallel to run infrastructure computations highly efficiently, specifically the storage, network, security and virtualisation stack.

“The Fungible DPU architecture is unique in providing a fully programmable data path, with no limit on the number of different workloads that can be simultaneously supported. The high flexibility and parallelism occur inside the DPU SoC as well as across multiple DPU SoCs. Depending on your form factor, you would integrate one or multiple DPUs.”

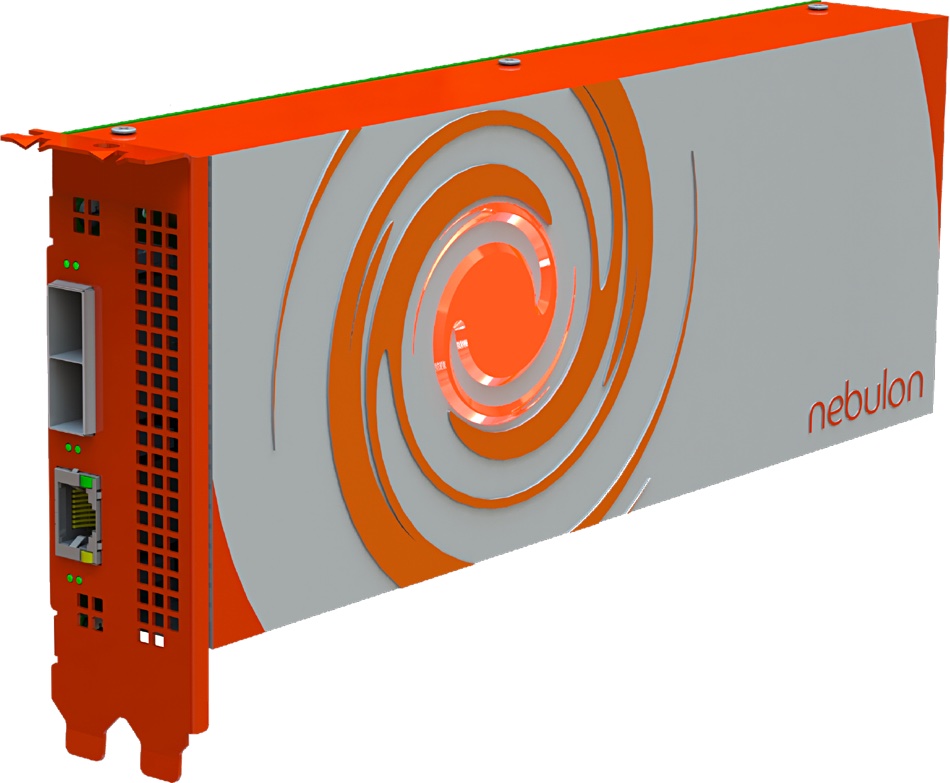

SoC it to Nebulon

Nebulon, another startup, is trying to do something similar to Nvidia with its ‘storage processing unit’ (SPU). This consists of a PCIe card carrying dual Arm processors and various offload engines that perform SAN controller functions at a lower hardware cost than an external array with dual Xeon controllers. That matches Deierling’s definition of a DPU.