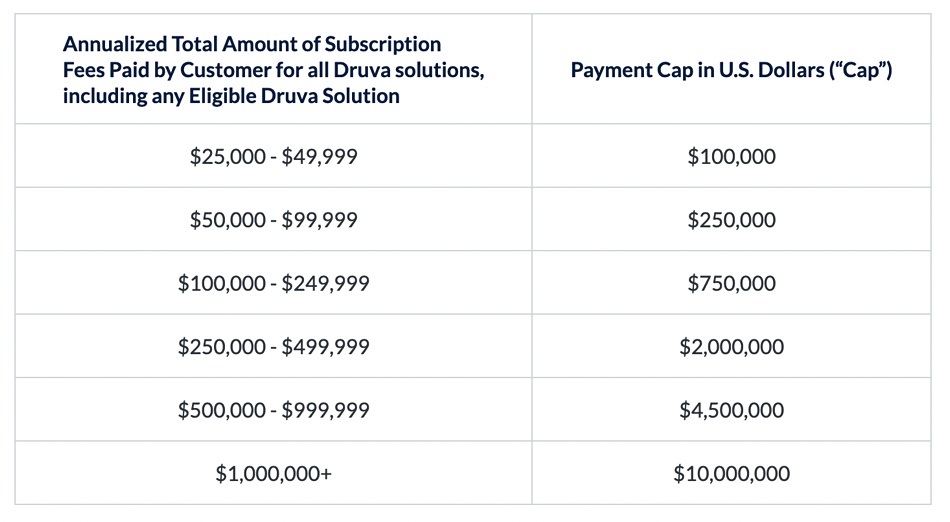

Druva is offering a legally binding warranty that its SaaS data protection service – the Druva Data Resiliency Cloud – will meet confidentiality, immutability, reliability, durability and availability service levels, with up to $10 million in coverage.

Druva’s Data Resiliency Cloud is a cloud-native SaaS offering based on Amazon Web Services public cloud infrastructure. The Data Resiliency Guarantee (DRG) is an expansion of the company’s existing limitations of liability. It enables qualifying customers to protect against a wide variety of data loss and downtime events across five categories of risk, made possible by what Druva claims are best-in-class SLAs. Competitor Rubrik offers a guarantee against its backup data being compromised by ransomware with up to $5 million being payable if data is lost from its backups.

Jaspreet Singh, Druva’s founder and CEO, said: “Ransomware protection alone isn’t enough to satisfy the pressures, challenges and speed of modern businesses.”

In his view, “Protecting data from outside attackers should be table stakes at this point but most vendors are simply unable to make stronger commitments given the limitations of their business models. In contrast, our SaaS model offers complete control over the various technology functions and the ability for our team to manage the entire customer experience.”

The DRG SLAs are:

- 100 percent Confidentiality SLA to guarantee customer data stored in backups will not be compromised (i.e. malicious and unauthorized access) as a result of a security incident;

- 100 percent Immutability SLA to guarantee backups will not be deleted, encrypted, or otherwise changed as a result of a cyber attack;

- 99 percent Reliability SLA guaranteeing successful backup services;

- 99.999 percent Durability SLA to ensure successful backups will be recoverable;

- Up to 99.5 percent Availability SLA to maximize uptime.
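To put the percentages above in concrete terms, here is a rough sketch of the arithmetic behind two of them. The 30-day measurement window and the function names are assumptions made for illustration only; Druva's actual measurement periods and calculation methods are set out in its program terms, not here.

```python
import math


def allowed_downtime_minutes(availability_percent: float, period_days: int = 30) -> float:
    """Maximum downtime, in minutes, that still meets an availability target.

    period_days is an assumed measurement window, not a figure from Druva.
    """
    total_minutes = period_days * 24 * 60
    return total_minutes * (100.0 - availability_percent) / 100.0


def allowed_failed_jobs(reliability_percent: float, total_jobs: int) -> int:
    """Largest number of failed backup jobs that still meets a reliability target."""
    # The small epsilon guards against floating-point results just under a whole number.
    return math.floor(total_jobs * (100.0 - reliability_percent) / 100.0 + 1e-9)


if __name__ == "__main__":
    print(allowed_downtime_minutes(99.5))   # minutes of downtime in an assumed 30-day window
    print(allowed_failed_jobs(99.0, 1000))  # failed jobs tolerated out of 1,000
```

Under these assumptions, a 99.5 percent availability target leaves room for about 3.6 hours of downtime in a 30-day window, and a 99 percent reliability target tolerates up to 10 failed jobs in every 1,000.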

Singh explained in a briefing that “all of the SaaS vendors, including Druva, have a legal requirement called limitation of liability, that we are liable if data is lost. … We saw the industry asking for more, like, can you guarantee us reliability of software that the backup success percentage would be 99 percent? Can you guarantee availability of my data? Can you guarantee it won’t be tampered with? Can you guarantee it will not be leaked by employees? So we extended what we typically have called limitation of liability into a full-fledged warranty.”

That meant Druva had to be certain its infrastructure and operating procedures were good enough to support such a warranty, because it manages customers’ data on their behalf. The Druva SaaS infrastructure had to be available and accessible, the backup service had to be reliable, and immutability had to be rock solid.

Singh said: “We made reliability the number one goal. And we are proud to say we have 99 percent success rate or higher of backup and recovery jobs being successful. And we have zero breach in durability. We have zero lost confidentiality. We have never, ever had a third party get access to data nor have we lost data. So now we are actually firming up our promises by offering a warranty.”

He explained that Druva had to add extra telemetry to its service to make this warranty possible. “We had to get an understanding of operations to show to customers … we have enough trust and visibility into operations into customer accounts and setups so we can actually back our offering.” It also had to improve its environmental reporting.

Singh said: “There was a fair amount of work involved both in our architecture, operations and visibility into customers to make it a reality.”

We asked Singh whether any on-premises data protection supplier provides such a warranty.

“They don’t, and they cannot, because … you have to be SaaS. … They cannot take responsibility for their confidentiality unless they’re in managed operations. Because we manage operations, we have the visibility and control of all these outcomes. An on-premises data protection vendor couldn’t do the same thing.”

Druva is publishing a master service contract with legal language around the warranty, covering things like scheduled remediation. Singh said this warranty is a sign that the data protection industry is maturing and it means customers can trust Druva more.

For more information on program terms, conditions and eligibility, visit the Druva Data Resiliency Guarantee FAQ web page.