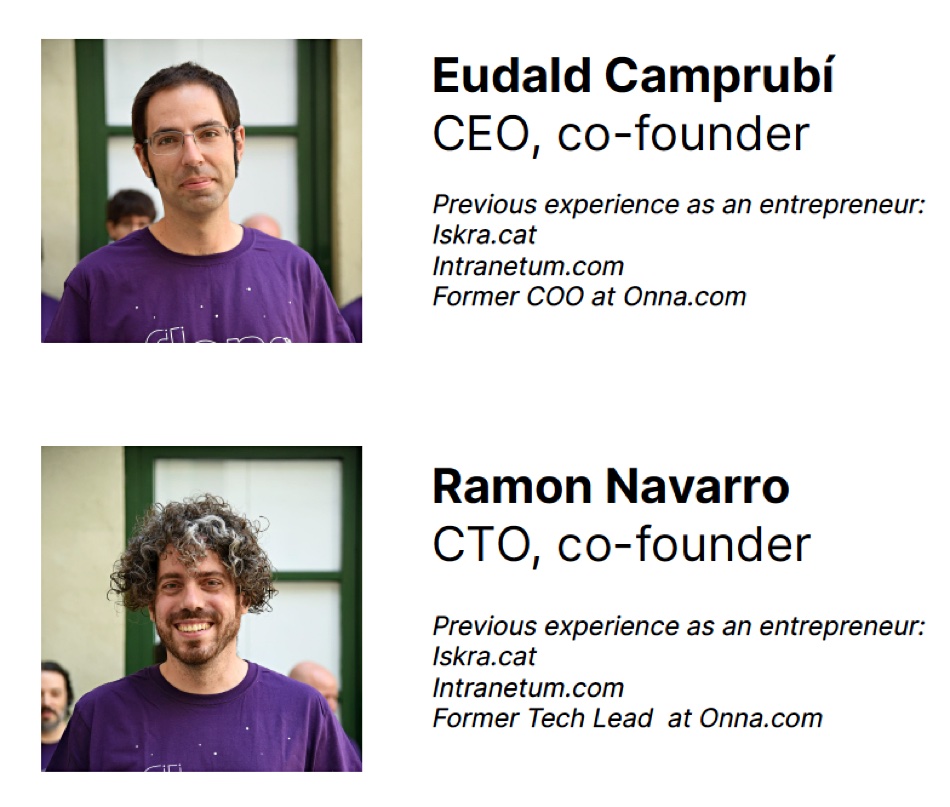

Blocks & Files talked to Gleb Budman, co-founder and CEO of cloud storage supplier Backblaze, which is well known for publishing detailed reliability statistics on the drives used in its infrastructure. We talked to Budman about cold storage tiers, region expansion, competition from Wasabi, decentralized Web3 storage and shingled magnetic recording (SMR) drives.

His answers may surprise you: this cloud storage supplier appears to be sticking firmly to its knitting, with a single storage tier and no SMR drives.

Blocks & Files: Would Backblaze consider providing colder storage backup – for example, using tape media? If it can devise disk-based pods, then perhaps it could devise tape-based ones too.

Gleb Budman: [O]ur products are architected to offer a single tier of hot storage in B2 Cloud Storage, and automatic, unlimited backup through Backblaze Computer Backup. Adding tiers adds complexity and cost, and forces customers to make decisions about what to keep readily accessible. At one-fifth the price of most hot cloud storage providers, we let customers keep and use everything they store at cold-storage pricing. Additional storage tiers are not something we are considering at this time. Although publishing “Tape Stats” would be a nice addition to the blog…

Blocks & Files: How does Backblaze consider and respond to competition from Wasabi for cloud and backup storage?

Gleb Budman: Over 15 years, we’ve grown the Backblaze Storage Cloud to offer performant, reliable, trusted storage solutions to over 500,000 customers. We essentially bootstrapped our way to going public – we only took some $3 million in outside funding up until 2021 – which makes us extremely efficient, built for the long haul, and affordable for our customers. We celebrate everyone who is working to bring the benefits of cloud solutions to more businesses and individuals, but we don’t ever want to surprise customers with delete fees driven by storage duration requirements, rapid access fees, or other unpredictable expenses.

We think our long track record of building zettabyte-scale infrastructure, serving half a million customers, and doing so with radical transparency all along the way is valuable to existing and future customers, and that is value that’s hard to replicate.

Blocks & Files: How does Backblaze consider the idea of expanding its regions to cover more of the world’s geography?

Gleb Budman: We recently announced that Backblaze expanded its storage cloud footprint with a new data region in the Eastern United States. Our new US East region provides more location choice overall, particularly for businesses that wish to easily store replicated datasets to two or more cloud locations. Backblaze also announced it provides free data egress for Cloud Replication across the Backblaze platform, making its cost-effective object storage even more compelling. We serve customers in more than 175 countries – rolling out datacenters and regions at exabyte scale provides them better value and better serves the use cases we enable.

Blocks & Files: What is your view of the distributed decentralized storage Web3 ideas? How would you describe the pros and cons of such storage vs Backblaze?

Gleb Budman: We actually considered building a peer-to-peer backup service as our original idea 15 years ago. Since our goal is to make it astonishingly easy to store, protect, and use data, we decided not to because ultimately it would be too complex for most users to adopt. There still doesn’t seem to be much customer demand to store data on decentralized platforms.

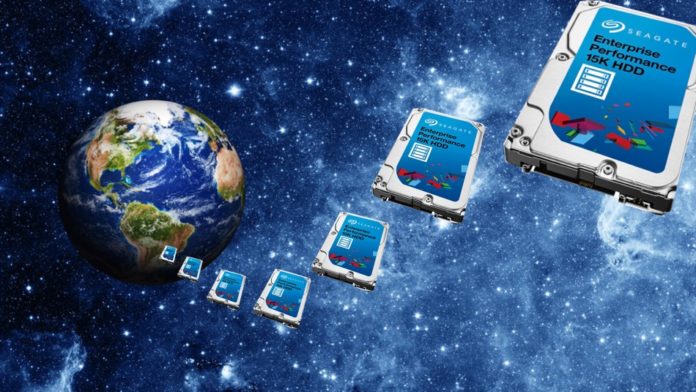

Blocks & Files: What is Backblaze’s view on the advantages and disadvantages of shingled magnetic recording (SMR) disk drives? Could we see them having a role in Backblaze’s pods?

Gleb Budman: We do not use SMR drives. We experimented with them a couple of years ago and while they can be a little less expensive upfront, in practice they did not meet our operational, cost, and performance metrics. SMR drives are best suited for data archive purposes, while application storage, media and entertainment, and backup customers need to be able to use their data. Also, we think customers should be able to use their data as they want to, including deleting it without having to worry about the delete fees driven by the use of SMR drives.

Bootnote

Backblaze has just added a new data region in the Eastern United States, giving customers based there faster access and giving businesses operating in other regions greater geographic separation for their backup data. It also provides an additional destination for businesses that need to store copies of their datasets in two or more cloud locations for compliance and continuity. Its Cloud Replication service comes with free data egress, so customers can copy data across the Backblaze platform without paying egress fees.