Sponsored Feature Cybercriminals tend not to discriminate when it comes to the type of data they steal. Structured or unstructured, both formats contain valuable information that will bring them a profit. From a cybersecurity practitioner’s perspective, however, structural state presents specific challenges when it comes to storing and moving sensitive data assets around.

Generally speaking, structured – quantitative – data is stored in an organized model, like a database, and easily read and manipulated by a standard application.

Unstructured – qualitative – data can be harder to manipulate and analyze using standard data processing tools. Typically, it’s stored in orderly but unorganized ways, sliced across silos, applications, and access control systems, without formalized information about its state or location.

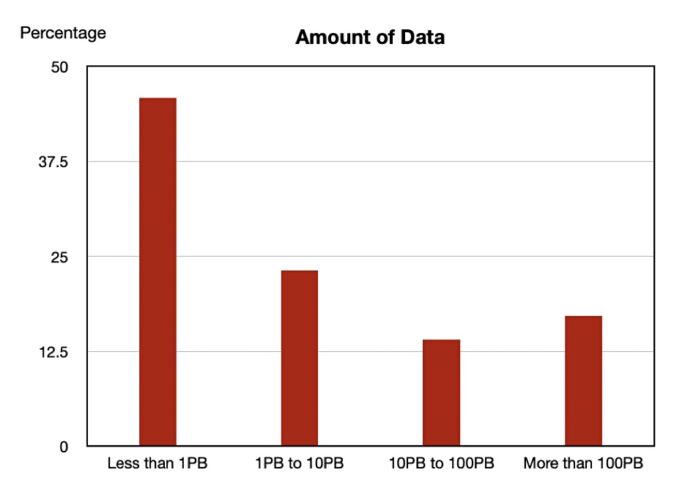

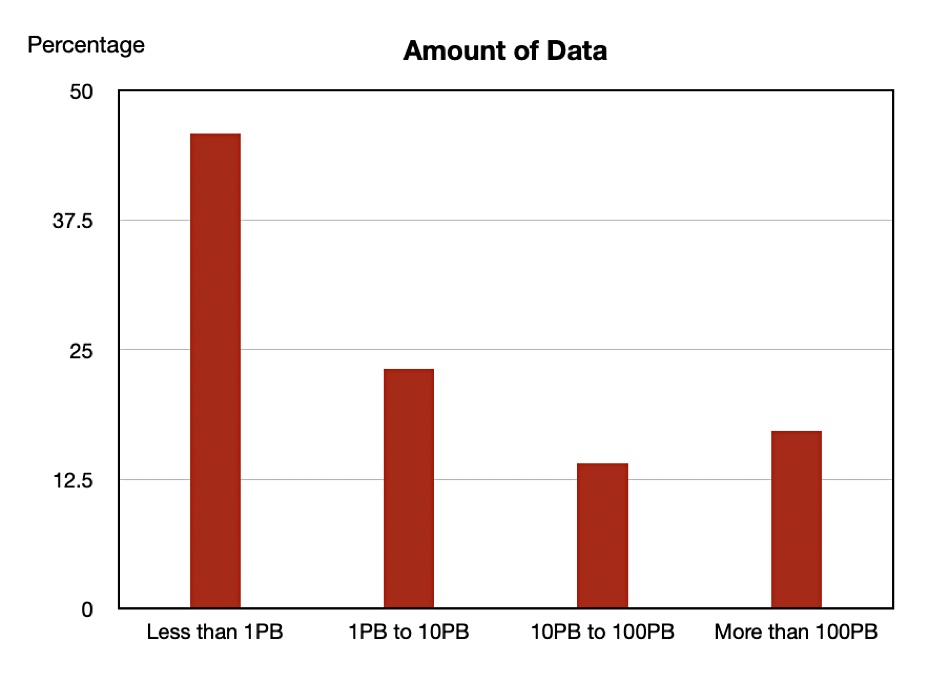

To complicate matters, unstructured data is increasing in importance, needed to drive business growth and planning. Some projections indicate that unstructured data constitutes more than 90% of all enterprise data and continues to grow at 21% per year, so assurance of the ability to store it securely over the long term is imperative.

It’s a challenge for IT security chiefs because unstructured data’s decentralized nature makes it harder to maintain effective and consistent security controls that govern access to it.

Complying with Executive Orders

The challenge is compounded by the regulatory requirements pertaining to cyber-governance that organizations globally must now comply with. It’s no longer solely a matter of risking penalties for non-compliance. Compliance is becoming a condition of business, and has been formalized in the US by Presidential Executive Order 14028.

Importantly, EO 14028 implies that suppliers lacking comprehensive security will not be doing business with the US government. In effect, cybersecurity responsibility is being deferred to solutions providers, and away from their customers, having a game-changing impact on procurement procedures – and cybersecurity provisioning.

EO 14028 was spurred into effect by 2020’s near-catastrophic cybersecurity breach event when hackers – suspected to be operating under the auspices of Russian espionage agencies – targeted managed IT infrastructure service provider SolarWinds by deploying malicious code into its monitoring and management software.

The SolarWinds hack triggered a much larger supply chain incident that affected 18,000 of its end user organisations, including both government agencies and enterprises. It’s assumed that the former were the primary targets, with some enterprise users suffering ‘collateral damage’.

“Concepts of best practice in data storage have evolved rapidly since the SolarWinds hack,” says Kevin Noreen, Senior Product Manager – Unstructured Data Storage Security at Dell Technologies. “This and other cyberbreaches involving ransomware have accelerated that evolution at both the tech vendors and their customers. At Dell, the recent feature focus for our PowerScale OneFS family of scale-out file storage systems reflects those changes, and in doing so, orients the platform’s future development.”

Securing stored unstructured data poses specific challenges, especially when it comes to provisioning high-performance data access for applications in science and analytics, or video rendering, adds Phillip Nordwall, Senior Principal Engineer, Software Engineering at Dell Technologies.

“Cybercriminals have growing interest in these fields. Intellectual Property in life sciences, for example, holds high transferable resale value,” Nordwall reports. “Streamed entertainment data is also highly saleable. So effective security while that data, at rest or inflight, is being managed is now totally critical.”

The way in which such security directives influence specification development for the newly-released Dell’s PowerScale OneFS 9.5 has helped to inform five broader future trend trajectories that Dell’s experts foresee for 2023, as Noreen and Nordwall explain.

Finding closures

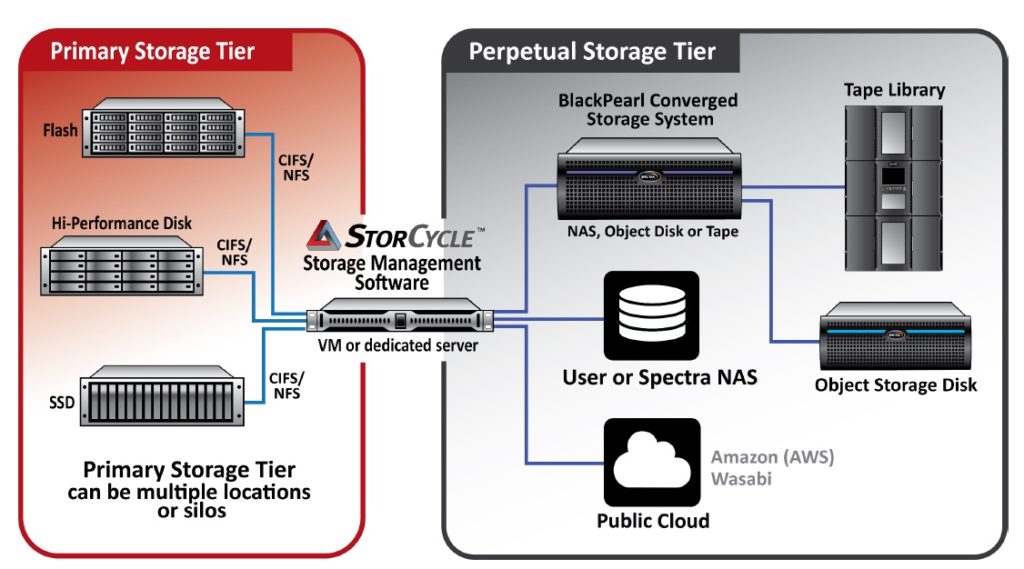

The first prediction is that datacenter infrastructure vendors will close-out cyber vulnerabilities by engineering safeguards directly into their products, like network-attached storage solutions.

“While in 2023 cybercriminals will continue to exploit what they’ve exploited before, they’ll find their efforts increasingly frustrated as new built-in security features are introduced across the datacenter infrastructure, closing off their customary attack vectors,” says Noreen, a closing-off which will happen gradually, but which will happen nonetheless.

To that end, the latest version of Dell’s PowerScale scale-out NAS solution – OneFS 9.5 – brings an array of enhanced security features and functionality. These include multi-factor authentication (MFA), single sign-on support, data encryption in-flight and at rest, TLS 1.2, USGv6R1/IPv6 support, SED Master Key rekey, plus a new host-based firewall.

“In the past, security requirements were always viewed as important, but are now being emphasized to be more proactive as opposed to being reactive,” explains Noreen. “In addition, PowerScale OneFS 9.5’s latest specification scopes the growing range of security enhancements required by US Federal and Department of Defense mandates such as FIPS 140-2, Common Criteria and DISA STIG.”

PowerScale is undergoing testing for government approval on the DoD Information Network Approved Products List, for example.

“Having enhanced security compliance very evidently in place wherever and whenever possible across the spec serves a dual role,” says Noreen. “It reinforces your cyber-defensive posture, and it speaks a message to would-be attackers: ‘we are protected – go focus your attacks elsewhere’.”

Caught in the cyber crossfire

Dell’s second 2023 prediction is that commercial entities will find themselves scathed by cyberwar offensives if geopolitical conflicts cause renewed cyber offensives from nation state sponsored threat actors – especially as they probe the effectiveness of new governmental regulatory security compliance frameworks.

“Gartner has declared that geopolitics and cybersecurity are inextricably linked, and hybrid warfare is a new reality,” says Noreen. “Because of the increased interconnectedness between economies and societies, definitions of critical infrastructure have extended to include many commercial operations such as shipping, logistics and supply chains. Geopolitical conflict escalates cyber-risk, but will also accelerate the introduction and criticality of zero trust adoption over the coming 12 months.”

Zero-ing in on trust models

Following from this, the third prediction from Dell is that zero trust models will continue to reinforce enterprise cybersecurity strategies in 2023 as they are integrated into product platform technologies that work in sync with enterprise zero trust procedures and practices.

“Zero trust security models allow organizations to better align their cybersecurity strategy across the datacenter, clouds, and at the edge,” says Nordwall. “Our aim is to serve as a catalyst for Dell customers to achieve zero trust outcomes by making the design and integration of this architecture easier.”

“We designate zero trust as a journey,” says Noreen. “We need different implementations of zero trust that work together. Organizations now have to think about their IT infrastructure from multi-cloud to edge, their user-base – including supply chain partners – and think about how zero trust applies at a process level. In the datacenter that means component by component – servers, networking and, of course, NAS.”

Noreen adds: “Another thing we believe will step-up in 2023 is the notion of zero trust, and also resiliency borne of enhanced systems that ‘patrol’ data assets in order to detect attacks before they’ve had the opportunity to cause damage. These will be cybersecurity gamechangers.”

It will likely be mid-way into 2023 before the full benefits of end-to-end zero trust feed through. In the meantime, systems must be ‘patrolled’ for malware attacks that manage to infiltrate networks.

To perform this task PowerScale integrates with Superna’sRansomware Defender module [part of the Superna Eyeglass Data Protection Suite] and uses per-user behavior analytics to detect abnormal file access behavior to protect the file system.

“Superna’s Ransomware Defender solution minimizes the cost and impact of ransomware by protecting data from attacks originating inside the network,” Nordwall explains. “The Ransomware Defender module uses automatic snapshots, identifies compromised files, and denies infected users’ accounts from attacking data by locking the users out.”

Demands on supply

The combined benefits of enhanced security built into storage platforms, plus compliance with emergent regulatory mandates, will remediate longstanding cybersecurity weak links in supply chains in 2023, Dell predicts.

“We will see things continue to improve in supply chain resiliency in terms of better safeguards to ensure security of solutions as shipped,” says Noreen. “These measures eliminate any opportunity for vendor integrity to be compromised by intermediate interference.”

Noreen explains the safeguards: “When a PowerScale unit is assembled in our factory, we put an immutable certificate that sits on its system. That system is then shipped from the factory to the customer site. When ready to be installed there’s a software product that customers run against that hardware. It validates that what was shipped from the factory is what was delivered to the customer site. It attests that the system hasn’t been booted in the interim, nobody has installed additional memory – or anything that could relay malware.”

Workforce enforcement

Fifth on the Dell list of 2023 predictions is that organizations will invest even more emphasis on employee ransomware awareness training, leveraging tools and guidance that pinpoint patterns in cyber-defense weak spots.

“From one perspective organizations are compelled to step-up the focus on workforce education and training because employees continue to constitute a non-technological vulnerability in enterprise security, despite past investments in cyber-threat education and training,” says Noreen. “We expect them to make more extensive use of tools such as Superna Eyeglass’s Ransomware Defender to close-out these kinds of vulnerability.”

“If Ransomware Defender detects ransomware attack behavior, it initiates multiple defensive measures, including locking users from file shares – either in real-time or delayed,” adds Nordwall. “There are also timed auto-lockout rules such that action is taken even if an administrator is not available, as well as automatic response escalation if multiple infections are detected.”

Sponsored by Dell.