Real-time data platform supplier Aerospike has unveiled a new graph database with native support for the Gremlin query language. Aerospike claims today’s graph databases fail to perform at scale with a sustainable cost model. Aerospike Graph delivers millisecond multi-hop graph queries at extreme throughput across trillions of vertices and edges. Benchmarks show a throughput of more than 100,000 queries per second with sub-5ms latency – on a fraction of the infrastructure.The Aerospike Database handles diverse workloads across three NoSQL data models – key value, document, and graph – in a single real-time data platform.

…

Data catalog and intelligence tech supplier Alation says its product is now available through Databricks Partner Connect. It says that data science, data analytics, and AI teams can now find, understand, and trust their data across both Databricks and non-Databricks data stores. This is accomplished by extending the data discovery, governance, and catalog capabilities built into Databricks and Unity Catalog across other enterprise data sources.

…

AMD EPYC Embedded Series processors are powering HPE Alletra Storage MP, HPE’s modular, multi-protocol storage product. This was first revealed in April this year. Now we learn that Alletra Storage MP uses a range of EPYC Embedded processor models, with performance options ranging from 16 to 64 cores, and a thermal design power profile ranging from 155W to 225W.

…

A ransomware survey from Arcserve highlights potential vulnerabilities within local government and other public services. 36 percent of government IT departments do not have a documented disaster recovery plan. 24 percent of remote government workers are not equipped with backup and recovery solutions. 45 percent of government IT departments mistakenly believe it is not their responsibility to recover data and applications in public clouds.

…

AvePoint announced new functionality for Cloud Backup for Salesforce with FedRAMP (moderate) authorization, and the addition of the product to the AWS, Azure, and Salesforce AppExchange marketplaces. Cloud Backup for Salesforce augments native Salesforce Data Recovery services by providing automatic, daily, and comprehensive backup of data and metadata and quick restores at the organization, object, record and field levels.

…

Open source real-time analytics, columnar database supplier ClickHouse has released ClickHouse Cloud on the Google Cloud Platform (GCP). General availability offers support across Asia, the European Union and the United States, and follows the December 2022 debut of ClickHouse Cloud on AWS. ClickHouse Cloud launched in December 2022 to provide a serverless, fully managed, turnkey platform for real-time analytics built on top of ClickHouse. Vimeo is a customer.

…

Catalogic’s CloudCasa business unit now supports the Red Hat OpenShift APIs for Data Protection Operator that installs open source Velero data protection on Red Hat OpenShift clusters for Kubernetes backups. The Red Hat OpenShift APIs for Data Protection Operator installs Velero and Red Hat OpenShift plugins for Velero to use for backup and restore operations. Red Hat is a contributor to Velero, along with VMware and CloudCasa, sponsoring many key features and maintaining Red Hat OpenShift APIs for Data Protection alignment with the upstream Velero project.

…

Real-time data connectivity supplier CData Software has announced that Salesforce Data Cloud customers will have access to select CData connectors that bring more data into Data Cloud from their SaaS, database and file-based sources. With CData Connectors, Salesforce customers will be able to streamline access to customer data across a wide collection of data sources and touchpoints.

…

Lakehouse supplier Databricks has introduced Lakehouse Apps, a way for developers to build native, secure applications for Databricks. It says customers will have access to a range of apps that run inside their Lakehouse instance, using their data, with Databricks’ security and governance capabilities. It also introduced new data sharing providers and AI model sharing capabilities to the Databricks Marketplace, which will be generally available at the Data + AI Summit, June 26-29.

…

Storage supplier DDN has been selected alongside Helmholtz Munich as a winner of the 2023 AI Breakthrough Awards in the “Best AI-based Solution for Life Sciences” category. DDN and Helmholtz Munich were recognized for their work in accelerating AI-driven discoveries that deliver concrete benefits to society and human health. Part of the Helmholtz Association, Germany’s largest research organization, Helmholtz Munich is one of 19 research centers that develops biomedical solutions and technologies. Helmholtz Munich has four fully populated SFA ES7990X systems that span a global namespace, an SFA NVMe ES400NVX system with GPU integration and EXAscaler parallel filesystem appliances.

…

Purpose-built backup appliance supplier ExaGrid has released v6.3 of its software with a security angle. Now only admins can delete a share, and all share deletes require a separate security officer’s approval. Two-factor authentication (2FA) is turned on by default. It can be turned off; a log is kept if that happens. RBAC roles are more secure as admins can only create/change/delete users and roles other than the security officer. Users with the admin and security officer roles cannot create/modify each other, and only those with the security officer role can delete other security officers (and there must always be at least one security officer identified).

…

Filecoin says its Filecoin Virtual Machine (FVM) is an execution environment for smart contracts allowing the first user programmability to the Filecoin blockchain. With FVM, developers can write and deploy custom code to run on the Filecoin blockchain, allowing them to connect, augment, and innovate around the building blocks of the Filecoin economy: storage, retrieval, and computation of content-addressed data at scale.

The future holds making sure that FVM is easy to use and ensuring these onramps make sense for builders. Smaller storage deals, to allow building dApps and DAOs around pieces of individual, user-uploaded data. LLMs will play a central role in open-source generative AI models, all built as dataDAOs (DAO – Decentralized Autonomous Organisation) on top of FVM. There will be a decentralized aggregation standard, that will allow anybody to spin up “data dropboxes” for FVM.

..

HPE has added new capabilities to GreenLake for Backup and Recovery, which provides long-term retention, and protects data and workloads across customers’ hybrid clouds. It now protects on-premises and cloud databases managed by Microsoft SQL Server and Amazon Relational Database Service (RDS). GreenLake for Backup and Recovery also supports Amazon EC2 instances and EBS volumes.

…

Immutable and cryptographically verifiable enterprise-scale database supplier immudb has released immudb Vault. As an append-only database, immudb Vault tracks all data changes – preserving a complete and verifiable data change history – so data can only be appended, but never modified or deleted, ensuring the integrity of the data and the change history. Dennis Zimmer, CTO and co-founder of Codenotary, the primary contributor to the immudb project, said: “Before immudb Vault, businesses sometimes resorted to using ledger technologies and blockchains to share data with their counterparties, resulting in slow performance, complexity, high cost, and regulatory headaches.”

…

IBM is using AMD Pensando DPUs in its cloud. IBM says it has a 3 percent market share in the hybrid cloud market. Its niche is in the highly regulated public sector, financial services and airline customers including Canadian Imperial Bank of Commerce (CIBC), Delta Airlines and Uber. IBM is using the DPUs, with stateful security services, for virtual server instances and bare-metal systems. This allows IBM to unify provisioning across environments. It says this is pivotal in its strategy as it aims to continue double-digit revenue growth in its cloud business in 2023.

…

Reuters reports that NAND and DRAM supplier Micron will spend up to $825 million in a new chip assembly and test facility in Gujarat, India, its first factory in the country. With support from the Indian central government and from the state of Gujarat, the total investment in the facility will be $2.75 billion. Of that total, 50 percent will come from the Indian central government and 20 percent from the state of Gujarat. Construction is expected to begin in 2023 and the first phase of the project will be operational in late 2024. A second phase of the project is expected to start toward the second half of the decade. The two phases together will create up to 5,000 new direct Micron jobs

…

Following its acquisition by BMC, Model9 chief strategy officer Ed Ciliendo has left the company. His LinkedIn post says: “I am happy to announce that for the next few weeks I will assume the position of Chief Vacation Officer, managing a dynamic young team working on a broad range of KPIs from drastically increasing time spent in the sun to doubling our quota of ice cream consumption.”

…

Veeam’s 2023 Ransomware Trends Report found that 56 percent of companies risk reinfection during data restoration following a cyber-attack. This is because IT teams are often throwing precautions to the wind to minimize downtime, or are unsure of the proper safeguards to implement. ObjectFirst says the only way to ensure reinfection is impossible is through a combination of immutable storage to protect your data against attackers targeting backups as a vector for infection, and virus scanning/staged restores to ensure data that was already corrupted prior to being backed up does not make its way back. By themselves, these precautions are significantly less impactful at preventing reinfection, but with both in place companies can restore their data with confidence.

…

Oracle‘s latest generation of its Exadata X10M platforms use 4th Gen AMD EPYC processors, delivering more capacity with support for higher levels of database consolidation than previous generations. Using EPYC CPUs allows for improved price performance, more storage and memory capacity, which enables more database consolidation and lowers costs for all database workloads. The EPYC-powered X10M provides up to 3X higher transaction throughput and 3.6X faster analytic queries. It enables support for 50 percent higher memory capacity – enabling more databases to run on the same system.

…

Peak:AIO has doubled AI DataServer capacity by using Micron’s 9400 NVMe PCIe gen 4 SSDs instead of the 15TB drives used back in March. The then 367TB of capacity becomes 650TB in the same classic 2RU x 24-bay data server chassis. It provides up to 171GBps RAID6 bandwidth to a single GPU client using NVMe/TCP and ordinary, not parallel, NAS.

…

Decentralized storage startup Seal Storage, based in Toronto, has been selected as one of the 100 most-promising “Technology Pioneers’’ by the World Economic Forum. It says such Technology Pioneers are early-stage companies that lead in adopting new technologies and driving innovation, with the potential to have a significant impact on both the business landscape and society as a whole. Seal Storage was founded in 2021. Its software uses datacenters powered by renewable energy and operates on top of Filecoin DeStor’s open source technology to minimize its environmental impact. Seal offers zero egress fees to researchers allowing them to access their data around the globe.

What the WEF knows about decentralized storage can only be guessed at. Seal says it is poised to make meaningful contributions to the future of business and society through immutable, affordable and sustainable data storage – which gives a sense of the level of marketing fluff involved here.

…

SK hynix has launched is first portable SSD, the Beetle X31 with 512GB and 1TB capacities. It has a DRAM buffer supporting the NAND which drives sequential write speed up to 1,050 MBps and reads to 1,000 MBps. Its heat management means it can transfer 500 GB of data at over 900 MBps. It is shaped, SK hynix says, like a beetle, with an aluminum case, weighs 53 grams and measures 74 x 46 x 14.8 mm. Host linkage is via USB C-to-C or C-to-A and there is a three-year warranty.

…

SK hynix has received the automotive ASPICE Level 2 certification, the first time that a Korean semiconductor company wins the recognition. ASPICE (Automotive Software Process Improvement & Capability dEtermination) is a guideline for automotive software development that was introduced by European carmakers to evaluate reliability and capabilities of auto part suppliers. SK hynix will now aim for the ASPICE Level 3 certification with a more advanced software development process.

…

VAST Data is counting down for a 9:00am August 1 live-streamed Build Beyond event, saying: “The world deserves a single data engine for every workload to integrate Edge, Datacenter and Cloud. Data & Metadata. Unleashed Performance, Capacity & Insights! Build Beyond!” Register at buildbeyond.ai and watch a teaser video here.

…

Vaughan Stewart, ex VP Technology Alliances at Pure Storage, has joined VAST Data as its VP of Systems Engineers.

…

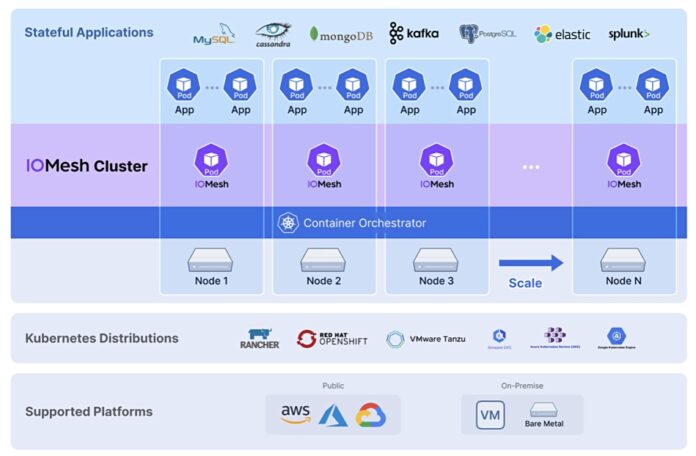

AIOPs supplier Virtana has acquired cloud observability platform OpsCruise, the only purpose-built cloud-native, and Kubernetes observability platform. OpsCruise’s software helps predict performance degradation and pinpoint its cause. It has a deep understanding of Kubernetes and unique contextual AI/ML-based behavior profiling. OpsCruise software is integrated with leading open source monitoring tools and cloud providers for metrics, logs, traces, flows, and configuration data, freeing customers from proprietary alternatives.