Commissioned: Cloud software lies at the heart of the modern computing revolution – just not in the way you might think.

When most people think of cloud computing, they think of the public cloud, which is fair play. But if you’re like most IT leaders, your infrastructure operations are far more diverse than they were 10 or even five years ago.

Sure, you run a lot of business apps in public cloud services, but you also host software workloads in several other locations. Over time – and by happenstance – you’ve ended up running apps on premises, in private clouds and colos and even at the edge of your network, in satellite offices or other remote locations.

Your organization isn’t unique in this regard. Eighty-seven percent of 350 IT decision makers believe that their application environment will become further distributed across additional locations over the next two years, according to an Enterprise Strategy Group poll commissioned by Dell.

It’s a multicloud world; you’re just operating in it. But you need options to help make it work for you. Is that the public cloud, or somewhere else? Yes.

The many benefits of the public cloud

Public cloud services offer plenty of options. You know this better than most people because your IT teams have tapped into the abundant and scalable services the public cloud vendors offer.

Need to test a new mobile app? Spin up some virtual machines and storage, learn what you need to do to improve the app and refine it (test and learn).

What about that bespoke analytics tool your business stakeholders have been wanting to try? Assign it some assets and watch the magic happen. Click some buttons to add more resources as needed.

Such efficient development, fueled by the composable microservices and containers that underpin cloud-native development, is a big reason why most IT leaders have taken a “cloud-first” approach to deploying applications. It’s no accident that worldwide public cloud sales topped $545.8 billion in 2022, a 23 percent increase over 2021, according to IDC.

The public cloud’s low barrier to entry, ease-of-procurement and scalability are among the chief reasons why organizations pursuing digital transformations have re-platformed their IT operating models on such services.

The public cloud’s data taxes

You had to know a “but” was coming. And you aren’t wrong. Yes, the public cloud provides flexibility and agility as you innovate. And yes, the public cloud provides a lot of options vis-a-vis data, analytics, IoT and AI services.

But the public cloud isn’t always the best option for your business. Like anything else, it’s got its share of drawbacks, namely around portability. As many IT organizations have learned, getting data out of a public cloud can be challenging and costly.

In fact, many IT leaders have come to learn that operating apps in a public cloud comes with what amounts to data taxes. For one, public cloud providers use proprietary data formats, making it difficult to export data you store there to another cloud provider, let alone use it for on-premises apps.

Then there are the data egress fees, or the cost to remove data from a cloud platform, which can be exorbitant. A typical rate is $0.09 per gigabyte, and the more data you want to move, the greater the financial penalty you’ll incur.
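To put that rate in perspective, here is a rough back-of-the-envelope sketch. It assumes a flat $0.09 per gigabyte and decimal terabytes (1 TB = 1,000 GB). Both are simplifications, since actual provider pricing is often tiered and varies by region and destination. The function name and the sample dataset sizes are illustrative only.

```python
def egress_cost_usd(gigabytes: float, rate_per_gb: float = 0.09) -> float:
    """Estimate an egress charge at a flat per-gigabyte rate.

    Assumption: a single flat rate; real pricing is frequently tiered.
    """
    if gigabytes < 0:
        raise ValueError("gigabytes must be non-negative")
    return gigabytes * rate_per_gb


# Hypothetical dataset sizes, in decimal terabytes (1 TB = 1,000 GB).
for size_tb in (1, 50, 500):
    size_gb = size_tb * 1000
    print(f"{size_tb:>4} TB -> ${egress_cost_usd(size_gb):,.2f}")
```

At a flat rate the bill grows in direct proportion to the volume moved, which is why large datasets are where the charge starts to bite.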

Finally, have you tried to remove large datasets from a public cloud? Okay, then you know how hard and risky it is, especially when the datasets are stored in several locations. Transferring large datasets invites network latency that impairs application performance. Moreover, because your apps depend on your datasets, the more data you offload to a public cloud platform, the greater the gravity of that data and thus the harder it is to move.

The sheer weight of data gravity is a major reason why so many IT leaders continue to run their software in public clouds, regardless of other available options. After a time, IT leaders feel locked in to a particular cloud platform or platforms.

Rebalancing, or optimizing for a cloud experience

Such trappings are among the reasons many organizations are rethinking the public “cloud-first” approach and taking a broader view of optionality.

Many IT departments are assessing the best place to run workloads based on performance, latency, cost and data locality requirements.

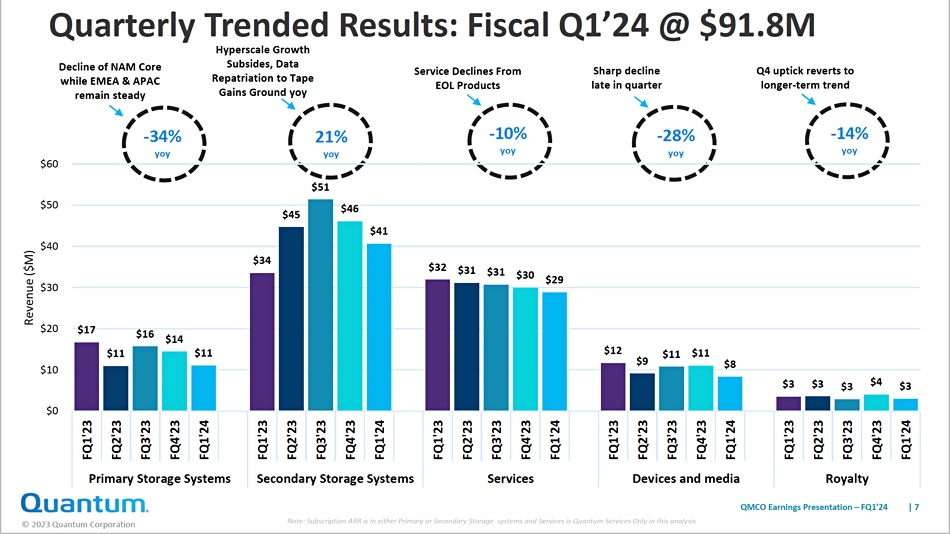

In this cloud optimization or rebalancing, IT organizations are deploying apps intentionally in private clouds, traditional on-premises infrastructure and colocation facilities. In some cases, they are repatriating workloads – moving them from one environment to another.

This multicloud-by-design approach is critical for organizations seeking the optionality to move workloads where they make the most sense without sacrificing the cloud experience they’ve come to enjoy.

The case for optionality

This is one of the reasons Dell designed a ground-to-cloud strategy, which brings our storage software, including block, file and object storage, to Amazon Web Services and Microsoft Azure public clouds.

Dell further enables you to manage multicloud storage and Kubernetes container deployments through a single console – critical at a time when many organizations seek application portability and control as they pursue cloud-native development.

Meanwhile, Dell’s cloud-to-ground strategy enables your organization to bring the experience of cloud platforms to datacenter, colo and edge environments while enjoying the security, performance and control of an on-premises solution. Dell APEX Cloud Platforms provide full-stack automation for cloud and Kubernetes orchestration stacks, including Microsoft Azure, Red Hat OpenShift and VMware.

These approaches enable you to deliver a consistent cloud experience while bringing management consistency and data mobility across your various IT environments.

Here’s where you can learn more about Dell APEX.

Brought to you by Dell Technologies.