…

Data orchestrator and manager Arcitecta announced that Powerhouse, part of Australia’s largest museum group, has selected its data management platform, Mediaflux, as its new digital asset management system (DAMS). Mediaflux is being tailored to the museum’s extensive, varied, and valuable collections, a substantial recalibration of operations that initiates a significant shift in the museum’s curatorial processes and user experiences. Arcitecta, which already has a foothold in higher education, government, media and entertainment, and life sciences, now moves its data management technology into the museum, gallery and cultural asset market.

…

Nothing has been announced by ceramic tablet storage startup Cerabyte or European atom smashing institute CERN, but Cerabyte is listed as a partner and participant in CERN’s OpenLab.

…

Data streamer Confluent announced Build with Confluent, a new partner programme that helps system integrators (SIs) speed up the development of data streaming use cases and quickly get them in front of the right audiences. It includes specialised software bundles for developing joint solutions faster, support from data streaming experts to certify offerings, and access to Confluent’s Go-To-Market (GTM) teams to amplify partners’ offerings in the market. Build with Confluent aims to help partners monetise real-time solutions built on Confluent’s data streaming platform.

…

William Blair analyst Jason Ader reckons Databricks is benefitting from its positioning as a consolidating platform for the fragmented analytics estate, and from building AI tailwinds as enterprises start to push projects into production. It has, he tells subscribers, sidestepped the budget headwinds that have afflicted other vendors, and saw first-quarter revenue growth accelerate to the mid-60 percent range, compared to 50 percent growth at the end of fiscal 2024 (January year-end). As of the end of July 2024, Databricks expects to have a roughly $2.4 billion revenue run-rate and net revenue retention (NRR) above 140 percent. This strong growth is in part a function of Databricks’ expanding portfolio, which has added traditional data warehousing capabilities (SQL Warehouse), today a $400 million business, as well as new AI capabilities (MosaicML, Notebooks, and the newly announced AI/BI and LakeFlow).

Databricks is becoming a platform of choice for enterprises to manage all of their analytics and AI data needs and should continue to benefit from a number of growth drivers across its business, including successful open-source Spark conversions, added support for Iceberg tables, the early opportunity for its new serverless offering, and the continued cross-sell of new products.

…

IBM announced Storage Ceph v7.1 with support for NVMe over TCP, a VMware vCenter plug-in, and NFS v3 for CephFS with metrics. Storage Ceph is focused on the following use cases:

- Object storage as a service

- AI/ML analytics data lake, especially for open-source analytics such as Presto, Spark and Hadoop

- Cloud-native data pipeline S3 store, especially for open-source Storm analytics

- VMware storage

- File storage as a service

…

Data integrity checker and deep content inspector Index Engines announced a 99.99 percent service level agreement (SLA) for CyberSense’s accuracy in detecting corruption due to ransomware. CyberSense’s AI engine is fed by a machine learning process that uses thousands of actual ransomware variants, sophisticated intermittent encryption variants, and tens of millions of data sets and backup data sets. Index Engines says traditional data protection methods fall short because they primarily focus on identifying obvious indicators of data compromise, such as unusual changes in compression, metadata, and thresholds, which can be easily bypassed by sophisticated ransomware.

Jim McGann, VP of Strategic Partnerships for Index Engines, said: “Our strategic partners, including Dell and Infinidat, benefit from our new SLA to provide their customers with enhanced resiliency, minimizing the impact bad actors have on their business operations.” CyberSense is currently deployed at thousands of organizations worldwide and sold through strategic partnerships with leading organizations. These include Dell PowerProtect Cyber Recovery, Infinidat InfiniSafe with Cyber Detection, and IBM Storage Sentinel.

…

The Nikkei reports that Kioxia has reversed 20 months of NAND production cuts at its Yokkaichi and Kitakami plants because the market is recovering. It reported a 10.3 billion yen profit for the first quarter of calendar 2024, after six successive quarters of losses. Banks financing Kioxia have rearranged 540 billion yen ($3.43 billion) of loans that would have become repayable this month, and set up a new 210 billion yen credit line. New Kioxia spending to increase production at Kitakami has been delayed until 2025 at the earliest.

…

Australia’s Macquarie Cloud Services, part of Macquarie Technology Group, has leveraged strategic relationships with Microsoft and Dell Technologies to launch Macquarie Flex. It says this is the first Australian hybrid system powered by Microsoft Azure Stack HCI and Dell’s APEX Cloud Platform for Azure. It will provide workload flexibility, a single management plane, 24×7 mission-critical support, and compliance across public, private and hybrid cloud environments. Macquarie Cloud Services is the first Dell Technologies partner offering Azure Stack HCI in Australia. This announcement follows its recent launch of Macquarie Guard, a turnkey SaaS offering that automates practical guardrails into Azure services.

…

NVIDIA released its MLPerf v4.0 Training results, saying it set and maintained records across all categories. Highlights include:

- Achieving new LLM training performance and scale records on industry benchmarks with 11,616 Hopper GPUs.

- Tripling training performance on GPT3-175B benchmark in just one year with near-perfect scaling.

- Increasing Hopper submission speeds by nearly 30% through new software.

It says these results reinforce its record of demonstrating performance leadership in AI training and inference in every round since the launch of the MLPerf benchmarks in 2018.

…

Omdia’s latest analysis reveals that the global cloud storage services market generated $57 billion in 2023, with Amazon Web Services (AWS) leading with a 30 percent market share. Microsoft and Google follow, while Alibaba and China Telecom are key players in China. The analysis, part of Omdia’s 2024 Storage Data Services Report, projects the market to reach $128 billion by 2028, driven by digital transformation, remote work, and IoT growth. Notably, file storage is set to grow at a 21 percent CAGR, becoming crucial for AI workloads.

Omdia forecasts robust growth for the global cloud data storage services market, projecting it to reach $128 billion by 2028, with a CAGR of 17 percent over the next five years. In 2023, the total storage capacity sold in cloud storage services amounted to 2,100 exabytes. Amazon led in terms of global storage capacity consumed, accounting for approximately 38 percent of the market. Object storage, often referred to as cloud storage, dominated the services capacity sold, making up 70 percent of the total storage services capacity.

Dennis Hahn, Omdia Principal Analyst, said: “File storage is poised to be the fastest-growing segment, with an expected CAGR of 21 percent through 2028. This growth is largely attributed to the increasing use of file storage as high-performance storage in AI workloads. Despite object storage leading in capacity, storage services revenues are more evenly distributed among object, block, and file storage. This is due to higher per-capacity service charges for block and file storage from most vendors.”
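As a quick sanity check on Omdia’s headline numbers, the snippet below computes the compound annual growth rate implied by $57 billion in 2023 rising to $128 billion in 2028, assuming five annual compounding periods. It is a back-of-the-envelope sketch, not Omdia’s methodology, and the helper names `cagr` and `project` are our own.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` periods."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value of `start` after compounding at `rate` for `years` periods."""
    return start * (1 + rate) ** years


# Omdia figures quoted above, in $ billions.
# Assumption: 2023 is the base year and 2028 the target, i.e. five compounding periods.
BASE_2023 = 57.0
TARGET_2028 = 128.0
YEARS = 5

implied = cagr(BASE_2023, TARGET_2028, YEARS)   # growth rate implied by the two endpoints
at_17 = project(BASE_2023, 0.17, YEARS)         # where a flat 17 percent CAGR would land

print(f"Implied CAGR 2023-2028: {implied:.1%}")
print(f"$57bn compounded at 17% for 5 years: ${at_17:.0f}bn")
```

Run as-is, it gives an implied rate of roughly 17.6 percent, in line with the 17 percent quoted, while compounding $57 billion at exactly 17 percent lands nearer $125 billion, which suggests the published figures are rounded.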

…

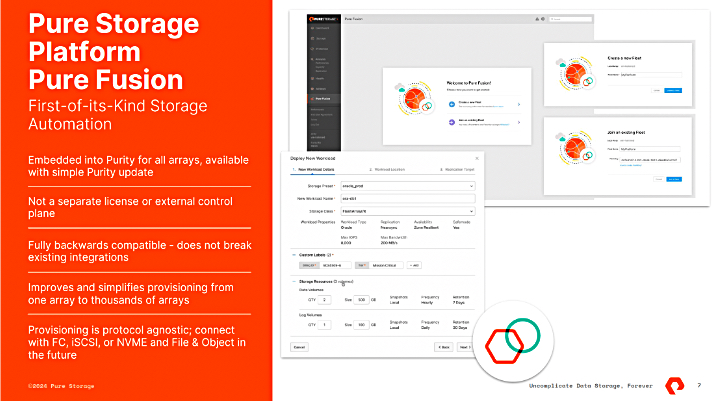

Wedbush analyst Matt Bryson suggests to subscribers that Pure Storage could announce 150TB DirectFlash Modules, its proprietary SSDs, at its Accelerate conference starting today (June 18). Then again, it might not.

…

Veritas, which is being bought by Cohesity, announced the latest Data Insight release, which is also available as a SaaS offering. Veritas Data Insight enables customers to assess and mitigate unstructured and sensitive data compliance and cyber resilience risks across multi-cloud infrastructures. Customers can consume Veritas Data Insight from the cloud as a multi-tenant, Veritas-managed SaaS application. VDS data indexing now requires up to 50% less disk space. Faster data classification focuses on relevant content, and improved indexing and targeted classification result in more comprehensive compliance. Veritas Data Insight is available as part of all three Veritas data compliance and governance service offerings, and we understand it will be part of DataCo, the portion of Veritas left behind after the Cohesity acquisition.

…

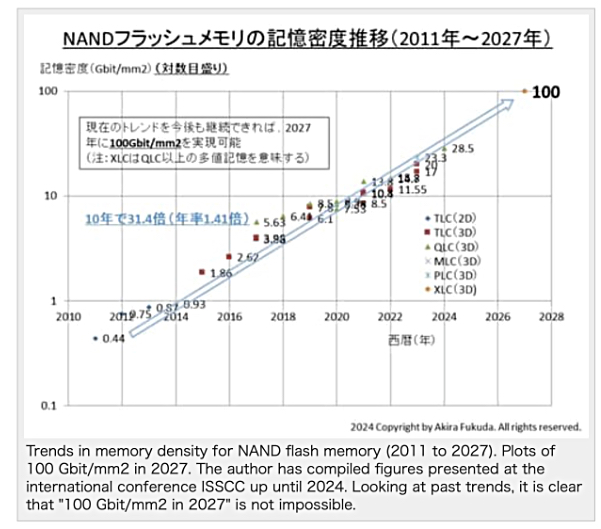

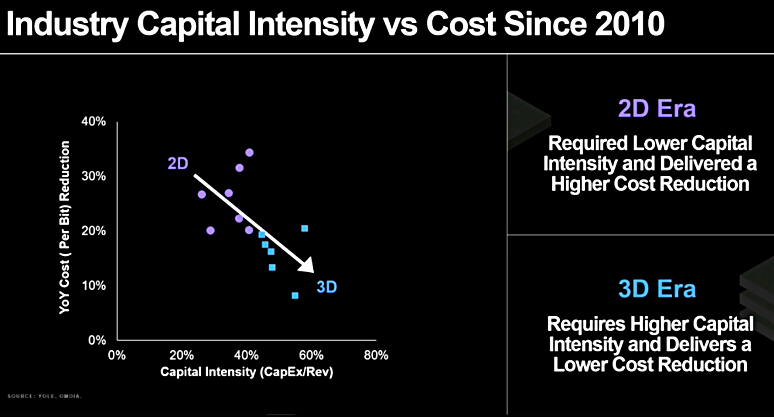

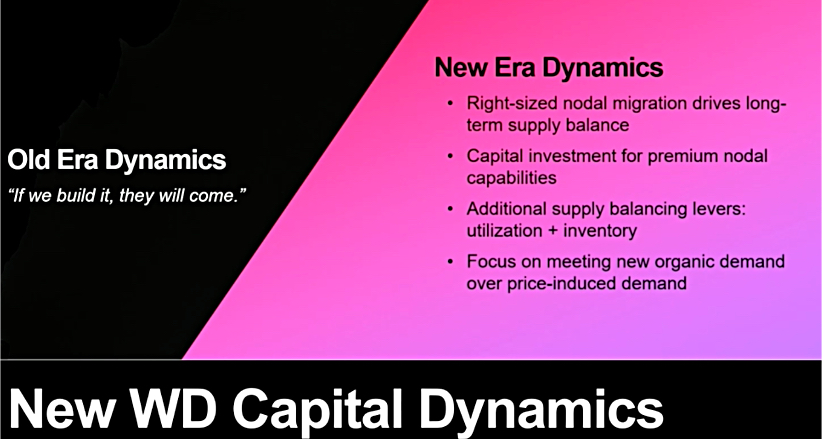

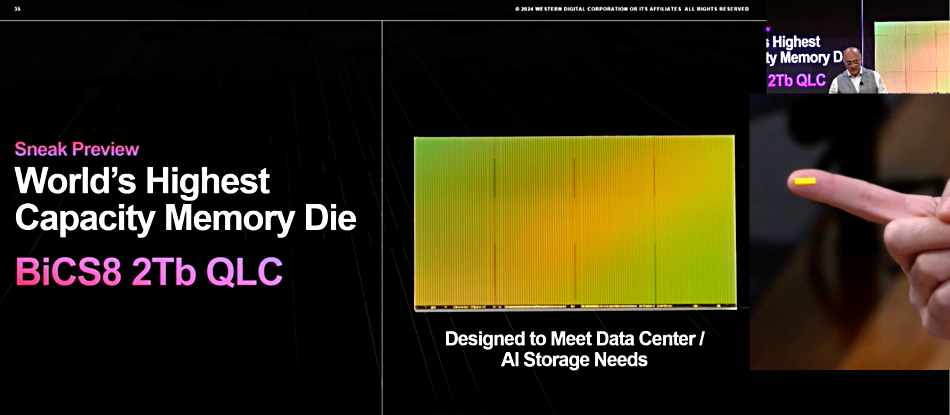

Western Digital previewed a 2Tb QLC (4 bits/cell) NAND chip at a June 10 investor conference. The die uses 218-layer BiCS8 NAND and CBA (CMOS bonded to the Array) manufacturing technology. EVP Robert Soderbery talked about the end of the NAND layer-count race, with layer count increases slowing to optimise capital deployment. He said: “We’re no longer on a hamster wheel of nodal migration.” Nodes must be long-lasting, feature-rich and future-proofed, and WD will be supplying premium nodes for premium use cases, with stronger WD-customer relationships.